keras-attention-augmented-convs

An op outside of the function building code is being passed a "Graph" tensor

Error TypeError: An op outside of the function building code is being passed a "Graph" tensor. It is possible to have Graph tensors leak out of the function building context by including a tf.init_scope in your function building code. For example, the following function will fail: @tf.function def has_init_scope(): my_constant = tf.constant(1.) with tf.init_scope(): added = my_constant * 2 The graph tensor has name: model_7/attention_augmentation2d_19/stack_6:0

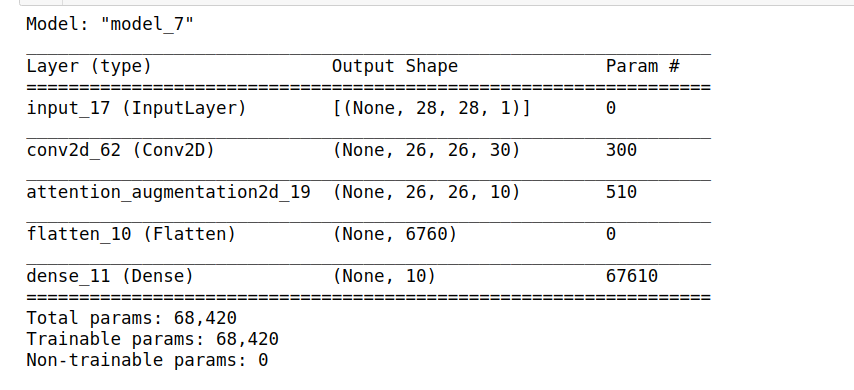

Model:

ip = Input((28, 28, 1))
depth_k = 10
depth_v = 10
z = 2 * depth_k + depth_v
x = Conv2D(z, kernel_size=(3, 3))(ip)
x = AttentionAugmentation2D(depth_k, depth_v, num_heads=2)(x)
a = Flatten()(x)
a = Dense(10, activation='softmax')(a)
model = Model(ip, a)
model.compile(loss='categorical_crossentropy', optimizer="Adam", metrics=['accuracy'])
model.fit(x_train, y_train, batch_size=32, epochs=300, verbose=1, validation_data=(x_test, y_test))

model.summary()

Adding tf.compat.v1.disable_eager_execution() before model.fit() may work for you.
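A minimal sketch of that workaround. The TensorFlow documentation says this function can only be called before any graphs, ops, or tensors have been created, so the sketch assumes it goes at the very top of the script, ahead of the model definition, and not merely just before model.fit():

```python
import tensorflow as tf

# Switch to TF1-style graph mode before any model, layer, or tensor exists.
tf.compat.v1.disable_eager_execution()

# Sanity check: eager execution should now be reported as off.
print("Eager execution enabled:", tf.executing_eagerly())
```

The model from the original post would then be built, compiled, and fitted after this call.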

I encountered this issue without using model.fit(). I replaced all K.zeros(K.stack(...)) with tf.zeros(tf.stack(...)) in the code, and it magically works 😂 ...
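For illustration, here is a rough sketch of what that replacement could look like in a rel_to_abs-style helper. The function name rel_to_abs_sketch, the input shape [B, Nh, L, 2L-1], and the body are assumptions reconstructed around the K.stack([B, Nh, L, 1]) call visible in the tracebacks; this is not the repository's exact code. A plausible reason the swap helps is that K.zeros creates its tensor inside tf.init_scope, which matches the hint in the error message, whereas tf.zeros stays in the current graph:

```python
import tensorflow as tf


def rel_to_abs_sketch(x):
    """Convert relative logits [B, Nh, L, 2L-1] to absolute logits [B, Nh, L, L].

    Hypothetical stand-in for the layer's rel_to_abs method; it uses
    tf.zeros(tf.stack(...)) where the failing code used K.zeros(K.stack(...)).
    """
    shape = tf.shape(x)
    B, Nh, L = shape[0], shape[1], shape[2]

    # Pad one zero column: [B, Nh, L, 2L-1] -> [B, Nh, L, 2L].
    col_pad = tf.zeros(tf.stack([B, Nh, L, 1]), dtype=x.dtype)
    x = tf.concat([x, col_pad], axis=3)

    # Flatten, pad L-1 zeros, and reshape so that each row is shifted by one.
    flat_x = tf.reshape(x, tf.stack([B, Nh, L * 2 * L]))
    flat_pad = tf.zeros(tf.stack([B, Nh, L - 1]), dtype=x.dtype)
    flat_x_padded = tf.concat([flat_x, flat_pad], axis=2)
    final_x = tf.reshape(flat_x_padded, tf.stack([B, Nh, L + 1, 2 * L - 1]))

    # Keep the first L rows and the last L columns.
    return final_x[:, :, :L, L - 1:]


# Trace with an unknown batch size, similar to a Keras functional model.
@tf.function(input_signature=[tf.TensorSpec([None, 2, 4, 7], tf.float32)])
def traced(x):
    return rel_to_abs_sketch(x)


out = traced(tf.zeros((3, 2, 4, 7)))
print("rel_to_abs output shape:", out.shape)
```

Because the shapes are assembled with tf.stack from tf.shape(x), the helper works when the batch dimension is unknown at trace time.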

This problem would easily disappear if you install TensorFlow 2.0.1.

@gitlabspy Thank you. Your solution worked for me too.

Any idea how to solve this error?

Epoch 1/300 32/5076 [..............................] - ETA: 16s

_FallbackException                        Traceback (most recent call last)
/usr/local/lib/python3.7/dist-packages/tensorflow_core/python/ops/gen_array_ops.py in fill(dims, value, name)
   3585         _ctx._context_handle, _ctx._thread_local_data.device_name, "Fill",
-> 3586         name, _ctx._post_execution_callbacks, dims, value)
   3587       return _result

_FallbackException: This function does not handle the case of the path where all inputs are not already EagerTensors.

During handling of the above exception, another exception occurred:

TypeError                                 Traceback (most recent call last)
37 frames
/usr/local/lib/python3.7/dist-packages/tensorflow_core/python/eager/execute.py in quick_execute(op_name, num_outputs, inputs, attrs, ctx, name)
     59     tensors = pywrap_tensorflow.TFE_Py_Execute(ctx._handle, device_name,
     60                                                op_name, inputs, attrs,
---> 61                                                num_outputs)
     62   except core._NotOkStatusException as e:
     63     if name is not None:

TypeError: An op outside of the function building code is being passed a "Graph" tensor. It is possible to have Graph tensors leak out of the function building context by including a tf.init_scope in your function building code. For example, the following function will fail: @tf.function def has_init_scope(): my_constant = tf.constant(1.) with tf.init_scope(): added = my_constant * 2 The graph tensor has name: model_1/attention_augmentation2d_3/stack_6:0

The following code does not work:

from keras.layers import Input
from keras.models import Model

ip = Input(shape=(32, 32, 3))
x = augmented_conv2d(ip, filters=20, kernel_size=(3, 3),
                     depth_k=0.2, depth_v=0.2,  # dk/v (0.2) * f_out (20) = 4
                     num_heads=4, relative_encodings=True)

model = Model(ip, x)

model.summary()

# Check if attention builds properly

x = tf.zeros((1, 32, 32, 3))

y = model(x)

print("Attention Augmented Conv out shape : ", y.shape)

File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 241, in rel_to_abs
    col_pad = K.zeros(K.stack([B, Nh, L, 1]))
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\backend.py", line 1674, in zeros
    v = tf.zeros(shape=shape, dtype=tf_dtype, name=name)

TypeError: <tf.Tensor 'attention_augmentation2d/stack_6:0' shape=(4,) dtype=int32> is out of scope and cannot be used here. Use return values, explicit Python locals or TensorFlow collections to access it. Please see https://www.tensorflow.org/guide/function#all_outputs_of_a_tffunction_must_be_return_values for more information.

<tf.Tensor 'attention_augmentation2d/stack_6:0' shape=(4,) dtype=int32> was defined here:
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 320, in <module>
    x = augmented_conv2d(ip, filters=20, kernel_size=(3, 3),
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 306, in augmented_conv2d
    attn_out = AttentionAugmentation2D(depth_k, depth_v, num_heads, relative_encodings)(qkv_conv)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\utils\traceback_utils.py", line 65, in error_handler
    return fn(*args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\engine\base_layer.py", line 1011, in call
    return self._functional_construction_call(
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\engine\base_layer.py", line 2498, in _functional_construction_call
    outputs = self._keras_tensor_symbolic_call(
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\engine\base_layer.py", line 2345, in _keras_tensor_symbolic_call
    return self._infer_output_signature(
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\engine\base_layer.py", line 2404, in _infer_output_signature
    outputs = call_fn(inputs, *args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\utils\traceback_utils.py", line 96, in error_handler
    return fn(*args, **kwargs)
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 158, in call
    if self.relative:
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\autograph\operators\control_flow.py", line 1363, in if_stmt
    _py_if_stmt(cond, body, orelse)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\autograph\operators\control_flow.py", line 1416, in _py_if_stmt
    return body() if cond else orelse()
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 159, in call
    h_rel_logits, w_rel_logits = self.relative_logits(q)
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 216, in relative_logits
    rel_logits_w = self.relative_logits_1d(q, self.key_relative_w, height, width,
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 229, in relative_logits_1d
    rel_logits = self.rel_to_abs(rel_logits)
File "d:\demo\vscode_tt7920\vscodeTensor\ISAJinanMapping\augument\attn_augconv.py", line 241, in rel_to_abs
    col_pad = K.zeros(K.stack([B, Nh, L, 1]))
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\util\traceback_utils.py", line 150, in error_handler
    return fn(*args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\util\dispatch.py", line 1176, in op_dispatch_handler
    return dispatch_target(*args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\keras\backend.py", line 4114, in stack
    return tf.stack(x, axis=axis)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\util\traceback_utils.py", line 150, in error_handler
    return fn(*args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\util\dispatch.py", line 1176, in op_dispatch_handler
    return dispatch_target(*args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\ops\array_ops.py", line 1468, in stack
    return ops.convert_to_tensor(values, name=name)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\profiler\trace.py", line 183, in wrapped
    return func(*args, **kwargs)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\framework\ops.py", line 1638, in convert_to_tensor
    ret = conversion_func(value, dtype=dtype, name=name, as_ref=as_ref)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\ops\array_ops.py", line 1591, in _autopacking_conversion_function
    return _autopacking_helper(v, dtype, name or "packed")
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\ops\array_ops.py", line 1527, in _autopacking_helper
    return gen_array_ops.pack(elems_as_tensors, name=scope)
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\ops\gen_array_ops.py", line 6569, in pack
    _, _, _op, _outputs = _op_def_library._apply_op_helper(
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\framework\op_def_library.py", line 797, in _apply_op_helper
    op = g._create_op_internal(op_type_name, inputs, dtypes=None,
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\framework\func_graph.py", line 735, in _create_op_internal
    return super(FuncGraph, self)._create_op_internal(  # pylint: disable=protected-access
File "C:\Anaconda3\envs\tf_gpu2d10B\lib\site-packages\tensorflow\python\framework\ops.py", line 3800, in _create_op_internal
    ret = Operation(

The tensor <tf.Tensor 'attention_augmentation2d/stack_6:0' shape=(4,) dtype=int32> cannot be accessed from here, because it was defined in FuncGraph(name=attention_augmentation2d_scratch_graph, id=2371477264752), which is out of scope.

Call arguments received by layer "attention_augmentation2d" (type AttentionAugmentation2D):
• inputs=tf.Tensor(shape=(None, 32, 32, 12), dtype=float32)
• kwargs={'training': 'None'}