tsai

batch_tfms not applied to valid batch?

Hi,

I've come across a strange issue with batch transforms: the after_batch transform is not applied to dls.valid. Is this intended behavior?

I've reproduced the issue with the sample code below:

from tsai.all import *

X, y, splits = get_UCR_data('LSST', split_data=False)

tfms = [None, TSClassification()]

batch_tfms = [TSSmooth(filt_len=10000)]

dls = get_ts_dls(X, y, splits=splits, tfms=tfms, batch_tfms=batch_tfms)

dls.train.show_batch()

dls.valid.show_batch()

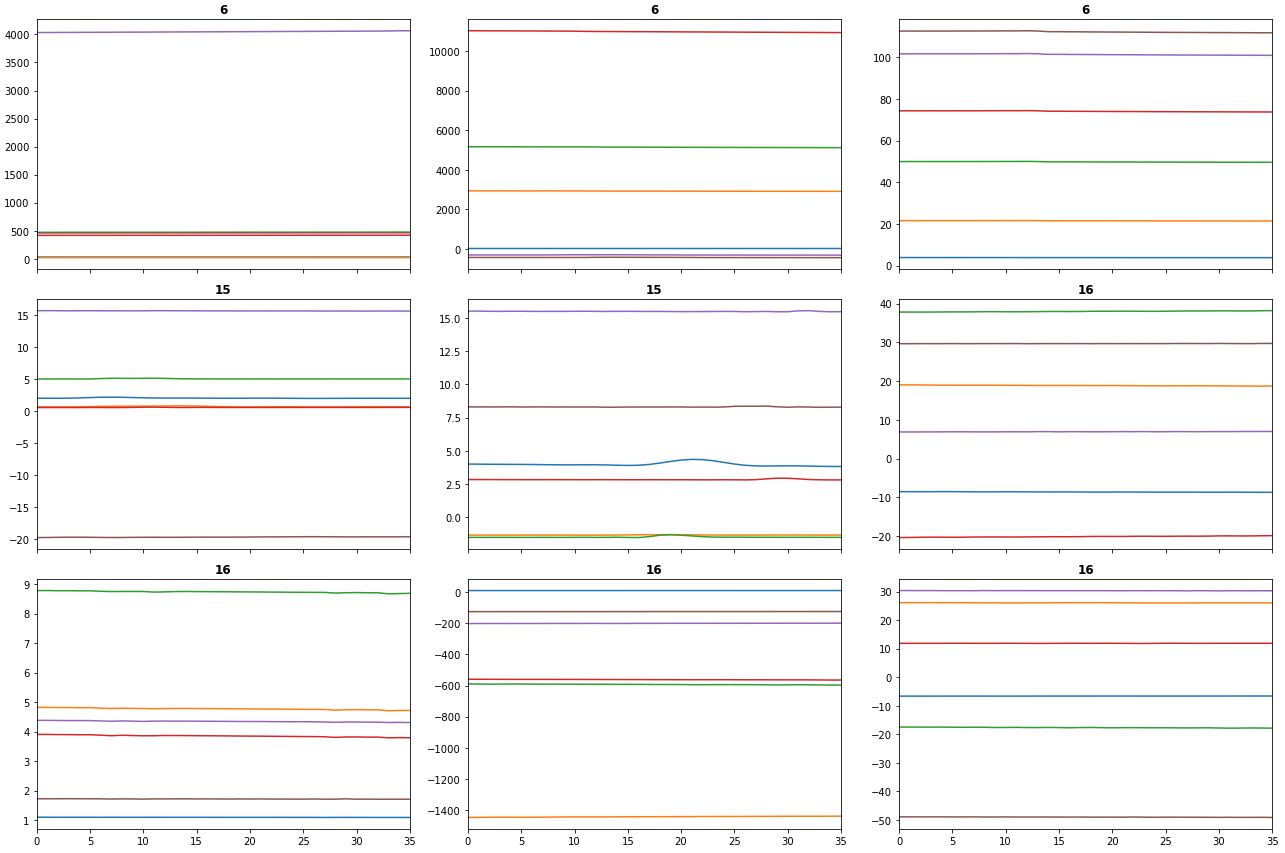

Basically I set a very long smoothing filter to destroy the data. I expect to observe flat lines as a result of this:

Output of dls.train.show_batch():

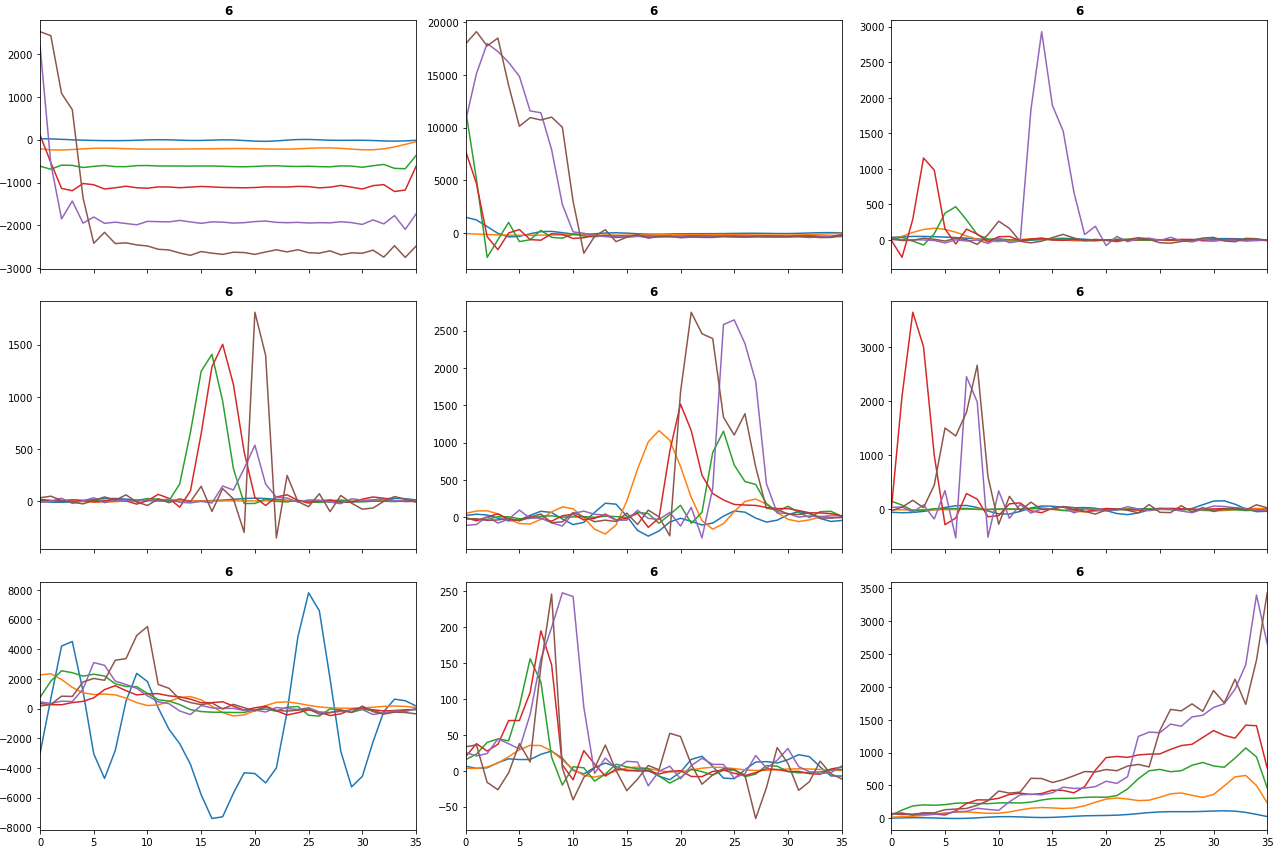

Output of dls.valid.show_batch():

As you can see, the train batch is all flat. However, the transform is not applied to the valid batch. I'd appreciate any help on this.

Thanks, Yigit

Does that happen only with augmentation transforms (those that inherit from fastai's RandTransform), or does it happen with normal preprocessing transforms too?

@vrodriguezf I didn't test this thoroughly, but using TSRollingMean() instead of TSSmooth() resolved the problem. TSRollingMean() inherits from Transform and not from RandTransform, so your guess above may well be correct.

Hi @yttuncel, Victor is right. This works as designed by fastai. Here's an extract from their documentation:

A RandTransform is only applied to the training set by default, so you have to pass split_idx=0 if you are calling it directly and not through a Datasets. That behavior can be changed by setting the attr split_idx of the transform to None.
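To make the quoted rule concrete, here is a minimal toy model of the split_idx check in plain Python. It is a sketch of the behavior described in the extract, not fastai's actual implementation: ToyTransform and ToyFlatten are hypothetical names made up for illustration, and the only facts assumed from fastai are that the training split is numbered 0 and the validation split 1.

```python
# Toy model of the split_idx gating rule quoted above.
# NOT fastai's implementation: ToyTransform and ToyFlatten are hypothetical names.

TRAIN, VALID = 0, 1  # fastai numbers the training split 0 and the validation split 1


class ToyTransform:
    """Applies `encodes` only when the caller's split matches `self.split_idx`."""

    split_idx = None  # None means "apply on every split"

    def __call__(self, x, split_idx=None):
        # Skip the transform when it is tied to one split and the caller is on another.
        if self.split_idx is not None and split_idx != self.split_idx:
            return x
        return self.encodes(x)

    def encodes(self, x):
        return x


class ToyFlatten(ToyTransform):
    """Stand-in for a random augmentation: tied to the training split by default."""

    split_idx = TRAIN

    def encodes(self, x):
        mean = sum(x) / len(x)
        return [mean] * len(x)  # replace every value with the mean, i.e. a flat line


series = [1.0, 5.0, 3.0]

flatten = ToyFlatten()
train_default = flatten(series, split_idx=TRAIN)  # transformed
valid_default = flatten(series, split_idx=VALID)  # returned unchanged

flatten.split_idx = None  # same kind of override as tsmooth.split_idx = None
valid_override = flatten(series, split_idx=VALID)  # now transformed as well

print(train_default, valid_default, valid_override)
```

With the default split_idx the validation series passes through untouched, which matches what dls.valid.show_batch() showed in the report. Once split_idx is set to None, the same transform flattens the validation series too.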

So if you want to apply TSSmooth(filt_len=10000) to the training, validation, and test sets, you should do it this way:

from tsai.all import *

X, y, splits = get_UCR_data('LSST', split_data=False)

tfms = [None, TSClassification()]

tsmooth = TSSmooth(filt_len=10000)

tsmooth.split_idx = None

batch_tfms = [tsmooth]

dls = get_ts_dls(X, y, splits=splits, tfms=tfms, batch_tfms=batch_tfms)

dls.train.show_batch()

dls.valid.show_batch()

I've tested it and it works well. Please try it if you can. If it works for you, feel free to close this issue.

Issue closed due to lack of response.