serving

serving copied to clipboard

serving copied to clipboard

tensorflow_model_server with --model_base_path pointing to a gs:// bucket getting too long to start.

System information

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): Ubuntu 16.04

- TensorFlow Serving installed from (source or binary): Docker image

- TensorFlow Serving version: tensorflow/serving:2.2.0

I'm using tensorflow/serving:2.2.0 as base for a new docker image.

Below the Dockerfile to build the new image.

The main change on this Dockerfile is that I'm copying my TF model COPY container-files / into the image.

FROM tensorflow/serving:2.2.0

# Copy files

COPY container-files /

# Only root can access the google-cloud-storage-private-key access.

RUN chown root:root /etc/gs && \

chmod 640 /etc/gs

ENV GOOGLE_APPLICATION_CREDENTIALS=/etc/gs/credential.json

# Set where models should be stored in the container

ENV MODEL_BASE_PATH=/models

# The only required piece is the model name in order to differentiate endpoints

ENV MODEL_NAME=inception

# Create models dir

RUN mkdir -p ${MODEL_BASE_PATH}/${MODEL_NAME}

# Create a script that runs the model server so we can use environment variables

# while also passing in arguments from the docker command line

RUN echo '#!/bin/bash \n\n\

tensorflow_model_server --port=8500 --rest_api_port=8080 \

--model_name=${MODEL_NAME} --model_base_path=${MODEL_BASE_PATH}/${MODEL_NAME} \

"$@"' > /usr/bin/tf_serving_entrypoint.sh \

&& chmod +x /usr/bin/tf_serving_entrypoint.sh

# Expose ports

# gRPC

# EXPOSE 8500

# REST

EXPOSE 8080

# Remove entrypoint from parent image

ENTRYPOINT []

CMD ["/usr/bin/tf_serving_entrypoint.sh"]

The new generated TF serving docker image works fine and only takes a few seconds to start, see below.

$ docker run -it -p 8080:8080 tfs-model:001-dev

2020-07-22 17:05:04.322982: I tensorflow_serving/model_servers/server.cc:86] Building single TensorFlow model file config: model_name: inception model_base_path: /models/inception

2020-07-22 17:05:04.323206: I tensorflow_serving/model_servers/server_core.cc:464] Adding/updating models.

2020-07-22 17:05:04.323220: I tensorflow_serving/model_servers/server_core.cc:575] (Re-)adding model: inception

2020-07-22 17:05:04.424089: I tensorflow_serving/core/basic_manager.cc:739] Successfully reserved resources to load servable {name: inception version: 1}

2020-07-22 17:05:04.424164: I tensorflow_serving/core/loader_harness.cc:66] Approving load for servable version {name: inception version: 1}

2020-07-22 17:05:04.424196: I tensorflow_serving/core/loader_harness.cc:74] Loading servable version {name: inception version: 1}

2020-07-22 17:05:04.424295: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:31] Reading SavedModel from: /models/inception/1

2020-07-22 17:05:04.594622: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:54] Reading meta graph with tags { serve }

2020-07-22 17:05:04.594652: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:295] Reading SavedModel debug info (if present) from: /models/inception/1

2020-07-22 17:05:04.594801: I external/org_tensorflow/tensorflow/core/platform/cpu_feature_guard.cc:143] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2020-07-22 17:05:05.006016: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:234] Restoring SavedModel bundle.

2020-07-22 17:05:06.465954: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:183] Running initialization op on SavedModel bundle at path: /models/inception/1

2020-07-22 17:05:06.952038: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:364] SavedModel load for tags { serve }; Status: success: OK. Took 2527751 microseconds.

2020-07-22 17:05:07.040463: I tensorflow_serving/servables/tensorflow/saved_model_warmup.cc:105] No warmup data file found at /models/inception/1/assets.extra/tf_serving_warmup_requests

2020-07-22 17:05:07.042644: I tensorflow_serving/core/loader_harness.cc:87] Successfully loaded servable version {name: inception version: 1}

2020-07-22 17:05:07.044539: I tensorflow_serving/model_servers/server.cc:355] Running gRPC ModelServer at 0.0.0.0:8500 ...

[warn] getaddrinfo: address family for nodename not supported

2020-07-22 17:05:07.045586: I tensorflow_serving/model_servers/server.cc:375] Exporting HTTP/REST API at:localhost:8080 ...

[evhttp_server.cc : 238] NET_LOG: Entering the event loop ...

But I need to have my models in a Google Cloud Storage bucket.

Then in the Dockerfile above, I replaced the ENV MODEL_BASE_PATH=/models by ENV MODEL_BASE_PATH=gs://my_bucket/model to allow the TF serving grab the models directly from the google storage.

The new docker image, using gs:// also works fine after the start, but it is taking around 20min to be ready.

$ docker run -it -p 8080:8080 tfs-model:001-dev

2020-07-22 16:06:42.998266: I tensorflow_serving/model_servers/server.cc:86] Building single TensorFlow model file config: model_name: inception model_base_path: gs://my_bucket/model/inception

2020-07-22 16:06:42.998465: I tensorflow_serving/model_servers/server_core.cc:464] Adding/updating models.

2020-07-22 16:06:42.998477: I tensorflow_serving/model_servers/server_core.cc:575] (Re-)adding model: inception

2020-07-22 16:06:51.742294: I tensorflow_serving/core/basic_manager.cc:739] Successfully reserved resources to load servable {name: inception version: 1}

2020-07-22 16:06:51.742359: I tensorflow_serving/core/loader_harness.cc:66] Approving load for servable version {name: inception version: 1}

2020-07-22 16:06:51.742396: I tensorflow_serving/core/loader_harness.cc:74] Loading servable version {name: inception version: 1}

2020-07-22 16:06:53.834245: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:31] Reading SavedModel from: gs://my_bucket/model/inception/1

2020-07-22 16:06:55.691746: I external/org_tensorflow/tensorflow/cc/saved_model/reader.cc:54] Reading meta graph with tags { serve }

2020-07-22 16:06:55.691830: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:295] Reading SavedModel debug info (if present) from: gs://my_bucket/model/inception/1

2020-07-22 16:06:56.379065: I external/org_tensorflow/tensorflow/core/platform/cpu_feature_guard.cc:143] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA

2020-07-22 16:06:56.834227: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:234] Restoring SavedModel bundle.

2020-07-22 16:23:30.737994: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:183] Running initialization op on SavedModel bundle at path: gs://my_bucket/model/inception/1

2020-07-22 16:23:31.237985: I external/org_tensorflow/tensorflow/cc/saved_model/loader.cc:364] SavedModel load for tags { serve }; Status: success: OK. Took 997403750 microseconds.

2020-07-22 16:23:32.011964: I tensorflow_serving/servables/tensorflow/saved_model_warmup.cc:105] No warmup data file found at gs://my_bucket/model/inception/1/assets.extra/tf_serving_warmup_requests

2020-07-22 16:23:38.855881: I tensorflow_serving/core/loader_harness.cc:87] Successfully loaded servable version {name: inception version: 1}

2020-07-22 16:23:38.862442: I tensorflow_serving/model_servers/server.cc:355] Running gRPC ModelServer at 0.0.0.0:8500 ...

[warn] getaddrinfo: address family for nodename not supported

[evhttp_server.cc : 238] NET_LOG: Entering the event loop ...

2020-07-22 16:23:38.865961: I tensorflow_serving/model_servers/server.cc:375] Exporting HTTP/REST API at:localhost:8080 ...

The model's files stored in the gs://.

-rw-rw-r-- 1 kleysonr kleysonr 9695088 Jul 22 10:57 model/inception/1/saved_model.pb

-rw-rw-r-- 1 kleysonr kleysonr 214767 Jul 22 10:59 model/inception/1/variables/variables.data-00000-of-00002

-rw-rw-r-- 1 kleysonr kleysonr 261431716 Jul 22 10:59 model/inception/1/variables/variables.data-00001-of-00002

-rw-rw-r-- 1 kleysonr kleysonr 51348 Jul 22 10:59 model/inception/1/variables/variables.index

Some of you guys might say that it could be some local network issue and/or the time needed to download the files to my local computer. But I created a new Google Cloud Run instance using the same docker image and I got the same problem.

@kleysonr, Sorry for the delayed response. This is interesting. Can you please let us know where is the Tensorflow Model Server running.

@rmothukuru I'm not sure that I understood very well your question, but I've created a docker image and I ran this docker image in both my local computer (running ubuntu 16.04) and also as an instance in the Google Cloud Run.

To investigate model loading time within model server binary, metrics in https://github.com/tensorflow/tensorflow/blob/23ddb02643654685f839e17a2ce72916775e5fe5/tensorflow/cc/saved_model/loader.cc#L43 would give insight.

To further investigate the latency between docker and gs://, folks in docker forum or google cloud storage may have more insight about how to get the instrumentation. Please let us know if you have follow-up questions!

I'm experiencing the same issue with startup times when using the TF Serving gpu pod on kubernetes, except I'm loading the models from an s3:// URL instead of a gs:// url

To investigate model loading time within model server binary, metrics in https://github.com/tensorflow/tensorflow/blob/23ddb02643654685f839e17a2ce72916775e5fe5/tensorflow/cc/saved_model/loader.cc#L43 would give insight.

To further investigate the latency between docker and gs://, folks in docker forum or google cloud storage may have more insight about how to get the instrumentation. Please let us know if you have follow-up questions!

As there is another mention regarding the same issue with s3:// , looks like be some problem with TFS instead of third-party softwares.

IIUC both {gs, docker} and {s3, kubernetes} is about TFS running in a container so similar setups from this perspective.

I think the advice of further breaking down e2e latency into 1) within TFS loading latency (metric https://github.com/tensorflow/tensorflow/blob/23ddb02643654685f839e17a2ce72916775e5fe5/tensorflow/cc/saved_model/loader.cc#L43) and metrics for the rest part apply in general here.

How about comparing numbers of TFS loading metrics with e2e load time to proceed?

@kleysonr, Can you please respond to @minglotus-6's comment. Thanks!

Automatically closing due to lack of recent activity. Please update the issue when new information becomes available, and we will reopen the issue. Thanks!

@rmothukuru @minglotus-6 comment is beyond my knowledge. I don't know how to proceed.

But looks like there are some 'bug' in the TF when dealing with remote storages.

Best Regards.

To confirm about the question, TFS model server docker image could load local models pretty fast, but it takes long to read from cloud file storage (assuming the saved model file, so bytes read and graph initialization time are approx. the same).

So it'd make sense to get some insight (preferably measurement or metrics) about I/O between docker and remote file system when model is loading.

But looks like there are some 'bug' in the TF when dealing with remote storages.

That's the follow-up step to identify the bottleneck and thereby the suggestion to get insight and measure. In this step, we'd surely be willingly improve (e.g., by using a file API that works better with remote storages) if there are suggestions from docker or remote storages (e.g., what is this better API could be different sometimes for a specific remote storages, a general API is ideal though).

@kleysonr, Can you please respond to @minglotus-6's comment. Thanks!

@rmothukuru Sorry, it's not clear to me what is supposed I answer.

I'm just a TFS user. I don't know how to dig deep in the code and measurements to get insights about specific loading times.

@kleysonr,

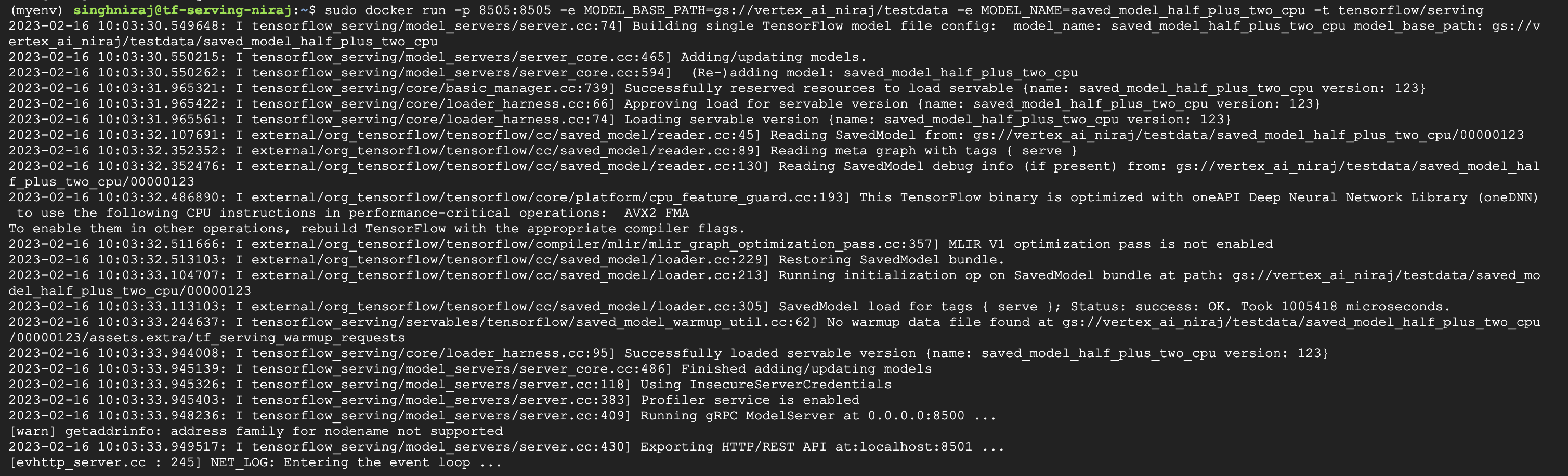

I am unable to replicate this issue. I tried to load a test model from cloud storage(gs://) bucket and the model loaded instantly without any delay. Please find attached the screenshot of docker run command.

Please try with the latest tensorflow serving image and let us know if you face any issues. Also, if possible please share us the stored model gsutil URL, so we can try to replicate the issue on our end. Thank you!

This issue was closed due to lack of activity after being marked stale for past 14 days.