recommenders

Creating a frozen graph from a TF Recommenders model to serve it on a server

Hi!

I'm building an item-to-item recommender system that uses item numbers and item titles as features. One tower of my model handles "item A" and the other handles "item B." I have had no issues creating this model or serving predictions when I run the code in Python. Here's a sample of the code:

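```python
import tensorflow as tf

# Map raw item IDs to integer indices (vocabulary learned from the training data).
item_a_id_lookup = tf.keras.layers.StringLookup()
item_a_id_lookup.adapt(purchases_train_ds.map(lambda x: x["item_A"]))

# Turn item titles into integer token sequences.
item_a_title_text = tf.keras.layers.TextVectorization()
item_a_title_text.adapt(purchases_train_ds.map(lambda x: x["title_A"]))
```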
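For context, after `adapt` a `StringLookup` maps each string to an integer index through a vocabulary held in an internal lookup table, with index 0 reserved for out-of-vocabulary values by default. A minimal sketch on a hypothetical three-element tensor standing in for `purchases_train_ds`:

```python
import tensorflow as tf

# Hypothetical toy data standing in for purchases_train_ds.map(lambda x: x["item_A"]).
item_ids = tf.constant(["item-7", "item-3", "item-7"])

lookup = tf.keras.layers.StringLookup()
lookup.adapt(item_ids)

# The vocabulary starts with the "[UNK]" OOV token, then tokens by descending frequency.
vocab = lookup.get_vocabulary()
print(vocab)  # ['[UNK]', 'item-7', 'item-3']

# Known strings get their vocabulary index; unseen strings map to 0.
indices = lookup(tf.constant(["item-3", "item-7", "item-999"]))
print(indices.numpy().tolist())  # [2, 1, 0]
print(lookup.vocabulary_size())  # 3
```

The same idea applies to `TextVectorization`, which additionally splits each title into tokens and reserves index 0 for padding and 1 for OOV tokens.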

The tower corresponding to item A is given below:

```python
class QueryModel(tf.keras.Model):
    """Item-A tower: item-ID embedding concatenated with an averaged title embedding."""

    def __init__(self):
        super().__init__()

        # Item ID -> 32-dim embedding.
        self.item_a_embedding = tf.keras.Sequential([
            item_a_id_lookup,
            tf.keras.layers.Embedding(item_a_id_lookup.vocabulary_size(), 32),
        ])

        # Title -> token IDs -> 32-dim token embeddings -> mean over non-padding tokens.
        self.item_a_title = tf.keras.Sequential([
            item_a_title_text,
            tf.keras.layers.Embedding(item_a_title_text.vocabulary_size(), 32, mask_zero=True),
            tf.keras.layers.GlobalAveragePooling1D(),
        ])

    def call(self, inputs):
        if not isinstance(inputs, dict):
            # Export path: a 1-D string tensor [item_id, title] for a single item.
            return tf.concat([
                self.item_a_embedding(tf.convert_to_tensor([inputs[0]])),
                self.item_a_title(tf.convert_to_tensor([inputs[1]])),
            ], axis=1)

        # Training path: batched features keyed by name.
        return tf.concat([
            self.item_a_embedding(inputs["item_A"]),
            self.item_a_title(inputs["title_A"]),
        ], axis=1)
```
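Each branch of this tower yields a 32-dimensional vector, so concatenating them along axis 1 gives a 64-dimensional item embedding. A minimal sketch of that shape arithmetic, assuming hypothetical fixed vocabularies in place of the adapted layers and calling the layers directly (rather than through `tf.keras.Sequential`) so each intermediate shape is visible:

```python
import tensorflow as tf

EMBED_DIM = 32  # per-branch embedding size, as in QueryModel

# Hypothetical fixed vocabularies; in the post these layers are fitted with adapt().
id_lookup = tf.keras.layers.StringLookup(vocabulary=["item-1", "item-2"])
title_text = tf.keras.layers.TextVectorization(vocabulary=["red", "blue", "shoe"])

id_embedding = tf.keras.layers.Embedding(id_lookup.vocabulary_size(), EMBED_DIM)
title_embedding = tf.keras.layers.Embedding(
    title_text.vocabulary_size(), EMBED_DIM, mask_zero=True
)
pool = tf.keras.layers.GlobalAveragePooling1D()


def item_a_tower(item_ids, titles):
    """Hypothetical helper mirroring the item-A tower."""
    id_vectors = id_embedding(id_lookup(item_ids))      # (batch, 32)
    tokens = title_text(titles)                          # (batch, max_tokens)
    token_vectors = title_embedding(tokens)              # (batch, max_tokens, 32)
    # Average only over real tokens; padding id 0 is masked out.
    title_vectors = pool(token_vectors, mask=tf.not_equal(tokens, 0))  # (batch, 32)
    return tf.concat([id_vectors, title_vectors], axis=1)  # (batch, 64)


embeddings = item_a_tower(
    tf.constant(["item-1", "item-unknown"]),
    tf.constant(["red shoe", "blue"]),
)
print(embeddings.shape)  # (2, 64)
```

With a single item, as in the export path of `QueryModel`, the batch dimension is 1 and the result is the 1x64 embedding.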

Our goal is to export this model to a server so we can start testing these recommendations on a website. We are using RedisAI for this. One of RedisAI's requirements is that the model be exported as a frozen graph, which I have done with the following code:

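```python
import tensorflow as tf
from tensorflow.python.framework.convert_to_constants import convert_variables_to_constants_v2

# Wrap the item-A tower; the input is a length-2 string tensor [item_id, title].
full_model = tf.function(lambda x: model.item_A_model(x))
full_model = full_model.get_concrete_function(tf.TensorSpec((2,), dtype=tf.string))

# Fold the model's variables into constants.
frozen_full_model = convert_variables_to_constants_v2(full_model)

frozen_full_model.graph.as_graph_def()

# Serialize the frozen graph as a binary .pb file.
tf.io.write_graph(graph_or_graph_def=frozen_full_model.graph, logdir='./pdp', name='pdp_model.pb', as_text=False)
```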
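Before loading the file into RedisAI, it may help to confirm that the `.pb` written by `tf.io.write_graph` parses back into a `GraphDef` and to list its node types. A minimal sketch on a hypothetical variable-only function (no lookup layers), written to a temporary directory instead of `./pdp`:

```python
import os
import tempfile

import tensorflow as tf
from tensorflow.python.framework.convert_to_constants import (
    convert_variables_to_constants_v2,
)

# Hypothetical stand-in for a trained tower: a single weight matrix.
weights = tf.Variable(tf.ones([3, 2]))


@tf.function(input_signature=[tf.TensorSpec([None, 3], tf.float32)])
def project(x):
    return tf.matmul(x, weights)


frozen = convert_variables_to_constants_v2(project.get_concrete_function())

logdir = tempfile.mkdtemp()
tf.io.write_graph(frozen.graph, logdir, "model.pb", as_text=False)

# Parse the binary file back into a GraphDef, as a serving runtime would.
graph_def = tf.compat.v1.GraphDef()
with open(os.path.join(logdir, "model.pb"), "rb") as f:
    graph_def.ParseFromString(f.read())

# Map node name -> op type; the variable should now appear as a Const node.
op_types = {node.name: node.op for node in graph_def.node}
print(op_types)
```

In this variable-only case the only remaining `Placeholder` should be the input `x`, which is the baseline to compare against when lookup layers are added.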

Note that this only tests one tower of the model (the item-A tower). We simply want a graph that takes an item number and an item title and returns the 1x64-dimensional embedding for that item. This issue is not about the ScaNN recommendations yet.

When we print out the structure of this graph using this code:

frozen_full_model.graph.as_graph_def()

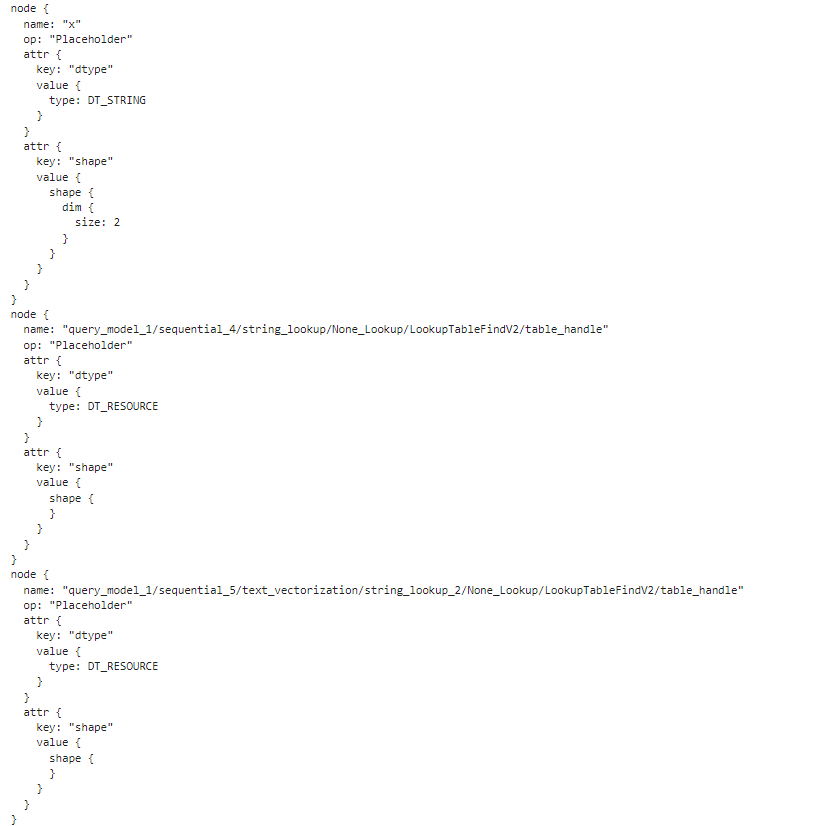

we get the following output for the first 3 nodes of our graph:

It makes sense that the first node (named "x") is a Placeholder, because we need to feed in the input. However, the nodes corresponding to the StringLookup and TextVectorization layers are Placeholders as well, and we're not sure why. We'd expect them to be "Const". Is there something extra we have to do to export StringLookup and TextVectorization layers as part of a frozen graph?
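A likely explanation, offered tentatively: `StringLookup` and `TextVectorization` keep their vocabularies in hash-table resources rather than in `tf.Variable`s. `convert_variables_to_constants_v2` folds captured variables into `Const` nodes but appears to skip other resource-typed captures, so each table handle stays behind as a `Placeholder` with dtype `resource`. The sketch below tries to reproduce this with a `tf.lookup.StaticHashTable` used directly as a stand-in for the Keras layers (an assumption; the table type the layers use internally may differ between TensorFlow versions):

```python
import tensorflow as tf
from tensorflow.python.framework.convert_to_constants import (
    convert_variables_to_constants_v2,
)

# Stand-in for an adapted StringLookup: a hash-table resource mapping strings to ids.
table = tf.lookup.StaticHashTable(
    tf.lookup.KeyValueTensorInitializer(
        keys=tf.constant(["item-1", "item-2"]),
        values=tf.constant([1, 2], dtype=tf.int64),
    ),
    default_value=tf.constant(0, dtype=tf.int64),
)
# Stand-in for an Embedding layer's weights: an ordinary tf.Variable.
embedding_table = tf.Variable(tf.random.normal([3, 4]))


@tf.function(input_signature=[tf.TensorSpec([None], tf.string)])
def embed(ids):
    return tf.gather(embedding_table, table.lookup(ids))


frozen = convert_variables_to_constants_v2(embed.get_concrete_function())
graph_def = frozen.graph.as_graph_def()

# Collect every remaining Placeholder and its dtype. Expect the string input "ids"
# plus one resource placeholder for the table; the variable should now be a Const.
placeholders = {
    node.name: tf.dtypes.as_dtype(node.attr["dtype"].type).name
    for node in graph_def.node
    if node.op == "Placeholder"
}
print(placeholders)
```

If this is what is happening in the exported tower, the frozen graph cannot run on its own: whatever loads it would also need to create and initialize those tables, which is worth checking against what RedisAI's TensorFlow backend supports.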

Thanks for the help!