Research: Neuron Mechanics

🔬 This is an experiment in doing radically open research. I plan to post all my work on this openly as I do it, tracking it in this issue. I'd love for people to comment, or better yet collaborate! See more.

Please be respectful of the fact that this is unpublished research and that people involved in this are putting themselves in an unusually vulnerable position. Please treat it as you would unpublished work described in a seminar or by a colleague.

Description

Decomposed feature visualization is a new technique for exploring the mechanics of a feature. Instead of just visualizing the feature by creating a new input, one optimizes a previous hidden layer, then visualizes each of those components separately.

For example, it reveals that a car-detecting neuron at layer mixed4c functions by doing a 5x5 convolution, looking for windows in the top half and wheels in the bottom:

When applied to adjacent layers, decomposed feature visualization can be thought of as visualizing the weights or the gradient between two layers. It can also be thought of as a way to explain attribution. Look at the draft article!
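One way to read "visualizing the weights" for adjacent layers is to slice a neuron's convolution kernel by spatial position: each position holds a vector over the previous layer's channels, and that vector is a direction that could be handed to a feature visualization objective. Below is a minimal NumPy sketch of that slicing step only. It assumes a kernel laid out as `[height, width, in_channels, out_channels]`; the function name `spatial_directions` and the random kernel are hypothetical stand-ins for illustration, not part of lucid or the notebook.

```python
import numpy as np

def spatial_directions(kernel, unit):
    """Split one output unit's kernel into per-position channel directions.

    kernel: array of shape [kh, kw, c_in, c_out] (assumed layout).
    unit:   index of the output channel to decompose.
    Returns unit-length directions [kh, kw, c_in] and their norms [kh, kw].
    """
    w = kernel[:, :, :, unit]               # weights feeding this unit
    norms = np.linalg.norm(w, axis=-1)      # how strongly each position matters
    # Guard against division by zero at positions with all-zero weights.
    safe = np.where(norms > 0, norms, 1.0)
    directions = w / safe[..., None]
    return directions, norms

# Hypothetical 5x5 kernel with 16 input channels and 8 output units.
rng = np.random.default_rng(0)
kernel = rng.normal(size=(5, 5, 16, 8))
directions, norms = spatial_directions(kernel, unit=3)
print(directions.shape, norms.shape)
```

Each of the 25 direction vectors could then be visualized separately and arranged in a 5x5 grid, with the norms indicating which positions contribute most.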

I think this technique is exciting because it seems to significantly advance our mechanistic understanding of how convolutional networks operate. This seems critical to our ability to eventually audit models, or gain deep scientific understanding.

Links

- Draft article (password: "vis")

- Please don't share the link to the draft anywhere high profile. We'd like to save attention for a polished final version.

- colab notebook

Next Steps

- Fiddle with hyperparameters / objectives for visualization.

- Try using neuron instead of channel objective.

- Try using cossim objectives? (See issue on new feature vis objectives)

- Scale up: visualize many neurons this way, potentially across many models.

- Find interesting examples of meaningful units and how they are implemented by the network.

- To start, pick interesting neurons from the feature vis appendix and explore those. Note this technique doesn't work well for 1x1 conv neurons.

- Ideally, find interesting trends in how neural nets do things.

- Explore using this to explain attribution (see demo in article).

- Explore using this to directly visualize hidden layer attribution. Instead of a saliency map with scalars, apply feature vis to each vector.

- Writing: There's a lot of work to be done on the draft article.
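As a rough illustration of the cosine-similarity objective mentioned in the list above: a plain dot product rewards any large activation with some overlap with the target direction, while multiplying by a power of the cosine similarity also rewards pointing in the same direction. This is a sketch of one possible form, not a confirmed lucid API; the function name `dot_cossim` and the exponent `power` are assumptions.

```python
import numpy as np

def dot_cossim(acts, direction, power=2.0, eps=1e-8):
    """Dot product scaled by cosine similarity raised to `power` (assumed form)."""
    dot = float(acts @ direction)
    cos = dot / (np.linalg.norm(acts) * np.linalg.norm(direction) + eps)
    cos = max(cos, 0.0)  # ignore activations pointing away from the direction
    return dot * cos ** power

direction = np.array([1.0, 0.0])
aligned = np.array([2.0, 0.0])   # same direction as the target
off_axis = np.array([2.0, 2.0])  # same dot product, but 45 degrees off

score_aligned = dot_cossim(aligned, direction)
score_off_axis = dot_cossim(off_axis, direction)
print(score_aligned, score_off_axis)
```

Both inputs have the same dot product with the target, but the off-axis one scores lower, which is the behavior one would want from such an objective.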

Question: How can we decompose a 1x1 feature? Or a larger feature where a spatial location selects for multiple things? Or a poly-semantic feature?

Idea: Do attribution to the previous layer (i.e., multiply by weights but don't add up) and collect those vectors. Now you can do some really interesting things:

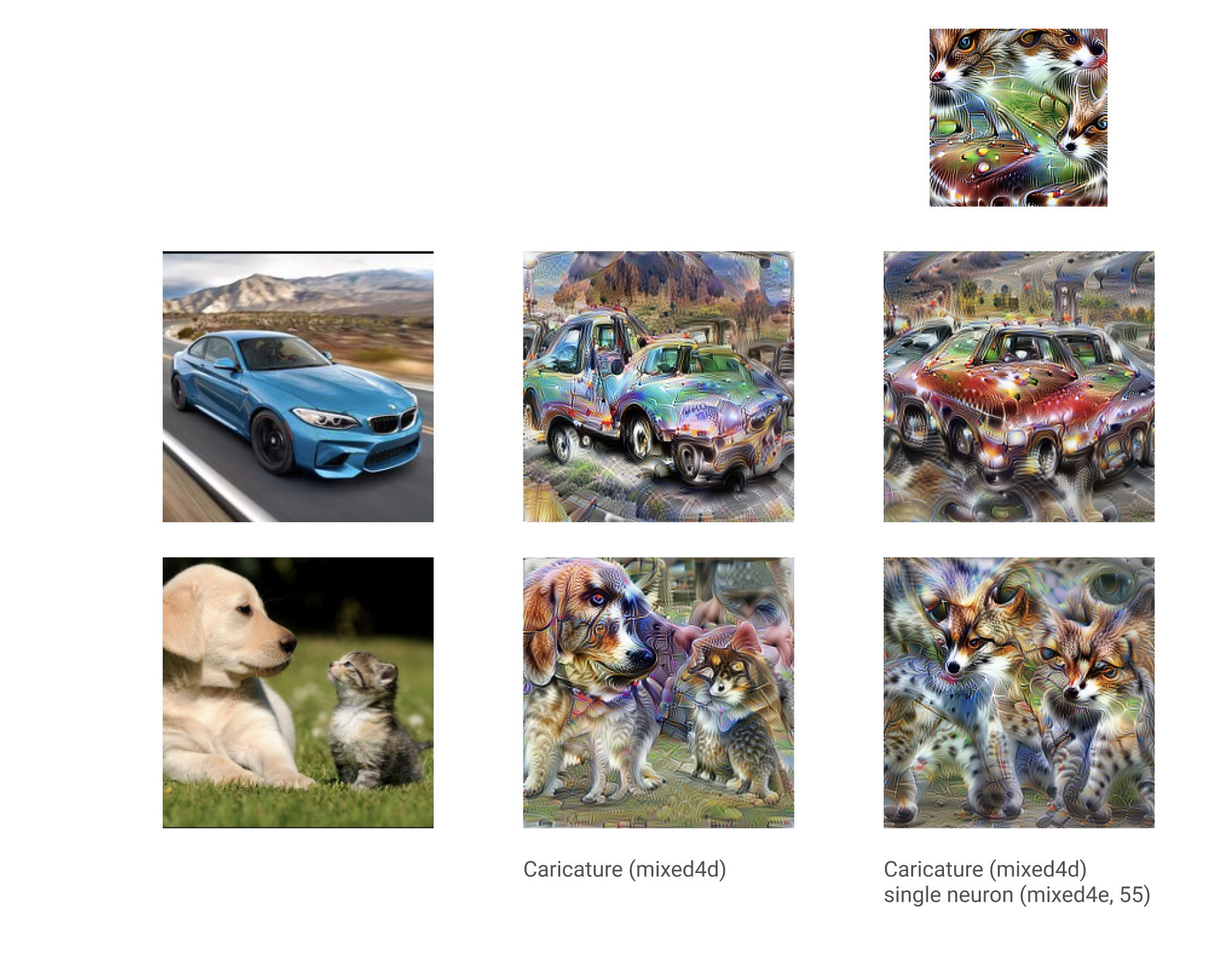

(1) Use NMF or clustering to decompose the neuron into multiple different components.

(2) Run t-SNE and then use feature vis to get a sense of the space of things that cause the neuron to activate.

(3) Measure how multi-modal a neuron is?

(4) Create a single-neuron version of a caricature. We can't normally caricature using a single neuron in any interesting sense, because there's no angle when you're only thinking about a single one. But you can think of the neuron's weights as creating a stretched version of the previous layer, emphasizing some features, and then use that as a lens to create a caricature.

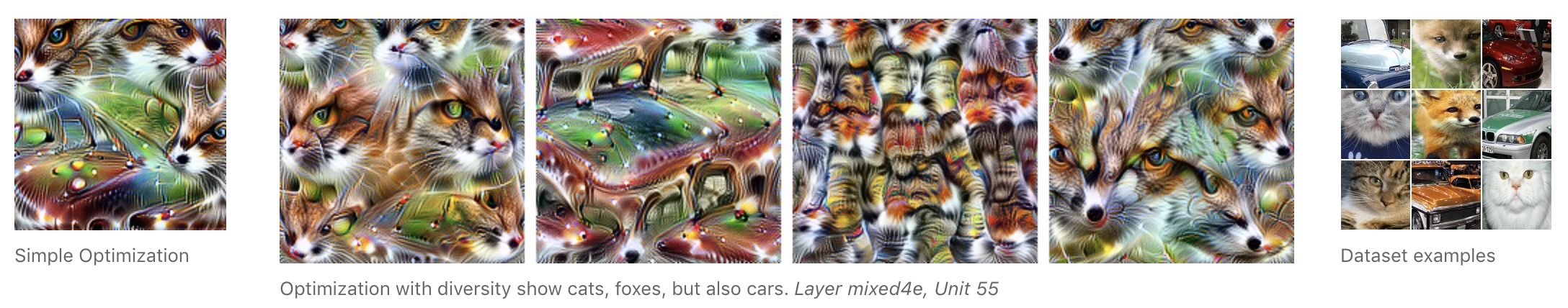
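The "multiply by weights but don't add up" step above can be sketched in a few lines. The example assumes the simplest case, a 1x1 conv neuron with one weight per previous-layer channel; `contribution_vectors` and the toy arrays are hypothetical names and data chosen for illustration.

```python
import numpy as np

def contribution_vectors(prev_acts, weights):
    """Per-channel contributions to a neuron, before summing.

    prev_acts: [n, c] previous-layer activations at n positions or examples.
    weights:   [c] the neuron's weights over previous-layer channels.
    Returns [n, c]; summing over the last axis gives the pre-activation.
    """
    return prev_acts * weights[None, :]

rng = np.random.default_rng(0)
prev_acts = np.maximum(rng.normal(size=(10, 4)), 0.0)  # ReLU-like activations
weights = np.array([0.5, -1.0, 2.0, 0.0])

contribs = contribution_vectors(prev_acts, weights)
pre_activation = prev_acts @ weights

# Adding the contributions back up recovers the usual pre-activation.
print(np.allclose(contribs.sum(axis=1), pre_activation))
```

The rows of `contribs` are the vectors that would be collected across many inputs and then passed to NMF, clustering, or t-SNE.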

OK, let's consider our friend mixed4e, 55 from feature visualization's diversity section. It is a "poly-semantic" neuron, detecting both fronts of cars and cats:

(Re:1) Can this general approach separate out poly-semantic components?

Using k-means and filtering for activating neurons, I had some pretty good success:
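A minimal sketch of what "k-means and filtering for activating neurons" could look like, using synthetic contribution vectors with two planted modes in place of real data. Everything here is an assumption for illustration: the tiny `kmeans` helper (Lloyd's algorithm with farthest-point initialization), the filter `total > 0`, and the toy data. The actual notebook may do this differently.

```python
import numpy as np

def kmeans(x, k, iters=20):
    """Tiny Lloyd's algorithm with deterministic farthest-point initialization."""
    centroids = [x[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(x - c, axis=1) for c in centroids], axis=0)
        centroids.append(x[np.argmax(d)])
    centroids = np.stack(centroids)
    for _ in range(iters):
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=-1)
        labels = np.argmin(dists, axis=1)
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = x[labels == j].mean(axis=0)
    # Recompute labels so they match the final centroids.
    dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=-1)
    return np.argmin(dists, axis=1), centroids

rng = np.random.default_rng(0)
# Synthetic contribution vectors over 6 previous-layer channels.
mode_a = np.abs(rng.normal(size=(40, 6))) * 0.05
mode_a[:, :2] += 1.0                                    # driven by channels 0-1
mode_b = np.abs(rng.normal(size=(40, 6))) * 0.05
mode_b[:, 4:] += 1.0                                    # driven by channels 4-5
inactive = -np.abs(rng.normal(size=(40, 6))) * 0.05     # neuron does not fire
contribs = np.concatenate([mode_a, mode_b, inactive])

# Keep only cases where the summed contributions are positive (neuron active).
total = contribs.sum(axis=1)
active = contribs[total > 0]

labels, centroids = kmeans(active, k=2)
top_channels = [sorted(np.argsort(c)[-2:].tolist()) for c in centroids]
print(top_channels)
```

Each centroid is a direction in the previous layer's channel space, so it could be visualized with feature vis to see what that component of the neuron responds to.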

(Re:4) Neuron specific caricatures seem to work.

New colab notebook for these ideas.