java

java copied to clipboard

java copied to clipboard

`asset_path_initializer` not fed when saving/reloading model

---- This is an issue posted by @maziyarpanahi ----

Hi @karllessard

We are experiencing the same issue however in the 0.4.0 release (after this fix https://github.com/tensorflow/java/pull/393 was included). Previously in 0.2.x and 0.3.x, we were able to load a model, save it on disk, and load it back (both models from TF Hub and HuggingFace as SavedModel).

However, now in 0.4.0, we can load any SavedModel from anywhere, but once it's saved on disk we face this similar error when trying to load it back (only TF Hub models):

[ERROR] org.tensorflow.exceptions.TFInvalidArgumentException: You must feed a value for placeholder tensor 'asset_path_initializer' with dtype string [[node asset_path_initializer]]

- Models coming from TF Hub have an extra

asset_path_initializerplaceholder, they used to work in the0.2.xand0.3.xreleases without a need to feed it or deal with it in any way. - Models coming from HuggingFace don't have an

asset_path_initializerplaceholder, so we don't have an issue with those

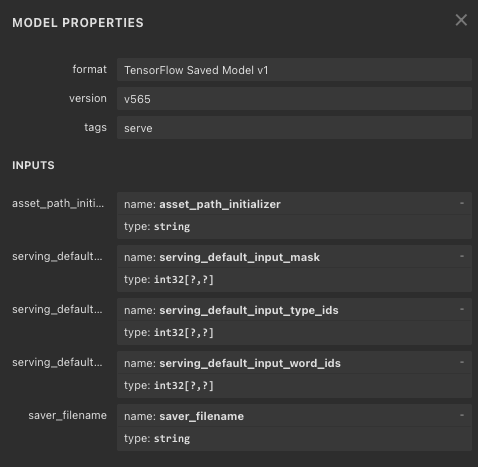

BERT on TF Hub:

BERT on HuggingFace:

Is there anything we need to do since 0.4.x is on TensorFlow 2.7.x, I am not sure if this is a change in newer TF or in tensorflow-java.

PS: We don't use any exporters, we saved the model differently. A very quick and simple example of how we do this:

val model = SavedModelBundle.load(modelPath, "serve")

/* Save the model */

val graph = model.graph()

val session = model.session()

val exportDir = Files.createDirectory(Paths.get("./saved_model")).toAbsolutePath.toString

val variablesFile = Paths.get(exportDir, "variables").toString

session.runner

.addTarget("StatefulPartitionedCall_1")

.feed("saver_filename", t.createTensor(variablesFile))

.run()

val graphFile = Paths.get(exportDir, TensorflowWrapper.SavedModelPB).toString

FileUtils.writeByteArrayToFile(new File(graphFile), graph.toGraphDef.toByteArray)

We have been doing this since TF v1 and it has been compatible with all the TF v2 up until 0.4.0, but now if we save it like this (only models from TF Hub and I am guessing those with assets) we see that error)

Originally posted by @maziyarpanahi in https://github.com/tensorflow/java/issues/387#issuecomment-1066628248

~@maziyarpanahi , can you please provide the exact link you use to download your BERT model from TFHub? Because I've just tried it with this version and I had no issue. You say the problem occurs as soon as you load the model using SavedModel.load(...), right?~

Actually that's not true, I'm able to reproduce the error even using a more "standard" way to save the model:

SavedModelBundle.load("models/bert-tfhub").use { bundle ->

SavedModelBundle.exporter("export-bert").apply {

bundle.functions().forEach { withFunction(it) }

}.export()

}

SavedModelBundle.load("export-bert").use { bundle -> // This line fail with same error message

println(bundle.function(Signature.DEFAULT_KEY).signature().toString())

}

Note: this saving method does not copy the original asset folder nor the keras_metadata.pb file but even if they are copied in the folder, it fails the same way when trying to load export-bert

Thanks @karllessard for creating a new issue and testing this. Just in case, this is how I export TF Hub models (it's pretty much the same as HuggingFace, they both end up having an assets directory, TF Hub puts a vocab.txt inside, but we never used assets directory when loading a model via Java)

EXPORTED_MODEL = 'bert_en_uncased_L-2_H-128_A-2'

TF_HUB_URL = 'https://tfhub.dev/tensorflow/small_bert/bert_en_uncased_L-2_H-128_A-2/2'

import tensorflow as tf

import tensorflow_hub as hub

encoder = hub.KerasLayer(TF_HUB_URL, trainable=False)

@tf.function

def my_module_encoder(input_mask, input_word_ids, input_type_ids):

inputs = {

'input_mask': input_mask,

'input_word_ids': input_word_ids,

'input_type_ids': input_type_ids

}

outputs = {

'sequence_output': encoder(inputs)['sequence_output']

}

return outputs

tf.saved_model.save(

encoder,

EXPORTED_MODEL,

signatures=my_module_encoder.get_concrete_function(

input_mask=tf.TensorSpec(shape=(None, None), dtype=tf.int32),

input_word_ids=tf.TensorSpec(shape=(None, None), dtype=tf.int32),

input_type_ids=tf.TensorSpec(shape=(None, None), dtype=tf.int32)

),

options=None

)

The schema of the SavedModel from TF Hub hasn't changed, it has always been with the assets directory and we never had a need to use it or feed anything for it. (asset_path_initializer placeholder has been there since 2.x at least as far as I remember) - Maybe something did change in 2.7 in terms of how to deal with the asset_path_initializer placeholder.

So playing around with this issue, I found out that in the original BERT model the following AssetFileDef is present in the saved_model.pb, while we don't add when exporting the model in TF Java:

asset_file_def {

tensor_info {

name: "asset_path_initializer:0"

}

filename: "vocab.txt"

}

Adding it allows me to reload the exported model successfully, here's how I rebuild the saved model to be saved in my test:

MetaGraphDef.Builder metaGraphDef =

metaGraphDefBuilder

.setSaverDef(saverDef)

.setGraphDef(graph.toGraphDef())

.addAssetFileDef(AssetFileDef.newBuilder().setTensorInfo(TensorInfo.newBuilder().setName("asset_path_initializer:0")).setFilename("vocab.txt"))

.setMetaInfoDef(MetaInfoDef.newBuilder().addAllTags(Arrays.asList(tags)));

I don't know if something has changed in the TF Runtime that now requires to have a AssetFileDef in the saved_model, but I think we need to add a way to create this entry.

In your case @maziyarpanahi , I see that you are playing directly with the GraphDef but how do you save the whole saved model protobuf, is it what TensorflowWrapper.SavedModelPBis about? If you have a way to add this AssetFileDef yourself before saving the model, that should fix the issue until we have something to propose on our side.

Additional note: the filename field is optional, just providing a tensor_info is sufficient to reload the model.

Question @maziyarpanahi or @wolliq, when SparkNLP creates its own copy of the saved model, does it "freeze" the graph, so that all variables values are embedded inside a single .pb file?

Ok... having a better look at this, I think I understand what's going on.

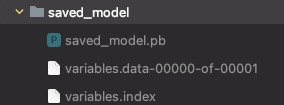

You are basically reexporting a TF model in a very custom way, not only programmatically but also "organizationally". In your case, the saved_model.pb you are writing is not actually a saved model definition, as it tends to indicates, but only a simple graph def. Then you save the variables in separate files under the same folder, that you will reload manually before inference.

By using a simple GraphDef, you are losing all metadata required to load properly a model that has been saved initially using tf.saved_model.save, which is the case with most models found in TFHub. It seems that newer versions of TF adds more dependencies into this metadata. I won't say it is a bug though, as there is probably no a contract saying that a graph definition alone should be sufficient to run a model that was previously saved as a bundle.

If you are reexporting the model only to update the variables, then I would strongly suggest that simply update the variable files under variables/ in your original (or a copy of the) saved model and that should be enough. This way you won't tamper with the saved model definition itself and you can simply reload the model using SavedModelBundle.load. You can then zip the whole folder to serialize it (seems that's already what you are doing with your custom folder).

I've never tried the solution I'm proposing above but I expect it to work and you shouldn't have this issue anymore since the model definition is left untouched. Plus, that would prevent you facing other issues of this kind as we can expect that more dependency to the model metadata could appear in the next releases of TensorFlow.

If you cannot do this quickly enough, then did you tried only feeding up a dummy value to asset_path_initializer when you run a session?

Hi @karllessard

You are basically reexporting a TF model in a very custom way, not only programmatically but also "organizationally". In your case, the saved_model.pb you are writing is not actually a saved model definition, as it tends to indicates, but only a simple graph def. Then you save the variables in separate files under the same folder, that you will reload manually before inference.

If you are reexporting the model only to update the variables, then I would strongly suggest that simply update the variable files under variables/ in your original (or a copy of the) saved model and that should be enough. This way you won't tamper with the saved model definition itself and you can simply reload the model using SavedModelBundle.load. You can then zip the whole folder to serialize it (seems that's already what you are doing with your custom folder).

Yes, this is true. We started using TensorFlow on Java on 1.12 and for very limited trainable graphs which were frozen before being saved. I think throughout the years saving GraphDef stayed the same while both TensorFlow and TF on Java evolved. For most of the models which won't be fine-tuned or changed (like BERT), we can just save them simply without going through this. (everything will be the same)

If you cannot do this quickly enough, then did you tried only feeding up a dummy value to asset_path_initializer when you run a session?

I think we will start refactoring to use more native APIs from tensorflow-java to save/load straightaway since lots of models cannot be further trained within Java, for those we do train we will test what you suggested just so we can stay with new changes from TensorFlow, and for the short-term, I think we will try to see if we can feed asset_path_initializer some dummy inputs so we can move to TensorFlow 2.7 before refactoring.

I will let you know quickly what happens and thanks again Karl especially since this seems to be an edge case and not a bug.

@karllessard quick question regarding feeding dummy values to asset path, I keep getting the following error:

You must feed a value for placeholder tensor 'asset_path_initializer' with dtype string

[[{{node asset_path_initializer}}]]

org.tensorflow.exceptions.TFInvalidArgumentException: You must feed a value for placeholder tensor 'asset_path_initializer' with dtype string

[[{{node asset_path_initializer}}]]

val dummyTensor = TString.scalarOf("vocab.txt")

.feed("asset_path_initializer:0", dummyTensor)

// or

// .feed("asset_path_initializer", dummyTensor)

This is what I get from model.metaGraphDef().getAssetFileDef(0) which is also confirm what you mentioned earlier regarding maybe adding addAssetFileDef would solve. However, I am not sure how I can directly feed this asset_path_initializer:0:

tensor_info {

name: "asset_path_initializer:0"

}

filename: "vocab.txt"

So you feed this placeholder in the same session runner that you use for inference and still get the same error?

Yes, it's the same session runner that complains about the same error in TensorFlow 2.7:

[ERROR] org.tensorflow.exceptions.TFInvalidArgumentException: You must feed a value for placeholder tensor 'asset_path_initializer' with dtype string

So I thought maybe feeding it with any arbitrary TString would work, but it complains about the same error.

I also checked, the graph file we save and load via Bytes (importGraphDef(GraphDef.parseFrom(graphBytesDef)) and save/load as saved_model.pb, they all have graph.operation("asset_path_initializer").name() correctly inside. So I am not sure why I can't feed this operation and still complains about the same error. (so we are not removing anything from the graph regarding asset_path_initializer unless there are other info required which is not in those bytes)

Ok, I understand better what is happening. This asset_path_initializer is marked as a dependency to one of the "init ops" in the graph (i.e. these ops that must be executed once before running the graph, often to assign a default value to the variables).

Before, initializing the model must be done manually, but in TF Java >= 0.4.0, it does it automatically. The problem though is that asset_path_initializer is a placeholder so it requires to be fed. The init runner does not support this, hence the failure. So the exception is not thrown by your model inference but by the initialization part.

In your case though, since you are reloading the variable values manually, you don't necessarily need/want to run this initialization. So a solution could be to let users the ability to disable entirely the session initialization. Unfortunately, TF Java does not exposes the method to the outside world to do this, so that would require a change in the code.

I'll try to think if there is a way we can avoid doing a new release for this and come back to you. This is still a corner case since most users will use the SavedModelBundle to run a graph from a saved model, which does not have this issue since the initialization in this case is taken care by the TF native library itself (which probably knows something about asset_path_initializer explicitly).

I know it is a dirty hack but maybe using reflection you can access the Session.setInitialized() protected method to disable the initialization, right after you create the session like in here. Can you give it a try?

Hi @karllessard

Thanks for the detailed explanation. Unfortunately, we use TensorFlow and offer features to users in Spark NLP rather than leaving users with the need to do anything, unlike other libraries where that have DL engines which are more flexible. When we load/read a TF model the rest is on auto-pilot without any option for users to choose anything. (so all the models have universal feeds/fetch depending on their architecture regardless of where they are coming from - like BERT, so it is a bit hard to keep checking if this BERT has an extra init or something to be fed)

I can see a few possibilities:

- If possible have this automatically handled like pre-0.4.0 since it's not really being used. (with the possibility of users wanting to override it with a new value as you did via addAssetFileDef)

- When importing a graph via graph.importGraphDef it is clear there is the node for

asset_path_initializerand also there is a way to see it as an init viagraph.initializers(). Maybe a way to remove/delete the node and initializer right there?

graph.toGraphDef.getNodeList

name: "asset_path_initializer"

op: "Placeholder"

attr {

key: "dtype"

value {

type: DT_STRING

}

}

attr {

key: "shape"

value {

shape {

}

}

}

graph.initializers()

[<Placeholder 'asset_path_initializer'>, <VarHandleOp 'Variable'>, <AssignVariableOp 'Variable/Assign'>]

- The very last option, since these models are always first saved/exported in Python and then loaded in Spark NLP, can we just remove/delete this

asset_path_initializernode/init right there before all this? (we have notebooks for every model, we can just update them with a line or two to remove this since there is absolutely no use for it)

Hi @maziyarpanahi ,

Sorry but what I meant by "users" is TF java users (i.e. you) and not SparkNLP users :)

So my idea was that you could disabled automatic session initialization within your TensorflowWrapper class. If you can give it a quick try by calling Session.setInitialized() right after you create the Session instance, it could work, but you need to use reflection to access this method as it is right now package-protected. So that would be a temporary hack until you use SavedModelBundle... Wdyt?

I've also thought about deleting the node from the graph def but the problem is that node is an input to another variable node so we'll probably need to mutate this other node as well and I was afraid that could become a bit too complex. Still that could be possible. Not sure that we can prevent the Python client to create that node in the first place, I'm gonna check.

Another dirty hack (but maybe less dirty?) is to exploit a "flaw" in TF Java that will mark the session as initialized even if the initialization fail. You can run Session.initialize() manually just after instantiating it and catch/discard any exception that is happening there since you don't really care in your case. Something like:

Session session = new Session(graph, false); // false disables automatic initialization but still requires that you can `initialize()` manually

try {

session.initialize()

} catch (Exception e) {

// ignore this

}

// restore manually the variables and you can safely use the session now ...

I think that's probably your best option so far. I plan to handle this properly in TF Java but that would be via AssetFileDef, which requires you to use the SavedModelBundle loader so it won't be helpful for you right now until you refactor that code.

Thanks @karllessard, your second suggestion worked perfectly!

Session session = new Session(graph); // I didn't disable the auto init here, it works without that

try {

session.initialize()

} catch (Exception e) {

// ignore this

}

I will do more tests here to be sure nothing is affected by this (it shouldn't since we use this part only for embeddings from TF Hub and HuggingFace) and hopefully, we are ready to be migrated to TF 2.7 (0.4.1). By the time you are done with AssetFileDef I think I'll be ready to finally use the TF native exporter (this also shouldn't be hard, it's just a matter of refactoring and lots of tests)

Many thanks again Karl, this really helped me to migrate to TF 2.7 and not fall behind on the tensorflow-java releases. IF anything new happened I'll update this thread.

Wonderful! Let me know if you observe any strange behavior.

One more question for you: is the reason of re-exporting a saved model only for updating the variables with newly trained values? If so, I think that could be very common case that would benefit from having a dedicated new endpoint for this, something like SavedModelBundle.updateVariablesFromSession(Session).

Wonderful! Let me know if you observe any strange behavior. Absolutely!

One more question for you: is the reason of re-exporting a saved model only for updating the variables with newly trained values? If so, I think that could be very common case that would benefit from having a dedicated new endpoint for this, something like

SavedModelBundle.updateVariablesFromSession(Session).

Yes, at the beginning the reason for that was to save the trained models. Unfortunately, we started using the same process to save models which obviously don't need all that like embeddings. I would say having a feature like updateVariablesFromSession would be very useful!