Mutual information metric

Description

Adds the MutualInformation metric.

The implementation is based on the mutual information estimator described in Weihao Gao, Sreeram Kannan, Sewoong Oh, and Pramod Viswanath, "Estimating Mutual Information for Discrete-Continuous Mixtures": https://arxiv.org/pdf/1709.06212v3.pdf

The estimator works on any kind of distribution: discrete, continuous or a mix of both.

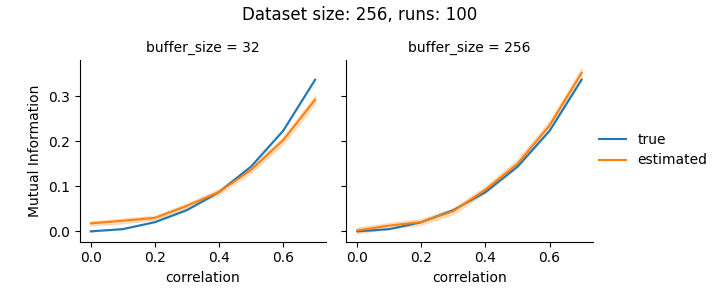

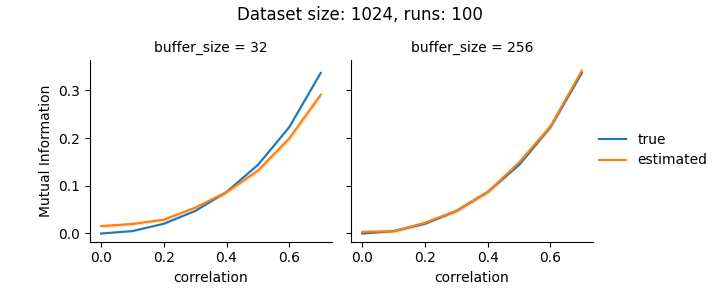

This implementation has a memory complexity of O(buffer_size × compute_batch_size) and a time complexity of O(buffer_size^2). Both can be tuned through the corresponding parameters: a smaller buffer_size increases the bias of the estimator, while a smaller compute_batch_size increases the computation time.
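To illustrate where these complexities come from, here is a minimal NumPy sketch of a k-nearest-neighbour estimator in the spirit of the cited paper. It is not the code in this PR: the function names `mixed_mi` and `digamma_int`, the default `k=3`, and the exact self-exclusion and `+1` conventions are assumptions made for illustration. Each batch of `compute_batch_size` rows is compared against all `n` buffered samples, so only a `(compute_batch_size, n)` distance matrix is live at a time, while the total work still grows with `n^2`.

```python
import numpy as np


def digamma_int(n):
    """Digamma at positive integers: psi(n) = -gamma + sum_{m=1}^{n-1} 1/m."""
    n = np.asarray(n, dtype=np.int64)
    # harmonic[j] holds H_j = 1 + 1/2 + ... + 1/j, with H_0 = 0.
    harmonic = np.concatenate(([0.0], np.cumsum(1.0 / np.arange(1, n.max() + 1))))
    return -np.euler_gamma + harmonic[n - 1]


def mixed_mi(x, y, k=3, compute_batch_size=64):
    """Sketch of a kNN mutual information estimate (in nats) for mixed data.

    Hypothetical helper, not the PR's API. Requires k < number of samples.
    """
    x = np.asarray(x, dtype=np.float64).reshape(len(x), -1)
    y = np.asarray(y, dtype=np.float64).reshape(len(y), -1)
    n = x.shape[0]
    total = 0.0
    for start in range(0, n, compute_batch_size):
        stop = min(start + compute_batch_size, n)
        # Max-norm distances from each row of the batch to every sample.
        dx = np.abs(x[start:stop, None, :] - x[None, :, :]).max(axis=-1)
        dy = np.abs(y[start:stop, None, :] - y[None, :, :]).max(axis=-1)
        dxy = np.maximum(dx, dy)
        # Sorted position 0 is the point itself (distance 0), so position k
        # is the distance to the k-th nearest other sample.
        rho = np.partition(dxy, k, axis=1)[:, k]
        # With ties at distance zero (discrete data), use the number of
        # coinciding samples instead of k. Assumption: self is excluded.
        k_tilde = np.where(rho == 0, (dxy == 0).sum(axis=1) - 1, k)
        # Neighbour counts in each marginal space, self excluded.
        nx = (dx <= rho[:, None]).sum(axis=1) - 1
        ny = (dy <= rho[:, None]).sum(axis=1) - 1
        total += np.sum(
            digamma_int(k_tilde) + np.log(n) - np.log(nx + 1) - np.log(ny + 1)
        )
    return total / n
```

Changing `compute_batch_size` in this sketch only changes how many rows are processed per step, not the estimate itself, which matches the memory/time trade-off described above.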

The effect of the buffer_size parameter is depicted in the figures in this description. They show the performance of the estimator when X and Y are drawn from a multivariate Gaussian with different correlation coefficients. Lower values of buffer_size increase the bias but preserve the variance.
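For readers who want to reproduce this kind of comparison, the sketch below generates correlated Gaussian samples and computes the ground-truth value an estimate can be checked against. It assumes the simplest case, a bivariate Gaussian with unit variances, for which the mutual information has the closed form -0.5 * log(1 - rho^2) in nats. The helper names `gaussian_mi` and `sample_correlated_gaussian` are hypothetical and are not part of this PR.

```python
import numpy as np


def gaussian_mi(rho):
    """Closed-form I(X;Y) in nats for a bivariate Gaussian with correlation rho."""
    return -0.5 * np.log1p(-rho ** 2)


def sample_correlated_gaussian(n, rho, seed=0):
    """Draw n pairs (x, y) with unit variances and correlation rho."""
    rng = np.random.default_rng(seed)
    cov = [[1.0, rho], [rho, 1.0]]
    xy = rng.multivariate_normal([0.0, 0.0], cov, size=n)
    # Return column vectors of shape (n, 1) for x and y.
    return xy[:, :1], xy[:, 1:]


# Reference values for a few correlation coefficients.
for rho in (0.0, 0.3, 0.6, 0.9):
    print(f"rho={rho:.1f}  true MI={gaussian_mi(rho):.4f} nats")
```

Feeding such samples to the metric for several buffer sizes and comparing against `gaussian_mi(rho)` gives the bias, and repeating with different seeds gives the variance.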

Type of change

- [X] New Metric and the changes conform to the metric contribution guidelines

Checklist:

- [X] I've properly formatted my code according to the guidelines

- [X] By running pre-commit hooks

- [X] I have made corresponding changes to the documentation

- [X] I have added tests that prove my fix is effective or that my feature works

Hi @bhack, can you have a look at this?

Will review this weekend if no one else gets to it. Thanks for the contribution

Thank you for your contribution. We sincerely apologize for any delay in reviewing, but TensorFlow Addons is transitioning to a minimal maintenance and release mode. New features will not be added to this repository. For more information, please see our public messaging on this decision: TensorFlow Addons Wind Down

Please consider sending feature requests / contributions to other repositories in the TF community with similar charters to TFA: Keras, Keras-CV, Keras-NLP