postgrest-js

postgrest-js copied to clipboard

postgrest-js copied to clipboard

API no working reliably (FetchError: Unexpected token < in JSON at position 1)

Bug report

Describe the bug

Calling Supabase API and RPC functions sometimes gives the following error "FetchError: Unexpected token < in JSON at position 1"

To Reproduce

No general way to reproduce it as it is an intermittent issue probably related to server performance or how Supabase provisions the Postgres cluster. But chances are that you can query your logs for 500 errors or look into your GA (Google Analytics) and you will find some there.

Expected behavior

API should work as expected and not only sometimes.

Screenshots

Additional context

Initially, I was getting a "FetchError: Unexpected token < in JSON at position 1" error at the application level so I started looking deeper and find out that Cloudflare is sending and timeout response that can not be decoded as a JSON response and hence the error.

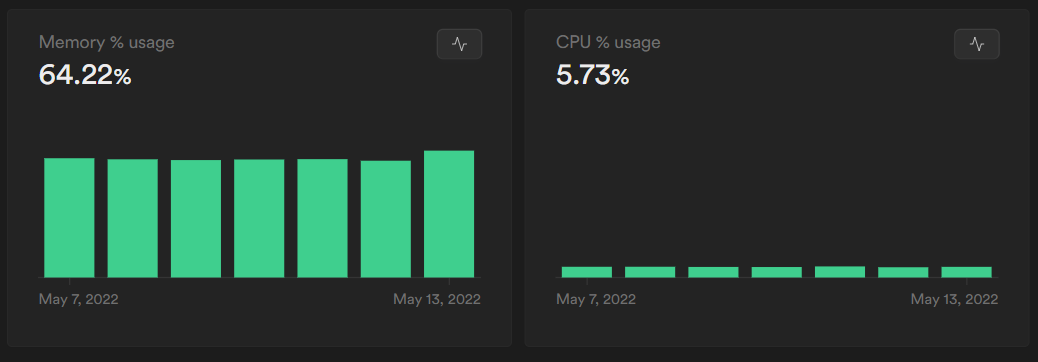

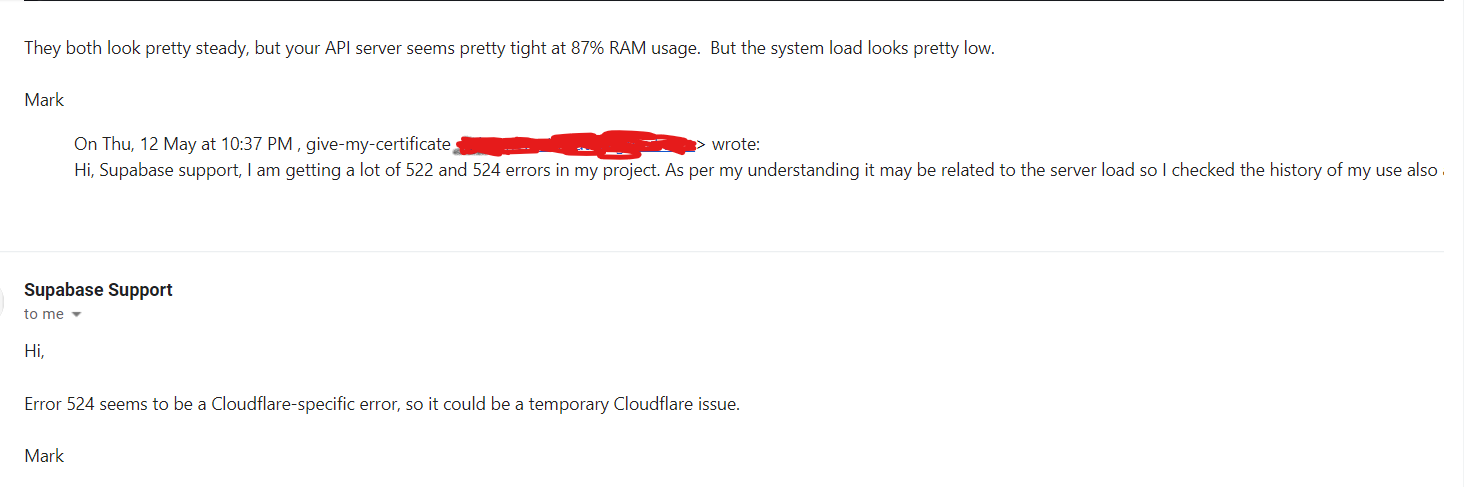

So I look into the logs and found that there are logs with 522 and 524 status codes. I thought that this could be an issue with the server resources and checked for the server loads but that is not the case here as evident by the screenshot attached.

Tried raising the issue with Supabase support and received the most unhelpful response that is possible and they never reply to the follow-up emails.

I believe this is related to the issue I created a few days ago => https://github.com/supabase/supabase/issues/6813

Essentially the JSON body response from Supabase API is always undefined in my case...

@jellohouse Yes, It is the same. I did some more research on top of that as in why the JSON body response from Supabase API is undefined. It seems that the handshake between cloudflare and Supabase servers are not completing. However I am not very sure, But I have a hunch that Supabase servers are overloading from time to time as I have also noticed that API response times are also not consistent

@steve-chavez Is there any way I can help in resolving the same, Default cloudflare timeout is 60 second which should be enough for all queries except bulk insert/Update and rpc functions (with complex joins). I also go through Supabase source code but everything looks fine from a distance. In my understanding it must be something to do with the postgresql configruaton set by Supabase as my server have a lot of free RAM and CPU, Less than 500K records per table. Max 20 tables including Auth once. Still slow query logs are showing few simple write queries taking more than 100 seconds.

I would love to help in debugging the issue further but how the Supabase servers are configured are closed source.

@awalias @kiwicopple Please look into it as communicating with your support is literally not possible

No reply in 4 days

@give-my-certificate We're thinking of handling Cloudflare timeouts with retries in https://github.com/supabase/postgrest-js/pull/267.

n my understanding it must be something to do with the postgresql configruaton set by Supabase as my server have a lot of free RAM and CPU

Bear in mind that if you do many UPDATEs, then locks on rows will be acquired - this might not cause noticeable CPU/RAM consumption, but could cause waiting and slow responses.

Sorry about the slow reply in support, I'll come back to this one.

@steve-chavez

Bear in mind that if you do many UPDATEs, then locks on rows will be acquired - this might not cause noticeable CPU/RAM consumption, but could cause waiting and slow responses.

You are right, I forgot about locks, However, After your comment, I crosschecked query logs with slow query timestamps and it doesn't looks like a lock problem in this case.

One weird thing though, I upgraded my instance type from micro to small and it drastically reduced 522 and 524 errors despite having no RAM crunch.

We're thinking of handling Cloudflare timeouts with retries in https://github.com/supabase/postgrest-js/pull/267.

I have gone through the pull request code and discussion and have few questions/edge case scenarios. Also as I am no expert in databases, So pardon me if these are the foolish scenarios.

- What about array push operations, where the push/pop operation (Using RPC functions) times out on Cloudflare while the actual database write is successful, wouldn't a retry create duplicate entries.

- Similarly for the write operations, where primary key is missing, There may be a chance of having two or more records with same data .

- In a case where timeout is happening due to resource crunch on the server. Wouldn't retries create too much load on top.

- Also as timeouts are also happening on storage operations. Could there be a case where the object table updates without actual object being uploaded to s3. In this scenario even retries won't work.

Also as I am no expert in databases, So pardon me if these are the foolish scenarios.

These are great questions @give-my-certificate.

What about array push operations, where the push/pop operation (Using RPC functions) times out on Cloudflare while the actual database write is successful, wouldn't a retry create duplicate entries.

Yeah, on https://github.com/supabase/postgrest-js/pull/267 there won't be retries for POST requests, underlyingly the js library calls RPC with the POST method.

For now retries in the PR are only targeting GET requests.

Similarly for the write operations, where primary key is missing, There may be a chance of having two or more records with same data .

Same here, POST is used for insert and upsert.

In a case where timeout is happening due to resource crunch on the server. Wouldn't retries create too much load on top.

That's a concern I have as well. My idea is to additionally add backpressure to the REST API, so when it's overloaded it will send a 503 Service Unavailable plus a Retry-After: x header that the js client can use to retry after x seconds.

This overloaded state could also be notified through the UI somehow, so the user can try to fix queries or consider upgrading the instance.

Also as timeouts are also happening on storage operations. Could there be a case where the object table updates without actual object being uploaded to s3. In this scenario even retries won't work.

Updates are done with the PATCH method, so no retries as well.

Overall this is still being worked on, https://github.com/supabase/postgrest-js/pull/267 is still a draft.

ran into same issue today! :|,

i am using supabase as backend for projects so try to understand and resolve these errors!

This one was solved with https://github.com/PostgREST/postgrest/pull/2449/ and released on v10.1.0. This version(actually v10.1.1) is already deployed to all supabase projects.

If a timeout happens, nowadays a 504 Gateway timeout status will be reported with the Timed out acquiring connection from connection pool error message.

Will close for now. Please reopen if the error persists.