clonezilla

Issue with megasr (software raid 0, not mdadm)

Hello, I tried to make a disk-image backup of a Cisco UCS server that uses the megasr module to access /dev/sda, which is a RAID 0.

Jan 31 16:30:38 server kernel: :LSI MegaSR RAID5 version v18.03.2022.0626, built on Jun 21 2022 at 07:02:05

Jan 31 16:30:38 server kernel: :megasr: [RAID HBA] 0x8086:0xa1d6:0x1137:0x0101: bus 0:slot 17:func 5

Jan 31 16:30:38 server kernel: :megasr[ahci]: HBA capabilities[0xe734ff45], S64A:[x] SNCQ:[x] SSNTF:[x] SSS:[ ] ISS:[0x3] SPM:[ ] SSC:[x] PSC:[x] NCS:[0x1f] CCCS:[ ] EMS:[x] NP:[0x5]

Jan 31 16:30:38 server kernel: :megasr[ahci]: Device:[0:Micron_5300_MTFD] NCQ:[Yes] Queue Depth:[0x20] capacity=0x1bf244b0

Jan 31 16:30:38 server kernel: :megasr[ahci]: Device:[2:Micron_5300_MTFD] NCQ:[Yes] Queue Depth:[0x20] capacity=0x1bf244b0

Jan 31 16:30:38 server kernel: :megasr: raid 1 logical drive is online, is fully initialized, has 2 drives, and has a size of 0x1bd2c000 sectors.

Jan 31 16:30:38 server kernel: :megasr[cfg]: mgr, improper system shutdown detected, raid 5 LD init state changed to Not initialized

Jan 31 16:30:38 server kernel: :megasr[raid_key]: [ ] RAID5 support [ ] SAS drive support

Jan 31 16:30:38 server kernel: scsi host0: LSI MegaSR RAID5

Jan 31 16:30:38 server kernel: :megasr: [RAID HBA] 0x8086:0xa186:0x1137:0x0101: bus 0:slot 23:func 0

Jan 31 16:30:38 server kernel: scsi 0:2:0:0: Direct-Access LSI MegaSR 1.0 PQ: 0 ANSI: 5

Jan 31 16:30:38 server kernel: :megasr[ahci]: HBA capabilities[0xe734ff47], S64A:[x] SNCQ:[x] SSNTF:[x] SSS:[ ] ISS:[0x3] SPM:[ ] SSC:[x] PSC:[x] NCS:[0x1f] CCCS:[ ] EMS:[x] NP:[0x7]

Jan 31 16:30:39 server kernel: :megasr[cfg]: err ldf RCL packet free logic error

Jan 31 16:30:39 server kernel: :megasr[raid_key]: [ ] RAID5 support [ ] SAS drive support

Jan 31 16:30:39 server kernel: scsi host1: LSI MegaSR RAID5

Jan 31 16:30:39 server kernel: scsi 0:2:0:0: Attached scsi generic sg0 type 0

Jan 31 16:30:39 server kernel: sd 0:2:0:0: [sda] 466796544 512-byte logical blocks: (239 GB/223 GiB)

Jan 31 16:30:39 server kernel: sd 0:2:0:0: [sda] 4096-byte physical blocks

Jan 31 16:30:39 server kernel: sd 0:2:0:0: [sda] Write Protect is off

Jan 31 16:30:39 server kernel: sd 0:2:0:0: [sda] Mode Sense: 17 00 00 00

Jan 31 16:30:39 server kernel: sd 0:2:0:0: [sda] Write cache: disabled, read cache: disabled, doesn't support DPO or FUA

Jan 31 16:30:39 server kernel: sda: sda1 sda2 sda3

Jan 31 16:30:39 server kernel: sd 0:2:0:0: [sda] Attached SCSI disk

Clonezilla detects the disk as md126:

[root@server2 dd31-2023-02-02-11-img]# cat md126.txt

MD_LEVEL=raid1

MD_DEVICES=2

MD_CONTAINER=/dev/md/ddf0

MD_MEMBER=0

MD_UUID=04082469:9acd8130:20436f81:0183d381

MD_DEVNAME=vd0_0

MD_DEVICE_dev_sda_ROLE=0

MD_DEVICE_dev_sda_DEV=/dev/sda

MD_DEVICE_dev_sdb_ROLE=1

MD_DEVICE_dev_sdb_DEV=/dev/sdb

But it is not an mdadm device; the RAID 0 is managed by the megasr kernel module.
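For readers following along: the fields in `md126.txt` look like the KEY=VALUE output of `mdadm --detail --export` (an assumption; the thread does not say how Clonezilla produced the file). Below is a minimal Python sketch that reads the dump pasted above. The helper names `parse_export` and `member_devices` are made up for illustration.

```python
# Sketch only: the text is copied from md126.txt in this post.
MD126_TXT = """\
MD_LEVEL=raid1
MD_DEVICES=2
MD_CONTAINER=/dev/md/ddf0
MD_MEMBER=0
MD_UUID=04082469:9acd8130:20436f81:0183d381
MD_DEVNAME=vd0_0
MD_DEVICE_dev_sda_ROLE=0
MD_DEVICE_dev_sda_DEV=/dev/sda
MD_DEVICE_dev_sdb_ROLE=1
MD_DEVICE_dev_sdb_DEV=/dev/sdb
"""


def parse_export(text):
    """Build a dict from KEY=VALUE lines, skipping anything else."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if "=" not in line:
            continue
        key, value = line.split("=", 1)
        info[key] = value
    return info


def member_devices(info):
    """Return member device paths, ordered by role where the role is numeric."""
    members = []
    for key, value in info.items():
        if key.startswith("MD_DEVICE_") and key.endswith("_DEV"):
            role = info.get(key[: -len("_DEV")] + "_ROLE", "")
            order = int(role) if role.isdigit() else 10**6  # non-numeric roles last
            members.append((order, value))
    return [dev for _, dev in sorted(members)]


info = parse_export(MD126_TXT)
print(info["MD_LEVEL"], info["MD_CONTAINER"], member_devices(info))
# prints: raid1 /dev/md/ddf0 ['/dev/sda', '/dev/sdb']
```

The dump reports `MD_LEVEL=raid1` and a container named `/dev/md/ddf0`, which suggests Linux md assembled the two disks as a mirror from metadata found on the disks rather than from any mdadm configuration made by hand.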

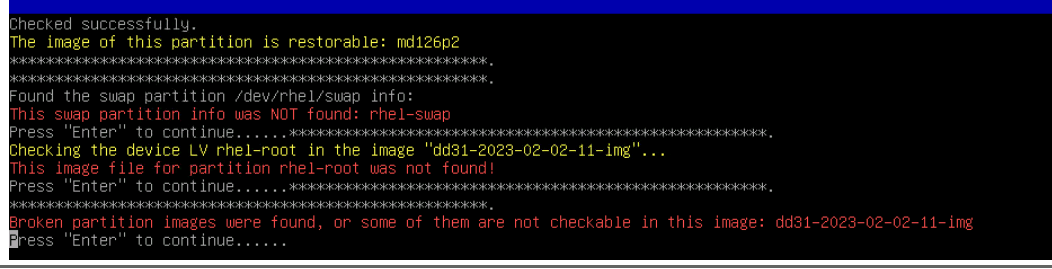

The backup seems to run correctly, but at the end the check fails for 2 of the 3 partitions.

- md126p2 (/boot) is checked as restorable.

- md126p3 (rhel LVM) has errors:

  - /dev/mapper/rhel-swap: This swap partition info was NOT found

  - /dev/mapper/rhel-root: This image file for partition rhel-root was not found!

- md126p1 (/boot/efi): I don't know.

Any idea?

How can I back up only the /dev/sda drive?

[root@server2 dd31-2023-02-02-11-img]# cat blkdev.list

KNAME NAME SIZE TYPE FSTYPE MOUNTPOINT MODEL

loop0 loop0 302.7M loop squashfs /usr/lib/live/mount/rootfs/filesystem.squashfs

sda sda 223.6G disk ddf_raid_member Micron_5300_MTFDDAV240TDS

sda1 |-sda1 600M part vfat

sda2 |-sda2 1G part xfs

sda3 |-sda3 221G part LVM2_member

md126 |-md126 222.6G raid1

md126p1 | |-md126p1 600M part vfat

md126p2 | |-md126p2 1G part xfs

md126p3 | `-md126p3 221G part LVM2_member

md127 `-md127 0B md

sdb sdb 223.6G disk ddf_raid_member Micron_5300_MTFDDAV240TDS

sdb1 |-sdb1 600M part vfat

sdb2 |-sdb2 1G part xfs

sdb3 |-sdb3 221G part LVM2_member

md126 |-md126 222.6G raid1

md126p1 | |-md126p1 600M part vfat

md126p2 | |-md126p2 1G part xfs

md126p3 | `-md126p3 221G part LVM2_member

md127 `-md127 0B md

sdc sdc 0B disk vKVM-Mapped vHDD

sdd sdd 0B disk vKVM-Mapped vFDD

sde sde 0B disk CIMC-Mapped vHDD

sdf sdf 0B disk Flexutil HDD

sr0 sr0 363M rom vKVM-Mapped vDVD

sr1 sr1 1024M rom CIMC-Mapped vDVD

sr2 sr2 1024M rom Flexutil DVD 1

sr3 sr3 1024M rom Flexutil DVD 2
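In the `blkdev.list` above, both physical disks carry the FSTYPE `ddf_raid_member`, and an md array of type `raid1` sits on top of them. DDF is an on-disk RAID metadata format that Linux md can also assemble, so this may be why md126 shows up in the Clonezilla environment even though the array was created by the controller firmware. A rough Python sketch that pulls those two facts out of the listing follows. It assumes the column alignment was lost when pasting, so it only relies on token order; `parse_row` is a hypothetical helper, and only a few rows are copied in.

```python
# Sketch only: a few rows copied from blkdev.list in this post.
BLKDEV_LIST = """\
sda sda 223.6G disk ddf_raid_member Micron_5300_MTFDDAV240TDS
sda1 |-sda1 600M part vfat
md126 |-md126 222.6G raid1
md126p1 | |-md126p1 600M part vfat
sdb sdb 223.6G disk ddf_raid_member Micron_5300_MTFDDAV240TDS
md126 |-md126 222.6G raid1
sdc sdc 0B disk vKVM-Mapped vHDD
"""


def parse_row(line):
    """Split one row into KNAME, SIZE, TYPE and the remaining tokens."""
    tokens = line.split()
    kname = tokens[0]
    # The NAME column repeats KNAME, possibly behind tree-drawing characters.
    name_idx = next(i for i in range(1, len(tokens)) if tokens[i].endswith(kname))
    return {
        "kname": kname,
        "size": tokens[name_idx + 1],
        "type": tokens[name_idx + 2],
        "rest": tokens[name_idx + 3:],  # FSTYPE / MOUNTPOINT / MODEL, unaligned
    }


rows = [parse_row(line) for line in BLKDEV_LIST.splitlines() if line.strip()]

# Physical disks that carry a DDF RAID signature.
ddf_members = sorted(
    {r["kname"] for r in rows if r["type"] == "disk" and "ddf_raid_member" in r["rest"]}
)
# md arrays that the kernel assembled on top of them.
md_arrays = sorted({r["kname"] for r in rows if r["type"].startswith("raid")})

print(ddf_members, md_arrays)
# prints: ['sda', 'sdb'] ['md126']
```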

[root@server2 dd31-2023-02-02-11-img]# cat blkid.list

/dev/loop0: TYPE="squashfs"

/dev/md126p1: UUID="A6BC-0C1C" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="7b05d612-3b8a-4bfa-8f20-ee59085c28e8"

/dev/md126p2: UUID="8172a5bd-b2c7-4823-9b12-0b2995dbce74" BLOCK_SIZE="4096" TYPE="xfs" PARTUUID="26098ebb-ac62-48f4-a002-ff5504b97ed0"

/dev/md126p3: UUID="Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz" TYPE="LVM2_member" PARTUUID="6a056e3a-e2e7-47d9-81f3-4dae0d2f6054"

/dev/sda1: UUID="A6BC-0C1C" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="7b05d612-3b8a-4bfa-8f20-ee59085c28e8"

/dev/sda2: UUID="8172a5bd-b2c7-4823-9b12-0b2995dbce74" BLOCK_SIZE="4096" TYPE="xfs" PARTUUID="26098ebb-ac62-48f4-a002-ff5504b97ed0"

/dev/sda3: UUID="Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz" TYPE="LVM2_member" PARTUUID="6a056e3a-e2e7-47d9-81f3-4dae0d2f6054"

/dev/sdb1: UUID="A6BC-0C1C" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="7b05d612-3b8a-4bfa-8f20-ee59085c28e8"

/dev/sdb2: UUID="8172a5bd-b2c7-4823-9b12-0b2995dbce74" BLOCK_SIZE="4096" TYPE="xfs" PARTUUID="26098ebb-ac62-48f4-a002-ff5504b97ed0"

/dev/sdb3: UUID="Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz" TYPE="LVM2_member" PARTUUID="6a056e3a-e2e7-47d9-81f3-4dae0d2f6054"

[root@server2 user]# cat clonezilla.log

Starting /usr/sbin/ocs-sr at 2023-02-02 11:37:34 UTC...

*****************************************************.

Clonezilla image dir: /home/partimag

Shutting down the Logical Volume Manager

Shutting Down logical volume: /dev/rhel/home

Shutting Down logical volume: /dev/rhel/root

Shutting Down logical volume: /dev/rhel/swap

Shutting Down volume group: rhel

Finished Shutting down the Logical Volume Manager

The selected devices: md126

PS. Next time you can run this command directly:

/usr/sbin/ocs-sr -q2 -c -j2 -z9p -i 0 -fsck -senc -p choose savedisk dd31-2023-02-02-11-img md126

*****************************************************.

The selected devices: md126

Searching for data/swap/extended partition(s)...

The data partition to be saved: md126p1 md126p2 md126p3

The selected devices: md126p1 md126p2 md126p3

The following step is to save the hard disk/partition(s) on this machine as an image:

*****************************************************.

Machine: UCSC-C220-M5SX

md126 (239GB_Unknown_model_md-uuid-04082469:9acd8130:20436f81:0183d381)

md126p1 (600M_vfat_md-uuid-04082469:9acd8130:20436f81:0183d381)

md126p2 (1G_xfs_md-uuid-04082469:9acd8130:20436f81:0183d381)

md126p3 (221G_LVM2_member_md-uuid-04082469:9acd8130:20436f81:0183d381)

*****************************************************.

-> "/home/partimag/dd31-2023-02-02-11-img".

Shutting down the Logical Volume Manager

Shutting Down logical volume: /dev/rhel/home

Shutting Down logical volume: /dev/rhel/root

Shutting Down logical volume: /dev/rhel/swap

Shutting Down volume group: rhel

Finished Shutting down the Logical Volume Manager

Starting saving /dev/md126p1 as /home/partimag/dd31-2023-02-02-11-img/md126p1.XXX...

/dev/md126p1 filesystem: vfat.

*****************************************************.

*****************************************************.

Use partclone with zstdmt to save the image.

Image file will not be split.

*****************************************************.

If this action fails or hangs, check:

* Is the disk full ?

*****************************************************.

Running: partclone.vfat -z 10485760 -N -L /var/log/partclone.log -c -s /dev/md126p1 --output - | zstdmt -c -3 > /home/partimag/dd31-2023-02-02-11-img/md126p1.vfat-ptcl-img.zst 2> /tmp/img_out_err.2uDt0F

Partclone v0.3.20 http://partclone.org

Starting to clone device (/dev/md126p1) to image (-)

Reading Super Block

memory needed: 21125124 bytes

bitmap 153600 bytes, blocks 2*10485760 bytes, checksum 4 bytes

Calculating bitmap... Please wait...

done!

File system: FAT32

Device size: 629.1 MB = 1228800 Blocks

Space in use: 8.3 MB = 16248 Blocks

Free Space: 620.8 MB = 1212552 Blocks

Block size: 512 Byte

Total block 1228800

Syncing... OK!

Partclone successfully cloned the device (/dev/md126p1) to the image (-)

>>> Time elapsed: 7.34 secs (~ .122 mins)

*****************************************************.

Finished saving /dev/md126p1 as /home/partimag/dd31-2023-02-02-11-img/md126p1.vfat-ptcl-img.zst

*****************************************************.

Starting saving /dev/md126p2 as /home/partimag/dd31-2023-02-02-11-img/md126p2.XXX...

/dev/md126p2 filesystem: xfs.

*****************************************************.

*****************************************************.

Use partclone with zstdmt to save the image.

Image file will not be split.

*****************************************************.

If this action fails or hangs, check:

* Is the disk full ?

*****************************************************.

Running: partclone.xfs -z 10485760 -N -L /var/log/partclone.log -c -s /dev/md126p2 --output - | zstdmt -c -3 > /home/partimag/dd31-2023-02-02-11-img/md126p2.xfs-ptcl-img.zst 2> /tmp/img_out_err.jUKKvK

Partclone v0.3.20 http://partclone.org

Starting to clone device (/dev/md126p2) to image (-)

Reading Super Block

xfsclone.c: Open /dev/md126p2 successfullyxfsclone.c: fs_close

memory needed: 21004292 bytes

bitmap 32768 bytes, blocks 2*10485760 bytes, checksum 4 bytes

Calculating bitmap... Please wait...

xfsclone.c: Open /dev/md126p2 successfullyxfsclone.c: bused = 51065, bfree = 211079

xfsclone.c: fs_close

done!

File system: XFS

Device size: 1.1 GB = 262144 Blocks

Space in use: 209.2 MB = 51065 Blocks

Free Space: 864.6 MB = 211079 Blocks

Block size: 4096 Byte

Total block 262144

Syncing... OK!

Partclone successfully cloned the device (/dev/md126p2) to the image (-)

>>> Time elapsed: 9.27 secs (~ .154 mins)

*****************************************************.

Finished saving /dev/md126p2 as /home/partimag/dd31-2023-02-02-11-img/md126p2.xfs-ptcl-img.zst

*****************************************************.

Parsing LVM layout for md126p1 md126p2 md126p3 ...

rhel /dev/md126p3 Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz

Parsing logical volumes...

Saving the VG config...

WARNING: Not using device /dev/sdb3 for PV Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz.

WARNING: Not using device /dev/sda3 for PV Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz.

WARNING: PV Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz prefers device /dev/md126p3 because device is in subsystem.

WARNING: PV Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz prefers device /dev/md126p3 because device is in subsystem.

Volume group "rhel" successfully backed up.

done.

Checking if the VG config was saved correctly...

done.

Saving block devices info in /home/partimag/dd31-2023-02-02-11-img/blkdev.list...

Saving block devices attributes in /home/partimag/dd31-2023-02-02-11-img/blkid.list...

Checking the integrity of partition table in the disk /dev/md126...

Reading the partition table for /dev/md126...RETVAL=0

*****************************************************.

Saving the primary GPT of md126 as /home/partimag/dd31-2023-02-02-11-img/md126-gpt-1st by dd...

34+0 records in

34+0 records out

17408 bytes (17 kB, 17 KiB) copied, 0.00163796 s, 10.6 MB/s

*****************************************************.

Saving the secondary GPT of md126 as /home/partimag/dd31-2023-02-02-11-img/md126-gpt-2nd by dd...

32+0 records in

32+0 records out

16384 bytes (16 kB, 16 KiB) copied, 0.00215308 s, 7.6 MB/s

*****************************************************.

Saving the GPT of md126 as /home/partimag/dd31-2023-02-02-11-img/md126-gpt.gdisk by gdisk...

The operation has completed successfully.

*****************************************************.

Saving the MBR data for md126...

1+0 records in

1+0 records out

512 bytes copied, 0.000596034 s, 859 kB/s

End of saveparts job for image /home/partimag/dd31-2023-02-02-11-img.

*****************************************************.

*****************************************************.

End of savedisk job for image dd31-2023-02-02-11-img.

Checking if udevd rules have to be restored...

This program is not started by Clonezilla server, so skip notifying it the job is done.

This program is not started by Clonezilla server, so skip notifying it the job is done.

Finished!

Finished!

Partition table type: gpt

The partition table file for this disk was found: md126, /home/partimag/dd31-2023-02-02-11-img/md126-pt.sf

The image of this partition is restorable: md126p1

The image of this partition is restorable: md126p2

*****************************************************.

Broken partition images were found, or some of them are not checkable in this image: dd31-2023-02-02-11-img
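Reading the log above, partclone runs appear only for md126p1 and md126p2. After "Parsing LVM layout" the log records saving the VG config but shows no partclone run for the logical volumes, which seems consistent with the "image file for partition rhel-root was not found" message. A small Python sketch that cross-checks the planned partitions against the ones the log reports as saved and as restorable is given below; the log lines are copied from this post and the variable names are illustrative.

```python
import re

# Sketch only: the relevant lines copied from clonezilla.log in this post.
LOG = """\
The data partition to be saved: md126p1 md126p2 md126p3
Finished saving /dev/md126p1 as /home/partimag/dd31-2023-02-02-11-img/md126p1.vfat-ptcl-img.zst
Finished saving /dev/md126p2 as /home/partimag/dd31-2023-02-02-11-img/md126p2.xfs-ptcl-img.zst
Parsing LVM layout for md126p1 md126p2 md126p3 ...
The image of this partition is restorable: md126p1
The image of this partition is restorable: md126p2
"""

planned, saved, restorable = set(), set(), set()

for line in LOG.splitlines():
    m = re.match(r"The data partition to be saved: (.+)", line)
    if m:
        planned.update(m.group(1).split())
        continue
    m = re.match(r"Finished saving /dev/(\S+) as ", line)
    if m:
        saved.add(m.group(1))
        continue
    m = re.match(r"The image of this partition is restorable: (\S+)", line)
    if m:
        restorable.add(m.group(1))

# Partitions that were planned but never reported as saved or restorable.
print(sorted(planned - saved), sorted(planned - restorable))
# prints: ['md126p3'] ['md126p3']
```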

Yes, backing up /dev/md126 is correct. Are you sure you had not mounted the swap partition on /dev/md126p3 before cloning? Alternatively, you can simply exclude that partition.

Yes, the backup was done automatically with the GUI.

But why is an mdadm device detected?

[root@server~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda3 rhel lvm2 a-- <221.00g 0

[root@server~]# vgs

VG #PV #LV #SN Attr VSize VFree

rhel 1 3 0 wz--n- <221.00g 0

[root@server~]# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home rhel -wi-ao---- <167.00g

root rhel -wi-ao---- 50.00g

swap rhel -wi-ao---- 4.00g

I feel that clonezilla will not be able to restore...

/dev/sda, etc, are physical devices.

/dev/md126p1, etc, are logical (think: virtual) drives/partitions made on top of the physical RAIDed ones.

The RAID controller translates between them.

When restoring, do so to the logical device.

Back up your data then test clone and restore.
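The physical/logical relationship described here is visible in the `blkid.list` posted earlier: each filesystem UUID is reported three times, once through the logical md126 partition and once through each physical member. A minimal Python sketch that groups the entries by UUID is shown below, using two of the three partitions copied from that listing; `group_by_uuid` is a hypothetical helper name.

```python
import re
from collections import defaultdict

# Sketch only: lines copied from blkid.list in this thread (p1 and p3 only).
BLKID_LIST = """\
/dev/loop0: TYPE="squashfs"
/dev/md126p1: UUID="A6BC-0C1C" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="7b05d612-3b8a-4bfa-8f20-ee59085c28e8"
/dev/md126p3: UUID="Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz" TYPE="LVM2_member" PARTUUID="6a056e3a-e2e7-47d9-81f3-4dae0d2f6054"
/dev/sda1: UUID="A6BC-0C1C" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="7b05d612-3b8a-4bfa-8f20-ee59085c28e8"
/dev/sda3: UUID="Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz" TYPE="LVM2_member" PARTUUID="6a056e3a-e2e7-47d9-81f3-4dae0d2f6054"
/dev/sdb1: UUID="A6BC-0C1C" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="7b05d612-3b8a-4bfa-8f20-ee59085c28e8"
/dev/sdb3: UUID="Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz" TYPE="LVM2_member" PARTUUID="6a056e3a-e2e7-47d9-81f3-4dae0d2f6054"
"""


def group_by_uuid(text):
    """Map each filesystem UUID to the device paths that expose it."""
    groups = defaultdict(list)
    for line in text.splitlines():
        dev = re.match(r"(/dev/\S+):", line)
        # The leading whitespace keeps PARTUUID="..." from matching.
        uuid = re.search(r'\sUUID="([^"]+)"', line)
        if dev and uuid:
            groups[uuid.group(1)].append(dev.group(1))
    return dict(groups)


groups = group_by_uuid(BLKID_LIST)
for uuid, devices in groups.items():
    print(uuid, devices)
# prints:
# A6BC-0C1C ['/dev/md126p1', '/dev/sda1', '/dev/sdb1']
# Qje8C1-wUm2-tjcu-oJeM-eInu-Xwyd-z5D4pz ['/dev/md126p3', '/dev/sda3', '/dev/sdb3']
```

This is the same duplication that the LVM warnings in the log point at ("prefers device /dev/md126p3"): three paths, one underlying filesystem or PV.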

But it is not an mdadm RAID0 metadevice... This is a megasr module RAID0.

00:11.5 RAID bus controller: Intel Corporation C620 Series Chipset Family SSATA Controller [RAID mode] (rev 09)

# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 222.6G 0 disk

├─sda1 8:1 0 600M 0 part /boot/efi

├─sda2 8:2 0 1G 0 part /boot

└─sda3 8:3 0 221G 0 part

├─rhel-root 253:0 0 50G 0 lvm /

├─rhel-swap 253:1 0 4G 0 lvm [SWAP]

└─rhel-home 253:2 0 167G 0 lvm /home

[ 1.585572] scsi host1: LSI MegaSR RAID5

[ 1.590800] scsi 0:2:0:0: Attached scsi generic sg0 type 0

[ 1.594419] sd 0:2:0:0: [sda] 466796544 512-byte logical blocks: (239 GB/223 GiB)

[ 1.594422] sd 0:2:0:0: [sda] 4096-byte physical blocks

[ 1.594427] sd 0:2:0:0: [sda] Write Protect is off

[ 1.594428] sd 0:2:0:0: [sda] Mode Sense: 17 00 00 00

[ 1.594438] sd 0:2:0:0: [sda] Write cache: disabled, read cache: disabled, doesn't support DPO or FUA

[ 1.596148] sda: sda1 sda2 sda3

[ 1.597329] sd 0:2:0:0: [sda] Attached SCSI disk
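The size figures scattered through this thread can be lined up with a little arithmetic. The Python sketch below converts the hex values from the megasr kernel messages to GiB, assuming they are counts of 512-byte sectors (the unit is stated for the logical drive, and assumed for the per-drive `capacity=` value).

```python
SECTOR_BYTES = 512  # assumption for capacity=; the logical drive size is given in sectors


def gib(sectors):
    """Convert a count of 512-byte sectors to GiB."""
    return sectors * SECTOR_BYTES / 2**30


physical = int("0x1bf244b0", 16)  # capacity= from the megasr[ahci] Device lines
logical = int("0x1bd2c000", 16)   # size of the logical drive from the megasr line

print(logical)
# prints: 466796544
print(round(gib(physical), 1), round(gib(logical), 1))
# prints: 223.6 222.6
```

The logical size equals the 466796544 blocks the kernel reports for sda under megasr, and 222.6G is also the size of md126 in the Clonezilla listing, while the raw disks there show 223.6G. So md126 in Clonezilla and sda under megasr appear to be the same logical drive. The logical drive is also about the size of one member disk, which is what a two-disk mirror would give; a two-disk stripe would be roughly twice that. This agrees with the "raid 1 logical drive" kernel message and `MD_LEVEL=raid1`, although the posts describe the array as RAID 0.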

I tried to check the image and it failed

The documentation: https://www.cisco.com/c/en/us/support/docs/servers-unified-computing/ucs-c-series-rack-servers/214860-installing-red-hat-using-the-embedded-sa.html?dtid=osscdc000283

A universal way to avoid this kind of problem could be to create a bootable Clonezilla ISO with the actual kernel and modules of the target hosts... Maybe with https://github.com/Tomas-M/linux-live

The clonezilla package comes with a command called "ocs-live-swap-kernel". On a Debian box, install the drbl and clonezilla packages, etc., by following https://drbl.org/installation/02-install-required-packages.php and then run: ocs-live-swap-kernel --help It will give some examples of how to swap the Linux kernel in a Clonezilla live zip file. It might be buggy, so if you encounter any issue, please let us know. Thanks.

Steven

Thanks, I put together a quick-and-dirty script to create a bootable disk with the current kernel and initramfs + ssh/dd.

It was a lot of fun...

https://github.com/defdefred/RescueMe

Unfortunately, grub2-mkrescue for EFI was not working for me...

OK, thanks for sharing that.

Steven