PyShortTextCategorization

PyShortTextCategorization copied to clipboard

PyShortTextCategorization copied to clipboard

Update transformers to 4.38.2

This PR updates transformers from 4.36.0 to 4.38.2.

Changelog

4.38.2

Fix backward compatibility issues with Llama and Gemma:

We mostly made sure that performances are not affected by the new change of paradigm with ROPE. Fixed the ROPE computation (should always be in float32) and the `causal_mask` dtype was set to bool to take less RAM.

YOLOS had a regression, and Llama / T5Tokenizer had a warning popping for random reasons

- FIX [Gemma] Fix bad rebase with transformers main (29170)

- Improve _update_causal_mask performance (29210)

- [T5 and Llama Tokenizer] remove warning (29346)

- [Llama ROPE] Fix torch export but also slow downs in forward (29198)

- RoPE loses precision for Llama / Gemma + Gemma logits.float() (29285)

- Patch YOLOS and others (29353)

- Use torch.bool instead of torch.int64 for non-persistant causal mask buffer (29241)

4.38.1

Fix eager attention in Gemma!

- [Gemma] Fix eager attention 29187 by sanchit-gandhi

TLDR:

diff

- attn_output = attn_output.reshape(bsz, q_len, self.hidden_size)

+ attn_output = attn_output.view(bsz, q_len, -1)

4.38.0

New model additions

💎 Gemma 💎

Gemma is a new opensource Language Model series from Google AI that comes with a 2B and 7B variant. The release comes with the pre-trained and instruction fine-tuned versions and you can use them via `AutoModelForCausalLM`, `GemmaForCausalLM` or `pipeline` interface!

Read more about it in the Gemma release blogpost: https://hf.co/blog/gemma

python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained("google/gemma-2b", device_map="auto", torch_dtype=torch.float16)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

You can use the model with Flash Attention, SDPA, Static cache and quantization API for further optimizations !

* Flash Attention 2

python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-2b", device_map="auto", torch_dtype=torch.float16, attn_implementation="flash_attention_2"

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

* bitsandbytes-4bit

python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-2b", device_map="auto", load_in_4bit=True

)

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

* Static Cache

python

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b")

model = AutoModelForCausalLM.from_pretrained(

"google/gemma-2b", device_map="auto"

)

model.generation_config.cache_implementation = "static"

input_text = "Write me a poem about Machine Learning."

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda")

outputs = model.generate(**input_ids)

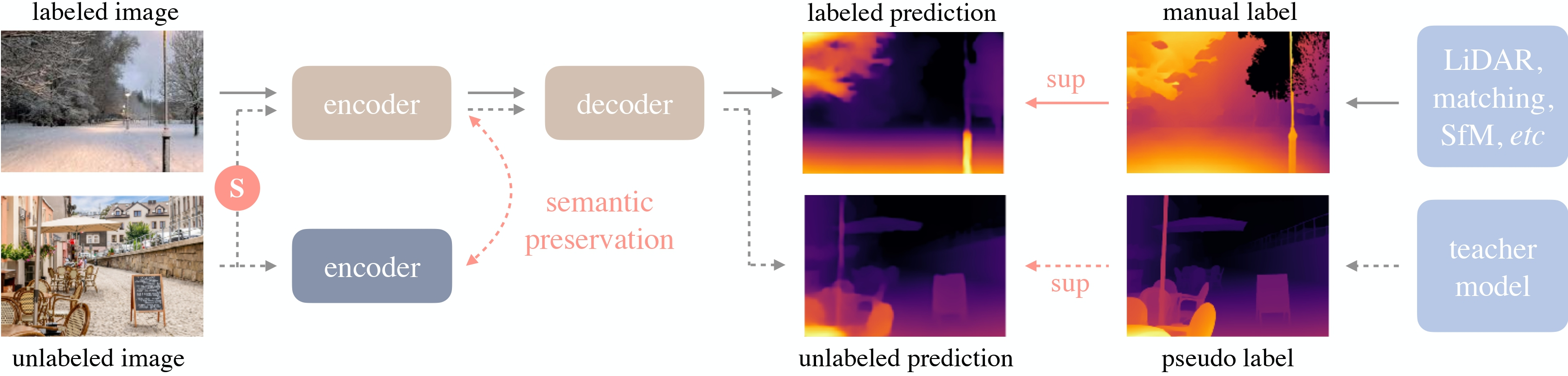

Depth Anything Model

The Depth Anything model was proposed in [Depth Anything: Unleashing the Power of Large-Scale Unlabeled Data](https://arxiv.org/abs/2401.10891) by Lihe Yang, Bingyi Kang, Zilong Huang, Xiaogang Xu, Jiashi Feng, Hengshuang Zhao. Depth Anything is based on the [DPT](https://huggingface.co/docs/transformers/v4.38.0/en/model_doc/dpt) architecture, trained on ~62 million images, obtaining state-of-the-art results for both relative and absolute depth estimation.

* Add Depth Anything by NielsRogge in 28654

Stable LM

StableLM 3B 4E1T was proposed in [StableLM 3B 4E1T: Technical Report](https://stability.wandb.io/stability-llm/stable-lm/reports/StableLM-3B-4E1T--VmlldzoyMjU4?accessToken=u3zujipenkx5g7rtcj9qojjgxpconyjktjkli2po09nffrffdhhchq045vp0wyfo) by Stability AI and is the first model in a series of multi-epoch pre-trained language models.

StableLM 3B 4E1T is a decoder-only base language model pre-trained on 1 trillion tokens of diverse English and code datasets for four epochs. The model architecture is transformer-based with partial Rotary Position Embeddings, SwiGLU activation, LayerNorm, etc.

The team also provides StableLM Zephyr 3B, an instruction fine-tuned version of the model that can be used for chat-based applications.

* Add `StableLM` by jon-tow in 28810

⚡️ Static cache was introduced in the following PRs ⚡️

Static past key value cache allows `LlamaForCausalLM`' s forward pass to be compiled using `torch.compile` !

This means that `(cuda) graphs` can be used for inference, which **speeds up the decoding step by 4x!**

A forward pass of Llama2 7B takes around `10.5` ms to run with this on an A100! Equivalent to TGI performances! ⚡️

* [`Core generation`] Adds support for static KV cache by ArthurZucker in 27931

* [`CLeanup`] Revert SDPA attention changes that got in the static kv cache PR by ArthurZucker in 29027

* Fix static generation when compiling! by ArthurZucker in 28937

* Static Cache: load models with MQA or GQA by gante in 28975

* Fix symbolic_trace with kv cache by fxmarty in 28724

⚠️ Support for `generate` is not included yet. This feature is experimental and subject to changes in subsequent releases.

py

from transformers import AutoTokenizer, AutoModelForCausalLM, StaticCache

import torch

import os

compilation triggers multiprocessing

os.environ["TOKENIZERS_PARALLELISM"] = "true"

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-hf")

model = AutoModelForCausalLM.from_pretrained(

"meta-llama/Llama-2-7b-hf",

device_map="auto",

torch_dtype=torch.float16

)

set up the static cache in advance of using the model

model._setup_cache(StaticCache, max_batch_size=1, max_cache_len=128)

trigger compilation!

compiled_model = torch.compile(model, mode="reduce-overhead", fullgraph=True)

run the model as usual

input_text = "A few facts about the universe: "

input_ids = tokenizer(input_text, return_tensors="pt").to("cuda").input_ids

model_outputs = compiled_model(input_ids)

Quantization

🧼 HF Quantizer 🧼

`HfQuantizer` makes it easy for quantization method researchers and developers to add inference and / or quantization support in 🤗 transformers. If you are interested in adding the support for new methods, please refer to this documentation page: https://huggingface.co/docs/transformers/main/en/hf_quantizer

* `HfQuantizer` class for quantization-related stuff in `modeling_utils.py` by poedator in 26610

* [`HfQuantizer`] Move it to "Developper guides" by younesbelkada in 28768

* [`HFQuantizer`] Remove `check_packages_compatibility` logic by younesbelkada in 28789

* [docs] HfQuantizer by stevhliu in 28820

⚡️AQLM ⚡️

AQLM is a new quantization method that enables no-performance degradation in 2-bit precision. Check out this demo about how to run Mixtral in 2-bit on a free-tier Google Colab instance: https://huggingface.co/posts/ybelkada/434200761252287

* AQLM quantizer support by BlackSamorez in 28928

* Removed obsolete attribute setting for AQLM quantization. by BlackSamorez in 29034

🧼 Moving canonical repositories 🧼

The canonical repositories on the hugging face hub (models that did not have an organization, like `bert-base-cased`), have been moved under organizations.

You can find the entire list of models moved here: https://huggingface.co/collections/julien-c/canonical-models-65ae66e29d5b422218567567

Redirection has been set up so that your code continues working even if you continue calling the previous paths. We, however, still encourage you to update your code to use the new links so that it is entirely future proof.

* canonical repos moves by julien-c in 28795

* Update all references to canonical models by LysandreJik in 29001

Flax Improvements 🚀

The Mistral model was added to the library in Flax.

* Flax mistral by kiansierra in 26943

TensorFlow Improvements 🚀

With Keras 3 becoming the standard version of Keras in TensorFlow 2.16, we've made some internal changes to maintain compatibility. We now have full compatibility with TF 2.16 as long as the `tf-keras` compatibility package is installed. We've also taken the opportunity to do some cleanup - in particular, the objects like `BatchEncoding` that are returned by our tokenizers and processors can now be directly passed to Keras methods like `model.fit()`, which should simplify a lot of code and eliminate a long-standing source of annoyances.

* Add tf_keras imports to prepare for Keras 3 by Rocketknight1 in 28588

* Wrap Keras methods to support BatchEncoding by Rocketknight1 in 28734

* Fix Keras scheduler import so it works for older versions of Keras by Rocketknight1 in 28895

Pre-Trained backbone weights 🚀

Enable loading in pretrained backbones in a new model, where all other weights are randomly initialized. Note: validation checks are still in place when creating a config. Passing in `use_pretrained_backbone` will raise an error. You can override by setting

`config.use_pretrained_backbone = True` after creating a config. However, it is not yet guaranteed to be fully backwards compatible.

py

from transformers import MaskFormerConfig, MaskFormerModel

config = MaskFormerConfig(

use_pretrained_backbone=False,

backbone="microsoft/resnet-18"

)

config.use_pretrained_backbone = True

Both models have resnet-18 backbone weights and all other weights randomly

initialized

model_1 = MaskFormerModel(config)

model_2 = MaskFormerModel(config)

* Enable instantiating model with pretrained backbone weights by amyeroberts in 28214

Introduce a helper function `load_backbone` to load a backbone from a backbone's model config e.g. `ResNetConfig`, or from a model config which contains backbone information. This enables cleaner modeling files and crossloading between timm and transformers backbones.

py

from transformers import ResNetConfig, MaskFormerConfig

from transformers.utils.backbone_utils import load_backbone

Resnet defines the backbone model to load

config = ResNetConfig()

backbone = load_backbone(config)

Maskformer config defines a model which uses a resnet backbone

config = MaskFormerConfig(use_timm_backbone=True, backbone="resnet18")

backbone = load_backbone(config)

config = MaskFormerConfig(backbone_config=ResNetConfig())

backbone = load_backbone(config)

* [`Backbone`] Use `load_backbone` instead of `AutoBackbone.from_config` by amyeroberts in 28661

* Backbone kwargs in config by amyeroberts in 28784

Add in API references, list supported backbones, updated examples, clarification and moving information to better reflect usage and docs

* [docs] Backbone by stevhliu in 28739

* Improve Backbone API docs by merveenoyan in 28666

Image Processor work 🚀

* Raise unused kwargs image processor by molbap in 29063

* Abstract image processor arg checks by molbap in 28843

Bugfixes and improvements 🚀

* Fix id2label assignment in run_classification.py by jheitmann in 28590

* Add missing key to TFLayoutLM signature by Rocketknight1 in 28640

* Avoid root logger's level being changed by ydshieh in 28638

* Add config tip to custom model docs by Rocketknight1 in 28601

* Fix lr_scheduler in no_trainer training scripts by bofenghuang in 27872

* [`Llava`] Update convert_llava_weights_to_hf.py script by isaac-vidas in 28617

* [`GPTNeoX`] Fix GPTNeoX + Flash Attention 2 issue by younesbelkada in 28645

* Update image_processing_deformable_detr.py by sounakdey in 28561

* [`SigLIP`] Only import tokenizer if sentencepiece available by amyeroberts in 28636

* Fix phi model doc checkpoint by amyeroberts in 28581

* get default device through `PartialState().default_device` as it has been officially released by statelesshz in 27256

* integrations: fix DVCLiveCallback model logging by dberenbaum in 28653

* Enable safetensors conversion from PyTorch to other frameworks without the torch requirement by LysandreJik in 27599

* `tensor_size` - fix copy/paste error msg typo by scruel in 28660

* Fix windows err with checkpoint race conditions by muellerzr in 28637

* add dataloader prefetch factor in training args and trainer by qmeeus in 28498

* Support single token decode for `CodeGenTokenizer` by cmathw in 28628

* Remove deprecated eager_serving fn by Rocketknight1 in 28665

* fix a hidden bug of `GenerationConfig`, now the `generation_config.json` can be loaded successfully by ParadoxZW in 28604

* Update README_es.md by vladydev3 in 28612

* Exclude the load balancing loss of padding tokens in Mixtral-8x7B by khaimt in 28517

* Use save_safetensor to disable safe serialization for XLA by jeffhataws in 28669

* Add back in generation types by amyeroberts in 28681

* [docs] DeepSpeed by stevhliu in 28542

* Improved type hinting for all attention parameters by nakranivaibhav in 28479

* improve efficient training on CPU documentation by faaany in 28646

* [docs] Fix doc format by stevhliu in 28684

* [`chore`] Add missing space in warning by tomaarsen in 28695

* Update question_answering.md by yusyel in 28694

* [`Vilt`] align input and model dtype in the ViltPatchEmbeddings forward pass by faaany in 28633

* [`docs`] Improve visualization for vertical parallelism by petergtz in 28583

* Don't fail when `LocalEntryNotFoundError` during `processor_config.json` loading by ydshieh in 28709

* Fix duplicate & unnecessary flash attention warnings by fxmarty in 28557

* support PeftMixedModel signature inspect by Facico in 28321

* fix: corrected misleading log message in save_pretrained function by mturetskii in 28699

* [`docs`] Update preprocessing.md by velaia in 28719

* Initialize _tqdm_active with hf_hub_utils.are_progress_bars_disabled(… by ShukantPal in 28717

* Fix `weights_only` by ydshieh in 28725

* Stop confusing the TF compiler with ModelOutput objects by Rocketknight1 in 28712

* fix: suppress `GatedRepoError` to use cache file (fix 28558). by scruel in 28566

* Unpin pydantic by ydshieh in 28728

* [docs] Fix datasets in guides by stevhliu in 28715

* [Flax] Update no init test for Flax v0.7.1 by sanchit-gandhi in 28735

* Falcon: removed unused function by gante in 28605

* Generate: deprecate old src imports by gante in 28607

* [`Siglip`] protect from imports if sentencepiece not installed by amyeroberts in 28737

* Add serialization logic to pytree types by angelayi in 27871

* Fix `DepthEstimationPipeline`'s docstring by ydshieh in 28733

* Fix input data file extension in examples by khipp in 28741

* [Docs] Fix Typo in English & Japanese CLIP Model Documentation (TMBD -> TMDB) by Vinyzu in 28751

* PatchtTST and PatchTSMixer fixes by wgifford in 28083

* Enable Gradient Checkpointing in Deformable DETR by FoamoftheSea in 28686

* small doc update for CamemBERT by julien-c in 28644

* Pin pytest version <8.0.0 by amyeroberts in 28758

* Mark test_constrained_beam_search_generate as flaky by amyeroberts in 28757

* Fix typo of `Block`. by xkszltl in 28727

* [Whisper] Make tokenizer normalization public by sanchit-gandhi in 28136

* Support saving only PEFT adapter in checkpoints when using PEFT + FSDP by AjayP13 in 28297

* Add French translation: french README.md by ThibaultLengagne in 28696

* Don't allow passing `load_in_8bit` and `load_in_4bit` at the same time by osanseviero in 28266

* Move CLIP _no_split_modules to CLIPPreTrainedModel by lz1oceani in 27841

* Use Conv1d for TDNN by gau-nernst in 25728

* Fix transformers.utils.fx compatibility with torch<2.0 by fxmarty in 28774

* Further pin pytest version (in a temporary way) by ydshieh in 28780

* Task-specific pipeline init args by amyeroberts in 28439

* Pin Torch to <2.2.0 by Rocketknight1 in 28785

* [`bnb`] Fix bnb slow tests by younesbelkada in 28788

* Prevent MLflow exception from disrupting training by codiceSpaghetti in 28779

* don't initialize the output embeddings if we're going to tie them to input embeddings by tom-p-reichel in 28192

* [Whisper] Refactor forced_decoder_ids & prompt ids by patrickvonplaten in 28687

* Resolve DeepSpeed cannot resume training with PeftModel by lh0x00 in 28746

* Wrap Keras methods to support BatchEncoding by Rocketknight1 in 28734

* DeepSpeed: hardcode `torch.arange` dtype on `float` usage to avoid incorrect initialization by gante in 28760

* Add artifact name in job step to maintain job / artifact correspondence by ydshieh in 28682

* Split daily CI using 2 level matrix by ydshieh in 28773

* [docs] Correct the statement in the docstirng of compute_transition_scores in generation/utils.py by Ki-Seki in 28786

* Adding [T5/MT5/UMT5]ForTokenClassification by hackyon in 28443

* Make `is_torch_bf16_available_on_device` more strict by ydshieh in 28796

* Add tip on setting tokenizer attributes by Rocketknight1 in 28764

* enable graident checkpointing in DetaObjectDetection and add tests in Swin/Donut_Swin by SangbumChoi in 28615

* [docs] fix some bugs about parameter description by zspo in 28806

* Add models from deit by rajveer43 in 28302

* [Docs] Fix spelling and grammar mistakes by khipp in 28825

* Explicitly check if token ID's are None in TFBertTokenizer constructor by skumar951 in 28824

* Add missing None check for hf_quantizer by jganitkevitch in 28804

* Fix issues caused by natten by ydshieh in 28834

* fix / skip (for now) some tests before switch to torch 2.2 by ydshieh in 28838

* Use `-v` for `pytest` on CircleCI by ydshieh in 28840

* Reduce GPU memory usage when using FSDP+PEFT by pacman100 in 28830

* Mark `test_encoder_decoder_model_generate` for `vision_encoder_deocder` as flaky by amyeroberts in 28842

* Support custom scheduler in deepspeed training by VeryLazyBoy in 26831

* [Docs] Fix bad doc: replace save with logging by chenzizhao in 28855

* Ability to override clean_code_for_run by w4ffl35 in 28783

* [WIP] Hard error when ignoring tensors. by Narsil in 27484

* [`Doc`] update contribution guidelines by ArthurZucker in 28858

* Correct wav2vec2-bert inputs_to_logits_ratio by ylacombe in 28821

* Image Feature Extraction pipeline by amyeroberts in 28216

* ClearMLCallback enhancements: support multiple runs and handle logging better by eugen-ajechiloae-clearml in 28559

* Do not use mtime for checkpoint rotation. by xkszltl in 28862

* Adds LlamaForQuestionAnswering class in modeling_llama.py along with AutoModel Support by nakranivaibhav in 28777

* [Docs] Update project names and links in awesome-transformers by khipp in 28878

* Fix LongT5ForConditionalGeneration initialization of lm_head by eranhirs in 28873

* Raise error when using `save_only_model` with `load_best_model_at_end` for DeepSpeed/FSDP by pacman100 in 28866

* Fix `FastSpeech2ConformerModelTest` and skip it on CPU by ydshieh in 28888

* Revert "[WIP] Hard error when ignoring tensors." by ydshieh in 28898

* unpin torch by ydshieh in 28892

* Explicit server error on gated model by Wauplin in 28894

* [Docs] Fix backticks in inline code and documentation links by khipp in 28875

* Hotfix - make `torchaudio` get the correct version in `torch_and_flax_job` by ydshieh in 28899

* [Docs] Add missing language options and fix broken links by khipp in 28852

* fix: Fixed the documentation for `logging_first_step` by removing "evaluate" by Sai-Suraj-27 in 28884

* fix Starcoder FA2 implementation by pacman100 in 28891

* Fix Keras scheduler import so it works for older versions of Keras by Rocketknight1 in 28895

* ⚠️ Raise `Exception` when trying to generate 0 tokens ⚠️ by danielkorat in 28621

* Update the cache number by ydshieh in 28905

* Add npu device for pipeline by statelesshz in 28885

* [Docs] Fix placement of tilde character by khipp in 28913

* [Docs] Revert translation of 'slow' decorator by khipp in 28912

* Fix utf-8 yaml load for marian conversion to pytorch in Windows by SystemPanic in 28618

* Remove dead TF loading code by Rocketknight1 in 28926

* fix: torch.int32 instead of torch.torch.int32 by vodkaslime in 28883

* pass kwargs in stopping criteria list by zucchini-nlp in 28927

* Support batched input for decoder start ids by zucchini-nlp in 28887

* [Docs] Fix broken links and syntax issues by khipp in 28918

* Fix max_position_embeddings default value for llama2 to 4096 28241 by karl-hajjar in 28754

* Fix a wrong link to CONTRIBUTING.md section in PR template by B-Step62 in 28941

* Fix type annotations on neftune_noise_alpha and fsdp_config TrainingArguments parameters by peblair in 28942

* [i18n-de] Translate README.md to German by khipp in 28933

* [Nougat] Fix pipeline by NielsRogge in 28242

* [Docs] Update README and default pipelines by NielsRogge in 28864

* Convert `torch_dtype` as `str` to actual torch data type (i.e. "float16" …to `torch.float16`) by KossaiSbai in 28208

* [`pipelines`] updated docstring with vqa alias by cmahmut in 28951

* Tests: tag `test_save_load_fast_init_from_base` as flaky by gante in 28930

* Updated requirements for image-classification samples: datasets>=2.14.0 by alekseyfa in 28974

* Always initialize tied output_embeddings if it has a bias term by hackyon in 28947

* Clean up staging tmp checkpoint directory by woshiyyya in 28848

* [Docs] Add language identifiers to fenced code blocks by khipp in 28955

* [Docs] Add video section by NielsRogge in 28958

* [i18n-de] Translate CONTRIBUTING.md to German by khipp in 28954

* [`NllbTokenizer`] refactor with added tokens decoder by ArthurZucker in 27717

* Add sudachi_projection option to BertJapaneseTokenizer by hiroshi-matsuda-rit in 28503

* Update configuration_llama.py: fixed broken link by AdityaKane2001 in 28946

* [`DETR`] Update the processing to adapt masks & bboxes to reflect padding by amyeroberts in 28363

* ENH: Do not pass warning message in case `quantization_config` is in config but not passed as an arg by younesbelkada in 28988

* ENH [`AutoQuantizer`]: enhance trainer + not supported quant methods by younesbelkada in 28991

* Add SiglipForImageClassification and CLIPForImageClassification by NielsRogge in 28952

* [`Doc`] Fix docbuilder - make `BackboneMixin` and `BackboneConfigMixin` importable from `utils`. by amyeroberts in 29002

* Set the dataset format used by `test_trainer` to float32 by statelesshz in 28920

* Introduce AcceleratorConfig dataclass by muellerzr in 28664

* Fix flaky test vision encoder-decoder generate by zucchini-nlp in 28923

* Mask Generation Task Guide by merveenoyan in 28897

* Add tie_weights() to LM heads and set bias in set_output_embeddings() by hackyon in 28948

* [TPU] Support PyTorch/XLA FSDP via SPMD by alanwaketan in 28949

* FIX [`Trainer` / tags]: Fix trainer + tags when users do not pass `"tags"` to `trainer.push_to_hub()` by younesbelkada in 29009

* Add cuda_custom_kernel in DETA by SangbumChoi in 28989

* DeformableDetrModel support fp16 by DonggeunYu in 29013

* Fix copies between DETR and DETA by amyeroberts in 29037

* FIX: Fix error with `logger.warning` + inline with recent refactor by younesbelkada in 29039

* Patch to skip failing `test_save_load_low_cpu_mem_usage` tests by amyeroberts in 29043

* Fix a tiny typo in `generation/utils.py::GenerateEncoderDecoderOutput`'s docstring by sadra-barikbin in 29044

* add test marker to run all tests with require_bitsandbytes by Titus-von-Koeller in 28278

* Update important model list by LysandreJik in 29019

* Fix max_length criteria when using inputs_embeds by zucchini-nlp in 28994

* Support : Leverage Accelerate for object detection/segmentation models by Tanmaypatil123 in 28312

* fix num_assistant_tokens with heuristic schedule by jmamou in 28759

* fix failing trainer ds tests by pacman100 in 29057

* `auto_find_batch_size` isn't yet supported with DeepSpeed/FSDP. Raise error accrodingly. by pacman100 in 29058

* Honor trust_remote_code for custom tokenizers by rl337 in 28854

* Feature: Option to set the tracking URI for MLflowCallback. by seanswyi in 29032

* Fix trainer test wrt DeepSpeed + auto_find_bs by muellerzr in 29061

* Add chat support to text generation pipeline by Rocketknight1 in 28945

* [Docs] Spanish translation of task_summary.md by aaronjimv in 28844

* [`Awq`] Add peft support for AWQ by younesbelkada in 28987

* FIX [`bnb` / `tests`]: Fix currently failing bnb tests by younesbelkada in 29092

* fix the post-processing link by davies-w in 29091

* Fix the `bert-base-cased` tokenizer configuration test by LysandreJik in 29105

* Fix a typo in `examples/pytorch/text-classification/run_classification.py` by Ja1Zhou in 29072

* change version by ArthurZucker in 29097

* [Docs] Add resources by NielsRogge in 28705

* ENH: added new output_logits option to generate function by mbaak in 28667

* Bnb test fix for different hardwares by Titus-von-Koeller in 29066

* Fix two tiny typos in `pipelines/base.py::Pipeline::_sanitize_parameters()`'s docstring by sadra-barikbin in 29102

* storing & logging gradient norm in trainer by shijie-wu in 27326

* Fixed nll with label_smoothing to just nll by nileshkokane01 in 28708

* [`gradient_checkpointing`] default to use it for torch 2.3 by ArthurZucker in 28538

* Move misplaced line by kno10 in 29117

* FEAT [`Trainer` / `bnb`]: Add RMSProp from `bitsandbytes` to HF `Trainer` by younesbelkada in 29082

* Abstract image processor arg checks. by molbap in 28843

* FIX [`bnb` / `tests`] Propagate the changes from 29092 to 4-bit tests by younesbelkada in 29122

* Llama: fix batched generation by gante in 29109

* Generate: unset GenerationConfig parameters do not raise warning by gante in 29119

* [`cuda kernels`] only compile them when initializing by ArthurZucker in 29133

* FIX [`PEFT` / `Trainer` ] Handle better peft + quantized compiled models by younesbelkada in 29055

* [`Core tokenization`] `add_dummy_prefix_space` option to help with latest issues by ArthurZucker in 28010

* Revert low cpu mem tie weights by amyeroberts in 29135

* Add support for fine-tuning CLIP-like models using contrastive-image-text example by tjs-intel in 29070

* Save (circleci) cache at the end of a job by ydshieh in 29141

* [Phi] Add support for sdpa by hackyon in 29108

* Generate: missing generation config eos token setting in encoder-decoder tests by gante in 29146

* Added image_captioning version in es and included in toctree file by gisturiz in 29104

* Fix drop path being ignored in DINOv2 by fepegar in 29147

* [`pipeline`] Add pool option to image feature extraction pipeline by amyeroberts in 28985

Significant community contributions

The following contributors have made significant changes to the library over the last release:

* nakranivaibhav

* Improved type hinting for all attention parameters (28479)

* Adds LlamaForQuestionAnswering class in modeling_llama.py along with AutoModel Support (28777)

* khipp

* Fix input data file extension in examples (28741)

* [Docs] Fix spelling and grammar mistakes (28825)

* [Docs] Update project names and links in awesome-transformers (28878)

* [Docs] Fix backticks in inline code and documentation links (28875)

* [Docs] Add missing language options and fix broken links (28852)

* [Docs] Fix placement of tilde character (28913)

* [Docs] Revert translation of 'slow' decorator (28912)

* [Docs] Fix broken links and syntax issues (28918)

* [i18n-de] Translate README.md to German (28933)

* [Docs] Add language identifiers to fenced code blocks (28955)

* [i18n-de] Translate CONTRIBUTING.md to German (28954)

* ThibaultLengagne

* Add French translation: french README.md (28696)

* poedator

* `HfQuantizer` class for quantization-related stuff in `modeling_utils.py` (26610)

* kiansierra

* Flax mistral (26943)

* hackyon

* Adding [T5/MT5/UMT5]ForTokenClassification (28443)

* Always initialize tied output_embeddings if it has a bias term (28947)

* Add tie_weights() to LM heads and set bias in set_output_embeddings() (28948)

* [Phi] Add support for sdpa (29108)

* SangbumChoi

* enable graident checkpointing in DetaObjectDetection and add tests in Swin/Donut_Swin (28615)

* Add cuda_custom_kernel in DETA (28989)

* rajveer43

* Add models from deit (28302)

* jon-tow

* Add `StableLM` (28810)

4.37.2

Selection of fixes

* Protecting the imports for SigLIP's tokenizer if sentencepiece isn't installed

* Fix permissions issue on windows machines when using trainer in multi-node setup

* Allow disabling safe serialization when using Trainer. Needed for Neuron SDK

* Fix error when loading processor from cache

* torch < 1.13 compatible `torch.load`

Commits

* [Siglip] protect from imports if sentencepiece not installed (28737)

* Fix weights_only (28725)

* Enable safetensors conversion from PyTorch to other frameworks without the torch requirement (27599)

* Don't fail when LocalEntryNotFoundError during processor_config.json loading (28709)

* Use save_safetensor to disable safe serialization for XLA (28669)

* Fix windows err with checkpoint race conditions (28637)

* [SigLIP] Only import tokenizer if sentencepiece available (28636)

4.37.1

A patch release to resolve import errors from removed custom types in generation utils

* Add back in generation types 28681

4.37.0

Model releases

Qwen2

Qwen2 is the new model series of large language models from the Qwen team. Previously, the Qwen series was released, including Qwen-72B, Qwen-1.8B, Qwen-VL, Qwen-Audio, etc.

Qwen2 is a language model series including decoder language models of different model sizes. For each size, we release the base language model and the aligned chat model. It is based on the Transformer architecture with SwiGLU activation, attention QKV bias, group query attention, mixture of sliding window attention and full attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and codes.

* Add qwen2 by JustinLin610 in 28436

Phi-2

Phi-2 is a transformer language model trained by Microsoft with exceptionally strong performance for its small size of 2.7 billion parameters. It was previously available as a custom code model, but has now been fully integrated into transformers.

* [Phi2] Add support for phi2 models by susnato in 28211

* [Phi] Extend implementation to use GQA/MQA. by gugarosa in 28163

* update docs to add the `phi-2` example by susnato in 28392

* Fixes default value of `softmax_scale` in `PhiFlashAttention2`. by gugarosa in 28537

SigLIP

The SigLIP model was proposed in Sigmoid Loss for Language Image Pre-Training by Xiaohua Zhai, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer. SigLIP proposes to replace the loss function used in CLIP by a simple pairwise sigmoid loss. This results in better performance in terms of zero-shot classification accuracy on ImageNet.

* Add SigLIP by NielsRogge in 26522

* [SigLIP] Don't pad by default by NielsRogge in 28578

ViP-LLaVA

The VipLlava model was proposed in Making Large Multimodal Models Understand Arbitrary Visual Prompts by Mu Cai, Haotian Liu, Siva Karthik Mustikovela, Gregory P. Meyer, Yuning Chai, Dennis Park, Yong Jae Lee.

VipLlava enhances the training protocol of Llava by marking images and interact with the model using natural cues like a “red bounding box” or “pointed arrow” during training.

* Adds VIP-llava to transformers by younesbelkada in 27932

* Fix Vip-llava docs by younesbelkada in 28085

FastSpeech2Conformer

The FastSpeech2Conformer model was proposed with the paper Recent Developments On Espnet Toolkit Boosted By Conformer by Pengcheng Guo, Florian Boyer, Xuankai Chang, Tomoki Hayashi, Yosuke Higuchi, Hirofumi Inaguma, Naoyuki Kamo, Chenda Li, Daniel Garcia-Romero, Jiatong Shi, Jing Shi, Shinji Watanabe, Kun Wei, Wangyou Zhang, and Yuekai Zhang.

FastSpeech 2 is a non-autoregressive model for text-to-speech (TTS) synthesis, which develops upon FastSpeech, showing improvements in training speed, inference speed and voice quality. It consists of a variance adapter; duration, energy and pitch predictor and waveform and mel-spectrogram decoder.

* Add FastSpeech2Conformer by connor-henderson in 23439

Wav2Vec2-BERT

The Wav2Vec2-BERT model was proposed in Seamless: Multilingual Expressive and Streaming Speech Translation by the Seamless Communication team from Meta AI.

This model was pre-trained on 4.5M hours of unlabeled audio data covering more than 143 languages. It requires finetuning to be used for downstream tasks such as Automatic Speech Recognition (ASR), or Audio Classification.

* Add new meta w2v2-conformer BERT-like model by ylacombe in 28165

* Add w2v2bert to pipeline by ylacombe in 28585

4-bit serialization

Enables saving and loading transformers models in 4bit formats - you can now push bitsandbytes 4-bit weights on Hugging Face Hub. To save 4-bit models and push them on the hub, simply install the latest `bitsandbytes` package from pypi `pip install -U bitsandbytes`, load your model in 4-bit precision and call `save_pretrained` / `push_to_hub`. An example repo [here](https://huggingface.co/ybelkada/Mixtral-8x7B-Instruct-v0.1-bnb-4bit)

python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "facebook/opt-125m"

model = AutoModelForCausalLM.from_pretrained(model_id, load_in_4bit=True)

model.push_to_hub("ybelkada/opt-125m-bnb-4bit")

* [bnb] Let's make serialization of 4bit models possible by poedator in 26037

* [`Docs`] Add 4-bit serialization docs by younesbelkada in 28182

4D Attention mask

Enable passing in 4D attention masks to models that support it. This is useful for reducing memory footprint of certain generation tasks.

* 4D `attention_mask` support by poedator in 27539

Improved quantization support

Ability to customise which modules are quantized and which are not.

* [`Awq`] Enable the possibility to skip quantization for some target modules by younesbelkada in 27950

* add `modules_in_block_to_quantize` arg in GPTQconfig by SunMarc in 27956

Added fused modules support

* [docs] Fused AWQ modules by stevhliu in 27896

* [`Awq`] Add llava fused modules support by younesbelkada in 28239

* [`Mixtral` / `Awq`] Add mixtral fused modules for Awq by younesbelkada in 28240

SDPA Support for LLaVa, Mixtral, Mistral

* Fix SDPA correctness following torch==2.1.2 regression by fxmarty in 27973

* [`Llava` / `Vip-Llava`] Add SDPA into llava by younesbelkada in 28107

* [`Mixtral` & `Mistral`] Add support for sdpa by ArthurZucker in 28133

* [SDPA] Make sure attn mask creation is always done on CPU by patrickvonplaten in 28400

* Fix SDPA tests by fxmarty in 28552

Whisper: Batched state-of-the-art long-form transcription

All decoding strategies (temperature fallback, compression/log-prob/no-speech threshold, ...) of OpenAI's long-form transcription (see: https://github.com/openai/whisper or section 4.5 in paper) have been added. Contrary to https://github.com/openai/whisper, Transformers long-form transcription is fully compatible with pure FP16 and Batching!

For more information see: https://github.com/huggingface/transformers/pull/27658.

* [Whisper] Finalize batched SOTA long-form generation by patrickvonplaten in 27658

Generation: assisted generation upgrades, speculative decoding, and ngram speculation

[Assisted generation](https://huggingface.co/blog/assisted-generation) was reworked to accept arbitrary sources of candidate sequences. This enabled us to smoothly integrate [ngram speculation](https://twitter.com/joao_gante/status/1747322413006643259), and opens the door for new candidate generation methods. Additionally, we've added the [speculative decoding](https://arxiv.org/abs/2211.17192) strategy on top of assisted generation: when you call assisted generation with an assistant model and `do_sample=True`, you'll benefit from the faster speculative decoding sampling 🏎️💨

* Generate: `assisted_decoding` now accepts arbitrary candidate generators by gante in 27751

* Generate: assisted decoding now uses `generate` for the assistant by gante in 28031

* Generate: speculative decoding by gante in 27979

* Generate: fix speculative decoding by gante in 28166

* Adding Prompt lookup decoding by apoorvumang in 27775

* Fix _speculative_sampling implementation by ofirzaf in 28508

torch.load pickle protection

Adding pickle protection via weights_only=True in the torch.load calls.

* make torch.load a bit safer by julien-c in 27282

Build methods for TensorFlow Models

Unlike PyTorch, TensorFlow models build their weights "lazily" after model initialization, using the shape of their inputs to figure out what their weight shapes should be. We previously needed a full forward pass through TF models to ensure that all layers received an input they could use to build their weights, but with this change we now have proper `build()` methods that can correctly infer shapes and build model weights. This avoids a whole range of potential issues, as well as significantly accelerating model load times.

* Proper build() methods for TF by Rocketknight1 in 27794

* Replace build() with build_in_name_scope() for some TF tests by Rocketknight1 in 28046

* More TF fixes by Rocketknight1 in 28081

* Even more TF test fixes by Rocketknight1 in 28146

4.36.2

Patch release to resolve some critical issues relating to the recent cache refactor, flash attention refactor and training in the multi-gpu and multi-node settings:

* Resolve training bug with PEFT + GC 28031

* Resolve cache issue when going beyond context window for Mistral/Mixtral FA2 28037

* Re-enable passing `config` to `from_pretrained` with FA 28043

* Fix resuming from checkpoint when using FDSP with FULL_STATE_DICT 27891

* Resolve bug when saving a checkpoint in the multi-node setting 28078

4.36.1

A patch release for critical torch issues mostly:

- Fix SDPA correctness following torch==2.1.2 regression 27973

- [Tokenizer Serialization] Fix the broken serialisation 27099

- Fix bug with rotating checkpoints 28009

- Hot-fix-mixstral-loss ([27948](https://github.com/huggingface/transformers/pull/27948))

🔥

Links

- PyPI: https://pypi.org/project/transformers

- Changelog: https://data.safetycli.com/changelogs/transformers/

- Repo: https://github.com/huggingface/transformers