spring-cloud-sleuth

spring-cloud-sleuth copied to clipboard

spring-cloud-sleuth copied to clipboard

SpanId is generated twice when kafka message is produced and received

Hello, Met a strange behaviour when kafka message is sent or received. Could you help to undestand what is wrong in my example, please? For example we have a tracing flow like this: I make http request to my controller -> then I send message to kafka -> I consume this message in the same (or other, it doesn't matter I think) service. First I should clarify: there should be generated only 2 spanId - first when http request is started and second when kafka message is consumed, right?

If it's true, then let me describe how I'm getting 3 or 4 spanIds instead of 2 with my minimal sample.

Sample Minimal sample: https://github.com/kzkvv/spring-sleuth-span-test

Dependencies versions: org.springframework.cloud:spring-cloud-starter-sleuth:3.1.1 org.springframework.kafka:spring-kafka:2.8.4 org.springframework.boot:spring-boot-starter-web:2.6.6 org.springframework.boot:spring-boot-starter-test:2.6.6 org.springframework.kafka:spring-kafka-test:2.8.4

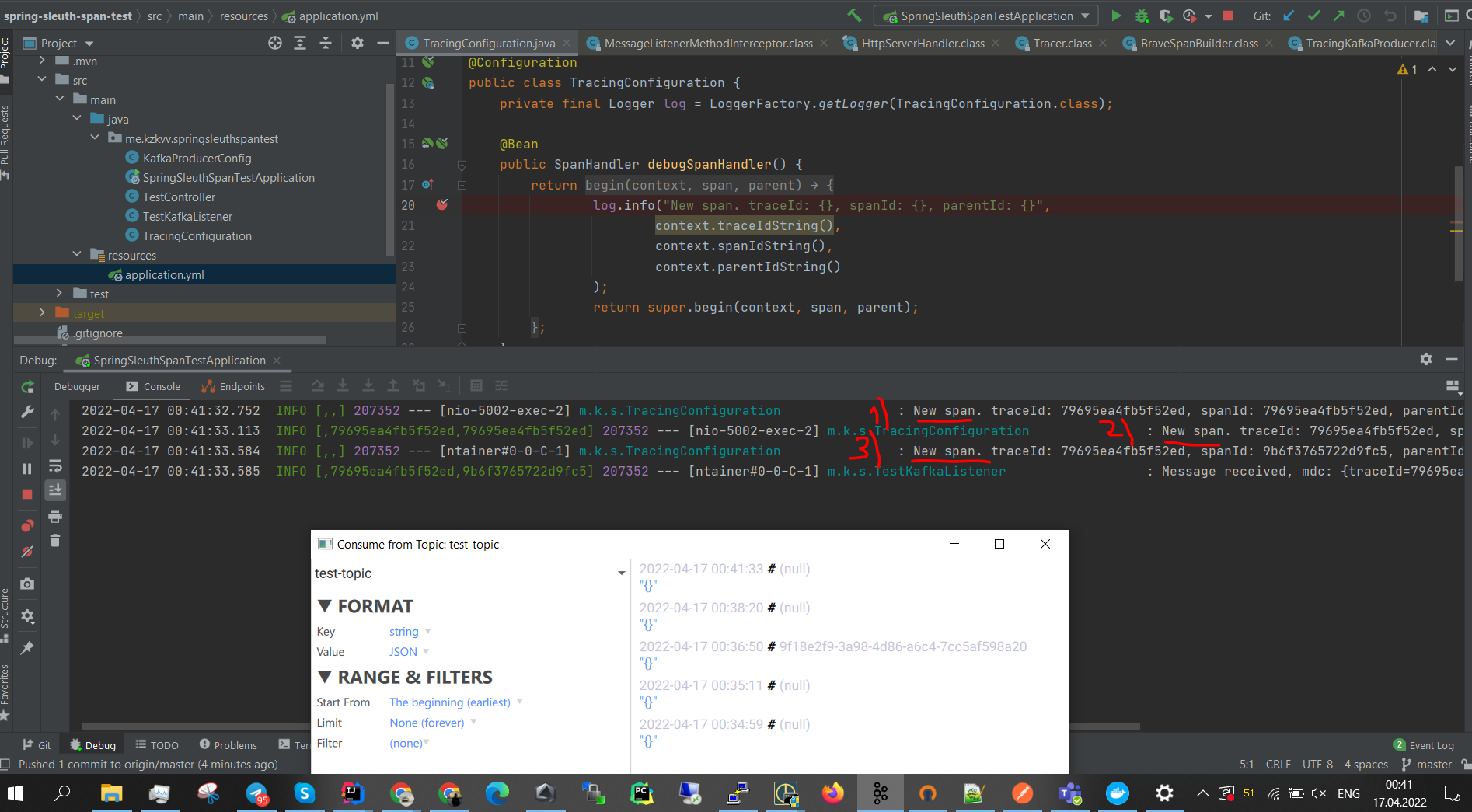

I added a SpanHandler to log when each new span is generated. Let's check each case:

- Do not change nothing in configs, just start the example and make a GET request to this URL: /test/1 This endpoint use Producer bean to send kafka messages, as described in spring sleuth integration docs Result: in this case all looks as expected, we have 2 new spans, it's ok. ParentId=null looks strange in second log record, but it's not important in my question I think.

2022-04-12 10:21:37.512 INFO [,,] 35864 --- [nio-5002-exec-5] m.k.s.TracingConfiguration : New span. traceId: ff8fe4ed89817eef, spanId: ff8fe4ed89817eef, parentId: null

2022-04-12 10:21:38.571 INFO [,,] 35864 --- [ntainer#0-0-C-1] m.k.s.TracingConfiguration : New span. traceId: d2278aee4bec9395, spanId: d2278aee4bec9395, parentId: null

2022-04-12 10:21:38.571 INFO [,d2278aee4bec9395,d2278aee4bec9395] 35864 --- [ntainer#0-0-C-1] m.k.s.TestKafkaListener : Message received, mdc: {traceId=d2278aee4bec9395, spanId=d2278aee4bec9395}

- Complete the same steps without any changes using another endpoint: GET /test/2 - this endpoint use KafkaTemplate to send kafka message Result: a new span is generated 3 times instead of 2

2022-04-12 10:42:08.930 INFO [,,] 33720 --- [nio-5002-exec-4] m.k.s.TracingConfiguration : New span. traceId: f36d5b4acb5949d1, spanId: f36d5b4acb5949d1, parentId: null

2022-04-12 10:42:09.500 INFO [,f36d5b4acb5949d1,f36d5b4acb5949d1] 33720 --- [nio-5002-exec-4] m.k.s.TracingConfiguration : New span. traceId: f36d5b4acb5949d1, spanId: e98ea3ba5b0c9575, parentId: f36d5b4acb5949d1

2022-04-12 10:42:10.267 INFO [,,] 33720 --- [ntainer#0-0-C-1] m.k.s.TracingConfiguration : New span. traceId: f36d5b4acb5949d1, spanId: 53c0abed1b94840a, parentId: e98ea3ba5b0c9575

2022-04-12 10:42:10.267 INFO [,f36d5b4acb5949d1,53c0abed1b94840a] 33720 --- [ntainer#0-0-C-1] m.k.s.TestKafkaListener : Message received, mdc: {traceId=f36d5b4acb5949d1, spanId=53c0abed1b94840a}

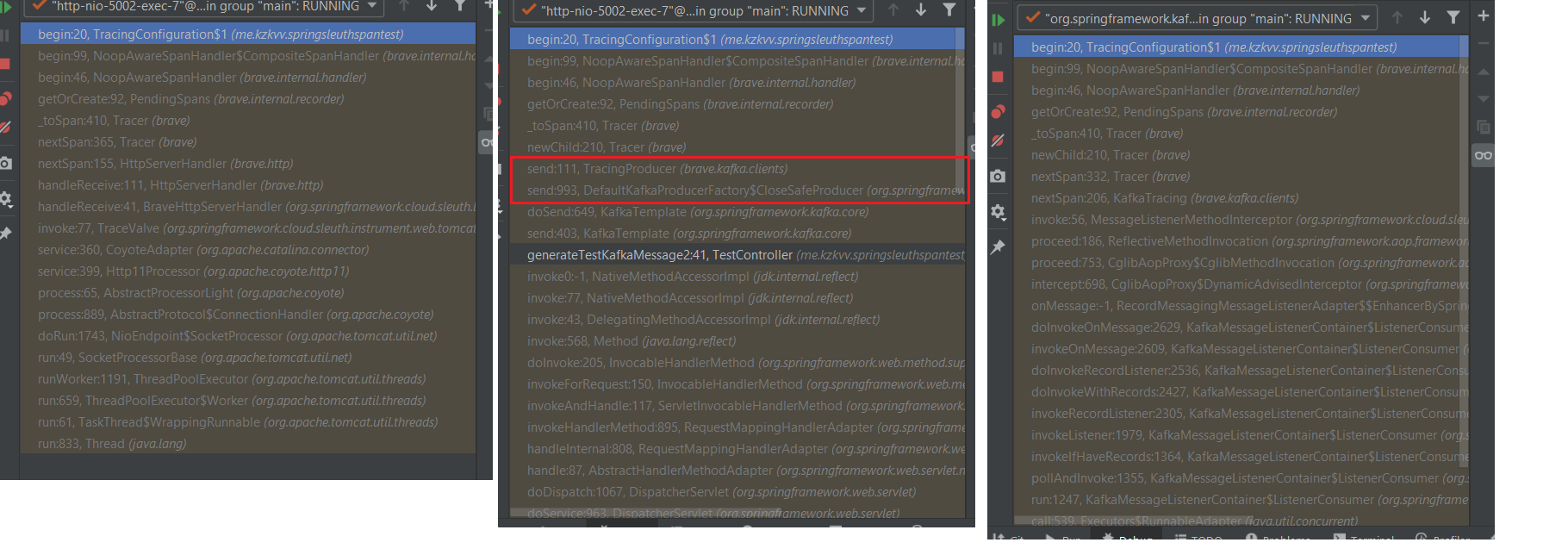

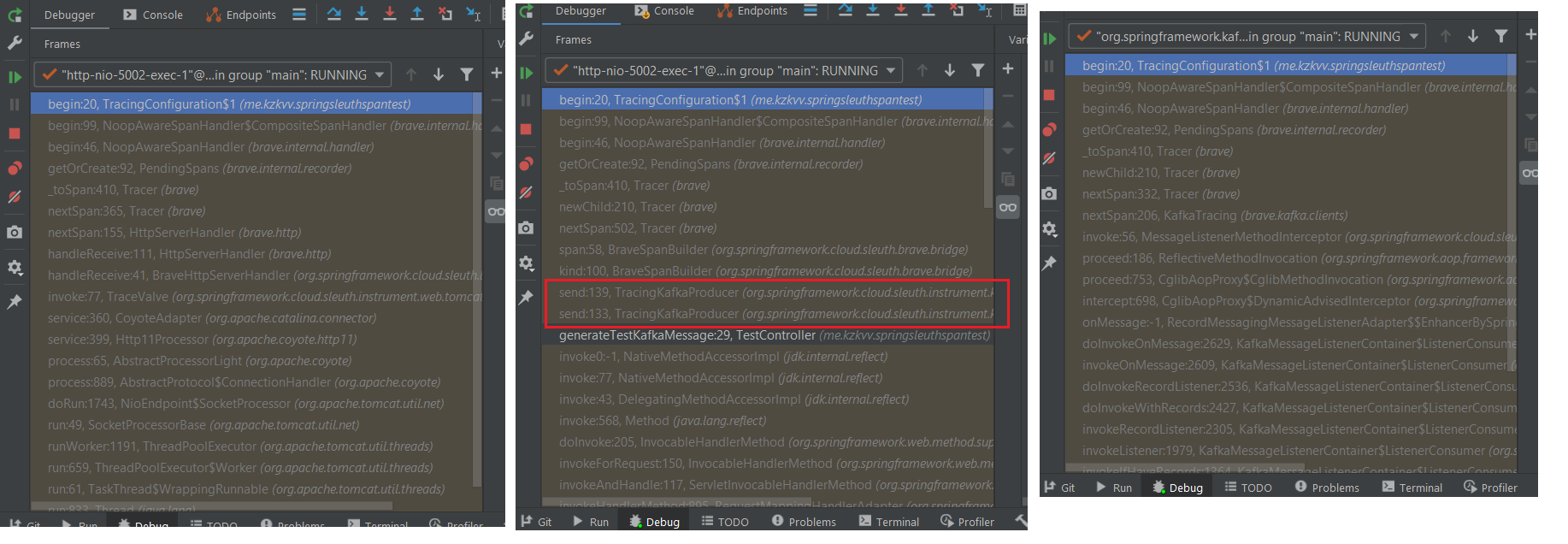

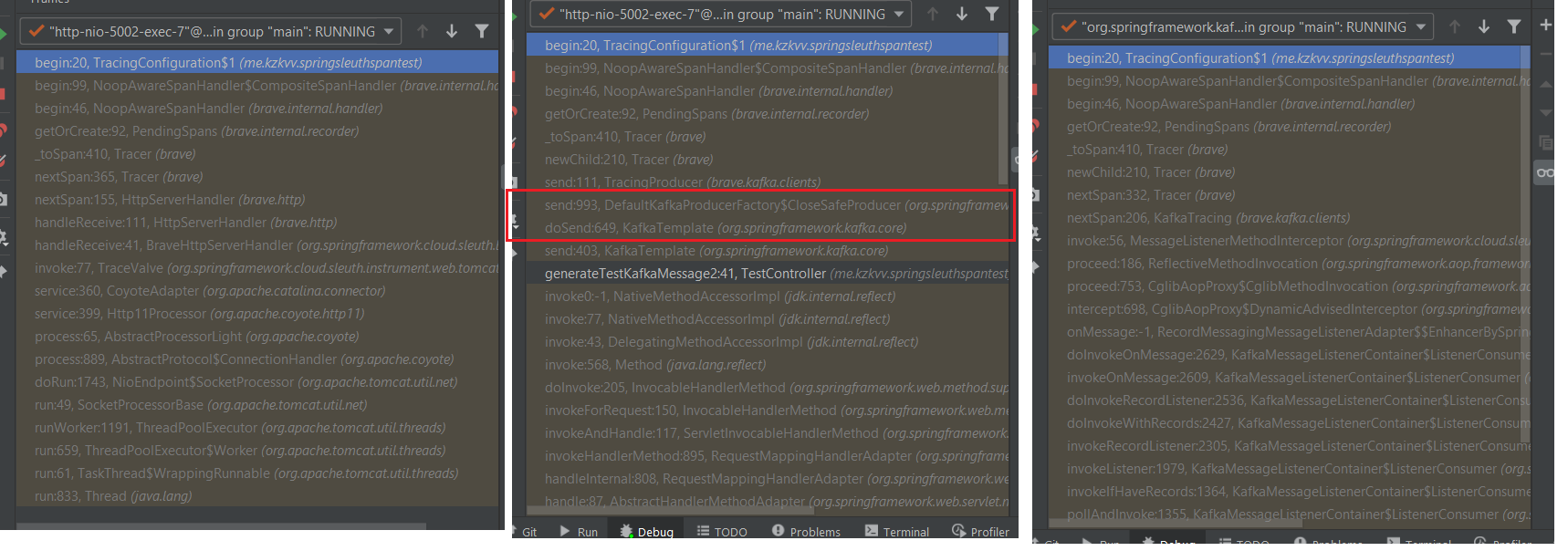

Components initiated a new span generation: here is one extra generation that I marked with red

But here we completed this flow with disabled property in spring yaml config: spring.sleuth.kafka.enabled=false

Let's check the same flow when this property is enabled.

- Set

spring.sleuth.kafka.enabled=truein spring yaml config (it'sfalsein my example by default) Then make a GET request to URL /test/1 to send message using Producer bean Result: spanId is generated 3 times instead of 2

2022-04-12 10:30:49.266 INFO [,,] 31716 --- [nio-5002-exec-5] m.k.s.TracingConfiguration : New span. traceId: a861ea863b528882, spanId: a861ea863b528882, parentId: null

2022-04-12 10:30:49.720 INFO [,a861ea863b528882,a861ea863b528882] 31716 --- [nio-5002-exec-5] m.k.s.TracingConfiguration : New span. traceId: a861ea863b528882, spanId: ecd31ddbebb5b9f8, parentId: a861ea863b528882

2022-04-12 10:30:50.352 INFO [,,] 31716 --- [ntainer#0-0-C-1] m.k.s.TracingConfiguration : New span. traceId: a861ea863b528882, spanId: 0d2eebaa13924f30, parentId: ecd31ddbebb5b9f8

2022-04-12 10:30:50.353 INFO [,a861ea863b528882,0d2eebaa13924f30] 31716 --- [ntainer#0-0-C-1] m.k.s.TestKafkaListener : Message received, mdc: {traceId=a861ea863b528882, spanId=0d2eebaa13924f30}

Components initiated a new span generation: here is one extra generation that I marked with red

- Try with

spring.sleuth.kafka.enabled=trueusing endpoint /test/2 (generate a messsage using KafkaTemplate) Result is the same: spanId is generated 3 times instead of 2

2022-04-12 10:48:53.058 INFO [,,] 39884 --- [nio-5002-exec-4] m.k.s.TracingConfiguration : New span. traceId: 6c1c1c3ccae12c25, spanId: 6c1c1c3ccae12c25, parentId: null

2022-04-12 10:48:53.434 INFO [,6c1c1c3ccae12c25,6c1c1c3ccae12c25] 39884 --- [nio-5002-exec-4] m.k.s.TracingConfiguration : New span. traceId: 6c1c1c3ccae12c25, spanId: b979e26f258f9e3e, parentId: 6c1c1c3ccae12c25

2022-04-12 10:48:54.315 INFO [,,] 39884 --- [ntainer#0-0-C-1] m.k.s.TracingConfiguration : New span. traceId: 6c1c1c3ccae12c25, spanId: 64f20e2839e1de1e, parentId: b979e26f258f9e3e

2022-04-12 10:48:54.316 INFO [,6c1c1c3ccae12c25,64f20e2839e1de1e] 39884 --- [ntainer#0-0-C-1] m.k.s.TestKafkaListener : Message received, mdc: {traceId=6c1c1c3ccae12c25, spanId=64f20e2839e1de1e}

Components initiated a new span generation:

- When I delete

consumerFactorybean fromKafkaProducerConfigthen I got 4 new spans instead of 2 (2 while producing and another 2 while consuming). It's not a big problem, but it looks like spring sleuth docs are not complete, I can't find description about this point except this small part in integration docs.

And as result I have these questions:

- What I should change to use KafkaTemplate to produce kafka messages and @KafkaListener annotation to consume them without extra span generation? Or is it impossible now and I should use Producer beans only?

- What is the difference between

spring.sleuth.kafka.enabledand another similar propertyspring.sleuth.messageing.kafka.enabled? Which values should I specify to avoid extra spans generation?

After a few days of experiments, I came to a dead end :( I will be very grateful for any advice or answers

I can't replicate this problem using the sample. I didn't even manage to properly receive the kafka message after the controller processed it. I used the following docker compose yaml for kafka.

version: "3"

networks:

kafka-cluster:

name: kafka-cluster

driver: bridge

services:

zookeeper:

image: bitnami/zookeeper:3.6.2

container_name: zookeeper

ports:

- '2181:2181'

environment:

- ALLOW_ANONYMOUS_LOGIN=yes

networks:

- kafka-cluster

kafka1:

image: bitnami/kafka:2.7.0

container_name: kafka1

ports:

- '9093:9093'

- '9092:9092'

environment:

- KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper:2181

- ALLOW_PLAINTEXT_LISTENER=yes

- KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CLIENT:PLAINTEXT,EXTERNAL:PLAINTEXT

- KAFKA_CFG_LISTENERS=CLIENT://:9092,EXTERNAL://:9093

- KAFKA_CFG_ADVERTISED_LISTENERS=CLIENT://localhost:9092,EXTERNAL://localhost:9093

- KAFKA_INTER_BROKER_LISTENER_NAME=CLIENT

- KAFKA_CFG_AUTO_CREATE_TOPICS_ENABLE=true

depends_on:

- zookeeper

networks:

- kafka-cluster

I also added Zipkin dependency and modified the app properties like this

spring:

kafka:

consumer:

# bootstrap-servers: localhost:9093

auto-offset-reset: earliest

key-deserializer: org.apache.kafka.common.serialization.StringDeserializer

value-deserializer: org.apache.kafka.common.serialization.StringDeserializer

trusted-packages: java.lang

producer:

# bootstrap-servers: localhost:9093

key-serializer: org.apache.kafka.common.serialization.StringSerializer

value-serializer: org.springframework.kafka.support.serializer.JsonSerializer

sleuth:

kafka:

enabled: true

propagation:

type: B3

sampler:

probability: 1.0

server:

port: ${SERVER_PORT:5002}

logging:

level:

root: INFO

brave: DEBUG

Can you double check that the sample actually sends and receives a message from Kafka?

Hm, it's strange, because this sample works as before with your docker-compose too. What I changed:

- Used your docker-compose with kafka (copied and pushed to my sample repository)

- Updated the yaml config (already pushed to my sample repository too) Then I got the same result - 3 spanId are generated instead of 2 when using '/test/2' endpoint:

2022-04-17 00:55:34.195 INFO [,,] 210128 --- [nio-5002-exec-4] m.k.s.TracingConfiguration : New span. traceId: 14b5802cfa51e550, spanId: 14b5802cfa51e550, parentId: null

2022-04-17 00:55:34.578 INFO [,14b5802cfa51e550,14b5802cfa51e550] 210128 --- [nio-5002-exec-4] m.k.s.TracingConfiguration : New span. traceId: 14b5802cfa51e550, spanId: 6f428a7b5b853f81, parentId: 14b5802cfa51e550

2022-04-17 00:55:35.202 INFO [,,] 210128 --- [ntainer#0-0-C-1] m.k.s.TracingConfiguration : New span. traceId: 14b5802cfa51e550, spanId: 99b5b93422fcb5e8, parentId: 6f428a7b5b853f81

2022-04-17 00:55:35.202 INFO [,14b5802cfa51e550,99b5b93422fcb5e8] 210128 --- [ntainer#0-0-C-1] m.k.s.TestKafkaListener : Message received, mdc: {traceId=14b5802cfa51e550, spanId=99b5b93422fcb5e8}

At the same time I successfully received kafka messages (it's "Message received ..." log record produced in TestKafkaListener)

I can see also these messages in topic "test-topic" using kafka UI (I connected to localhost:9092 broker created from your docker-compose)

I have a question about the zipkin dependency: is it a neccesary dependency t use the spring sleuth framework? We didn't plan to use zipkin in our tech stack. Looks like it's an optional service with optional dependency, otherwise this requirement should be described in spring-sleuth docs and should be included in 'spring-cloud-starter-sleuth'.

I have a question about the zipkin dependency: is it a neccesary dependency t use the spring sleuth framework? We didn't plan to use zipkin in our tech stack. Looks like it's an optional service with optional dependency, otherwise this requirement should be described in spring-sleuth docs and should be included in 'spring-cloud-starter-sleuth'.

It's not necessary but I wanted to visualize the spans

Please upgrade to Micrometer Tracing. Spring Cloud Sleuth is feature complete and out of OSS support.