spring-cloud-gateway

spring-cloud-gateway copied to clipboard

spring-cloud-gateway copied to clipboard

Some requests are failing when using HEAD requests

Describe the bug Some requests are failing randomly when HEAD requests and chunked responses are used. Noticed that Netty sometimes tries to read a last "zero" chunk as a start of HTTP response. Netty tries to determine the HTTP protocol version from last "zero" chunk.

2021-11-08 16:59:26.943 DEBUG 24184 --- [ctor-http-nio-8] r.n.channel.ChannelOperationsHandler : [4a6c80b6, L:/10.1.222.229:64941 - R:destination-service-balancer-1447849684.us-east-1.elb.amazonaws.com/54.211.139.210:8080] Decoding failed: DefaultFullHttpResponse(decodeResult: failure(java.lang.IllegalArgumentException: invalid version format: 0), version: HTTP/1.0, content: UnpooledByteBufAllocator$InstrumentedUnpooledUnsafeHeapByteBuf(ridx: 0, widx: 0, cap: 0))

HTTP/1.0 999 Unknown :

java.lang.IllegalArgumentException: invalid version format: 0

at io.netty.handler.codec.http.HttpVersion.<init>(HttpVersion.java:116) ~[netty-codec-http-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.http.HttpVersion.valueOf(HttpVersion.java:78) ~[netty-codec-http-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.http.HttpResponseDecoder.createMessage(HttpResponseDecoder.java:157) ~[netty-codec-http-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.http.HttpObjectDecoder.decode(HttpObjectDecoder.java:273) ~[netty-codec-http-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.http.HttpClientCodec$Decoder.decode(HttpClientCodec.java:238) ~[netty-codec-http-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.ByteToMessageDecoder.decodeRemovalReentryProtection(ByteToMessageDecoder.java:507) ~[netty-codec-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:446) ~[netty-codec-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:276) ~[netty-codec-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.CombinedChannelDuplexHandler.channelRead(CombinedChannelDuplexHandler.java:251) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.handler.logging.LoggingHandler.channelRead(LoggingHandler.java:280) ~[netty-handler-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:719) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:655) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:581) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493) ~[netty-transport-4.1.69.Final.jar:4.1.69.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:986) ~[netty-common-4.1.69.Final.jar:4.1.69.Final]

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74) ~[netty-common-4.1.69.Final.jar:4.1.69.Final]

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30) ~[netty-common-4.1.69.Final.jar:4.1.69.Final]

at java.base/java.lang.Thread.run(Thread.java:834) ~[na:na]

Attached log file. Failed request is GET /api/timeout-scg/test?id=99b6f3f0-abd6-4af8-8145-1af9a1ce0cd7.

This unstable behavior blocks our migration from Zuul-based implementation to SCG.

Sample I created a destination service for demo purposes and deployed it to EC2. This service is accessible via AWS load balancer. To reproduce the issue:

- clone spring-cloud-gateway-timeout-demo

cd gatewaymvn clean test- this will start sending GET and HEAD requests in parallel with response status code assertions

JDK vesion: OpenJdk64-11.0.10 OS: MacOS 11 & Ubuntu 18.04

We identified that the issue is reproducible only with classic AWS load balancers. No issue when an application load balancer is used

Given the stack trace, this is likely a netty bug

The community support is very disappointing. 3 months of silence and closing the issue with the keyword "likely"...

I am not a netty expert. I can ask @violetagg if she can take a look

I am not a netty expert. I can ask @violetagg if she can take a look

I'll take a look at the provided details

it still happens with boot 2.6.3

Noticed that Netty sometimes tries to read a last "zero" chunk as a start of HTTP response. Netty tries to determine the HTTP protocol version from last "zero" chunk.

This is not a zero chunk as there is no Transfer-encoding. This is an unexpected content sent after the response.

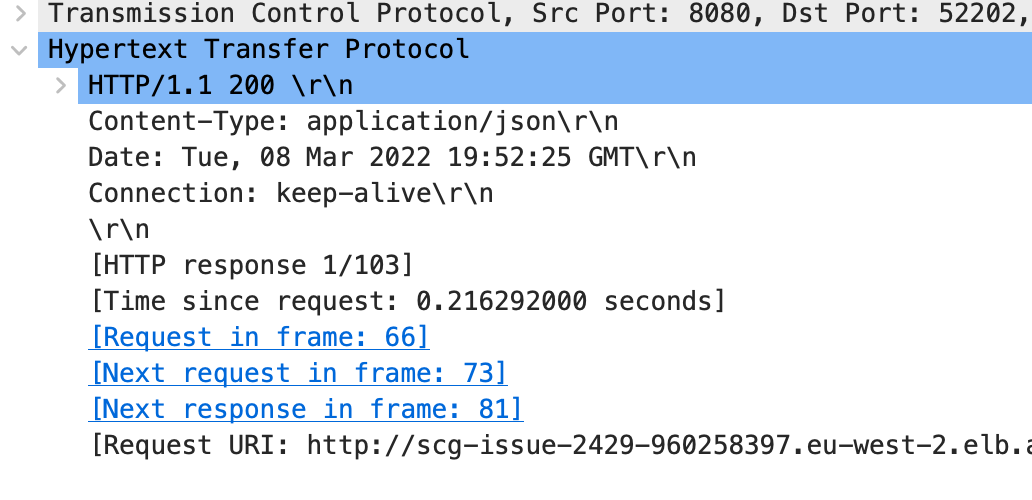

The response from the destination service for some HEAD requests is strange:

This is from the Reactor Netty wiretap - client port 52200 (see at the end of the response)

2022-03-08 19:52:26.151 DEBUG 2745 --- [or-http-epoll-3] reactor.netty.http.client.HttpClient : [e1a87c22-2, L:/IP:52200 - R:IP:8080] READ: 115B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 48 54 54 50 2f 31 2e 31 20 32 30 30 20 0d 0a 43 |HTTP/1.1 200 ..C|

|00000010| 6f 6e 74 65 6e 74 2d 54 79 70 65 3a 20 61 70 70 |ontent-Type: app|

|00000020| 6c 69 63 61 74 69 6f 6e 2f 6a 73 6f 6e 0d 0a 44 |lication/json..D|

|00000030| 61 74 65 3a 20 54 75 65 2c 20 30 38 20 4d 61 72 |ate: Tue, 08 Mar|

|00000040| 20 32 30 32 32 20 31 39 3a 35 32 3a 32 35 20 47 | 2022 19:52:25 G|

|00000050| 4d 54 0d 0a 43 6f 6e 6e 65 63 74 69 6f 6e 3a 20 |MT..Connection: |

|00000060| 6b 65 65 70 2d 61 6c 69 76 65 0d 0a 0d 0a 30 0d |keep-alive....0.|

|00000070| 0a 0d 0a |... |

+--------+-------------------------------------------------+----------------+

And this is the tcp dump

In comparison this is a GOOD HEAD response

wiretap - client port 52202:

2022-03-08 19:52:25.789 DEBUG 2745 --- [or-http-epoll-2] reactor.netty.http.client.HttpClient : [1a081fb1-1, L:/IP:52202 - R:IP:8080] READ: 110B

+-------------------------------------------------+

| 0 1 2 3 4 5 6 7 8 9 a b c d e f |

+--------+-------------------------------------------------+----------------+

|00000000| 48 54 54 50 2f 31 2e 31 20 32 30 30 20 0d 0a 43 |HTTP/1.1 200 ..C|

|00000010| 6f 6e 74 65 6e 74 2d 54 79 70 65 3a 20 61 70 70 |ontent-Type: app|

|00000020| 6c 69 63 61 74 69 6f 6e 2f 6a 73 6f 6e 0d 0a 44 |lication/json..D|

|00000030| 61 74 65 3a 20 54 75 65 2c 20 30 38 20 4d 61 72 |ate: Tue, 08 Mar|

|00000040| 20 32 30 32 32 20 31 39 3a 35 32 3a 32 35 20 47 | 2022 19:52:25 G|

|00000050| 4d 54 0d 0a 43 6f 6e 6e 65 63 74 69 6f 6e 3a 20 |MT..Connection: |

|00000060| 6b 65 65 70 2d 61 6c 69 76 65 0d 0a 0d 0a |keep-alive.... |

+--------+-------------------------------------------------+----------------+

tcpdump:

@vtkhir Can you take a tcp dump from the destination service so that we can see what exactly was sent by Tomcat?

If you would like us to look at this issue, please provide the requested information. If the information is not provided within the next 7 days this issue will be closed.

Hey @violetagg @spencergibb appreciate you're looking into this issue, we're following it!

Seems we found what exactly causes incompatibility with SCG - the issue is reproducible only with AWS Classic Load Balancer. So we decided to try the Application AWS Load Balancer, and considered no unexpected content sent after the response. That approached us to change production AWS setup and successfully finalise migration to latest SCG

@vtkhir We have the same problem. Have you solved it

Hello @johnson622 Yeap, just moved ahead with migration all outdated AWS CLBs to ALBs as described in previous comment

Hello @johnson622 Yeap, just moved ahead with migration all outdated AWS CLBs to ALBs as described in previous comment

@oserohin Thank you very much. What can we do now? Our production environment has been abnormal, do i need to wait for your repair?When can you repair it?

Closing as it is an issue on AWS