visitor-flutter

Runtime error: out of memory (legacy replication on arm64 Pi 3B+)

Context

I'm running go-sbot on my Pi 3B+ with 64-bit Debian. The SSB account currently follows 3 accounts and is followed by 1. The sbot is running with the default configuration.

Error

The sbot is stable upon start-up. However, once I connect to an instance of Patchwork on my laptop (sbotcli connect ...), memory usage climbs quickly and the sbot crashes after approximately 60 seconds with: fatal error: runtime: out of memory. It spits out approximately 3,000 lines of stack trace :)

I'm not familiar enough with Go to give a thorough analysis of the error output, but it seems that the final code reference is /go-ssb/plugins/gossip/fetch.go:61.

I'd be happy to provide the stack-trace if that's helpful.

The sbot is far more stable when I run it with EBT enabled (-enable-ebt true).

Hmm... thanks for the report. Sadly, I can confirm that this makes some sense.

While there is a config option to disable outgoing live queries (createHistoryStream calls) in legacy (i.e. non-EBT) mode, the sbot will still serve incoming live requests, which incur more overhead than I'd like...

The problem is that closing the live:true portion of the queries keeps memory usage down, but patchwork/ssb-server doesn't handle that correctly: it doesn't re-issue the createHistoryStream calls, so it has to reconnect to get any data that arrived after the sync finished and the streams were closed.
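To make the live/non-live trade-off concrete, here is a minimal, single-threaded Go sketch. It is a toy model, not go-ssb's code: the server, liveStream, openHistoryStream, appendMsg and closeStream names are hypothetical, and the feed/seq/live parameters only loosely mirror createHistoryStream's options. A non-live request returns the backlog and keeps no state, so later messages reach the peer only if it asks again; a live request keeps a stream open that queues new messages, which is where memory goes when many (peer, feed) pairs are open at once.

```go
package main

import "fmt"

// liveStream is a hypothetical open live history stream for one feed; messages
// appended to that feed queue up here until the peer consumes them.
type liveStream struct {
	feed    string
	pending []string
}

// server is a toy, single-threaded model of a peer answering
// createHistoryStream-style requests. It is not go-ssb's implementation.
type server struct {
	logs map[string][]string      // feed ID -> messages (sequence n at index n-1)
	live map[*liveStream]struct{} // live streams that are still open
}

func newServer() *server {
	return &server{logs: map[string][]string{}, live: map[*liveStream]struct{}{}}
}

// openHistoryStream returns the messages of feed from seq (1-based) onwards.
// With live=false the request ends with this backlog and no state is kept.
// With live=true a liveStream is also kept open, costing memory for every
// (peer, feed) pair until it is closed.
func (s *server) openHistoryStream(feed string, seq int, live bool) ([]string, *liveStream) {
	var backlog []string
	if msgs := s.logs[feed]; seq >= 1 && seq <= len(msgs) {
		backlog = append(backlog, msgs[seq-1:]...)
	}
	if !live {
		return backlog, nil
	}
	st := &liveStream{feed: feed}
	s.live[st] = struct{}{}
	return backlog, st
}

// appendMsg stores a message and queues it on every open live stream for that feed.
func (s *server) appendMsg(feed, msg string) {
	s.logs[feed] = append(s.logs[feed], msg)
	for st := range s.live {
		if st.feed == feed {
			st.pending = append(st.pending, msg)
		}
	}
}

// closeStream releases a live stream together with anything still queued on it.
func (s *server) closeStream(st *liveStream) { delete(s.live, st) }

func main() {
	s := newServer()
	s.appendMsg("@alice", "msg1")

	backlog, _ := s.openHistoryStream("@alice", 1, false) // one-shot request
	_, st := s.openHistoryStream("@alice", 2, true)        // stays open
	s.appendMsg("@alice", "msg2")

	fmt.Println("non-live got:", backlog)   // [msg1]; msg2 needs a new request
	fmt.Println("live queued:", st.pending) // [msg2]
	fmt.Println("open live streams:", len(s.live))
	s.closeStream(st)
	fmt.Println("open live streams:", len(s.live))
}
```

A real server would also need locking, back-pressure and per-connection cleanup; the sketch leaves those out to keep the trade-off visible.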

Thanks for the extra details.

So it seems that, for our purposes, it is best to run with EBT enabled. Are there any major downsides to EBT that we should be aware of? I realise there are still ongoing improvements and that some glitches may occur, but is it a good bet overall?

> overall it's a good bet?

Yes, definitely the way forward! I just wasn't sure whether there were more unknowns/issues in the new code, which is why I didn't enable it by default. I guess this makes you the beta tester now :smile_cat:

It's very good to hear that EBT mode can keep up with Patchwork, though! Apparently the main overhead is instantiating all those individual muxrpc calls, not querying the database for them, which is a useful data point, too.
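A rough way to picture that difference, as an illustration under assumptions rather than go-ssb's code: legacy replication opens one createHistoryStream call per replicated feed, while EBT opens a single stream per peer and exchanges a clock covering every feed. In the Go sketch below, the streamRequest type, the legacyStreams/ebtStreams helpers and the figure of 200 feeds are hypothetical, and the method name ebt.replicate is assumed from the ssb-ebt plugin.

```go
package main

import (
	"fmt"
	"sort"
)

// streamRequest is a hypothetical stand-in for one muxrpc stream a peer opens:
// the method name plus the feeds it covers (feed ID -> latest sequence known).
type streamRequest struct {
	Method string
	Clock  map[string]int
}

// legacyStreams models legacy replication: one createHistoryStream call per feed.
func legacyStreams(have map[string]int) []streamRequest {
	feeds := make([]string, 0, len(have))
	for id := range have {
		feeds = append(feeds, id)
	}
	sort.Strings(feeds) // deterministic order for printing/tests
	reqs := make([]streamRequest, 0, len(feeds))
	for _, id := range feeds {
		reqs = append(reqs, streamRequest{Method: "createHistoryStream", Clock: map[string]int{id: have[id]}})
	}
	return reqs
}

// ebtStreams models EBT: one replicate stream carrying a clock for every feed.
// (Real EBT encodes each clock entry as a "note" with flag bits; that detail
// is left out here.)
func ebtStreams(have map[string]int) []streamRequest {
	clock := make(map[string]int, len(have))
	for id, seq := range have {
		clock[id] = seq
	}
	return []streamRequest{{Method: "ebt.replicate", Clock: clock}}
}

func main() {
	// Hypothetical: a peer that replicates 200 feeds.
	have := make(map[string]int)
	for i := 0; i < 200; i++ {
		have[fmt.Sprintf("@feed%03d", i)] = i + 1
	}
	fmt.Println("legacy muxrpc streams:", len(legacyStreams(have))) // 200
	fmt.Println("EBT muxrpc streams:", len(ebtStreams(have)))       // 1
}
```

Each legacy stream carries its own muxrpc state on both ends, which is consistent with the observation that call setup, not database queries, dominates the cost.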

Again, let me know if you run into issues with the EBT code, or ask if any of the improvements mentioned (or their implications) aren't clear.

OK fantastic, thanks! I'll be sure to get in touch as questions arise.

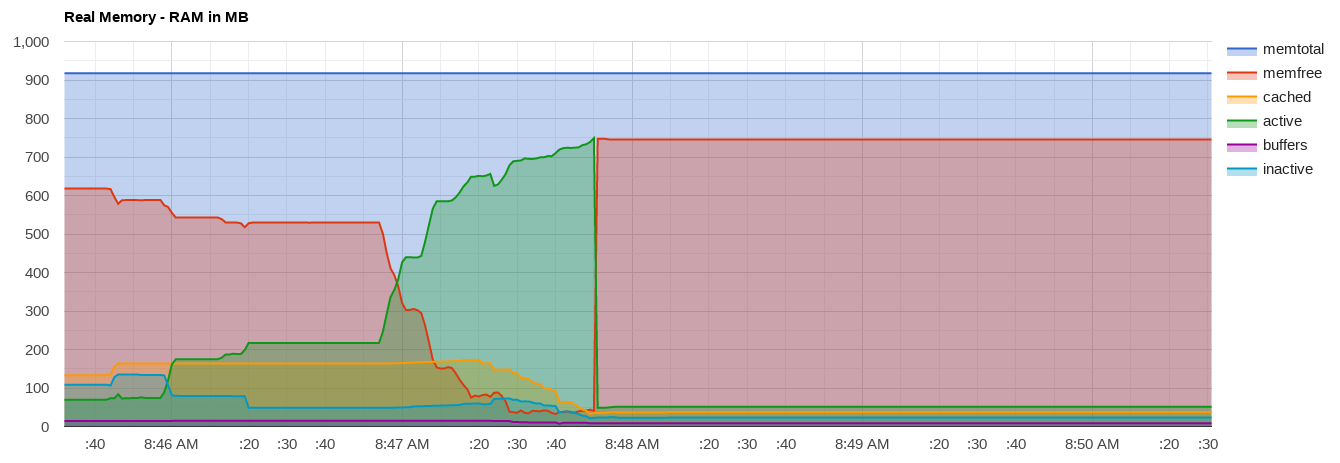

Here's a fun graph of the memory exhaustion:

go-sbot was started at 8:46 AM and the sbotcli connect call was made just before 8:47. The crash occurred just before 8:48 AM.

Hmm... OK, it does seem like there's potential for a fix here. That's definitely climbing too fast.

I have the new room server on my plate for another couple of weeks. After that, we will get back to this beast and potentially use the new fixtures to set up something like a burn-in test that syncs over and over. I'm sure some fixes besides the new partial-replication code will come out of that as well.

Sounds good. I'm going to reopen the issue since this is ongoing.

There's no pressure from our side so take your time. Best wishes for the room server work!

Related: https://github.com/ssbc/go-ssb/issues/124#issuecomment-1290833492

Maybe this is a dupe of #124 after all? Something to test in https://github.com/ssbc/go-ssb/pull/180 :+1:

The potential for OOM when using legacy replication is now reduced by https://github.com/ssbc/go-ssb/pull/180, at least for the time being.