kuberay

[Bug] Containers exit with OOM and Error in ray-cluster.autoscaler.yaml

Search before asking

- [X] I searched the issues and found no similar issues.

KubeRay Component

ray-operator

What happened + What you expected to happen

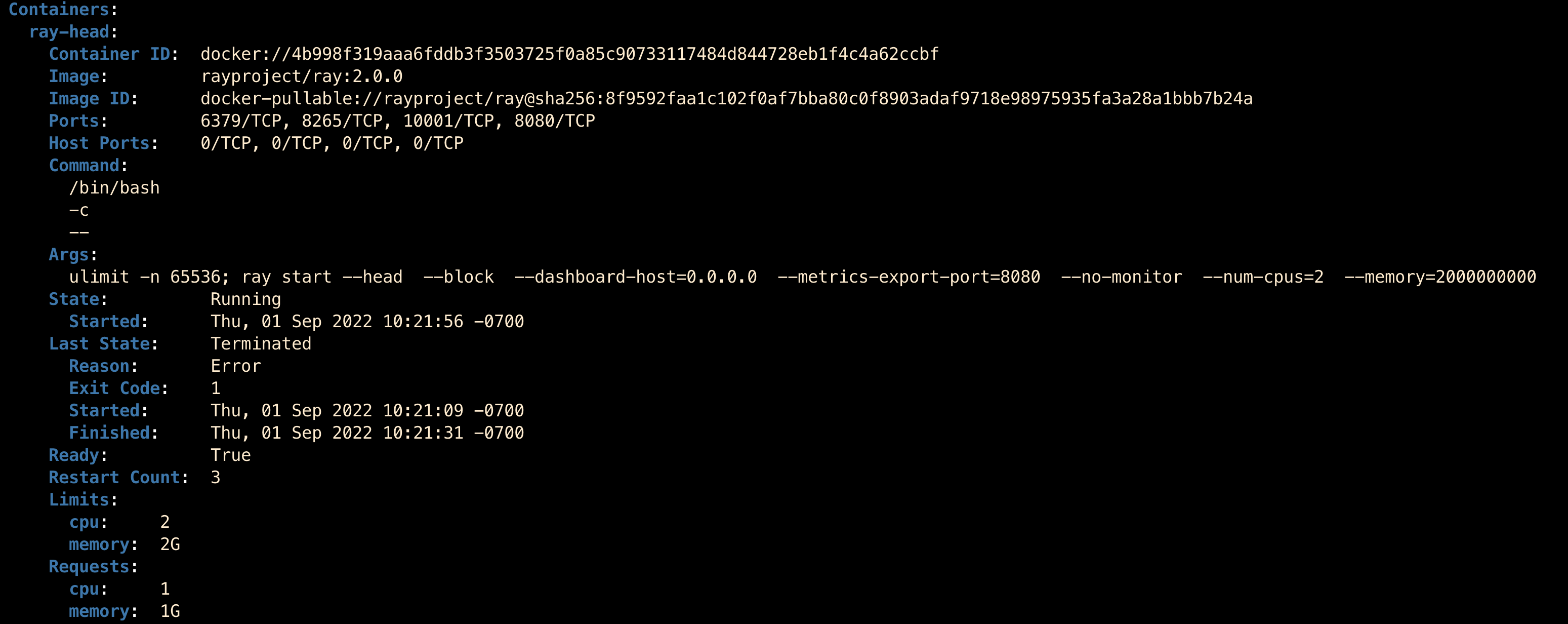

I followed the instructions in the documentation. When I deploy the Ray cluster with ray-cluster.autoscaler.yaml, both the head node and the worker nodes crash with OOM. I then increased the memory limit and memory request for both the head and the worker. With that change the nodes no longer OOM, but the containers still exit with Error, as shown in the screenshot above and the logs below.

Log for head node:

autoscaler Traceback (most recent call last):

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/_private/gcs_utils.py", line 120, in check_health

autoscaler resp = stub.CheckAlive(req, timeout=timeout)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 946, in __call__

autoscaler return _end_unary_response_blocking(state, call, False, None)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 849, in _end_unary_response_blocking

autoscaler raise _InactiveRpcError(state)

autoscaler grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:

autoscaler status = StatusCode.UNAVAILABLE

autoscaler details = "failed to connect to all addresses"

autoscaler debug_error_string = "{"created":"@1662053084.513131210","description":"Failed to pick subchannel","file":"src/core/ext/filters/client_channel/client_channel.cc","file_line":3134,"referenced_errors":[{"created":"@1662053084.513043376","description":"failed to connect to all addresses","file":"src/core/lib/transport/error_utils.cc","file_line":163,"grpc_status":14}]}"

autoscaler >

autoscaler Traceback (most recent call last):

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/_private/gcs_utils.py", line 120, in check_health

autoscaler resp = stub.CheckAlive(req, timeout=timeout)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 946, in __call__

autoscaler return _end_unary_response_blocking(state, call, False, None)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 849, in _end_unary_response_blocking

autoscaler raise _InactiveRpcError(state)

autoscaler grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:

autoscaler status = StatusCode.UNAVAILABLE

autoscaler details = "failed to connect to all addresses"

autoscaler debug_error_string = "{"created":"@1662053096.398618757","description":"Failed to pick subchannel","file":"src/core/ext/filters/client_channel/client_channel.cc","file_line":3134,"referenced_errors":[{"created":"@1662053096.398526049","description":"failed to connect to all addresses","file":"src/core/lib/transport/error_utils.cc","file_line":163,"grpc_status":14}]}"

autoscaler >

autoscaler Traceback (most recent call last):

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/_private/gcs_utils.py", line 120, in check_health

autoscaler resp = stub.CheckAlive(req, timeout=timeout)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 946, in __call__

autoscaler return _end_unary_response_blocking(state, call, False, None)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 849, in _end_unary_response_blocking

autoscaler raise _InactiveRpcError(state)

autoscaler grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:

autoscaler status = StatusCode.UNAVAILABLE

autoscaler details = "failed to connect to all addresses"

autoscaler debug_error_string = "{"created":"@1662053107.646716096","description":"Failed to pick subchannel","file":"src/core/ext/filters/client_channel/client_channel.cc","file_line":3134,"referenced_errors":[{"created":"@1662053107.646629554","description":"failed to connect to all addresses","file":"src/core/lib/transport/error_utils.cc","file_line":163,"grpc_status":14}]}"

autoscaler >

autoscaler 2022-09-01 10:25:19,870 INFO monitor.py:196 -- Starting autoscaler metrics server on port 44217

autoscaler 2022-09-01 10:25:19,885 INFO monitor.py:213 -- Monitor: Started

autoscaler 2022-09-01 10:25:20,100 INFO node_provider.py:155 -- Creating KuberayNodeProvider.

autoscaler 2022-09-01 10:25:20,102 INFO autoscaler.py:250 -- disable_node_updaters:True

autoscaler 2022-09-01 10:25:20,102 INFO autoscaler.py:259 -- disable_launch_config_check:True

autoscaler 2022-09-01 10:25:20,103 INFO autoscaler.py:270 -- foreground_node_launch:True

autoscaler 2022-09-01 10:25:20,103 INFO autoscaler.py:280 -- worker_liveness_check:False

autoscaler 2022-09-01 10:25:20,104 INFO autoscaler.py:288 -- worker_rpc_drain:True

autoscaler 2022-09-01 10:25:20,105 INFO autoscaler.py:334 -- StandardAutoscaler: {'provider': {'type': 'kuberay', 'namespace': 'default', 'disable_node_updaters': True, 'disable_launch_config_check': True, 'foreground_node_launch': True, 'worker_liveness_check': False, 'worker_rpc_drain': True}, 'cluster_name': 'raycluster-autoscaler', 'head_node_type': 'head-group', 'available_node_types': {'head-group': {'min_workers': 0, 'max_workers': 0, 'node_config': {}, 'resources': {'CPU': 2, 'memory': 2000000000}}, 'small-group': {'min_workers': 1, 'max_workers': 300, 'node_config': {}, 'resources': {'CPU': 2, 'memory': 1000000000}}}, 'max_workers': 300, 'idle_timeout_minutes': 1.0, 'upscaling_speed': 1000, 'file_mounts': {}, 'cluster_synced_files': [], 'file_mounts_sync_continuously': False, 'initialization_commands': [], 'setup_commands': [], 'head_setup_commands': [], 'worker_setup_commands': [], 'head_start_ray_commands': [], 'worker_start_ray_commands': [], 'auth': {}}

autoscaler 2022-09-01 10:25:20,118 INFO monitor.py:354 -- Autoscaler has not yet received load metrics. Waiting.

autoscaler 2022-09-01 10:25:25,132 INFO monitor.py:354 -- Autoscaler has not yet received load metrics. Waiting.

ray-head 2022-09-01 10:25:18,692 INFO usage_lib.py:479 -- Usage stats collection is enabled by default without user confirmation because this terminal is detected to be non-interactive. To disable this, add `--disable-usage-stats` to the command that starts the cluster, or run the following command: `ray disable-usage-stats` before starting the cluster. See https://docs.ray.io/en/master/cluster/usage-stats.html for more details.

ray-head 2022-09-01 10:25:18,694 INFO scripts.py:719 -- Local node IP: 172.17.0.5

ray-head 2022-09-01 10:25:28,027 SUCC scripts.py:756 -- --------------------

ray-head 2022-09-01 10:25:28,028 SUCC scripts.py:757 -- Ray runtime started.

ray-head 2022-09-01 10:25:28,029 SUCC scripts.py:758 -- --------------------

ray-head 2022-09-01 10:25:28,030 INFO scripts.py:760 -- Next steps

ray-head 2022-09-01 10:25:28,030 INFO scripts.py:761 -- To connect to this Ray runtime from another node, run

ray-head 2022-09-01 10:25:28,030 INFO scripts.py:766 -- ray start --address='172.17.0.5:6379'

ray-head 2022-09-01 10:25:28,031 INFO scripts.py:780 -- Alternatively, use the following Python code:

ray-head 2022-09-01 10:25:28,031 INFO scripts.py:782 -- import ray

ray-head 2022-09-01 10:25:28,032 INFO scripts.py:795 -- ray.init(address='auto')

ray-head 2022-09-01 10:25:28,032 INFO scripts.py:799 -- To connect to this Ray runtime from outside of the cluster, for example to

ray-head 2022-09-01 10:25:28,033 INFO scripts.py:803 -- connect to a remote cluster from your laptop directly, use the following

ray-head 2022-09-01 10:25:28,033 INFO scripts.py:806 -- Python code:

ray-head 2022-09-01 10:25:28,033 INFO scripts.py:808 -- import ray

ray-head 2022-09-01 10:25:28,034 INFO scripts.py:814 -- ray.init(address='ray://<head_node_ip_address>:10001')

ray-head 2022-09-01 10:25:28,035 INFO scripts.py:820 -- If connection fails, check your firewall settings and network configuration.

ray-head 2022-09-01 10:25:28,035 INFO scripts.py:826 -- To terminate the Ray runtime, run

ray-head 2022-09-01 10:25:28,036 INFO scripts.py:827 -- ray stop

ray-head 2022-09-01 10:25:28,036 INFO scripts.py:905 -- --block

ray-head 2022-09-01 10:25:28,037 INFO scripts.py:907 -- This command will now block forever until terminated by a signal.

ray-head 2022-09-01 10:25:28,037 INFO scripts.py:910 -- Running subprocesses are monitored and a message will be printed if any of them terminate unexpectedly. Subprocesses exit with SIGTERM will be treated as graceful, thus NOT reported.

autoscaler 2022-09-01 10:25:30,684 INFO autoscaler.py:386 --

autoscaler ======== Autoscaler status: 2022-09-01 10:25:30.682530 ========

autoscaler Node status

autoscaler ---------------------------------------------------------------

autoscaler Healthy:

autoscaler 1 head-group

autoscaler 1 small-group

autoscaler Pending:

autoscaler (no pending nodes)

autoscaler Recent failures:

autoscaler (no failures)

autoscaler

autoscaler Resources

autoscaler ---------------------------------------------------------------

autoscaler Usage:

autoscaler 0.0/4.0 CPU

autoscaler 0.00/2.794 GiB memory

autoscaler 0.00/0.571 GiB object_store_memory

autoscaler

autoscaler Demands:

autoscaler (no resource demands)

autoscaler 2022-09-01 10:25:31,756 INFO monitor.py:369 -- :event_summary:Resized to 4 CPUs.

Stream closed EOF for default/raycluster-autoscaler-head-8zfmq (ray-head)

autoscaler 2022-09-01 10:25:36,786 ERROR monitor.py:439 -- Error in monitor loop

autoscaler Traceback (most recent call last):

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 484, in run

autoscaler self._run()

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 338, in _run

autoscaler self.update_load_metrics()

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 241, in update_load_metrics

autoscaler response = self.gcs_node_resources_stub.GetAllResourceUsage(request, timeout=60)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 946, in __call__

autoscaler return _end_unary_response_blocking(state, call, False, None)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 849, in _end_unary_response_blocking

autoscaler raise _InactiveRpcError(state)

autoscaler grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:

autoscaler status = StatusCode.UNAVAILABLE

autoscaler details = "failed to connect to all addresses"

autoscaler debug_error_string = "{"created":"@1662053136.774460429","description":"Failed to pick subchannel","file":"src/core/ext/filters/client_channel/client_channel.cc","file_line":3134,"referenced_errors":[{"created":"@1662053136.774406762","description":"failed to connect to all addresses","file":"src/core/lib/transport/error_utils.cc","file_line":163,"grpc_status":14}]}"

autoscaler >

autoscaler The Ray head is not yet ready.

autoscaler Will check again in 5 seconds.

autoscaler The Ray head is not yet ready.

autoscaler Will check again in 5 seconds.

autoscaler The Ray head is not yet ready.

autoscaler Will check again in 5 seconds.

autoscaler The Ray head is ready. Starting the autoscaler.

autoscaler Traceback (most recent call last):

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 484, in run

autoscaler self._run()

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 338, in _run

autoscaler self.update_load_metrics()

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 241, in update_load_metrics

autoscaler response = self.gcs_node_resources_stub.GetAllResourceUsage(request, timeout=60)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 946, in __call__

autoscaler return _end_unary_response_blocking(state, call, False, None)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 849, in _end_unary_response_blocking

autoscaler raise _InactiveRpcError(state)

autoscaler grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:

autoscaler status = StatusCode.UNAVAILABLE

autoscaler details = "failed to connect to all addresses"

autoscaler debug_error_string = "{"created":"@1662053136.774460429","description":"Failed to pick subchannel","file":"src/core/ext/filters/client_channel/client_channel.cc","file_line":3134,"referenced_errors":[{"created":"@1662053136.774406762","description":"failed to connect to all addresses","file":"src/core/lib/transport/error_utils.cc","file_line":163,"grpc_status":14}]}"

autoscaler >

autoscaler

autoscaler During handling of the above exception, another exception occurred:

autoscaler

autoscaler Traceback (most recent call last):

autoscaler File "/home/ray/anaconda3/bin/ray", line 8, in <module>

autoscaler sys.exit(main())

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/scripts/scripts.py", line 2588, in main

autoscaler return cli()

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/click/core.py", line 1128, in __call__

autoscaler return self.main(*args, **kwargs)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/click/core.py", line 1053, in main

autoscaler rv = self.invoke(ctx)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/click/core.py", line 1659, in invoke

autoscaler return _process_result(sub_ctx.command.invoke(sub_ctx))

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/click/core.py", line 1395, in invoke

autoscaler return ctx.invoke(self.callback, **ctx.params)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/click/core.py", line 754, in invoke

autoscaler return __callback(*args, **kwargs)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/scripts/scripts.py", line 2334, in kuberay_autoscaler

autoscaler run_kuberay_autoscaler(cluster_name, cluster_namespace)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/kuberay/run_autoscaler.py", line 63, in run_kuberay_autoscaler

autoscaler retry_on_failure=False,

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 486, in run

autoscaler self._handle_failure(traceback.format_exc())

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/autoscaler/_private/monitor.py", line 453, in _handle_failure

autoscaler ray_constants.DEBUG_AUTOSCALING_ERROR, message, overwrite=True

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/_private/client_mode_hook.py", line 105, in wrapper

autoscaler return func(*args, **kwargs)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/experimental/internal_kv.py", line 94, in _internal_kv_put

autoscaler return global_gcs_client.internal_kv_put(key, value, overwrite, namespace) == 0

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/_private/gcs_utils.py", line 177, in wrapper

autoscaler return f(self, *args, **kwargs)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/ray/_private/gcs_utils.py", line 296, in internal_kv_put

autoscaler reply = self._kv_stub.InternalKVPut(req, timeout=timeout)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 946, in __call__

autoscaler return _end_unary_response_blocking(state, call, False, None)

autoscaler File "/home/ray/anaconda3/lib/python3.7/site-packages/grpc/_channel.py", line 849, in _end_unary_response_blocking

autoscaler raise _InactiveRpcError(state)

autoscaler grpc._channel._InactiveRpcError: <_InactiveRpcError of RPC that terminated with:

autoscaler status = StatusCode.UNAVAILABLE

autoscaler details = "failed to connect to all addresses"

autoscaler debug_error_string = "{"created":"@1662053141.828487750","description":"Failed to pick subchannel","file":"src/core/ext/filters/client_channel/client_channel.cc","file_line":3134,"referenced_errors":[{"created":"@1662053141.828483875","description":"failed to connect to all addresses","file":"src/core/lib/transport/error_utils.cc","file_line":163,"grpc_status":14}]}"

autoscaler >

Stream closed EOF for default/raycluster-autoscaler-head-8zfmq (autoscaler)
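As a sanity check on the log above, the totals in the autoscaler status (4.0 CPU, 2.794 GiB memory) are consistent with one node of each group using the `CPU` and `memory` values from the `StandardAutoscaler` config line: 2,000,000,000 + 1,000,000,000 bytes = 3e9 bytes, which is about 2.794 GiB. The sketch below only redoes that arithmetic; the dictionary is copied by hand from the log, not read from the cluster.

```python
# Per-group resources copied by hand from the StandardAutoscaler config line in the log.
available_node_types = {
    "head-group": {"CPU": 2, "memory": 2_000_000_000},
    "small-group": {"CPU": 2, "memory": 1_000_000_000},
}

GIB = 2 ** 30  # the status output reports memory in GiB (binary gigabytes)


def cluster_totals(node_types):
    """Sum CPU and memory bytes, assuming one node per group (as the status shows)."""
    total_cpu = sum(r["CPU"] for r in node_types.values())
    total_mem_bytes = sum(r["memory"] for r in node_types.values())
    return total_cpu, total_mem_bytes


cpu, mem = cluster_totals(available_node_types)
print(f"{cpu:.1f} CPU, {mem / GIB:.3f} GiB memory")
```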

Reproduction script

```shell
minikube start --memory 6144 --cpus 4
kubectl create -k "github.com/ray-project/kuberay/ray-operator/config/default?ref=v0.3.0&timeout=90s"
kubectl apply -f https://raw.githubusercontent.com/ray-project/kuberay/release-0.3/ray-operator/config/samples/ray-cluster.autoscaler.yaml
```

Anything else

No response

Are you willing to submit a PR?

- [X] Yes I am willing to submit a PR!

cc @DmitriGekhtman

Well, that's no good. Let me try to reproduce that.

What happens if you use kind with the default setup instead (`kind create cluster` with no arguments)?

I'm also surprised by the `--num-cpus 2` that appeared in the container entrypoint; I'd expect it to be `--num-cpus 1`.

I was not able to reproduce the issue. We can take a look together at what's going on.

From our discussion, it looks like it might be specific to Minikube on M1 Macs. :(

TODO: Try kind on M1 Macs. If I can still reproduce this bug on a kind cluster, the M1 (ARM) architecture is very likely the root cause.
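When comparing results across machines, it helps to record which CPU architecture the reproduction ran on. The sketch below maps Python's `platform.machine()` string to Go's `GOARCH` naming; the mapping table and `goarch_for` helper are hypothetical, not part of KubeRay or Ray. Note that a Python interpreter running under Rosetta on an M1 Mac reports `x86_64`, not `arm64`.

```python
import platform

# Hypothetical mapping from platform.machine() values to Go GOARCH names.
_MACHINE_TO_GOARCH = {
    "x86_64": "amd64",
    "amd64": "amd64",    # Windows reports "AMD64"; lowercased below
    "arm64": "arm64",    # macOS on Apple Silicon (native interpreter)
    "aarch64": "arm64",  # Linux on ARM, e.g. inside a kind or minikube node
}


def goarch_for(machine: str) -> str:
    """Translate a platform.machine() string into a GOARCH value."""
    try:
        return _MACHINE_TO_GOARCH[machine.lower()]
    except KeyError:
        raise ValueError(f"unrecognized machine type: {machine!r}")


if __name__ == "__main__":
    machine = platform.machine()
    try:
        arch = goarch_for(machine)
    except ValueError:
        arch = None
    if arch == "arm64":
        print(f"{machine}: ARM host; amd64-only images may need emulation, if they run at all")
    else:
        print(f"{machine}: GOARCH={arch}")
```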

[Updated 2022-09-21]

@jasoonn tried to deploy KubeRay with kind on a Mac M1 and hit the same bug.

[Possible Solution]

- Update `ray-operator/Dockerfile` to build with `RUN CGO_ENABLED=0 GOOS=linux GOARCH=arm64 GO111MODULE=on go build -a -o manager main.go`.

- Build a multi-architecture image for KubeRay with docker/buildx.

- Build a multi-architecture image for Ray with docker/buildx.
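For the buildx items, a multi-architecture build is a single `docker buildx build` invocation with a comma-separated `--platform` list. The sketch below only assembles that argument vector (it does not run Docker); the `buildx_command` helper and the image tag are hypothetical. One design caveat: hardcoding `GOARCH=arm64` as in the first item would make the operator binary ARM-only even in an amd64 image. With buildx, BuildKit's predefined `TARGETARCH` build argument (declared with `ARG TARGETARCH` in the build stage) can be used as `GOARCH=${TARGETARCH}` so one Dockerfile serves both platforms.

```python
import shlex
from typing import List


def buildx_command(image: str, platforms: List[str], context: str = ".", push: bool = True) -> List[str]:
    """Assemble argv for a multi-platform `docker buildx build` (nothing is executed)."""
    if not platforms:
        raise ValueError("at least one platform is required")
    cmd = ["docker", "buildx", "build", "--platform", ",".join(platforms), "-t", image]
    if push:
        # Multi-platform results are typically pushed to a registry rather than loaded locally.
        cmd.append("--push")
    cmd.append(context)
    return cmd


# Hypothetical image tag; substitute your own registry and repository.
cmd = buildx_command("registry.example.com/kuberay-operator:dev", ["linux/amd64", "linux/arm64"])
print(shlex.join(cmd))
```

Returning a list rather than a shell string keeps the arguments safe to pass straight to `subprocess.run(cmd, check=True)` without shell quoting issues.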

cc @DmitriGekhtman

https://github.com/ray-project/ray/pull/31522 enables users to run Ray in Docker containers on ARM machines (including Mac M1) and on ARM cloud instances (e.g. AWS Graviton).

I will try to run KubeRay on Mac M1 with the new images.
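Before testing, one way to confirm which platforms a published image tag actually covers is `docker manifest inspect <image>`, which prints the manifest list for multi-architecture images. The helper below is a minimal sketch that extracts the `platform` entries from that JSON; the sample document is invented for illustration and is not the real manifest of any Ray image. A single-platform image returns a plain manifest without a `manifests` array, which this sketch reports as an empty set.

```python
import json
from typing import Set, Tuple


def manifest_platforms(manifest_json: str) -> Set[Tuple[str, str]]:
    """Return {(os, arch[/variant])} listed in a manifest list or OCI image index."""
    doc = json.loads(manifest_json)
    platforms = set()
    for entry in doc.get("manifests", []):
        p = entry.get("platform", {})
        arch = p.get("architecture", "")
        if p.get("variant"):
            arch = f"{arch}/{p['variant']}"  # e.g. "arm/v7"
        platforms.add((p.get("os", ""), arch))
    return platforms


# Invented sample shaped like `docker manifest inspect` output for a two-platform image.
sample = json.dumps({
    "schemaVersion": 2,
    "manifests": [
        {"platform": {"os": "linux", "architecture": "amd64"}},
        {"platform": {"os": "linux", "architecture": "arm64"}},
    ],
})
print(sorted(manifest_platforms(sample)))
```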

cc @DmitriGekhtman