Can't serve efficientnet model

Hello,

I trained an EfficientNet model from torchvision and tried to serve it with TorchServe, but I'm not sure how to implement model.py for the efficientnet_b0 model. I used the default image_classifier handler and the code below for model.py (my problem has 12 classes):

import torch.nn as nn
from torchvision.models.efficientnet import EfficientNet, MBConvConfig

class ImageClassifier(EfficientNet):
    def __init__(self):
        super(ImageClassifier, self).__init__(MBConvConfig, dropout=0.2, num_classes=12)

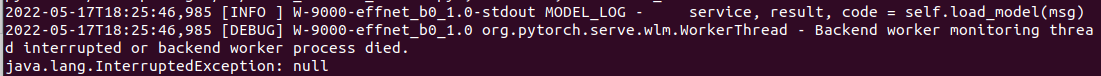

When I try to start the torchserve, I have the following error:

Has anyone tried this before and can help me? Thank you!

Can you check your logs/model_log.log? It may give a hint as to what's happening.

If not, could you please fill out the repro template for new issues so I can repro?

This is my model_log.log

2022-05-17T19:39:05,682 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Listening on port: /tmp/.ts.sock.9000

2022-05-17T19:39:05,683 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - [PID]70810

2022-05-17T19:39:05,683 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Torch worker started.

2022-05-17T19:39:05,683 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Python runtime: 3.8.13

2022-05-17T19:39:05,696 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Connection accepted: /tmp/.ts.sock.9000.

2022-05-17T19:39:05,730 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - model_name: effnet_b0, batchSize: 1

2022-05-17T19:39:06,966 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Backend worker process died.

2022-05-17T19:39:06,967 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Traceback (most recent call last):

2022-05-17T19:39:06,967 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 189, in <module>

2022-05-17T19:39:06,967 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - worker.run_server()

2022-05-17T19:39:06,968 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 161, in run_server

2022-05-17T19:39:06,968 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - self.handle_connection(cl_socket)

2022-05-17T19:39:06,968 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 123, in handle_connection

2022-05-17T19:39:06,968 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service, result, code = self.load_model(msg)

2022-05-17T19:39:06,969 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 95, in load_model

2022-05-17T19:39:06,969 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service = model_loader.load(model_name, model_dir, handler, gpu,

2022-05-17T19:39:06,969 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_loader.py", line 112, in load

2022-05-17T19:39:06,969 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - initialize_fn(service.context)

2022-05-17T19:39:06,970 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/torch_handler/vision_handler.py", line 20, in initialize

2022-05-17T19:39:06,970 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - super().initialize(context)

2022-05-17T19:39:06,970 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/torch_handler/base_handler.py", line 77, in initialize

2022-05-17T19:39:06,970 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - self.model = self._load_pickled_model(

2022-05-17T19:39:06,970 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/torch_handler/base_handler.py", line 142, in _load_pickled_model

2022-05-17T19:39:06,982 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - model = model_class()

2022-05-17T19:39:06,983 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/tmp/models/0584418d3f604a60bab9c0ae3ada26c3/model.py", line 11, in __init__

2022-05-17T19:39:06,983 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - super(ImageClassifier, self).__init__(MBConvConfig, dropout=0.2, num_classes=12)

2022-05-17T19:39:06,984 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/torchvision/models/efficientnet.py", line 183, in __init__

2022-05-17T19:39:06,984 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - raise TypeError("The inverted_residual_setting should be List[MBConvConfig]")

2022-05-17T19:39:06,984 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - TypeError: The inverted_residual_setting should be List[MBConvConfig]

2022-05-17T19:39:08,713 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Listening on port: /tmp/.ts.sock.9000

2022-05-17T19:39:08,713 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - [PID]70836

2022-05-17T19:39:08,714 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Torch worker started.

2022-05-17T19:39:08,714 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Python runtime: 3.8.13

2022-05-17T19:39:08,716 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Connection accepted: /tmp/.ts.sock.9000.

2022-05-17T19:39:08,730 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - model_name: effnet_b0, batchSize: 1

2022-05-17T19:39:09,926 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Backend worker process died.

2022-05-17T19:39:09,926 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Traceback (most recent call last):

2022-05-17T19:39:09,927 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 189, in <module>

2022-05-17T19:39:09,927 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - worker.run_server()

2022-05-17T19:39:09,927 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 161, in run_server

2022-05-17T19:39:09,927 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - self.handle_connection(cl_socket)

2022-05-17T19:39:09,928 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 123, in handle_connection

2022-05-17T19:39:09,928 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service, result, code = self.load_model(msg)

2022-05-17T19:39:09,928 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 95, in load_model

2022-05-17T19:39:09,928 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service = model_loader.load(model_name, model_dir, handler, gpu,

2022-05-17T19:39:09,929 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_loader.py", line 112, in load

2022-05-17T19:39:11,628 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Listening on port: /tmp/.ts.sock.9000

2022-05-17T19:39:11,629 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - [PID]70862

2022-05-17T19:39:11,629 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Torch worker started.

2022-05-17T19:39:11,629 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Python runtime: 3.8.13

2022-05-17T19:39:11,631 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Connection accepted: /tmp/.ts.sock.9000.

2022-05-17T19:39:11,645 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - model_name: effnet_b0, batchSize: 1

2022-05-17T19:39:12,857 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Backend worker process died.

2022-05-17T19:39:12,858 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Traceback (most recent call last):

2022-05-17T19:39:12,858 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 189, in <module>

2022-05-17T19:39:12,859 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - worker.run_server()

2022-05-17T19:39:12,859 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 161, in run_server

2022-05-17T19:39:12,859 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - self.handle_connection(cl_socket)

2022-05-17T19:39:12,859 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 123, in handle_connection

2022-05-17T19:39:12,860 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service, result, code = self.load_model(msg)

2022-05-17T19:39:12,860 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 95, in load_model

2022-05-17T19:39:12,860 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service = model_loader.load(model_name, model_dir, handler, gpu,

2022-05-17T19:39:12,860 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_loader.py", line 112, in load

2022-05-17T19:39:12,860 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - initialize_fn(service.context)

2022-05-17T19:39:15,581 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Listening on port: /tmp/.ts.sock.9000

2022-05-17T19:39:15,582 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - [PID]70868

2022-05-17T19:39:15,582 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Torch worker started.

2022-05-17T19:39:15,582 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Python runtime: 3.8.13

2022-05-17T19:39:15,584 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Connection accepted: /tmp/.ts.sock.9000.

2022-05-17T19:39:15,612 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - model_name: effnet_b0, batchSize: 1

2022-05-17T19:39:16,821 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Backend worker process died.

2022-05-17T19:39:16,824 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - Traceback (most recent call last):

2022-05-17T19:39:16,824 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 189, in <module>

2022-05-17T19:39:16,824 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - worker.run_server()

2022-05-17T19:39:16,824 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 161, in run_server

2022-05-17T19:39:16,824 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - self.handle_connection(cl_socket)

2022-05-17T19:39:16,824 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 123, in handle_connection

2022-05-17T19:39:16,825 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service, result, code = self.load_model(msg)

2022-05-17T19:39:16,825 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_service_worker.py", line 95, in load_model

2022-05-17T19:39:16,825 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - service = model_loader.load(model_name, model_dir, handler, gpu,

2022-05-17T19:39:16,825 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - File "/home/mariab/anaconda3/envs/weld_def/lib/python3.8/site-packages/ts/model_loader.py", line 112, in load
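Because the worker restarts several times, the same traceback repeats throughout the log. A small script along the following lines could pull out the first exception message. This is a sketch only: the regular expressions and the function name `first_exception` are illustrative assumptions based on the line format shown above, not part of TorchServe.

```python
import re
from typing import Optional

# Captures the message that follows the "MODEL_LOG - " separator.
_MESSAGE = re.compile(r"MODEL_LOG - (.*)$")
# A message that starts with an exception class name, e.g. "TypeError: ...".
_EXCEPTION = re.compile(r"^[A-Za-z_][\w.]*(Error|Exception): ")


def first_exception(log_text: str) -> Optional[str]:
    """Return the first 'SomeError: message' entry in the log, or None."""
    for line in log_text.splitlines():
        match = _MESSAGE.search(line)
        if match and _EXCEPTION.match(match.group(1)):
            return match.group(1)
    return None


# Two lines taken from the log above.
sample = """\
2022-05-17T19:39:06,984 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - raise TypeError("The inverted_residual_setting should be List[MBConvConfig]")
2022-05-17T19:39:06,984 [INFO ] W-9000-effnet_b0_1.0-stdout MODEL_LOG - TypeError: The inverted_residual_setting should be List[MBConvConfig]
"""
print(first_exception(sample))
```

On a real run, the contents of logs/model_log.log would be read from disk and passed in instead of `sample`.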

Deploy the model:

- Generate .mar file:

torch-model-archiver --model-name effnet_b0 --model-file model.py --serialized-file EffNetWeldModel10.pt --handler image_classifier --extra-files index_to_name.json --version 1.0

- Place the .mar file into the deployment/model-store directory:

mv effnet_b0.mar deployment/model-store/

- Deploy Torchserve:

torchserve --start --ncs --model-store deployment/model-store --models effnet_b0=effnet_b0.mar --ts-config config.properties

I trained the 'efficientnet_b0' model from torchvision and saved it using torch.save(model.state_dict(), 'EffNetWeldModel10.pt').

'config.properties' looks like:

inference_address=http://127.0.0.1:2000
management_address=http://127.0.0.1:2001
metrics_address=http://127.0.0.1:2002
grpc_inference_port=6000
grpc_management_port=6001
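With these settings, inference requests go to port 2000 rather than TorchServe's default of 8080. The sketch below only builds the request for the predictions endpoint and does not send it; the helper name `build_prediction_request` and the placeholder payload are assumptions for illustration.

```python
import urllib.request

INFERENCE_ADDRESS = "http://127.0.0.1:2000"  # inference_address from config.properties
MODEL_NAME = "effnet_b0"                     # name used in --models effnet_b0=effnet_b0.mar


def build_prediction_request(image_bytes: bytes) -> urllib.request.Request:
    """Build (but do not send) a POST request to the predictions endpoint."""
    url = f"{INFERENCE_ADDRESS}/predictions/{MODEL_NAME}"
    return urllib.request.Request(url, data=image_bytes, method="POST")


# Placeholder payload; a real call would pass the raw bytes of an image file.
request = build_prediction_request(b"placeholder image bytes")
print(request.get_method(), request.full_url)

# Once a worker is healthy, the request could be sent with:
#   with urllib.request.urlopen(request) as response:
#       print(response.read().decode())
```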

I think this looks like the error

raise TypeError("The inverted_residual_setting should be List[MBConvConfig]")
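To see which kinds of argument trigger this message, the check can be approximated with a stand-in class. The code below is a simplified sketch, not torchvision's source: `MBConvConfig` here is an empty placeholder and `check_setting` is a hypothetical helper that only mirrors what the error message implies, namely that the argument must be a sequence whose items are all config instances.

```python
from collections.abc import Sequence


class MBConvConfig:
    """Stand-in for torchvision's config class; only its identity matters here."""


def check_setting(inverted_residual_setting):
    # Approximation of the validation implied by the error message:
    # a sequence whose items are all MBConvConfig instances.
    if not (
        isinstance(inverted_residual_setting, Sequence)
        and all(isinstance(s, MBConvConfig) for s in inverted_residual_setting)
    ):
        raise TypeError("The inverted_residual_setting should be List[MBConvConfig]")


def accepted(value) -> bool:
    """Return True if the value passes the check, False if it raises TypeError."""
    try:
        check_setting(value)
        return True
    except TypeError:
        return False


print(accepted(MBConvConfig))      # the class itself
print(accepted([MBConvConfig]))    # a list holding the class
print(accepted(MBConvConfig()))    # a single instance, not in a list
print(accepted([MBConvConfig()]))  # a list of instances
```

Under this approximation only the last form passes: the class itself, a list containing the class, and a bare instance are all rejected.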

You can debug this more easily if you first get the model working on its own, without TorchServe:

import torch.nn as nn
from torchvision.models.efficientnet import EfficientNet, MBConvConfig

class ImageClassifier(EfficientNet):
    def __init__(self):
        super(ImageClassifier, self).__init__(MBConvConfig, dropout=0.2, num_classes=12)

if __name__ == "__main__":
    my_classifier = ImageClassifier()
    # etc.

I tried this and it works without torchserve.

Yes, the principal problem is with the "inverted_residual_setting" parameter from efficientnet model. I don't know how to set this parameter in super(ImageClassifier, self).__init__() from model.py

For example, the model.py for a ResNet152 model looks like:

import torch.nn as nn
from torchvision.models.resnet import Bottleneck, ResNet

class ImageClassifier(ResNet):
    def __init__(self):
        super(ImageClassifier, self).__init__(Bottleneck, [3, 8, 36, 3], num_classes=12)

and it works with TorchServe.

@msaroufim I have the same problem as @MariaBocsa.

The model works fine for inference when it is built like this:

import torch.nn as nn
from torchvision.models import efficientnet_b0

model = efficientnet_b0(pretrained=True)

# Replace the default head with a custom 12-class classifier
sequential_layers = nn.Sequential(
    nn.Dropout(p=0.2, inplace=True),
    nn.Linear(in_features=1280, out_features=625, bias=True),
    nn.ReLU(),
    nn.Linear(in_features=625, out_features=128, bias=True),
    nn.ReLU(),
    nn.Linear(in_features=128, out_features=12, bias=True)
)
model.classifier = sequential_layers

But when I supply the following model.py to archive the model for serving, it does not work:

# Reference: https://github.com/pytorch/vision/blob/master/torchvision/models/efficientnet.py

import torch.nn as nn
from torchvision.models import efficientnet_b0

class ImageClassifier(efficientnet_b0):
    def __init__(self):
        super(ImageClassifier, self).__init__(pretrained=True)
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.2, inplace=True),
            nn.Linear(in_features=1280, out_features=625, bias=True),
            nn.ReLU(),
            nn.Linear(in_features=625, out_features=128, bias=True),
            nn.ReLU(),
            nn.Linear(in_features=128, out_features=12, bias=True)
        )

Hi @MariaBocsa, sorry for the delay. One idea: the error says it expects a list of configs instead of a single one, so you could pass in a list by wrapping the argument in []:

class ImageClassifier(EfficientNet):
    def __init__(self):
        super(ImageClassifier, self).__init__([MBConvConfig], dropout=0.2, num_classes=12)

The other problem could be that you're passing in a class definition instead of an object, so you may need to do something like:

class ImageClassifier(EfficientNet):
    def __init__(self):
        config = MBConvConfig(pass_in_parameters_here)
        # Pass an instance, still wrapped in a list as the error message requires
        super(ImageClassifier, self).__init__([config], dropout=0.2, num_classes=12)

This doesn't feel like a TorchServe bug to me, but if you're still stuck, let me know. I can repro fastest if you link me your .mar file.