tidb-operator

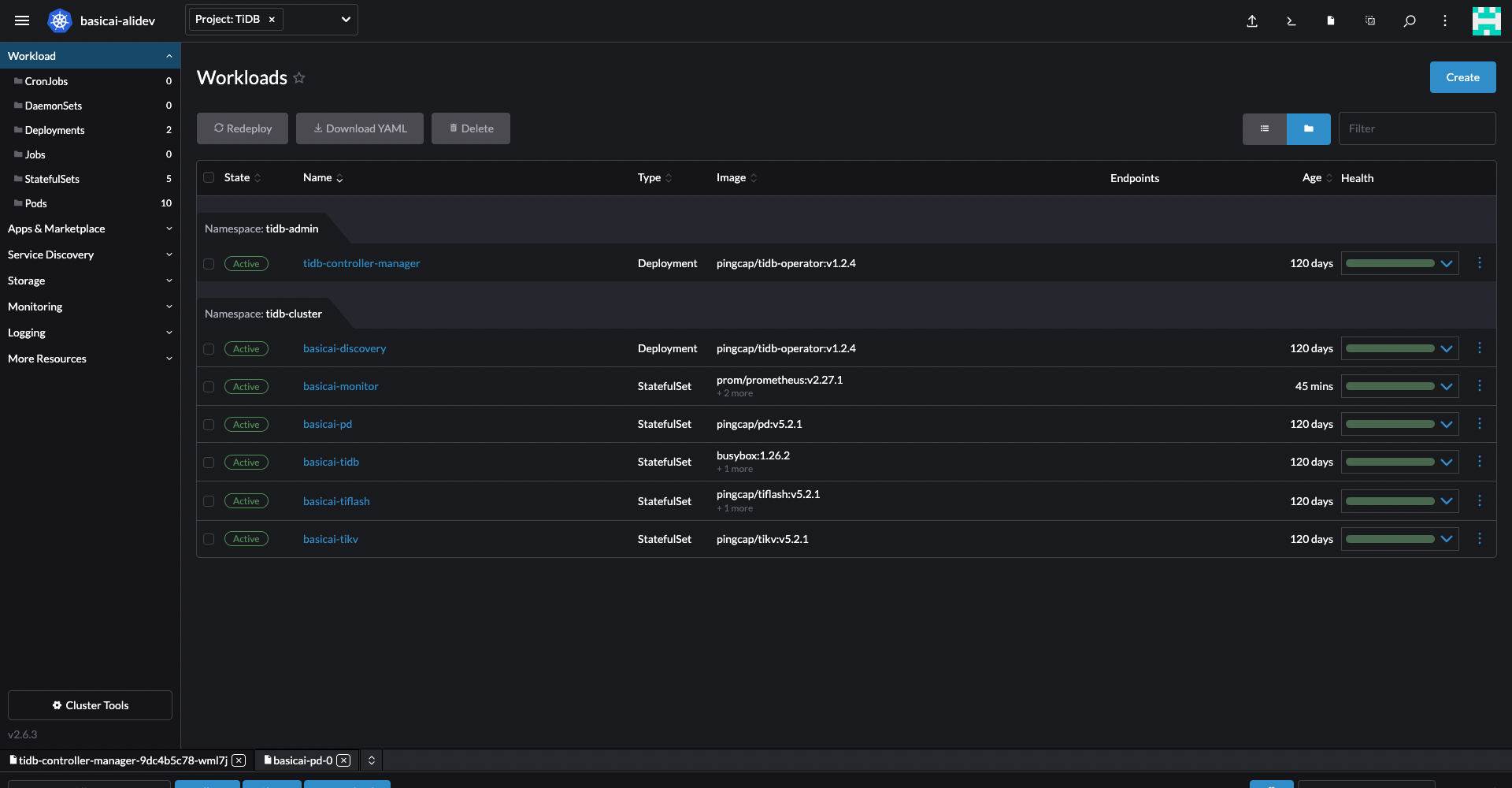

TiKV running replicas do not match the number in the TidbCluster CR

Bug Report

What version of Kubernetes are you using? 1.20

What version of TiDB Operator are you using? 1.2

What's the status of the TiDB cluster pods?

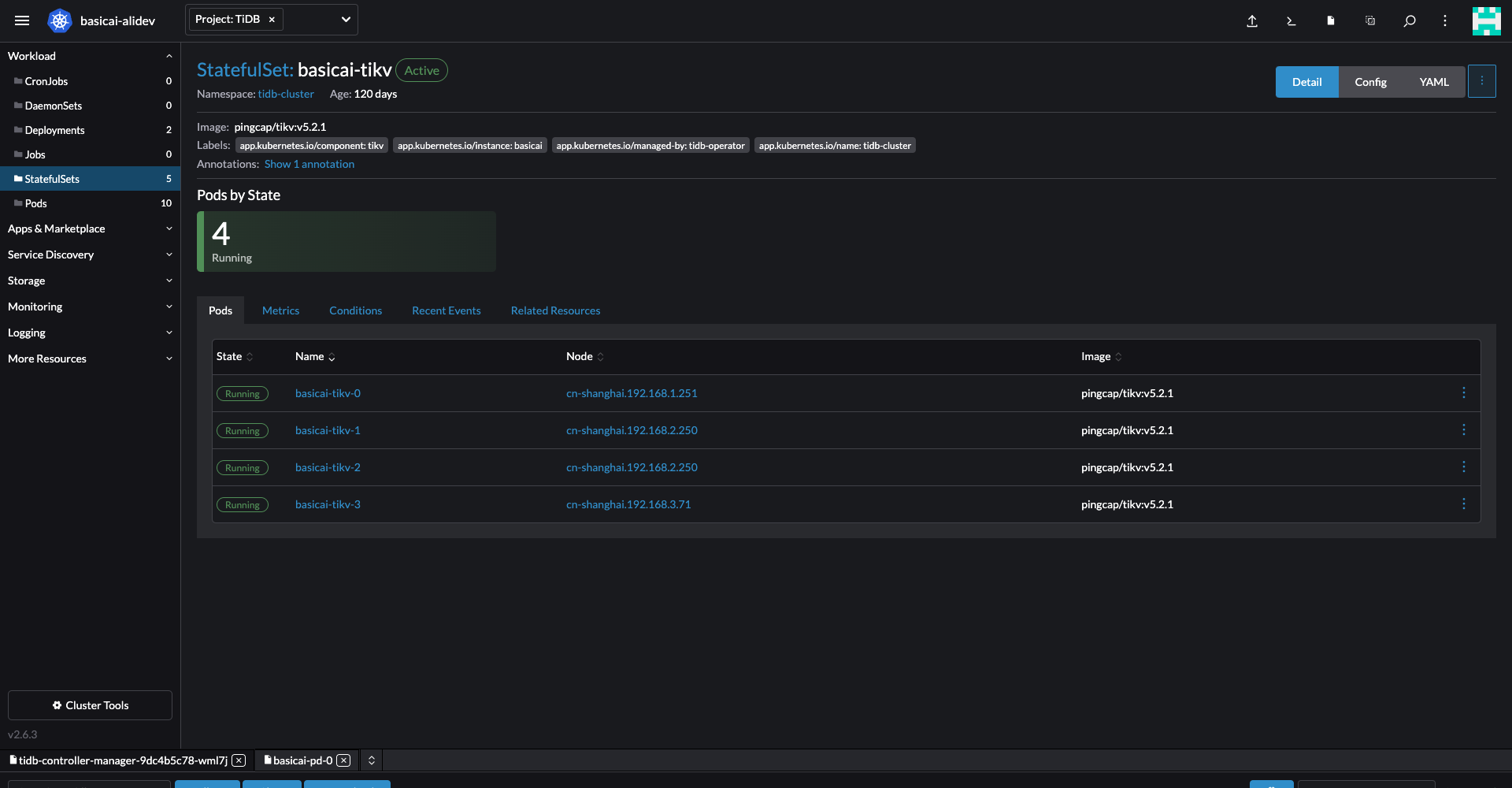

$ kubectl get po -n tidb-cluster -o wide
NAME                                 READY   STATUS    RESTARTS   AGE    IP             NODE                        NOMINATED NODE   READINESS GATES
basicai-discovery-5d7d4dc88b-lr5xv   1/1     Running   0          110m   10.101.3.86    cn-shanghai.192.168.3.71    <none>           <none>
basicai-monitor-0                    3/3     Running   0          40m    10.101.3.211   cn-shanghai.192.168.1.251   <none>           <none>
basicai-pd-0                         1/1     Running   0          110m   10.101.3.207   cn-shanghai.192.168.1.251   <none>           <none>
basicai-tidb-0                       2/2     Running   0          104m   10.101.3.88    cn-shanghai.192.168.3.71    <none>           <none>
basicai-tiflash-0                    4/4     Running   0          109m   10.101.3.141   cn-shanghai.192.168.2.250   <none>           <none>
basicai-tikv-0                       1/1     Running   0          105m   10.101.3.208   cn-shanghai.192.168.1.251   <none>           <none>
basicai-tikv-1                       1/1     Running   0          106m   10.101.3.143   cn-shanghai.192.168.2.250   <none>           <none>
basicai-tikv-2                       1/1     Running   0          107m   10.101.3.142   cn-shanghai.192.168.2.250   <none>           <none>
basicai-tikv-3                       1/1     Running   0          12m    10.101.3.89    cn-shanghai.192.168.3.71    <none>           <none>

What did you do? I migrated a TidbCluster from one node pool to another in Aliyun ACK, but two of the three TiKV Pods were scheduled onto the same host. So I tried increasing the TiKV replicas to 4 and then decreasing them back to 3, but the number of running replicas is still 4.
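
Whether two TiKV Pods share a host can be read off the NODE column of `kubectl get po -o wide`. A minimal sketch, assuming TiKV Pod names follow the `basicai-tikv-N` pattern shown above and that NODE is the seventh column of the `-o wide` output (as in the listing in this report); the helper name `tikv_pods_per_node` is hypothetical:

```shell
# tikv_pods_per_node CLUSTER  (hypothetical helper)
# Reads `kubectl get po -o wide` output on stdin and prints "<count> <node>"
# for every node hosting at least one TiKV Pod whose name starts with CLUSTER-tikv-.
# Assumption: NODE is the 7th whitespace-separated column, as in the listing above.
tikv_pods_per_node() {
  awk -v prefix="$1-tikv-" 'index($1, prefix) == 1 { print $7 }' \
    | sort | uniq -c | awk '{ print $1, $2 }'
}

# Usage against a live cluster:
#   kubectl get po -n tidb-cluster -o wide | tikv_pods_per_node basicai
```

For the listing above this reports a count of 2 for cn-shanghai.192.168.2.250 (basicai-tikv-1 and basicai-tikv-2), which is the co-location described in the report.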

TidbCluster CR:

apiVersion: pingcap.com/v1alpha1
kind: TidbCluster
metadata:
  name: basicai
spec:
  version: v5.2.1
  nodeSelector:
    dedicated: infra
  pd:
    replicas: 1
    storageClassName: alicloud-disk-tidb-pd
    requests:
      storage: "20Gi"
  tidb:
    replicas: 1
    service:
      type: ClusterIP
  tikv:
    replicas: 3
    storageClassName: alicloud-disk-tidb-tikv
    requests:
      storage: "200Gi"
  tiflash:
    replicas: 1
    storageClaims:
      - resources:
          requests:
            storage: "200Gi"
        storageClassName: alicloud-disk-tidb-tiflash

It seems to be related to my removing schedulerName: tidb-scheduler from the TidbCluster spec; I also manually deleted the tidb-scheduler Deployment. I tried adding schedulerName: tidb-scheduler back, but the tidb-scheduler Deployment was not recreated automatically.

You need to check the TiDB Operator logs for errors; the operator may be preventing you from modifying the replicas.
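
One way to follow this suggestion is to pull the controller-manager log and keep only the warning and error lines that mention the cluster. A minimal sketch, assuming the operator was installed with the default settings (namespace `tidb-admin`, Deployment `tidb-controller-manager`) and writes klog-formatted lines, which start with a severity letter (I, W, E, F) followed by the date; the helper name `operator_problems` is hypothetical:

```shell
# operator_problems CLUSTER  (hypothetical helper)
# Reads klog-formatted TiDB Operator log lines on stdin and keeps only
# warning (W), error (E) and fatal (F) lines that mention CLUSTER.
operator_problems() {
  grep -E '^[WEF][0-9]{4} ' | grep -F -- "$1"
}

# Usage (assumes the default namespace and Deployment name):
#   kubectl logs -n tidb-admin deploy/tidb-controller-manager | operator_problems basicai
```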

@jaggerwang What's the status of the store for tikv-3? When you scale in TiKV, the operator deletes the TiKV store: the store state becomes Offline, PD starts migrating the Region replicas to the other TiKV stores, and only once the store state becomes Tombstone is the Pod deleted.
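
The scale-in sequence in this comment can be summarized as a small decision table keyed on the PD store state. This is a simplified model of the behavior described above, not TiDB Operator's actual code; the function name `scale_in_next_step` is hypothetical, and any state other than the three mentioned (for example Down) falls into a catch-all branch:

```shell
# scale_in_next_step STATE  (hypothetical helper, simplified model)
# Prints the next step for a TiKV store that is being scaled in:
# Up -> store deleted -> Offline -> PD migrates Region replicas -> Tombstone -> Pod deleted.
scale_in_next_step() {
  case "$1" in
    Up)        echo "delete-store" ;;        # ask PD to remove the store
    Offline)   echo "wait-for-migration" ;;  # PD is still moving replicas off the store
    Tombstone) echo "delete-pod" ;;          # store is empty; the Pod can be removed
    *)         echo "investigate" ;;         # any other state is outside this model
  esac
}

# The real state can be read with pd-ctl's store command, e.g. (assuming the
# PD image ships pd-ctl in its working directory):
#   kubectl exec -n tidb-cluster basicai-pd-0 -- ./pd-ctl store
```

Under this model, a store that stays Offline keeps its Pod, so four TiKV Pods keep running until PD finishes migrating the replicas.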

This issue is stale because it has been open 60 days with no activity. Remove stale label or comment or this will be closed in 15 days