s3-backup

No backup from commits from other actions?

This is great, thanks. Is there a trigger I could use that works when commits are made from other actions?

Hi @eggbean

You might be running into this issue: https://github.com/peter-evans/create-pull-request/blob/main/docs/concepts-guidelines.md#triggering-further-workflow-runs

The short version is that to allow automated commits to trigger further workflow runs, you must use a personal access token (PAT) with the action that performs the commit. The default `GITHUB_TOKEN` will not allow it.

Ah okay thanks. Makes sense.

I've added this action to some repositories that are important to me, but I've noticed that a run shows as successful in the list of workflow runs, and only by looking at the log can you see that it failed. It failed because I had not added the AWS secrets yet. Does this mean I wouldn't be aware of the backup failing in the future? If I changed the access token, the backups would fail and I wouldn't be alerted.

Also, on a large repository I noticed that the action takes a couple of minutes to run every time, even when only small changes were made. So is the action clobbering S3 with the entire repository every time, or is it spending that time on file comparison? Since no compute happens on the S3 side, I suspect it is actually uploading everything. Wouldn't that make it a poor fit for large repositories with many commits, given the network fees? Thanks.

This action is simply a wrapper around the MinIO client (`mc`) `mirror` command: https://github.com/peter-evans/s3-backup/blob/master/entrypoint.sh#L18

I'm fairly sure it doesn't copy the whole repository; it just syncs the changes.

I would expect the command to fail if the access token is incorrect, but I haven't tested that specifically.
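Whether or not `mc` itself exits non-zero on a bad token, the step only shows as failed if that exit code actually reaches the runner. One possible reason for a green run after a failure is that the exit status gets lost, for example when a later command in a script succeeds; this has not been checked against `entrypoint.sh`. A minimal Python sketch of a wrapper that fails loudly, assuming you run the sync from a script of your own (`run_or_fail` is a hypothetical helper, not part of this action):

```python
import subprocess
import sys
from typing import Sequence


def run_or_fail(cmd: Sequence[str]) -> int:
    """Run cmd and return its exit code, flagging failures so they are visible in the run.

    Hypothetical helper for illustration; it is not part of the s3-backup action.
    """
    # Flush our own output first so log lines stay in order with the child's output.
    sys.stdout.flush()
    try:
        returncode = subprocess.run(list(cmd)).returncode
    except FileNotFoundError:
        # Follow the shell convention: 127 means "command not found".
        returncode = 127
    if returncode != 0:
        # "::error::" is a GitHub Actions workflow command; it adds an error annotation to the run.
        print(f"::error::{' '.join(cmd)} failed with exit code {returncode}", flush=True)
    return returncode
```

A wrapper script would end with `sys.exit(run_or_fail([...]))`, passing the `mc mirror` arguments it uses, so a non-zero exit marks the step as failed. In a shell entrypoint, `set -e` has a similar effect: the script stops at the first failing command and exits with its status.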

I've had this running on my most important repositories that I want to make sure I can never lose. A couple of these get multiple commits per day. What I am seeing is that the entire repository is indeed copied to S3 with every push.

I have versioning enabled on these buckets, and even files that have never changed have dozens of versions on S3, with every file showing as updated at the time of the last push.

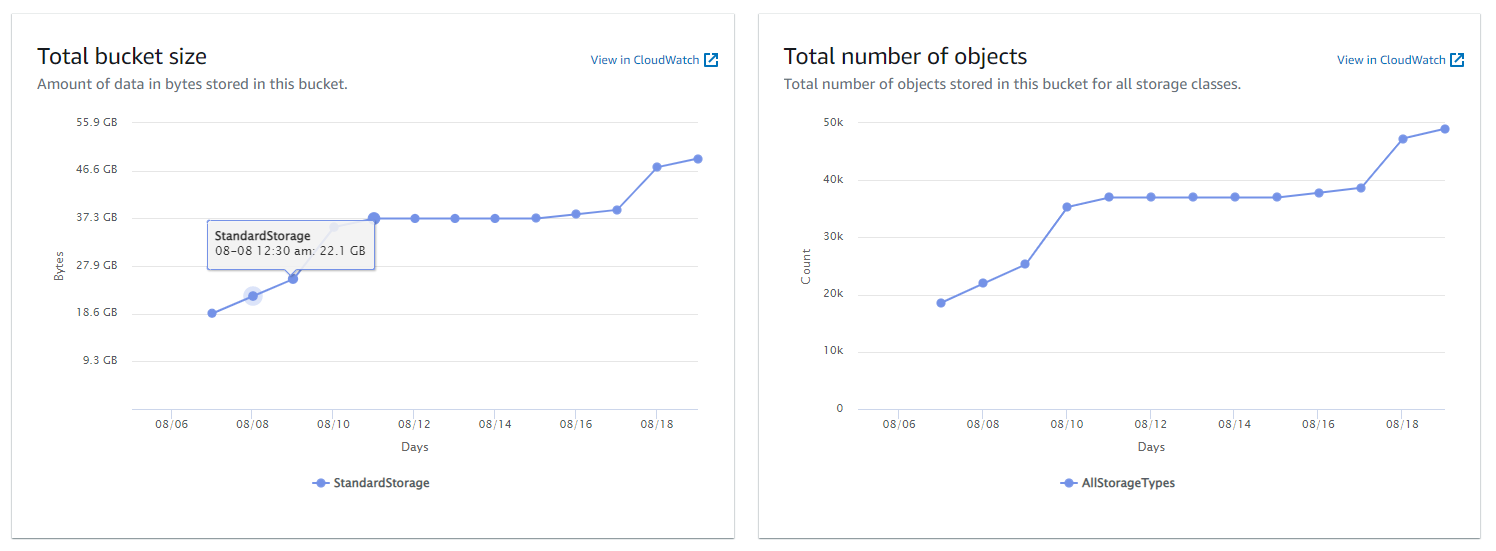

One repository is over a gigabyte in size, so with the versioning the bucket has snowballed to nearly 50GB.
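A rough check on those numbers, assuming versioning keeps about one extra full copy of the repository per push and ignoring files added or removed over time: with bucket size $S_{\text{bucket}}$ and repository size $S_{\text{repo}}$, the number of stored copies $N$ is roughly

```latex
N \approx \frac{S_{\text{bucket}}}{S_{\text{repo}}} < \frac{50\ \text{GB}}{1\ \text{GB}} = 50
```

so the bucket holds fewer than 50 full copies of the repository, because the bucket is just under 50 GB and the repository is just over 1 GB.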

I'm going to turn off versioning for now, as I have that in the git history anyway, but there are still the network ingress fees and the unnecessary clobbering.

I'm using the `--overwrite` and `--remove` arguments, as in your example, and I have looked through MinIO's documentation but cannot find any other arguments that would stop this from happening.
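The symptom described here, with every object rewritten on each push, is what you would expect from any sync that treats a newer modification time as a change. A fresh checkout writes every file anew, because git does not preserve file timestamps. Whether `mc mirror` actually compares timestamps this way is an assumption, not something confirmed in this thread. The Python sketch below shows the content-based alternative: hash every local file and compare against a remote manifest of the same shape. The function names are hypothetical, and building the remote manifest is left open. One option is S3 ETags, which equal the object's MD5 only for single-part uploads not encrypted with SSE-C or SSE-KMS.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def md5_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex MD5 of a file's contents, read in chunks so large files don't fill memory."""
    digest = hashlib.md5()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def local_manifest(root: Path) -> Dict[str, str]:
    """Map each file under root (as a POSIX-style relative path) to its MD5 digest."""
    return {
        p.relative_to(root).as_posix(): md5_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def plan_sync(local: Dict[str, str], remote: Dict[str, str]) -> Dict[str, List[str]]:
    """Decide what to upload/delete by content hash alone; modification times are ignored."""
    return {
        # New files, or files whose content differs from the remote copy.
        "upload": sorted(k for k, h in local.items() if remote.get(k) != h),
        # Remote objects with no local counterpart (what a --remove style mirror would delete).
        "delete": sorted(k for k in remote if k not in local),
    }
```

Because only content is compared, touching a file or re-cloning the repository leaves the plan empty. The trade-off is reading every local file to hash it on each run, which costs local CPU and disk time but no upload bandwidth.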