openvino_contrib

openvino_contrib copied to clipboard

openvino_contrib copied to clipboard

Converted Mask-RCNN model trained on custom dataset does not return any meaningful detections

Hey there,

based on the Mask-RCNN example provided by @dkurt I tried to convert my own model which is based on Mask-RCNN with a ResNet50 backbone but trained on a custom dataset. Converting the model to IR works but when running the inference, the model doesn't return any meaningful detections.

I'm working with OpenVINO 2021.4 on Ubuntu 20.04 (x64), torch 1.10.0, torchvision 0.11.1, mo_pytorch commit https://github.com/openvinotoolkit/openvino_contrib/commit/4de1104e4e68222623b7dac7f97f0fcc53d17ebf (as suggested by @dkurt because of OpenVINO 2021.4).

I modified the Mask-RCNN example in the following way:

inst_classes = [

"__background__",

"aisle post",

"palette"

] # The model is trained on only 2 classes + background

[...]

# Load origin model

model = models.detection.maskrcnn_resnet50_fpn(pretrained=False, num_classes=len(inst_classes))

model.load_state_dict(torch.load("aisle_post_detector_maskrcnn_resnet50.pth"))

model.eval()

When I remove probability threshold filter in the example code and print all detections, the model returns the following:

[0. 2. 0.09952605 0. 0.12424597 0.47801715 1. ]

[-1. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0.]

[...]

Converting the same model to ONNX and running the inference using the ONNX runtime on the same input image returns three detections, one for class "aisle post" and two for class "palette" with probabilities > 0.9 each.

For reference here are some additional files:

Mask-RCNN example command line output, default model: out_default_model.txt Mask-RCNN example command line output, custom trained model: out_own_model.txt mo_pytorch conversion output (XML), default model: Mask_RCNN_default_model.xml.txt mo_pytorch conversion output (XML), custom trained model: Mask_RCNN_own_model.xml.txt

When comparing these files, lots of changes are shown, especially in the XML files. But I don't understand all the effects these changes might have.

Any help is very appreciated! Let me know if you need any additional information...

Best regards,

Jens

Hi!

First of all, please check if you training dataset has background as 0th class. If not, can you try to change background_label_id="0" to background_label_id="2" in the .xml?

If it's possible, please share trained weights and sample picture to validate.

Hey @dkurt,

yes, the training dataset has background as its 0th class. Here's the configuration:

"labels": [

"__background__",

"aisle post",

"palette"

]

I've uploaded the PyTorch weights file here: https://1drv.ms/u/s!Atf7EXiTPJ-qiJgtIh-lWxKBUkqwBA?e=3DOypo The input shape I used for testing is [1, 3, 300, 300].

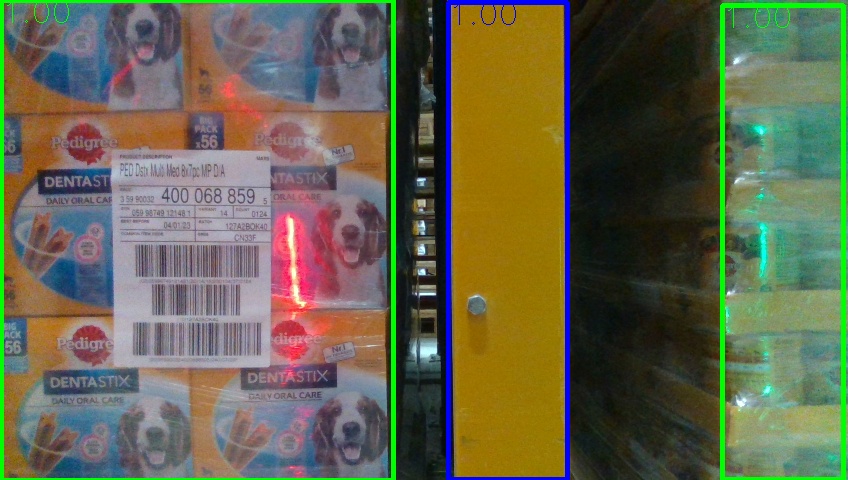

Here is the image that I used for testing:

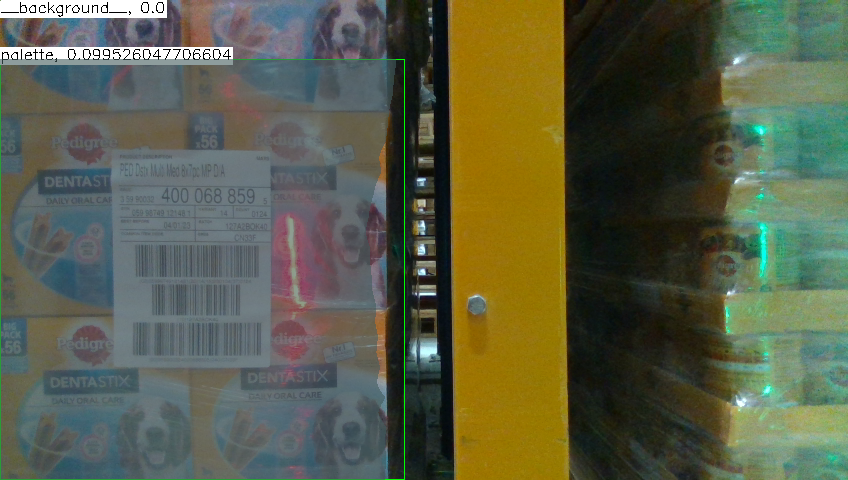

Here is the output of the same model exported to ONNX and run using the ONNX Runtime:

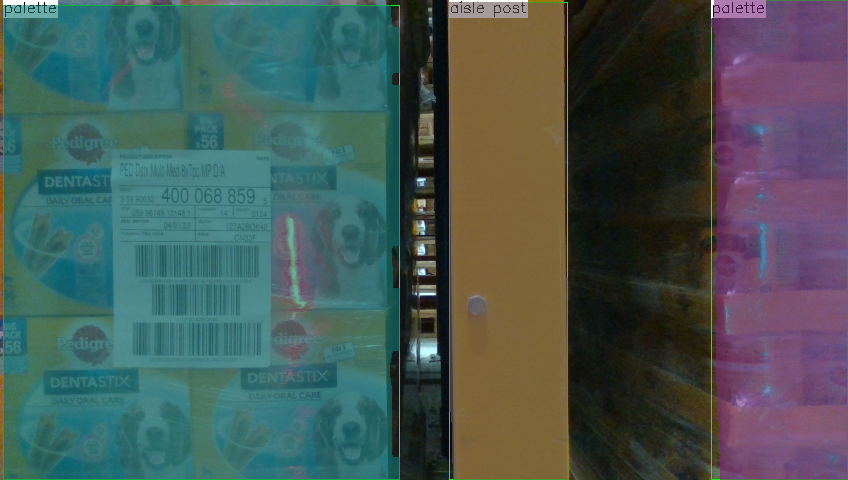

And here is the output I get from the modified mo_pytorch sample:

Thanks for your help :slightly_smiling_face:

@jsiebert, thanks for catching this! The thing is that by default, model has some parameters named min_size and max_size (800 and 1333 are default values correspondingly ). So in PyTorch inputs are resized to specific input shape at the beginning (see _resize_image_and_masks). So for your image of size 480x848 the actual input tensor shape is 754x1333 (printed from PyTorch internals).

Sorry for confusion, that mush be confusing. Let's keep this ticket open so we propose more clear solution. At this moment, you can use such shapes during conversion to achieve exact results. Also, I've found one bug with wrong proposals computation (fix https://github.com/openvinotoolkit/openvino_contrib/pull/272)

import torch

model = models.detection.maskrcnn_resnet50_fpn(pretrained=False, num_classes=len(inst_classes))

model.load_state_dict(torch.load("aisle_post_detector_maskrcnn_resnet50.pth", map_location=torch.device('cpu')))

model.eval()

# Convert model to OpenVINO IR

img_h = 754

img_w = 1333

mo_pytorch.convert(model,

input_shape=[1, 3, img_h, img_w],

model_name="Mask_RCNN",

scale=255,

reverse_input_channels=True)

# Prepare input image

img = cv.imread(args.input)

inp = cv.resize(img, (img_w, img_h)) # OpenCV target size is (width, height)

inp = np.expand_dims(inp.astype(np.float32).transpose(2, 0, 1), axis=0)

# Do inference

ie = IECore()

net = ie.read_network('Mask_RCNN.xml')

exec_net = ie.load_network(net, args.device)

out = exec_net.infer({'input': inp})

detections, masks, _ = out.values()

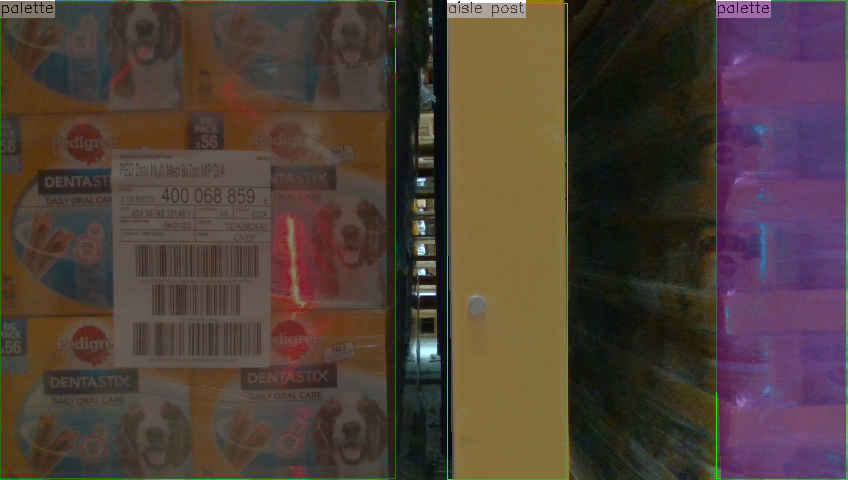

result for 754x1333

However if you process 300x300 input image - Torchvision resizes input to 800x800 so you need to use that input shape in OpenVINO sample:

img_h = 800

img_w = 800

result for 800x800

Hey @dkurt,

I've applied the workaround you've proposed as well as the fix for the proposals computation. Now inference works like a charm! Eagerly waiting for the final solution to this issue :wink: