Client Disconnected during Fine Tune

Describe the bug

When running openai api fine_tunes.create ... the streaming cli response is continually interrupted. A bit of investigation revealed that the underlying exception is Invalid chunk encoding "Connection broken: InvalidChunkLength(got length b'', 0 bytes read)".

As we iterate over the events on the stream, there is a ProtocolError because a response is coming back with no bytes in it.

The full call stack:

File "\lib\site-packages\openai\cli.py", line 537, in _stream_events

for event in events:

File "\lib\site-packages\openai\api_resources\fine_tune.py", line 158, in <genexpr>

return (

File "\lib\site-packages\openai\api_requestor.py", line 611, in <genexpr>

return (

File "\lib\site-packages\openai\api_requestor.py", line 107, in parse_stream

for line in rbody:

File "\lib\site-packages\requests\models.py", line 865, in iter_lines

for chunk in self.iter_content(

File "\lib\site-packages\requests\models.py", line 818, in generate

raise ChunkedEncodingError(e)

requests.exceptions.ChunkedEncodingError: ("Connection broken: InvalidChunkLength(got length b'', 0 bytes read)", InvalidChunkLength(got length b'', 0 bytes read))
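For anyone hitting the same trace, here is a minimal sketch of how a caller might survive the dropped chunk: reopen the event stream and skip the events already seen. The names `resilient_events` and `open_stream` are hypothetical and not part of the `openai` package, and the sketch assumes that a reopened stream replays events from the beginning.

```python
import time
from typing import Any, Callable, Iterable, Iterator, Tuple, Type


def resilient_events(
    open_stream: Callable[[], Iterable[Any]],
    retry_on: Tuple[Type[BaseException], ...],
    max_retries: int = 5,
    delay_seconds: float = 1.0,
) -> Iterator[Any]:
    """Yield events from open_stream(), reopening it when it breaks.

    Assumption: a reopened stream replays events from the beginning, so the
    first `seen` events of each new stream are skipped.
    """
    seen = 0
    retries = 0
    while True:
        try:
            for index, event in enumerate(open_stream()):
                if index < seen:
                    continue  # already delivered before the disconnect
                seen += 1
                retries = 0  # progress was made, so reset the retry budget
                yield event
            return  # the stream ended normally
        except retry_on:
            retries += 1
            if retries > max_retries:
                raise
            time.sleep(delay_seconds)
```

With the 0.27-era SDK, `open_stream` would probably be something like `lambda: openai.FineTune.stream_events(id="ft-...")` and `retry_on` would be `(requests.exceptions.ChunkedEncodingError,)`, but I have not verified that against the server's replay behaviour.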

To Reproduce

- Update the cli.py to not discard all exceptions while streaming events

- Start a fine tune and wait for error

Code snippets

No response

OS

Windows

Python version

3.10

Library version

0.27.0

I am seeing the same behavior.

(venv) coldadmin@big-potato:~/projects/chatgpt-custom$ pip list | grep -i openai

openai 0.27.2

(venv) coldadmin@big-potato:~/projects/chatgpt-custom$ openai api fine_tunes.follow -i ft-potato

[2023-03-19 10:44:18] Created fine-tune: ft-qR9e3uI7JKaovyIZEWpRu7W4

[2023-03-19 10:51:58] Fine-tune costs $0.00

[2023-03-19 10:51:59] Fine-tune enqueued. Queue number: 0

[2023-03-19 10:52:15] Fine-tune started

Stream interrupted (client disconnected).

To resume the stream, run:

openai api fine_tunes.follow -i ft-potato

The job itself is not finished and I can restart the follow.

Got the same error when I tried fine-tuning with the 'Ada' and 'Curie' models. Please find the error message below. The versions are openai 0.27.2 and 0.25.0, with Python 3.9.12, and I am working on a MacBook Air.

Found potentially duplicated files with name 'data_prepared.jsonl', purpose 'fine-tune' and size 1941 bytes

file-62iG3zdcks0HnFnEnYXnfqCq

file-6jaJJ52ZDPGEAUcpuViZpAKW

Enter file ID to reuse an already uploaded file, or an empty string to upload this file anyway: file-6jaJJ52ZDPGEAUcpuViZpAKW

Reusing already uploaded file: file-6jaJJ52ZDPGEAUcpuViZpAKW

Created fine-tune: ft-qQWHmdbLTtCP7cHfIUPZCFS2

Streaming events until fine-tuning is complete...

(Ctrl-C will interrupt the stream, but not cancel the fine-tune)

[2023-03-20 12:19:21] Created fine-tune: ft-qQWHmdbLTtCP7cHfIUPZCFS2

Stream interrupted (client disconnected).

To resume the stream, run:

openai api fine_tunes.follow -i ft-qQWHmdbLTtCP7cHfIUPZCFS2

(base) xxxx@MBA-FVFHH1LBQ6LX NBA % openai api fine_tunes.create -t data_prepared.jsonl -m ada

Found potentially duplicated files with name 'data_prepared.jsonl', purpose 'fine-tune' and size 1941 bytes

file-62iG3zdcks0HnFnEnYXnfqCq

file-6jaJJ52ZDPGEAUcpuViZpAKW

Enter file ID to reuse an already uploaded file, or an empty string to upload this file anyway:

Upload progress: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████| 1.94k/1.94k [00:00<00:00, 794kit/s]

Uploaded file from data_prepared.jsonl: file-tVPLVKPPuMeyIaCmCI9NBOBZ

Error: Error communicating with OpenAI: ('Connection aborted.', ConnectionResetError(54, 'Connection reset by peer'))

Same problem. I was going to do a demo tomorrow; seems I won't anymore :(

Any solution yet??

Later I tried at least 10 times and it was successful only twice. I did not make any code changes. The fine-tuning was interrupted the first time, but I simply re-ran it using the suggested command.

I believe that the size of the data and the number of jobs ahead of you matter.

Could you write the command and say what data size and number of jobs you have used so I can try to replicate it? @FSDSAbhi

So far, nothing has worked.

I reduced my training data by 40% and re-ran the fine-tuning process. Initially it failed. Then I re-ran the suggested command, which is below:

openai api fine_tunes.follow -i ft-qQWHmdbLTtCP7cHfIUPZCFS2

thanks, I will give it a try

Did it work?

same problem(

For anyone else who is struggling with the same thing, I found a way that worked for me. Just to be clear beforehand, I didn't get the same error as the author. The issue I had, which many people in this thread also have, was that the client kept getting disconnected, so no fine-tuning was occurring.

To solve this, I created the file via Python :

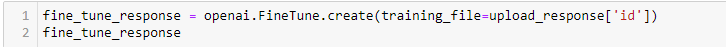

Then, I used the id to create a fine tuning response

After that, if you print out the response, this should come up:

If you look at the status, in this particular instance, it says pending which means that it is queued and the processing will start shortly. Soon enough, the status will change to processing and eventually, completed. We can just call the earlier code again and the status will change as the processing goes through. Earlier, I was using CLI where I was facing the client disconnection often.

Hope this works out for you too ! Let me know if you have any questions.

@sparrshn24 no, the @FSDSAbhi solution didn't work for me :( I ran it multiple times and still got

Stream interrupted (client disconnected).

To resume the stream, run:

openai api fine_tunes.follow -i ft-<ft process id>

@sparrshn24, thanks! the code ran, but the status is pending forever ::cries::

If anyone also wants to try, here is the copy and paste version:

import openai

openai.api_key = '<insert your api key here>'

file_name = "<insert the name of your json file here>"

# Upload the training file, then start a fine-tune that references it by id.

upload_response = openai.File.create(file=open(file_name, "rb"), purpose="fine-tune")

file_id = upload_response.id

fine_tune_response = openai.FineTune.create(training_file=file_id)

You are right. The status stays pending forever now. I will fix it and be back. I made it work on two occasions, so I am pretty sure there is a solution. Thanks for letting me know.

@cassiasamp I believe the fine tuning is running now. So, first I fetched all my fine tunes using:

!openai api fine_tunes.list

After that, I removed all the fine tunes which were still pending.

I did this by :

!openai api fine_tunes.cancel -i "id of the fine tune"

Be careful not to confuse the id of the fine tune with the file id.

Then, I began a fresh batch. But I also noticed that, if you call the response, it still shows pending. Instead of calling the response object again, I used this command to track the progress:

!openai api fine_tunes.get -i "id of the fine tune"

The result :

One epoch done and rest on their way! Tell me if this works for you too.
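The same clean-up can probably be done from Python instead of the CLI. This is only a sketch: `pending_fine_tune_ids` and `cancel_pending` are hypothetical helper names, the listing is assumed to have the shape `{"data": [{"id": ..., "status": ...}]}`, and the `openai.FineTune.list` / `openai.FineTune.cancel` calls assume the legacy 0.27-era SDK.

```python
from typing import Any, Dict, List


def pending_fine_tune_ids(listing: Dict[str, Any]) -> List[str]:
    """Return the ids of the jobs whose status is "pending".

    Assumption: `listing` looks like {"data": [{"id": ..., "status": ...}]}.
    """
    return [
        job["id"]
        for job in listing.get("data", [])
        if job.get("status") == "pending"
    ]


def cancel_pending() -> None:
    """Cancel every pending fine-tune (assumes the legacy openai 0.27-era SDK)."""
    import openai  # imported lazily so the helper above works without the SDK

    for job_id in pending_fine_tune_ids(openai.FineTune.list()):
        # This takes the fine-tune id (ft-...), not the file id (file-...).
        openai.FineTune.cancel(id=job_id)
```

Calling `cancel_pending()` cancels every pending job on the account, so check the list first if some of them should keep running.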

Hi Everyone,

I keep an eye on the fine-tuning system at OpenAI. The system's working fine, but we often have rather long backlogs, which is probably what's causing these delays. Feel free to poll for your job status if the stream disconnects. We don't have a root cause for these disconnects yet, but polling should work as a workaround in the meantime.
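A minimal sketch of the polling workaround, for anyone who wants to script it. `wait_for_fine_tune` and `fetch_status` are hypothetical names, and the set of terminal statuses is an assumption about the legacy fine-tunes endpoint rather than something confirmed in this thread.

```python
import time
from typing import Callable

# Assumed terminal statuses for the legacy fine-tunes endpoint.
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_fine_tune(
    fetch_status: Callable[[], str],
    poll_seconds: float = 60.0,
    max_polls: int = 1000,
) -> str:
    """Call fetch_status() until it returns a terminal status, then return it."""
    for _ in range(max_polls):
        status = fetch_status()
        if status in TERMINAL_STATUSES:
            return status
        time.sleep(poll_seconds)  # job is still queued or running
    raise TimeoutError("fine-tune did not finish within the polling budget")
```

With the 0.27-era SDK, `fetch_status` could be something like `lambda: openai.FineTune.retrieve(id="ft-...")["status"]`. Polling avoids the long-lived streaming connection entirely, which is why it sidesteps the disconnects.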

thanks @sparrshn24! everything ran fine 🙌🏽 but I also believe it was because, for some reason, the fine tune completed yesterday

"message": "Completed epoch 4/4",

"object": "fine-tune-event"

I had some trouble saving the contents as a csv file, 'cause the API returns a SList. Since it is a bit out of the scope here, if anyone needs the code, let me know. But we seem to be on not very firm ground, thanks for letting us know @hallacy! I will probably stay away from live demos for a while 😅
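In case it helps with the CSV part, here is a rough sketch that writes a list of event dictionaries to CSV text with the standard library only. `events_to_csv` is a hypothetical helper, and the `created_at` and `level` fields are assumptions; only `message` and `object` appear in the output quoted above.

```python
import csv
import io
from typing import Any, Dict, Iterable

# Columns to keep; "created_at" and "level" are assumed field names.
FIELDS = ["created_at", "level", "message"]


def events_to_csv(events: Iterable[Dict[str, Any]]) -> str:
    """Render fine-tune events as CSV text, one row per event."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for event in events:
        # Missing fields become empty cells; extra fields are dropped.
        writer.writerow({name: event.get(name, "") for name in FIELDS})
    return buffer.getvalue()
```

The returned string can be written to disk with a plain `open("events.csv", "w", newline="")`.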

This should be resolved now. If the problem persists in the latest version of the SDK, please open a new issue.