text-generation-webui

"RuntimeError: probability tensor contains either `inf`, `nan` or element < 0" 8bit mode not working 1070 Ti.

Describe the bug

I was able to get the smaller models working (OPT 1.3B, Pygmalion 1.3B), but as suspected they were lackluster. I'm on Windows 10 with a GTX 1070 Ti (8 GB of VRAM) and thought there was no chance of using the bigger models, but there are all these Reddit posts about halving the memory footprint with 8-bit loading via bitsandbytes:

- https://www.reddit.com/r/MachineLearning/comments/11kwdu9/d_tutorial_run_llama_on_8gb_vram_on_windows/
- https://www.reddit.com/r/PygmalionAI/comments/10r4ua5/anyone_run_locally_and_tried_to_use_8bit_on_older/
- https://www.reddit.com/r/PygmalionAI/comments/10o0dfp/model_8bit_optimization_through_wsl/
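The "halving" in those posts comes from storing each weight in 1 byte (int8) instead of 2 (16-bit). A rough, weights-only estimate as a sketch: it ignores activations, the attention cache and CUDA/framework overhead, and the 6B parameter count is just an example size, not a specific model from this thread.

```python
def weight_memory_gb(n_params: int, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in decimal GB (1 GB = 1e9 bytes).

    Activations, the attention cache and CUDA context overhead are not counted,
    so real GPU usage will be higher than this number.
    """
    total_bytes = n_params * bits_per_param // 8
    return total_bytes / 1e9


if __name__ == "__main__":
    n = 6_000_000_000  # example: a 6B-parameter model
    for label, bits in (("16-bit", 16), ("int8", 8)):
        print(f"{label}: ~{weight_memory_gb(n, bits):.1f} GB of weights")
```

By this arithmetic a 6B model needs about 12 GB of weights in 16-bit but about 6 GB in int8, which is why 8-bit loading is what makes such models even plausible on an 8 GB card.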

I followed them, and I get the error shown under Logs below when trying to generate text.

Is this just a bug, or am I unable to use bitsandbytes on this card? The only other 10-series card I have seen "working" is someone's 1080 Ti with Dreambooth (his fix did not work for me): https://github.com/james-things/bitsandbytes-prebuilt-all_arch

The Feb 2 bitsandbytes update says "Int8 Matmul backward for all GPUs", and from the hardware section it looks like I would meet the requirements (somewhere between "a NVIDIA Kepler GPU or newer" and "a GPU from 2018 or older")? However, a blog post also states, under "Support for Kepler GPUs (GTX 1080 etc)":

> While we support all GPUs from the past four years, some old GPUs like GTX 1080 still see heavy use. While these GPUs do not have Int8 tensor cores, they do have Int8 vector units (a kind of "weak" tensor core). As such, these GPUs can also experience Int8 acceleration. However, it requires a entire different stack of software for fast inference. While we do plan to integrate support for Kepler GPUs to make the LLM.int8() feature more widely available, it will take some time to realize this due to its complexity.
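The quoted post separates int8 tensor cores (which the 10 series lacks) from the int8 vector units older cards do have. A sketch for checking which side of that line a card falls on, assuming PyTorch is installed. The (7, 5) cutoff is an assumption based on Turing being the first NVIDIA architecture with int8 tensor cores; whether a particular bitsandbytes build gates on exactly this value is not confirmed here.

```python
from typing import Optional, Tuple

# Assumed cutoff: Turing (compute capability 7.5) was the first NVIDIA architecture
# with int8 tensor cores. Pascal cards such as the GTX 1070 Ti / 1080 Ti report 6.1.
INT8_TENSOR_CORE_MIN: Tuple[int, int] = (7, 5)


def has_int8_tensor_cores(capability: Tuple[int, int]) -> bool:
    """True if a (major, minor) compute capability is at or above the assumed cutoff."""
    return tuple(capability) >= INT8_TENSOR_CORE_MIN


def current_gpu_capability(device: int = 0) -> Optional[Tuple[int, int]]:
    """(major, minor) of a CUDA device via PyTorch, or None if it cannot be queried."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_capability(device)


if __name__ == "__main__":
    cap = current_gpu_capability()
    if cap is None:
        print("No CUDA device visible to PyTorch")
    else:
        verdict = "has" if has_int8_tensor_cores(cap) else "lacks"
        print(f"Compute capability {cap[0]}.{cap[1]}: {verdict} int8 tensor cores")
```

A "lacks" result matches the blog's description of the 10 series; on its own it does not mean 8-bit loading cannot run, only that the int8 tensor-core path is unavailable.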

I have also tried reinstalling everything manually with the full Anaconda, but had no luck and ended up with the same error message.

Is there an existing issue for this?

- [X] I have searched the existing issues

Reproduction

1. Enable 8-bit loading (`--load-in-8bit`).
2. Launch oobabooga.
3. Load any model.
4. Attempt to generate text.

Result: nothing is generated; instead the generation thread fails with RuntimeError: probability tensor contains either inf, nan or element < 0

Screenshot

Logs

    To create a public link, set `share=True` in `launch()`.
    D:\oobabooga\one-click-installers-oobabooga-windows\installer_files\env\lib\site-packages\transformers\models\gpt_neo\modeling_gpt_neo.py:195: UserWarning: where received a uint8 condition tensor. This behavior is deprecated and will be removed in a future version of PyTorch. Use a boolean condition instead. (Triggered internally at C:\cb\pytorch_1000000000000\work\aten\src\ATen\native\TensorCompare.cpp:413.)
      attn_weights = torch.where(causal_mask, attn_weights, mask_value)
    Exception in thread Thread-3 (gentask):
    Traceback (most recent call last):
      File "D:\oobabooga\one-click-installers-oobabooga-windows\installer_files\env\lib\threading.py", line 1016, in _bootstrap_inner
        self.run()
      File "D:\oobabooga\one-click-installers-oobabooga-windows\installer_files\env\lib\threading.py", line 953, in run
        self._target(*self._args, **self._kwargs)
      File "D:\oobabooga\one-click-installers-oobabooga-windows\text-generation-webui\modules\callbacks.py", line 65, in gentask
        ret = self.mfunc(callback=_callback, **self.kwargs)
      File "D:\oobabooga\one-click-installers-oobabooga-windows\text-generation-webui\modules\text_generation.py", line 199, in generate_with_callback
        shared.model.generate(**kwargs)
      File "D:\oobabooga\one-click-installers-oobabooga-windows\installer_files\env\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context
        return func(*args, **kwargs)
      File "D:\oobabooga\one-click-installers-oobabooga-windows\installer_files\env\lib\site-packages\transformers\generation\utils.py", line 1452, in generate
        return self.sample(
      File "D:\oobabooga\one-click-installers-oobabooga-windows\installer_files\env\lib\site-packages\transformers\generation\utils.py", line 2504, in sample
        next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)
    RuntimeError: probability tensor contains either `inf`, `nan` or element < 0
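The crash happens in `torch.multinomial`, which refuses a probability vector containing inf, nan or negative entries. A pure-Python sketch (a stand-in for the PyTorch code, not a copy of it) of how a single overflowed logit poisons the whole distribution: with the usual max-subtraction softmax, an `inf` logit produces `inf - inf = nan`. Float16 overflow (finite values top out at 65504) is one common source of such logits; whether that is what happens on this card is not established in the thread.

```python
import math
import random


def softmax(logits):
    """Softmax with the usual max-subtraction for numerical stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def is_valid_distribution(probs):
    """Check the three conditions named in the error: no inf, no nan, nothing < 0."""
    return all(math.isfinite(p) and p >= 0 for p in probs)


def sample_index(probs, rng=random):
    """Draw one index, refusing invalid input like the failing call does (here with ValueError)."""
    if not is_valid_distribution(probs):
        raise ValueError("probability tensor contains either inf, nan or element < 0")
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    healthy = softmax([2.0, 1.0, 0.5])
    # One logit that overflowed to inf: max-subtraction computes inf - inf = nan,
    # the nan enters the sum, and every probability becomes nan.
    broken = softmax([float("inf"), 1.0, 0.5])
    print(healthy, is_valid_distribution(healthy))
    print(broken, is_valid_distribution(broken))
    print(sample_index(healthy))
```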

System Info

Windows 10, EVGA GeForce GTX 1070 Ti.

Try changing the int8 threshold and it might work; something like 0.5-1.5. See the hint in https://github.com/oobabooga/text-generation-webui/pull/198

It works for me on Linux and should work the same on Windows, but I have 24 GB of RAM.
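Assuming the "int8 threshold" here is the LLM.int8() outlier threshold (exposed in bitsandbytes and transformers, default 6.0): any hidden-state feature column containing a value at least that large is kept in 16-bit, and the rest is quantized to int8. A simplified pure-Python sketch of that split (activation side only, per-row absmax scaling), not the bitsandbytes kernel, shows why lowering the threshold to 0.5-1.5 moves more columns onto the 16-bit path.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def outlier_columns(x: Matrix, threshold: float = 6.0) -> List[int]:
    """Indices of feature columns with any |value| >= threshold (kept in 16-bit)."""
    n_cols = len(x[0])
    return [j for j in range(n_cols) if any(abs(row[j]) >= threshold for row in x)]


def quantize_rows_absmax(x: Matrix, skip_cols: List[int]) -> Tuple[List[List[int]], List[float]]:
    """Row-wise absmax int8 quantization of the non-outlier columns.

    Returns (quantized rows, per-row scales); x is approximately q / scale.
    """
    skip = set(skip_cols)
    q_rows, scales = [], []
    for row in x:
        kept = [v for j, v in enumerate(row) if j not in skip]
        absmax = max((abs(v) for v in kept), default=0.0)
        scale = 127.0 / absmax if absmax > 0 else 1.0
        q_rows.append([int(round(v * scale)) for v in kept])
        scales.append(scale)
    return q_rows, scales


if __name__ == "__main__":
    # Toy hidden states: 2 tokens x 3 features, with one large-magnitude feature.
    hidden = [
        [0.5, 8.0, -0.25],
        [1.0, -2.0, 0.1],
    ]
    for t in (6.0, 1.0):
        print(f"threshold {t}: 16-bit columns {outlier_columns(hidden, t)}")
    q, scales = quantize_rows_absmax(hidden, outlier_columns(hidden, 6.0))
    print("int8 part:", q, "scales:", scales)
```

A lower threshold trades memory savings for precision: more columns skip int8 rounding entirely.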

I'm guessing you used the libbitsandbytes_cuda116.dll linked in that first guide? If so, use libbitsandbytes_cudaall.dll instead; it has better compatibility with older GPUs. It works on my 1080 Ti.

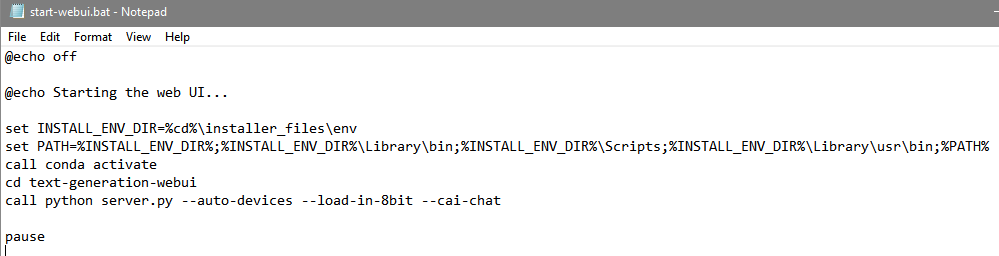

Is this right?

`python server.py --auto-devices --load-in-8bit --cai-chat --int8-threshold %1`

I am getting this error when launching:

`server.py: error: unrecognized arguments: --int8-threshold 1`
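`unrecognized arguments` is argparse's standard error when a flag was never declared with `add_argument`, so `--int8-threshold` can only be passed once the script itself defines it. A stdlib-only sketch; `make_parser` and the `--int8-threshold` definition are hypothetical stand-ins, not the webui's actual parser, and the three existing flags are redeclared as plain switches for illustration.

```python
import argparse

# The command line from the comment above, with the batch-file %1 expanded to 1.
ARGV = ["--auto-devices", "--load-in-8bit", "--cai-chat", "--int8-threshold", "1"]


def make_parser(with_threshold: bool) -> argparse.ArgumentParser:
    """Toy stand-in for server.py's parser (the real one defines many more options)."""
    parser = argparse.ArgumentParser(prog="server.py")
    parser.add_argument("--auto-devices", action="store_true")
    parser.add_argument("--load-in-8bit", action="store_true")
    parser.add_argument("--cai-chat", action="store_true")
    if with_threshold:
        # Hypothetical flag: argparse only accepts it once it has been declared here.
        parser.add_argument("--int8-threshold", type=float, default=None)
    return parser


if __name__ == "__main__":
    # parse_known_args() hands back undeclared options instead of exiting;
    # parse_args() would print "server.py: error: unrecognized arguments: --int8-threshold 1"
    # and exit with status 2.
    _, leftover = make_parser(with_threshold=False).parse_known_args(ARGV)
    print("undeclared:", leftover)

    args = make_parser(with_threshold=True).parse_args(ARGV)
    print("threshold:", args.int8_threshold)
```

Declaring the flag only makes it parse; the value still has to be passed on to wherever the model is loaded.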

What are the relevant packages that support the `--int8-threshold` argument? Is this the right version of PyTorch?

| Name | Version | Build | Channel |
|---|---|---|---|
| python | 3.9.16 | h6244533_2 | |
| pytorch | 2.0.0 | py3.9_cuda11.7_cudnn8_0 | pytorch |
| pytorch-cuda | 11.7 | h16d0643_3 | pytorch |
| pytorch-mutex | 1.0 | cuda | pytorch |

Is something wrong with CUDA?

| Name | Version | Build | Channel |
|---|---|---|---|
| cuda-cccl | 12.1.55 | 0 | nvidia |
| cuda-cudart | 11.7.99 | 0 | nvidia |
| cuda-cudart-dev | 11.7.99 | 0 | nvidia |
| cuda-cupti | 11.7.101 | 0 | nvidia |
| cuda-libraries | 11.7.1 | 0 | nvidia |
| cuda-libraries-dev | 11.7.1 | 0 | nvidia |
| cuda-nvrtc | 11.7.99 | 0 | nvidia |
| cuda-nvrtc-dev | 11.7.99 | 0 | nvidia |
| cuda-nvtx | 11.7.91 | 0 | nvidia |
| cuda-runtime | 11.7.1 | 0 | nvidia |
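Those packages are almost all CUDA 11.7 (matching `pytorch-cuda 11.7`), with `cuda-cccl` at 12.1 as the odd one out. Whether that one package matters for bitsandbytes is not settled in the thread. A small stdlib sketch for spotting such mismatches in `conda list` output, using the versions posted above as sample input; the helper names are hypothetical.

```python
from collections import Counter

# The cuda-* packages posted above, in `conda list` column order: name version build channel.
CONDA_LIST = """\
cuda-cccl 12.1.55 0 nvidia
cuda-cudart 11.7.99 0 nvidia
cuda-cudart-dev 11.7.99 0 nvidia
cuda-cupti 11.7.101 0 nvidia
cuda-libraries 11.7.1 0 nvidia
cuda-libraries-dev 11.7.1 0 nvidia
cuda-nvrtc 11.7.99 0 nvidia
cuda-nvrtc-dev 11.7.99 0 nvidia
cuda-nvtx 11.7.91 0 nvidia
cuda-runtime 11.7.1 0 nvidia
"""


def major_minor(version: str) -> str:
    """'11.7.99' -> '11.7'"""
    return ".".join(version.split(".")[:2])


def mismatched_cuda_packages(conda_list: str) -> dict:
    """Map each cuda-* package whose major.minor differs from the most common one to its version."""
    versions = {}
    for line in conda_list.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].startswith("cuda-"):
            versions[parts[0]] = major_minor(parts[1])
    if not versions:
        return {}
    most_common, _ = Counter(versions.values()).most_common(1)[0]
    return {name: v for name, v in versions.items() if v != most_common}


if __name__ == "__main__":
    print(mismatched_cuda_packages(CONDA_LIST))  # {'cuda-cccl': '12.1'}
```

Real `conda list` output can be fed in the same way, since its `#` header lines do not start with `cuda-` and are skipped.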

You will have to add it to where it loads the model inside of models.py and pray.

What should I be adding to models.py?

I do it like this: https://github.com/Ph0rk0z/text-generation-webui-testing/commit/ecad08f54c3282356888ee8f4dbf112cb331544a
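The linked commit is the reference for what was actually changed. As a hedged sketch of the general idea only: turn the parsed threshold into the 8-bit settings used when the model is loaded. The helper name and the positive-number check are this sketch's own choices; `load_in_8bit` and `llm_int8_threshold` are field names of transformers' `BitsAndBytesConfig` in releases that include it.

```python
import math


def bnb_config_kwargs(int8_threshold=None):
    """Keyword arguments for transformers' BitsAndBytesConfig (hypothetical helper).

    Leaving int8_threshold as None keeps the library's default threshold.
    """
    kwargs = {"load_in_8bit": True}
    if int8_threshold is not None:
        value = float(int8_threshold)
        if not math.isfinite(value) or value <= 0:
            raise ValueError(f"int8 threshold must be a positive number, got {int8_threshold!r}")
        kwargs["llm_int8_threshold"] = value
    return kwargs


if __name__ == "__main__":
    print(bnb_config_kwargs())     # {'load_in_8bit': True}
    print(bnb_config_kwargs("1"))  # {'load_in_8bit': True, 'llm_int8_threshold': 1.0}
```

In the model-loading code this would be used roughly as `AutoModelForCausalLM.from_pretrained(path, device_map="auto", quantization_config=BitsAndBytesConfig(**bnb_config_kwargs(threshold)))`, assuming a transformers release that ships `BitsAndBytesConfig`. Releases from before it existed took 8-bit options directly in `from_pretrained`, so check which version the installer pulled in.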