tensorflow-onnx

Convert TensorFlow, Keras, Tensorflow.js and Tflite models to ONNX

I use TensorFlow 2 to train a MobileNetV2 320 SSD object detection model, but if I convert the pretrained model I get a model with an input without the StatefulPartitionedCall/map/while_loop body if I...

# Ask a Question ### Question ### Further information - Is this issue related to a specific model? **Model name**: **Model opset**: ### Notes

# Ask a Question ### Question ``` I am converting a TensorFlow object detection model trained with TensorFlow 1.14 to ONNX and running inference. I am using the tensorflow/tensorflow:1.14.0-gpu-py3 Docker image and onnxruntime-gpu==1.1...

Changed `t2onnx.convert` to the correct name, `tf2onnx.convert`.

ERROR - Tensorflow op [StatefulPartitionedCall/deepnn_model/transpose_5/Rank: Rank] is not supported

**Describe the bug** python -m tf2onnx.convert --saved-model ./sm --opset 17 --output model.onnx /home/luban/anaconda3/lib/python3.9/runpy.py:127: RuntimeWarning: 'tf2onnx.convert' found in sys.modules after import of package 'tf2onnx', but prior to execution of 'tf2onnx.convert'; this...
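The command quoted in this report can also be assembled from a script, which makes the flags easier to vary between runs. The following is a minimal sketch that reuses the `./sm` directory, `model.onnx` output path and opset 17 from the report; the helper name `build_convert_command` is hypothetical and not part of tf2onnx.

```python
import sys


def build_convert_command(saved_model_dir, output_path, opset):
    """Return the argv list for the tf2onnx command-line converter.

    Hypothetical helper: it only assembles the same flags shown in the
    report (--saved-model, --opset, --output) and runs nothing itself.
    """
    return [
        sys.executable, "-m", "tf2onnx.convert",
        "--saved-model", saved_model_dir,
        "--opset", str(opset),
        "--output", output_path,
    ]


cmd = build_convert_command("./sm", "model.onnx", 17)
print(" ".join(cmd))

# To actually run the conversion (requires tf2onnx to be installed):
# import subprocess
# subprocess.run(cmd, check=True)
```

Using `sys.executable` rather than a bare `python` keeps the conversion in the same interpreter and environment as the calling script.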

I want to convert a TF2 model to ONNX, but it reports an error. I went back and ran the example that converts a pretrained ResNet50 model to test my usage. I...

onnx support tensorflow ops TensorListReserve, TensorListGetItem, TensorListSetItem, TensorListStack, TensorListFromTensor, TensorListConcatV2, TensorListGather... Error output: >>> import onnx >>> m = onnx.load('test.onnx') >>> onnx.checker.check_model(m) Traceback (most recent call last): File "", line 1, in File "C:\Users\scmckay\AppData\Roaming\Python\Python310\site-packages\onnx\checker.py", line 119, in check_model...

**Describe the bug** When converting a quantized tflite model to ONNX, extra nodes (e.g. Transpose, Reshape, etc.) are emitted between Q-DQ pairs. This prevents the ORT graph optimizer from effectively fusing...

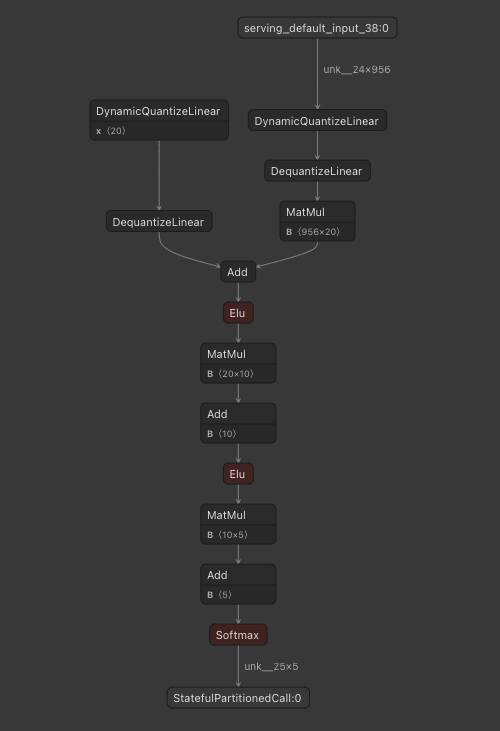

# Ask a Question ### Question I have a model; here is its graph. I can't use a quantized graph in my environment (Unity Barracuda 3.0)....

Is it possible to specify multiple inputs in the input_signature argument when converting? Or will it infer automatically from the provided model?
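For reference, the explicit form of this question can be sketched with the Python API. This is a minimal sketch, assuming a two-input Keras model and `tf2onnx.convert.from_keras`; the input names, shapes and the helper `convert_multi_input` are hypothetical, and the sketch does not show what happens when `input_signature` is omitted.

```python
# Hypothetical input names and shapes for a two-input Keras model.
# The order must match the order of the model's inputs.
INPUT_SHAPES = {
    "image": (None, 224, 224, 3),
    "metadata": (None, 8),
}


def convert_multi_input(model, output_path, opset=13):
    """Convert a multi-input Keras model, passing one TensorSpec per input."""
    # Imported lazily so this module loads even without TensorFlow installed.
    import tensorflow as tf
    import tf2onnx

    # One TensorSpec per model input, collected into a tuple.
    signature = tuple(
        tf.TensorSpec(shape, tf.float32, name=name)
        for name, shape in INPUT_SHAPES.items()
    )
    # from_keras returns the ONNX model proto plus external tensor storage.
    model_proto, _ = tf2onnx.convert.from_keras(
        model,
        input_signature=signature,
        opset=opset,
        output_path=output_path,
    )
    return model_proto
```

A call would look like `convert_multi_input(keras_model, "model.onnx")`, where `keras_model` stands for a model that takes the two inputs above.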