SimSwap


Are there parameters for making the source facial details stronger?

First of all, great work. Are there parameters that can keep more of the details from the source image, or is this something that needs to be trained? For example, sometimes key details are missing from the eyes, or there are other features (piercings, tattoos, moles, and so on) that I may want to keep. Thanks!

Thank you for studying our project so carefully. We do not have parameters to adjust how strongly facial features are retained, because this project is the open-source code for our ACM MM 2020 paper. From a research perspective, our goal at the time was that features such as tattoos and piercings should be removed, because they are not an inherent part of a person's identity. Of course we will consider your suggestion, and we may add an interface to control the degree of feature retention in a version 2 that we plan to release. That would be cool, many thanks~

If you have any other suggestions, please feel free to ask questions, and we will consider your comments and make improvements. :)

Thanks for your reply! I'm honestly impressed that you can throw a full video into the script without any pre-production editing, so great work. I have some suggestions that should be easy to implement.

- The bounding box is very visible after inference. Filling the face region based on facial landmarks, then using that as a blurred matte, should solve that issue.

- An option to perform super-resolution on the face during or after inference, for example with something like Face SPARNet.

- Support for faces seen from the side, or at more extreme angles. I'm sure this is due to how the model was trained and not the project itself.

All of these things I can do myself, but I have to use other projects to implement them. It would be nice to have them native to what the script uses like ONNX and insightface.

Cool, I will try to add these modules to see if there is any improvement.


Great. Here's another idea for a problem I've just noticed. If the face is tilted too far, the swapped face jitters a lot. For example, if the head goes from a 0-degree angle to a 90-degree one, the facial alignment struggles a bit. To fix this, you could estimate the head's rotation from the top of the head to the chin and use it to straighten the frame. The steps would be something like this:

- Detect whether the head is tilted too far.

- If it is, rotate the entire target frame so that the head is upright, with the chin at the bottom and the top of the head at the top.

- Re-detect the face after the rotation, and swap.

- Rotate the entire target frame back to its original orientation.

- Continue inference, repeating as needed.

That should solve that issue. If this were dlib, I could probably implement it myself, but I'm not familiar with what you're using :).

I see, it sounds very reasonable. Let me spend some time trying this idea over the next couple of days.


For info, I use optional post-processing with GPEN [1] for face super-resolution in one of my SimSwap-Colab notebooks: gpen/SimSwap_images.ipynb

If you want to try GPEN on Colab, independently from SimSwap, I have a small Github Gist for that purpose: https://gist.github.com/woctezuma/ecfc9849fac9f8d99edd7c1d6d03758a

Based on my experiments with super-resolution, GPEN looks the best among open-source super-resolution algorithms for faces. When it works, it is great. However, one has to be careful, because it can introduce artifacts, especially if the input photos had already been processed with a super-resolution algorithm. So it really has to be optional, to avoid inadvertently stacking super-resolution processing steps, which would degrade the final result.

[1] Yang, Tao, et al. "GAN Prior Embedded Network for Blind Face Restoration in the Wild." arXiv preprint arXiv:2105.06070, 2021.

@woctezuma Wow, that works really well. Thanks for the tip!

@NNNNAI I actually tried implementing the video rotation idea, but that didn't solve the issue. The result comes down to the function here: the alignment algorithm seems to make the face crop shake a lot, resulting in poor alignment at more extreme angles.

It would also be good to have the mask saved as a separate output, so the footage can be brought into After Effects for editing.

After playing with GPEN and DFNet (based on some tests, GPEN gives much better quality results in my opinion), I'd like to suggest something like the above, but focused on a local machine (Windows 10 + Anaconda). It would be really nice and useful to have an optional extra command to add GPEN as a post-processing step:

1️⃣ GPEN runs on every frame that SimSwap generated.

2️⃣ SimSwap then merges all the frames back together, ideally with lossless quality relative to the source video.

I couldn't install GPEN locally, so I could only test it via Google Colab. It would be more than nice if GPEN were merged into SimSwap as an optional command, so users wouldn't have to use GPEN (as in the current version), but could run it as a post-processing step with its basic properties (scaling, and generating ONLY the final GPEN output without the other files, to speed things up and stay focused on SimSwap's goal).
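Step 2️⃣ could be sketched by calling ffmpeg (assumed to be installed and on PATH) from Python to encode the post-processed frames and copy the audio track from the source video. The helper name and frame-name pattern are hypothetical; CRF 18 with libx264 is a common near-transparent setting, and lower values trade file size for quality.

```python
def build_mux_command(frames_pattern, fps, source_video, output, crf=18):
    """Build an ffmpeg command that encodes a numbered frame sequence and
    copies the audio track of the source video (if it has one).

    frames_pattern: printf-style pattern such as "frames/frame_%06d.png".
    """
    return [
        "ffmpeg", "-y",
        "-framerate", str(fps), "-i", frames_pattern,  # input 0: post-processed frames
        "-i", source_video,                             # input 1: original clip (audio)
        "-map", "0:v:0",                                # video from the frames
        "-map", "1:a:0?",                               # audio from the source; "?" = optional
        "-c:v", "libx264", "-crf", str(crf), "-pix_fmt", "yuv420p",
        "-c:a", "copy",
        "-shortest",
        output,
    ]
```

It can be run with `subprocess.run(build_mux_command("gpen_frames/frame_%06d.png", 25, "input.mp4", "final.mp4"), check=True)`; the frame rate passed in should match the source video.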

@ftaker887 You said that the bounding-box problem after inference can be solved. Would you share your solution/source code for that?

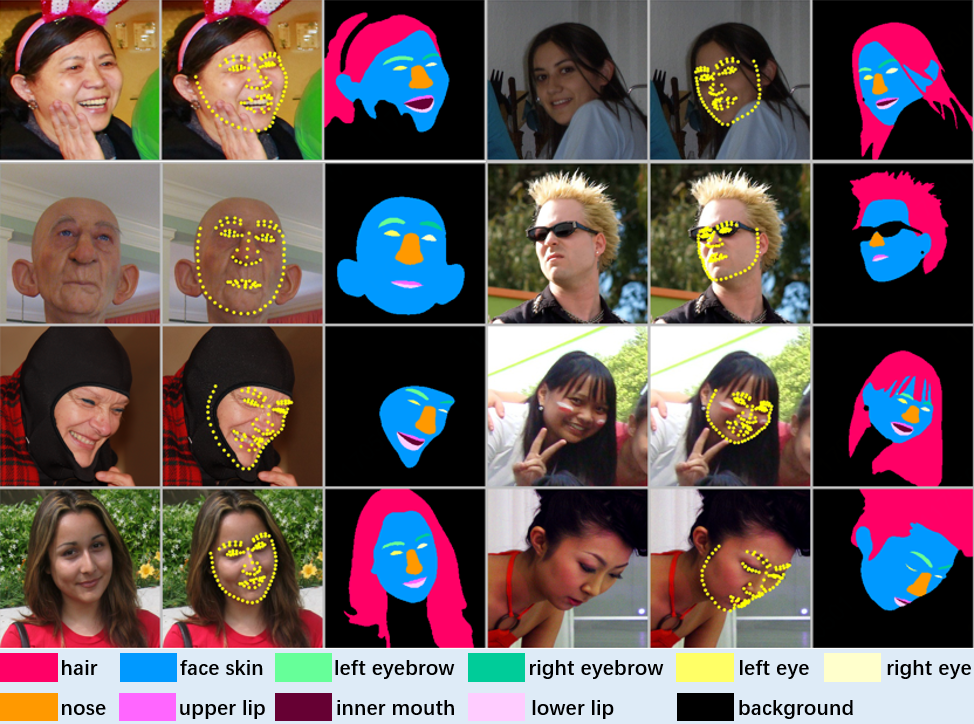

You can try using this face-parsing repo to get the mask, then blend the original image and the swapped image according to the mask. The results of blending with the mask versus the bounding box are shown below. Many thanks~.

Impressive! The smooth masking looks really nice! @NNNNAI Any chance this feature will be added to SimSwap as an extra command?

--MaskSmooth

or with extra parameters or values, if needed?

I will probably release this feature within a week; I've been busy with other work recently. Many thanks~.


That will be great! thank you 👍

Thanks. I think I will wait until this feature is released.

Btw, I've added two extra command-line parameters, cut_in and cut_out, to set the start and end frames for inference, in case one wants to process only part of the input video. The video works fine so far, but I'm having trouble applying cut_in to the audio.

@instant-high You can check ffmpeg and moviepy, both third party libraries support audio injection.
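One way to keep the audio in sync with `cut_in`/`cut_out` is to convert the frame indices to seconds (frame / fps) and let ffmpeg seek the source audio to that span. A sketch, assuming ffmpeg is on PATH and that `cut_out` is an exclusive frame index; the helper names are hypothetical:

```python
def frames_to_seconds(frame_index, fps):
    """Timestamp (in seconds) at which a given frame starts."""
    return frame_index / float(fps)


def build_audio_cut_command(processed_video, source_video, cut_in, cut_out, fps, output):
    """ffmpeg command pairing the processed clip with the matching audio span.

    cut_in is the first processed frame; cut_out is exclusive (assumed convention).
    """
    start = frames_to_seconds(cut_in, fps)
    duration = frames_to_seconds(cut_out - cut_in, fps)
    return [
        "ffmpeg", "-y",
        "-i", processed_video,                            # input 0: swapped segment
        "-ss", f"{start:.3f}", "-t", f"{duration:.3f}",   # seek options apply to input 1
        "-i", source_video,                               # input 1: original clip with audio
        "-map", "0:v:0", "-map", "1:a:0?",                # audio is optional
        "-c:v", "copy", "-c:a", "aac",                    # re-encode audio for an exact cut
        "-shortest",
        output,
    ]
```

For example, with cut_in=50, cut_out=150 and 25 fps, the audio starts at 2.000 s and lasts 4.000 s; the command can be run with `subprocess.run(cmd, check=True)`.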

@NNNNAI Yes, I know, but I've never done that in Python. Most of the time I use Visual Basic for my projects (some video tools, GUIs for First Order Motion, Motion Co-Segmentation, Wav2Lip...).

@instant-high Haha, I see, hope you can get it done~.


I actually started training a model a few days ago using Self Correction for Human Parsing. It's very fast, and I believe it works without adding too many modules to SimSwap's repository.

The dataset I'm using is called LaPa, which is probably the best fit since it covers faces with occlusion and other difficult situations.

The idea is that you would either mask it first for better alignment, or use it as a post processing step. There's another idea of using face inpainting after masking, but that's starting to get into third party plugin territory which might add complexity.

Would you like me to create a pull request when or if I get this implemented?
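Whichever parser is used, its per-pixel label map has to be turned into the binary mask used for blending. A minimal NumPy sketch; the label IDs are an assumption based on the 11-class order listed for LaPa (0 background, 1 skin, 2 to 9 brows, eyes, nose, lips and mouth, 10 hair) and should be checked against the dataset's documentation:

```python
import numpy as np

# Assumed LaPa-style label IDs: skin, brows, eyes, nose, lips, inner mouth.
# Background (0) and hair (10) are deliberately excluded.
FACE_LABELS = (1, 2, 3, 4, 5, 6, 7, 8, 9)


def face_mask_from_parsing(label_map, keep=FACE_LABELS):
    """Binary uint8 mask (255 = use swapped pixels, 0 = keep original).

    Pixels whose label is not in `keep` (hair, background, and any occluder
    the parser assigns to those classes) stay as in the original frame.
    """
    return np.isin(label_map, keep).astype(np.uint8) * 255
```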

I don't know how to train my own model or anything that complicated; I'm currently experimenting with SimSwap's built-in models, and I see some issues I'd love to see solved in a future version. Sometimes it can't handle certain parts, unlike the example above. Often it flickers: it fails to recognize the face in some frames even though they are similar to the frames before or after, which is weird. And sometimes it resizes the face, I guess because the face detection isn't accurate.

@ftaker887 I must say I'm VERY impressed by how accurate the mask is in the example you just posted! :o I currently have issues with faces behind shoulders, and sometimes with hands over the face, for example when someone fixes their hair. The example you just showed... WOW!!! The mask looks very accurate and captures so much detail.

@NNNNAI Is there a chance this will be used in a future SimSwap version? If so, I imagine it would fix many of the issues others and I have run into. I'm no programmer and have no idea how to do such a thing, but I hope someone here can merge this way of masking. 🙏

@ftaker887 Wonderful~!!! But introducing a self-trained mask model would indeed add complexity to the original SimSwap repo. How about this: you create a separate repo named "simswap with more accurate mask" or something like that, and I will add a link to your repo on the SimSwap homepage once you get the function implemented. It all depends on you; looking forward to your feedback.

Sorry, just another beginner question: while experimenting with ./util/reverse2original.py, I found that line 11,

    swaped_img = swaped_img.cpu().detach().numpy().transpose((1, 2, 0))

holds the "swapped" result image.

As an absolute Python beginner, I managed to blur and resize this swapped image by adding the following code:

    for swaped_img, mat in zip(swaped_imgs, mats):
        swaped_img = swaped_img.cpu().detach().numpy().transpose((1, 2, 0))
        img_white = np.full((crop_size, crop_size), 255, dtype=float)

        # Experiment: resize and blur the swapped crop
        swaped_img = cv2.resize(swaped_img, (256, 256))
        swaped_img = cv2.GaussianBlur(swaped_img, (19, 19), sigmaX=0, sigmaY=0, borderType=cv2.BORDER_DEFAULT)

Wouldn't it be possible to create a smooth border for the bounding box there, before blending it into the original image? I need help with this, because it's very hard to search the web for every function and every error message when something goes wrong.

Here's an example of the blurred and resized swaped_img:


Sure, a separate repo seems like a good idea! It may take a bit of time since I have to make sure everything is neat and ready to use.

The model is almost ready (this is an early iteration). It's not perfect, but it works well when it wants to. Hopefully when the training is done it will be a bit better.

OK. But I was thinking of a simple, fixed mask around the square image containing the swapped face, applied before blending it into the whole frame: a soft border for that square?

@instant-high I see. But actually, I already use soft-border blending in the original code; you can check it in lines 34-35 of ./util/reverse2original.py. There are also a couple of small problems with the code you provided. First, you should not resize the swapped image, because that misaligns it when it is blended back into the whole image. Second, you currently smooth the whole swapped image instead of just the border, which is why the result inside the bounding box is oversmoothed. If anything is still unclear, please feel free to ask~. Have a nice day~.

I only did the resize and blur to illustrate what I mean; of course that doesn't make sense. I'll check lines 34/35. But why do we see sharp edges when you already soft-blend the whole square?

Thank you


Check these images: https://github.com/neuralchen/SimSwap/issues/14#issuecomment-877135637 Or this link for an easier comparison: https://imgsli.com/NjA2MzE

You can see that there is a blur near the edges of the bounding-box, whereas the center with the face remains sharp.

Bounding-box:

The issue is less important with the mask method:

Mask:

Because our current method makes the background slightly different from the original, a soft border can only alleviate the sharp edge, not remove it completely. So using a mask to blend may be a better choice.

Sorry again, maybe I don't fully understand the code. What I'm trying to achieve is a mask like this for the whole square image with the face and the background, which should work even if parts of the face, e.g. the chin, are outside the square.

Thank you for your patience
