LocalAI

Config files

This PR is a first step toward a configuration mechanism for LocalAI.

Fixes: https://github.com/go-skynet/LocalAI/issues/28

It introduces two ways of configuring LocalAI "virtual models": a single YAML file, specified via the CLI, that contains all the user-defined models and their mappings; or multiple YAML files created inside the models folder.
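As a rough illustration of the two configuration sources, the sketch below gathers the YAML files that would define virtual models. The function name `collect_config_sources`, the `.yaml` extension, and the idea that both sources are combined are assumptions made for illustration; the PR text does not state how LocalAI discovers or merges them.

```python
from pathlib import Path
from typing import List, Optional


def collect_config_sources(models_dir: str, config_file: Optional[str] = None) -> List[Path]:
    """Return the YAML files that would define virtual models.

    Assumption: both sources are combined, with the single file listed first.
    """
    sources: List[Path] = []
    if config_file is not None:
        # Option 1: one YAML file holding a list of model definitions.
        sources.append(Path(config_file))
    # Option 2: one YAML file per model inside the models folder.
    # The ".yaml" extension is an assumption of this sketch.
    for path in sorted(Path(models_dir).glob("*.yaml")):
        if path not in sources:
            sources.append(path)
    return sources
```

Calling `collect_config_sources("models")` would return only the per-model files, while passing a second argument adds the single shared file in front of them.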

A config file might be:

    - name: gpt-3.5-turbo
      parameters:
        model: ggml-gpt4all-j
      context_size: 1024
      threads: 10
      stopwords:
      - "HUMAN:"
      - "GPT:"
      roles:
        user: "HUMAN:"
        system: "GPT:"
      template:
        completion: completion
        chat: ggml-gpt4all-j
    - name: list2
      parameters:
        model: testmodel
      context_size: 512
      threads: 10
      stopwords:
      - "HUMAN:"
      - "### Response:"
      roles:
        user: "HUMAN:"
        system: "GPT:"
      template:
        completion: completion
        chat: ggml-gpt4all-j

A model declaration placed inside the models directory might be:

    name: gpt4all
    parameters:
      model: testmodel # A path to a model file inside the models folder
    context_size: 512
    threads: 10
    stopwords:
    - "HUMAN:"
    - "### Response:"
    cutstrings:
    - ...
    trimspace:
    - ...
    roles:
      user: "HUMAN:"
      system: "GPT:"
    template:
      completion: completion
      chat: ggml-gpt4all-j
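The PR text does not spell out how `roles` and `stopwords` are applied, so the following is only one plausible reading, written as a small Python sketch: each chat message is prefixed with the string mapped to its role, and generated text is cut at the first stop word. Both helper names (`build_prompt`, `cut_at_stopword`) are hypothetical and are not taken from LocalAI.

```python
from typing import Dict, List


def build_prompt(messages: List[Dict[str, str]], roles: Dict[str, str]) -> str:
    """Prefix every message with its mapped role string (assumed behaviour)."""
    lines = []
    for message in messages:
        # Fall back to the raw role name when no mapping is configured.
        prefix = roles.get(message["role"], message["role"])
        lines.append(f"{prefix} {message['content']}")
    return "\n".join(lines)


def cut_at_stopword(text: str, stopwords: List[str]) -> str:
    """Truncate text at the earliest occurrence of any stop word (assumed behaviour)."""
    cut = len(text)
    for word in stopwords:
        index = text.find(word)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]
```

With the `roles` shown above, a `user` message "Hi" would become the prompt line `HUMAN: Hi` under this reading.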

Every declared model will be available for inference and will be returned in the models list.
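To make this concrete, here is a minimal client sketch that builds requests against a configured model name. It assumes a LocalAI instance reachable at `http://localhost:8080` and the OpenAI-style `/v1/models` and `/v1/chat/completions` paths; the base URL and the helper names are assumptions of this example, not something stated in the PR.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # assumption: address of a local LocalAI instance


def models_request(base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a GET request for the OpenAI-style model list."""
    return urllib.request.Request(f"{base_url}/v1/models", method="GET")


def chat_request(model: str, user_text: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request for a chat completion against a configured model name."""
    payload = {
        "model": model,  # the `name` from the config, e.g. "gpt-3.5-turbo"
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send a request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With a server running, `send(models_request())` should list the declared names, and `send(chat_request("gpt-3.5-turbo", "Hello"))` should route the request to the model mapped to that name.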

Fields:

- Inside `parameters`, all the OpenAI request fields can be specified, including `temperature`, `top_p`, and `top_k`.
- Inside `template`, you can specify the files used to template requests for each endpoint. Files must end in ".tmpl".
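The sketch below illustrates how a template name such as `completion` might resolve to a file and wrap the user input. It assumes the file lives in the models folder as `<name>.tmpl` and contains a Go `text/template`-style `{{.Input}}` placeholder; both points are assumptions of this example, and plain string replacement stands in for a real template engine.

```python
from pathlib import Path

PLACEHOLDER = "{{.Input}}"  # assumption: Go text/template-style placeholder


def render_prompt(models_dir: str, template_name: str, user_input: str) -> str:
    """Load <template_name>.tmpl from the models folder and fill in the input."""
    template_path = Path(models_dir) / f"{template_name}.tmpl"
    template_text = template_path.read_text(encoding="utf-8")
    # Plain substitution only; a real template engine supports far more syntax.
    return template_text.replace(PLACEHOLDER, user_input)
```

Under these assumptions, `template: {completion: completion}` would make the completion endpoint read `completion.tmpl` and send the rendered text to the model.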

Supersedes https://github.com/go-skynet/LocalAI/pull/63

I want to play with this and have it in master. I'll add an e2e example with a WebUI right after so it is clearer how to use it.

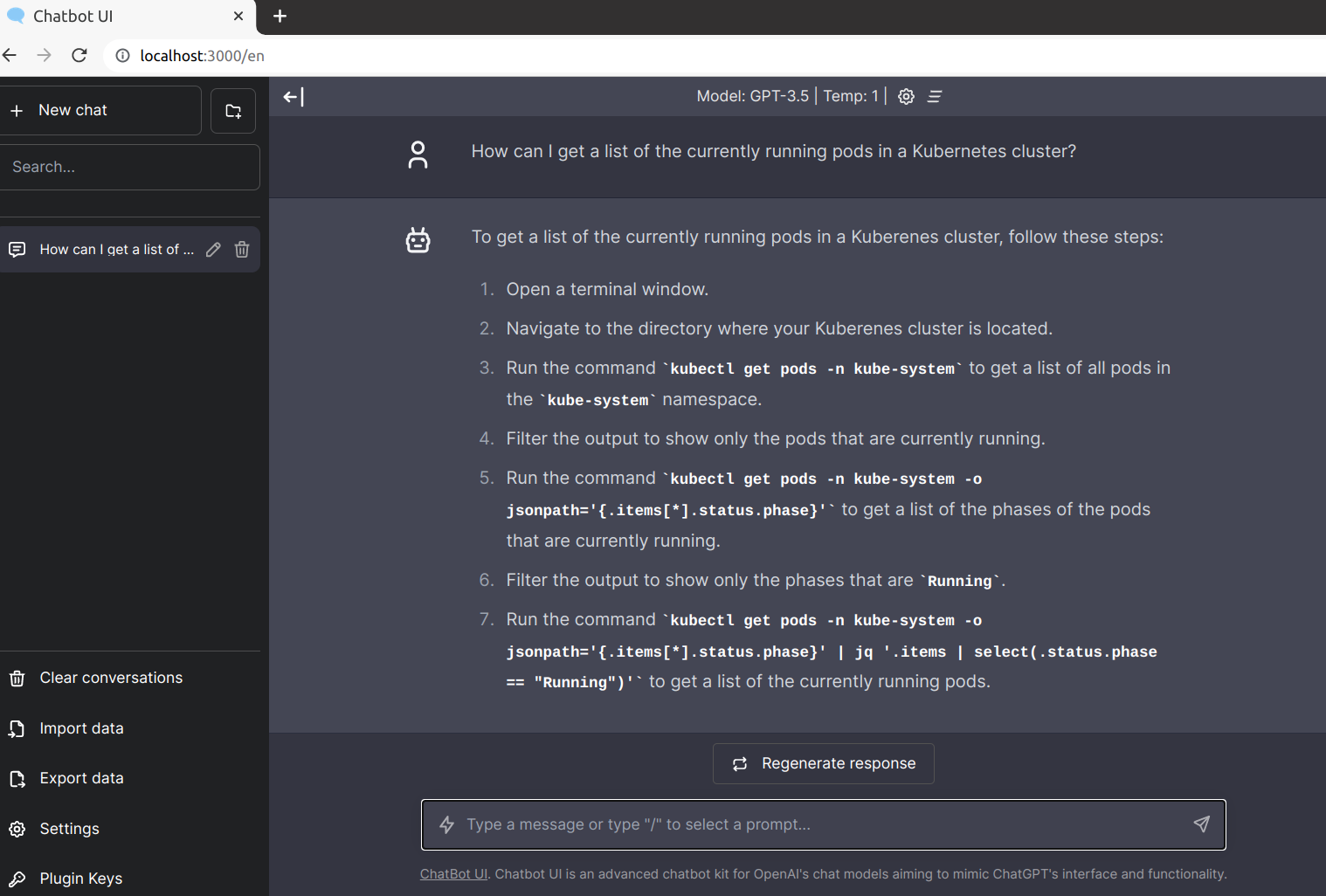

I've added an example which integrates with chatbot-ui @normen @mkellerman - there were a bunch of changes needed to make it work.

Also works on my machine! :100:

It did not stream, however. It took a long time, then finally appeared.