Add support for ObjectDetection

Adds a new ObjectDetectionTask which uses torchvision's Faster-RCNN under the hood.

Closes #442

So the tests are failing due to warnings caused by the new Multi-Weight API torchvision introduced in 0.13.

If we want to continue supporting the minimum torchvision 0.10.0, I could just ignore the warnings. Otherwise, I can update detection.py to use the new API and bump minimum torchvision version to 0.13.

What do you guys think?

This is great, thanks so much!

For torchvision dependency version, see what I did in #631 for the other trainers. It's possible to check the version of torchvision and use different syntax for each version. It's kinda ugly and I don't like it, but I'm not sure if it's wise to just drop support for all older torchvision versions. Open to feedback on this though.

For the torchmetrics dependency version, what was the reason for the version bump? It seems like MeanAveragePrecision was introduced in 0.7.0 (renamed from MAP) according to the changelog.

For torchvision dependency version, see what I did in https://github.com/microsoft/torchgeo/pull/631 for the other trainers. It's possible to check the version of torchvision and use different syntax for each version. It's kinda ugly and I don't like it, but I'm not sure if it's wise to just drop support for all older torchvision versions. Open to feedback on this though.

Saw this snippet.

if parse(torchvision.__version__) >= parse("0.12"):

if backbone_pretrained:

kwargs = {

"weights": getattr(

torchvision.models, f"ResNet{backbone[6:]}_Weights"

).DEFAULT

}

else:

kwargs = {"weights": None}

else:

kwargs = {"pretrained": backbone_pretrained}

This can work. Since the number of backbones supported also include the ResNext and Wide_Resnet variants, I opted to create a mapping between backbones and weights. Perhaps this could be moved to a common location in a later PR.

Agree with not dropping support. We only need to drop a version if it breaks something or if it requires really ugly code to handle it. Think we're good for now.

For the torchmetrics dependency version, what was the reason for the version bump? It seems like MeanAveragePrecision was introduced in 0.7.0 (renamed from MAP) according to the changelog.

You're right. Think it was just the filename that was changed (map.py -> mean_ap.py). Reverted that commit.

Another option would be to change the pretrained kwarg on our end so that instead of taking a boolean it takes whatever torchvision wants. Then it's up to the user to decide which pretrained model to use. Thoughts?

Another option would be to change the pretrained kwarg on our end so that instead of taking a boolean it takes whatever torchvision wants. Then it's up to the user to decide which pretrained model to use. Thoughts?

Sure, that could work. That way the user would be able to specify the weights to be used and if they leave it unspecified, we can just use the default weights. But specifying weights is only possible for torchvision > 0.12. For lower versions we will need to pass pretrained=True regardless of the weights specified by the user.

Hmm, but then it becomes difficult to store this configuration in a YAML file...

Right. The YAML would only be informative if you're using torchvision > 0.12

But even if the YAML is torchvision > 0.12 specific, can you store objects in YAML or only strings/floats/bools? We could store a string and exec it but that feels like a security risk...

The weights in the YAML can be string. We could just specify it like this.

experiment:

task: "nasa_marine_debris"

name: "nasa_marine_debris_test"

module:

detection_model: "faster-rcnn"

backbone: "resnet50"

+ pretrained: "ResNet50_Weights.IMAGENET1K_V1"

num_classes: 2

learning_rate: 1.2e-4

learning_rate_schedule_patience: 6

verbose: false

datamodule:

root_dir: "data/nasamr/nasa_marine_debris"

batch_size: 4

num_workers: 56

val_split_pct: 0.2

The pretrained arg can then be passed into the weights arg (once we pass it through torchvision's get_weight function)

Ah, we could use torchvision.prototype.models.get_weight to convert the string to the enum. But yeah, it would be a bit frustrating for the YAML to only work properly with torchvision 0.12.

Let's keep it like this for now but strongly consider dropping support for older torchvision at some point.

The pretrained arg will officially be dropped in version 0.15. Perhaps that would be a good time to drop old versions and peg minimum to 0.13.

Coverage drop is mainly caused by the code required for supporting minimum versions.

On that note, has anybody looked into combining the coverage reports for normal tests and minimal tests via coverage combine before uploading to codecov?

We run tests on both the latest version of dependencies and the minimum version of dependencies. Both tests generate coverage reports, and should automatically be merged when uploaded to codecov. This works on all of our other PRs, not sure why coverage is dropping for you.

This is weird. The coverage drop seems to be caused by unrelated files. Also a warning is shown stating there are unmerged base commits. Any ideas?

The coverage drop seems to be caused by unrelated files.

This usually means that coverage has increased in main since this branch was created. Usually, rebase or merge commits solve this. Wish codecov was smarter about this...

Since I've opened this PR, there have been a total of 4 commits merged to main. The two tutorial commits, one related to Sentinel2 and the torchvision constraint fix so it's unlikely a coverage increase is the reason.

I wonder if this has to do with me rebasing and squashing commits in this branch.

Yep, I have no idea what's going on here. It's just this PR, right? Probably best to reach out to codecov and see if this is a bug in codecov.

I'm focusing on 0.3.1 stuff at the moment, but if this is still broken after 0.3.1 is finished I can try to report it to codecov myself.

I went down the torchvision.detection rabbit hole awhile back. I think my only complaint with it was that they don't support negative sample images that don't have any objects in them during training. Do you know if this has changed? I need to catch up on all the releases.

Also I believe they added support for constructing a resnet fpn backbone from a pretrained torchvision resnet model. @calebrob6 and @anthonymlortiz, this might be of interest because we could add support for pretrained SSL resnet backbones to this task.

@isaaccorley

I went down the torchvision.detection rabbit hole awhile back. I think my only complaint with it was that they don't support negative sample images that don't have any objects in them during training. Do you know if this has changed? I need to catch up on all the releases.

They support negative samples. Support was added in 0.6.0 via pytorch/vision@e75b497

Also I believe they added support for constructing a resnet fpn backbone from a pretrained torchvision resnet model. @calebrob6 and @anthonymlortiz, this might be of interest because we could add support for pretrained SSL resnet backbones to this task.

This is supported as well. Just need to pass the backbone name and the pretrained flag.

They recently added (in v0.13.0) the Multi Weights API which allows you to select which weights your backbone should load. Adam opened an issue to track this - #762

I really wish torchvision would add support for oriented/rotated bounding boxes i.e. (x, y, w, h, a). Orientation is a significant factor when you're dealing with object detection in RS imagery. Especially if your objects are small and clustered together. detectron2 supports RotatedBoxes and the necessary ops like rotated nms, rotated ROIAlign, Rotated RPN etc and it works really well. Was able to get some nice results on the DOTA dataset using it.

There is an open issue regarding porting over the necessary ops to torchvision (pytorch/vision#2761) but there doesn't seem to be much interest :disappointed:.

I really wish

torchvisionwould add support for oriented/rotated bounding boxes i.e.(x, y, w, h, a). Orientation is a significant factor when you're dealing with object detection in RS imagery. Especially if your objects are small and clustered together.detectron2supports RotatedBoxes and the necessary ops like rotated nms, rotated ROIAlign, Rotated RPN etc and it works really well. Was able to get some nice results on the DOTA dataset using it.There is an open issue regarding porting over the necessary ops to

torchvision(pytorch/vision#2761) but there doesn't seem to be much interest 😞.

@conor-wallace was also able to get good benchmark results on DOTA with detectron2 and we use it a ton for aerial imagery now. Though we would love to never touch detectron2 again if torchvision added rotated oriented support.

Can you rebase to resolve the merge conflict?

Still no idea why codecov has been buggy lately. I'll force merge if I need to.

The drop in coverage here is legit, I was able to reproduce it locally:

$ pytest --cov=torchgeo/trainers --cov-report=term-missing tests/trainers/

=============================================================================================== test session starts ================================================================================================

platform darwin -- Python 3.9.13, pytest-7.1.3, pluggy-1.0.0

rootdir: /Users/ajstewart/torchgeo, configfile: pyproject.toml

plugins: anyio-3.6.1, nbmake-1.3.3, cov-3.0.0

collected 57 items

tests/trainers/test_byol.py .... [ 7%]

tests/trainers/test_classification.py .................. [ 38%]

tests/trainers/test_detection.py ... [ 43%]

tests/trainers/test_regression.py .... [ 50%]

tests/trainers/test_segmentation.py .................. [ 82%]

tests/trainers/test_utils.py .......... [100%]

---------- coverage: platform darwin, python 3.9.13-final-0 ----------

Name Stmts Miss Cover Missing

-------------------------------------------------------------------

torchgeo/trainers/__init__.py 9 0 100%

torchgeo/trainers/byol.py 141 1 99% 326

torchgeo/trainers/classification.py 164 0 100%

torchgeo/trainers/detection.py 101 5 95% 24, 66-68, 187-188

torchgeo/trainers/regression.py 87 1 99% 45

torchgeo/trainers/segmentation.py 99 0 100%

torchgeo/trainers/utils.py 52 0 100%

-------------------------------------------------------------------

TOTAL 653 7 99%

=============================================================================================== 57 passed in 52.34s ================================================================================================

See https://app.codecov.io/gh/microsoft/torchgeo/pull/758 for the pictorial representation.

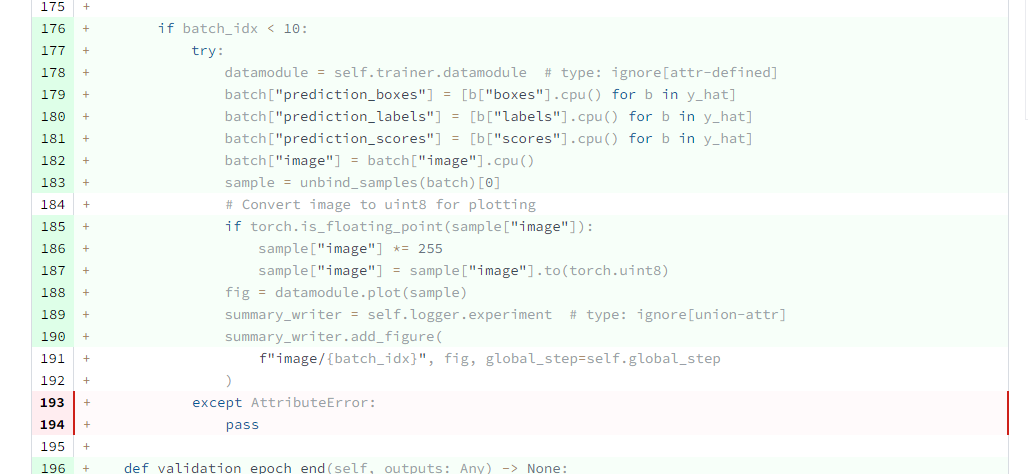

the try/except in the validation_step

There's another line too.

The try except block in the val_step is the only line left. Not sure how to test this. I'm guessing test_segmentation.py passes because it includes datamodules that don't have plot implemented so it would trigger the exception.

Maybe instead of try/except we should check if the datamodule has a plot method. Something like: if isinstance(getattr(datamodule, "plot"), Callable). Then in the test we can override the plot method to be None or something so it will cover both cases.

There's a reason for the try-except. Lightning is very much dynamically typed, and makes no guarantees that any of these attributes will exist:

self.trainer.datamoduledatamodule.plotself.logger.experiment

That's also the reason that we have to ignore mypy warnings on so many lines. The correct solution would be to use hasattr/getattr on all of these like @isaaccorley suggested. I was just lazy and wrapped everything in a single try-except.

If you want to rework the code to use hasattr/getattr, I would create a new PR and do the same for existing trainers. We should probably add plot methods to all datamodules like we have to all datasets, so that will cause this same issue in other tests too.

If you want to keep using the try-except, my suggestion would be to add a test that monkeypatches the datamodule to remove the plot method. This will give you full coverage and ensure that the trainer works even for datamodules without a plot method.

@isaaccorley does this need a predict step?

@isaaccorley does this need a predict step?

Ideally yes, we can have a predict step that returns the predicted boxes and class labels per sample.