MagicSource


@dtx525942103 Did you get a None during inference? I did nothing special, just the normal export steps, feeding random text as input.

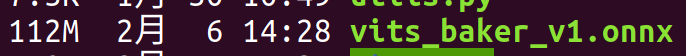

I have exported it to ONNX, and there seem to be no obstacles to doing this:

```
onnxexp glance -m vits_baker_v1.onnx
vits!?
Namespace(model='vits_baker_v1.onnx', subparser_name='glance', verbose=False, version=False)
Exploring on onnx model: vits_baker_v1.onnx
Model summary...
```

@AronWang @Aloento I have updated the export code here: https://github.com/jinfagang/lark

I have restructured the VITS training code, so it can now be trained more easily on other backbones.

It will be released after some code refactoring. Meanwhile, join QQ group 526506088 for more discussion about speech models.

@jaywalnut310 I would probably try the Biaobei data for Chinese, though I am a total newbie in TTS. Let me take a deeper look. What would the phonemes look like for Chinese?
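One common option for Chinese (an assumption here, not something stated in this thread) is to use tone-numbered pinyin, split each syllable into its initial and final, and attach the tone digit to the final. A minimal sketch: the initial table is the standard pinyin set, `y`/`w` are deliberately left inside the final, and the function names are hypothetical.

```python
# Standard pinyin initials; two-letter ones come first so "zh" is not read as "z" + "h...".
INITIALS = ["zh", "ch", "sh",
            "b", "p", "m", "f", "d", "t", "n", "l",
            "g", "k", "h", "j", "q", "x", "r", "z", "c", "s"]


def split_syllable(syllable):
    """Split a tone-numbered pinyin syllable such as 'zhong1' into (initial, final, tone)."""
    tone = ""
    if syllable and syllable[-1].isdigit():
        syllable, tone = syllable[:-1], syllable[-1]
    for ini in INITIALS:
        # Require something after the initial so a bare "m" or "n" stays a final.
        if syllable.startswith(ini) and len(syllable) > len(ini):
            return ini, syllable[len(ini):], tone
    # Zero-initial syllables such as "er" or "ai" (and, in this sketch, "y"/"w" ones).
    return "", syllable, tone


def to_phonemes(pinyin_line):
    """Turn 'ka2 er2 pu3' into ['k', 'a2', 'er2', 'p', 'u3']."""
    phones = []
    for syl in pinyin_line.split():
        ini, fin, tone = split_syllable(syl)
        if ini:
            phones.append(ini)
        phones.append(fin + tone)
    return phones


if __name__ == "__main__":
    print(to_phonemes("ka2 er2 pu3"))  # ['k', 'a2', 'er2', 'p', 'u3']
```

Keeping the tone on the final gives a compact symbol set. Splitting tone into a separate token is an equally valid design if the model should share final embeddings across tones.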

@jaywalnut310 Hi, may I ask one last question: how does the latency compare with Tacotron 2? I mean end-to-end latency, since Tacotron 2 also needs a vocoder, which should be counted in. Is VITS faster...
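To make "end-to-end" concrete: for a two-stage system the timed path must include both the acoustic model and the vocoder, while VITS is a single stage. Below is a minimal timing sketch. The stage callables are hypothetical placeholders for whatever models are being compared, the last stage is assumed to return a sequence of audio samples, and real-time factor (RTF) here means synthesis time divided by audio duration.

```python
import time


def measure_e2e(text, stages, sample_rate, repeats=5):
    """Time a TTS pipeline from text to waveform.

    `stages` is applied in order, e.g. [acoustic_model, vocoder] for a two-stage
    system or [vits_model] for a single-stage one (hypothetical callables).
    """
    best = float("inf")
    audio = None
    for _ in range(repeats):
        start = time.perf_counter()
        x = text
        for stage in stages:
            x = stage(x)
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)  # best-of-N reduces noise from warm-up runs
        audio = x
    duration = len(audio) / sample_rate
    if duration == 0:
        raise ValueError("pipeline produced no audio")
    return {"latency_s": best, "audio_s": duration, "rtf": best / duration}
```

For GPU models, call `torch.cuda.synchronize()` before reading the clock inside the loop; otherwise queued kernels are not counted and the latency looks smaller than it is.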

@jaywalnut310 I listened to the sample audio from VITS, and it sounds much better and more natural than Tacotron 2. Since it is both better and faster, it is well worth a try. Do you guys...

@jaywalnut310 I can train on the BIAOBEI dataset, which is an open-source Chinese dataset. But can you tell me how I should organize it?
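For reference, the LJSpeech filelists in the VITS repository list one utterance per line as `wav_path|text`, and the multi-speaker lists add a speaker ID field. Below is a minimal sketch, assuming that single-speaker layout, for writing such a list from a hypothetical `{utterance_id: transcript}` mapping. Parsing the Biaobei transcript files into that mapping is left out, and the function names are illustrative.

```python
from pathlib import Path


def build_filelist(wav_dir, transcripts):
    """Return 'wav_path|text' lines, one per utterance, sorted by utterance ID."""
    lines = []
    for utt_id, text in sorted(transcripts.items()):
        wav_path = Path(wav_dir) / f"{utt_id}.wav"
        # '|' is the field separator, so it must not appear inside the text.
        clean = text.replace("|", " ").strip()
        lines.append(f"{wav_path.as_posix()}|{clean}")
    return lines


def write_filelist(lines, out_path):
    """Write the lines as UTF-8 so Chinese text or pinyin survives intact."""
    Path(out_path).write_text("\n".join(lines) + "\n", encoding="utf-8")
```

The text field can hold either raw characters or a phoneme/pinyin sequence, depending on which text cleaner the training config uses.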

@dtx525942103 That's amazing! It can synthesize such long audio! Do you plan to open-source your code for training on Chinese?

@dtx525942103 Same request here.