FastChat

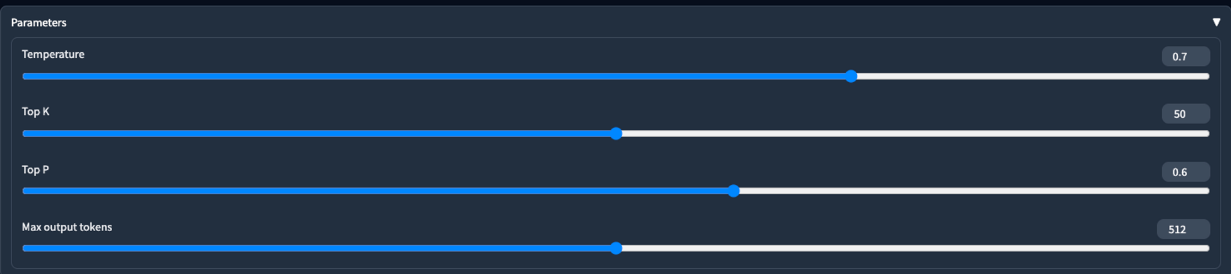

Add top_k and top_p sampling parameters

Addresses https://github.com/lm-sys/FastChat/issues/350.
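For readers unfamiliar with the two parameters, here is a minimal, dependency-free sketch of how top_k and top_p filtering are commonly combined with temperature. It is an illustration only, not the code from this PR: the function names `filter_top_k_top_p` and `sample` are hypothetical, and the order of operations (temperature, then top_k, then top_p) and the treatment of `top_k <= 0` as "disabled" are assumptions.

```python
import math
import random


def filter_top_k_top_p(logits, temperature=0.7, top_k=50, top_p=0.6):
    """Return {token_id: prob} kept after temperature, top-k, then top-p filtering.

    Hypothetical helper for illustration; not FastChat's implementation.
    """
    # Temperature scaling followed by a numerically stable softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank token ids by probability, highest first.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # top_k: keep at most k candidates (assumption: k <= 0 disables the filter).
    if top_k > 0:
        ranked = ranked[:top_k]

    # top_p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Renormalize the surviving probabilities so they sum to 1.
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample(filtered, rng=random):
    """Draw one token id from the filtered distribution."""
    ids = list(filtered)
    return rng.choices(ids, weights=[filtered[i] for i in ids], k=1)[0]
```

With the values from the logs below (`temperature=0.7`, `top_k=50`, `top_p=0.6`), at most 50 candidates survive the first filter, and of those only the most likely tokens covering 60% of the probability mass remain eligible for sampling.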

Verification:

controller

2023-05-05 00:51:45 | INFO | gradio_web_server | ==== request ====

{'model': 'vicuna-13b', 'prompt': "A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: testing ASSISTANT:", 'temperature': 0.7, 'top_k': 50, 'top_p': 0.6, 'max_new_tokens': 512, 'stop': None, 'stop_token_ids': None, 'echo': False}

worker

2023-05-05 00:51:45 | INFO | model_worker | request params {'model': 'vicuna-13b', 'prompt': "A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. USER: testing ASSISTANT:", 'temperature': 0.7, 'top_k': 50, 'top_p': 0.6, 'max_new_tokens': 512, 'stop': None, 'stop_token_ids': None, 'echo': False}...

UI