FastChat

What's the problem?

Running `python3 -m fastchat.model.apply_delta --base-model-path E:\llama-13b-hf --target-model-path D:\vicuna-13b-delta-v0 --delta-path D:\13b --low-cpu-mem` produces:

Traceback (most recent call last):

File "D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py", line 161, in

Modify `llama-13b-hf/tokenizer_config.json`: change "LLaMATokenizer" to "LlamaTokenizer".
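The edit suggested above can also be scripted, which avoids the kind of casing typo that is easy to make by hand. This is only a sketch: it assumes the class name is stored under a `tokenizer_class` key in `tokenizer_config.json`, and the helper name `fix_tokenizer_class` is made up for illustration.

```python
import json
from pathlib import Path


def fix_tokenizer_class(config_path):
    """Normalize any casing of 'LlamaTokenizer' in tokenizer_config.json.

    Returns True if the file was rewritten, False if the value was already
    correct or names a different tokenizer class.
    Assumption: the class name lives under the "tokenizer_class" key.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))

    current = str(config.get("tokenizer_class") or "")
    # Only touch case variants such as "LLaMATokenizer" or "LlaMATokenizer".
    if current == "LlamaTokenizer" or current.lower() != "llamatokenizer":
        return False

    config["tokenizer_class"] = "LlamaTokenizer"
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return True
```

With the paths from the command above, the call would be `fix_tokenizer_class(r"E:\llama-13b-hf\tokenizer_config.json")`. Back up the file first, since it is rewritten in place.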

I have changed the word. But the problem still exists.

===== RESTART: D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py ====

Traceback (most recent call last):

File "D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py", line 161, in

Upgrade your transformers version, then check it by running `from transformers import LlamaTokenizer`.
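A small script can run that check and report the installed version at the same time. This is a rough sketch; the function name `check_llama_tokenizer` is made up here, and a successful import only shows that the class name exists in the installed release.

```python
import importlib.metadata


def check_llama_tokenizer():
    """Return (installed_version, importable) for the transformers package."""
    try:
        version = importlib.metadata.version("transformers")
    except importlib.metadata.PackageNotFoundError:
        # transformers is not installed in this environment at all.
        return None, False

    try:
        from transformers import LlamaTokenizer  # noqa: F401
    except ImportError:
        # Installed, but this release does not expose LlamaTokenizer.
        return version, False
    return version, True


version, ok = check_llama_tokenizer()
print(f"transformers {version}: LlamaTokenizer importable = {ok}")
```

If the second value is `False`, upgrading with `pip install --upgrade transformers` and rerunning the script is the next thing to try.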

I have changed the word. But the problem still exists.

===== RESTART: D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py ====

Traceback (most recent call last):

File "D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py", line 161, in `<module>`

apply_delta_low_cpu_mem(

File "D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py", line 71, in apply_delta_low_cpu_mem

base_tokenizer = AutoTokenizer.from_pretrained(base_model_path, use_fast=False)

File "E:\Python\Python311\Lib\site-packages\transformers\models\auto\tokenization_auto.py", line 689, in from_pretrained

raise ValueError(

ValueError: Tokenizer class LlaMATokenizer does not exist or is not currently imported.

Hi, check your spelling. `LlaMATokenizer` is not correct; the class name is `LlamaTokenizer`.

Could you give me any suggestions?

model = TFAutoModel.from_pretrained("bert-base-uncased")

Downloading tf_model.h5: 0%| | 0.00/536M [01:06<?, ?B/s]

█▏ | 52.4M/536M [00:38<06:02, 1.34MB/s]

Traceback (most recent call last):

File "E:\Python\Python311\Lib\site-packages\urllib3\response.py", line 444, in _error_catcher

yield

File "E:\Python\Python311\Lib\site-packages\urllib3\response.py", line 567, in read

data = self._fp_read(amt) if not fp_closed else b""

File "E:\Python\Python311\Lib\site-packages\urllib3\response.py", line 533, in _fp_read

return self._fp.read(amt) if amt is not None else self._fp.read()

File "E:\Python\Python311\Lib\http\client.py", line 466, in read

s = self.fp.read(amt)

File "E:\Python\Python311\Lib\socket.py", line 706, in readinto

return self._sock.recv_into(b)

File "E:\Python\Python311\Lib\ssl.py", line 1278, in recv_into

return self.read(nbytes, buffer)

File "E:\Python\Python311\Lib\ssl.py", line 1134, in read

return self._sslobj.read(len, buffer)

ssl.SSLError: [SSL: DECRYPTION_FAILED_OR_BAD_RECORD_MAC] decryption failed or bad record mac (_ssl.c:2576)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "E:\Python\Python311\Lib\site-packages\requests\models.py", line 816, in generate

yield from self.raw.stream(chunk_size, decode_content=True)

File "E:\Python\Python311\Lib\site-packages\urllib3\response.py", line 628, in stream

data = self.read(amt=amt, decode_content=decode_content)

File "E:\Python\Python311\Lib\site-packages\urllib3\response.py", line 566, in read

with self._error_catcher():

File "E:\Python\Python311\Lib\contextlib.py", line 155, in `__exit__`

self.gen.throw(typ, value, traceback)

File "E:\Python\Python311\Lib\site-packages\urllib3\response.py", line 455, in _error_catcher

raise SSLError(e)

urllib3.exceptions.SSLError: [SSL: DECRYPTION_FAILED_OR_BAD_RECORD_MAC] decryption failed or bad record mac (_ssl.c:2576)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "

loading tf_model.h5: 10%|█████▏ | 52.4M/536M [00:53<06:02, 1.34MB/s]

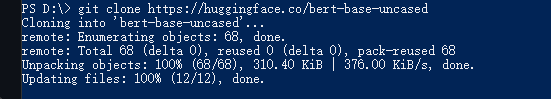

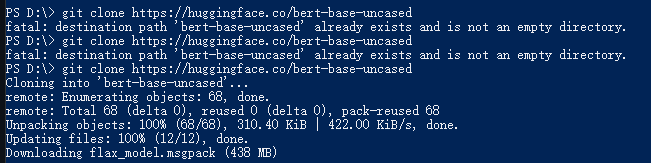

It looks like the download from huggingface_hub failed. You'd better download the model to a local directory first and then run your program. Here is the model: https://huggingface.co/bert-base-uncased @Burgeon0110
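If the SSL error is transient (an assumption; a proxy or antivirus interfering with TLS would need a different fix), retrying the download a few times before loading from the local copy may be enough. The sketch below is a generic retry helper. The name `retry` and its parameters are made up for illustration, and the default of retrying on `OSError` assumes the failure surfaces as a `requests` or `ssl` exception, both of which derive from `OSError`.

```python
import time


def retry(fetch, attempts=3, delay_seconds=5.0, retry_on=(OSError,)):
    """Call fetch() until it succeeds or attempts run out.

    The last exception is re-raised unchanged once attempts are exhausted.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except retry_on as exc:
            if attempt == attempts:
                raise
            print(f"attempt {attempt} failed ({exc!r}); retrying in {delay_seconds}s")
            time.sleep(delay_seconds)


# Hypothetical usage (needs network access and the huggingface_hub package):
# from huggingface_hub import snapshot_download
# from transformers import TFAutoModel
# local_path = retry(lambda: snapshot_download(repo_id="bert-base-uncased"))
# model = TFAutoModel.from_pretrained(local_path)
```

`snapshot_download` fetches every file in the repository, so a manual download of only the files you need from the model page is a reasonable alternative on a slow connection.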

It seems that this process is dead.

DEAD!

Do you see this message?

File "D:\FastChat-main\FastChat-main\fastchat\model\apply_delta.py", line 161, in

@Burgeon0110 this is an old issue, the transformers library fixed that a while ago. Mind if we close this one? Do you still need help with it?

Feel free to reopen this if the issue persists.