chown/chgrp on dynamically provisioned PVC fails

/kind bug

What happened?

Using the dynamic provisioning feature introduced by #274 with applications that try to chown their PV fails.

What you expected to happen?

With the old efs-provisioner, this caused no issues. But with dynamic provisioning in this CSI driver, the chown command fails. I must admit I don't understand how the uid/gid mechanism works with EFS access points. The pod user does not seem to have any association with the uid/gid on the access point, yet pods can read and write the mounted PV just fine.

How to reproduce it (as minimally and precisely as possible)?

- Use the dynamic provisioning feature introduced by #274

- Create a StorageClass:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: efs-sc
    mountOptions:
      - tls
    parameters:
      directoryPerms: "700"
      fileSystemId: fs-<id>
      provisioningMode: efs-ap
    provisioner: efs.csi.aws.com

- Use it with application charts that have a chown step in the beginning, like:

- https://github.com/grafana/helm-charts/blob/8dfa6da2790911ee78e2c9cf62f950f20e4a8129/charts/grafana/templates/_pod.tpl#L22-L40

- https://github.com/jenkins-x-charts/nexus/blob/f05c2172a550c6bf3daf2c25eef940e67290d346/Dockerfile#L9-L10

For the first chart, we saw the initContainer failing. We just tried disabling the initContainer on the grafana chart with these chart overrides:

    initChownData:
      enabled: false

And the application worked fine.

For the nexus chart, the logs show a whole bunch of errors like:

    chgrp: changing group of '/nexus-data/elasticsearch/nexus': Operation not permitted

Perhaps these charts don't really need that step with dynamic provisioning. Another nexus chart seems to have an option to skip the chown step: https://github.com/travelaudience/docker-nexus/blob/a86261e35734ae514c0236e8f371402e2ea0feec/run#L3-L6

Anything else we need to know?:

Environment

- Kubernetes version (use kubectl version):

    Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.1", GitCommit:"c4d752765b3bbac2237bf87cf0b1c2e307844666", GitTreeState:"clean", BuildDate:"2020-12-23T02:22:53Z", GoVersion:"go1.15.6", Compiler:"gc", Platform:"linux/amd64"}
    Server Version: version.Info{Major:"1", Minor:"17+", GitVersion:"v1.17.12-eks-7684af", GitCommit:"7684af4ac41370dd109ac13817023cb8063e3d45", GitTreeState:"clean", BuildDate:"2020-10-20T22:57:40Z", GoVersion:"go1.13.15", Compiler:"gc", Platform:"linux/amd64"}

- Driver version:

    amazon/aws-efs-csi-driver:master
    quay.io/k8scsi/csi-node-driver-registrar:v1.3.0
    k8s.gcr.io/sig-storage/csi-provisioner:v2.0.2

I'm also seeing this. It means that things like Postgres, which fails to start if its data directory is not owned by the postgres user, don't work with dynamic provisioning.

From https://docs.aws.amazon.com/efs/latest/ug/accessing-fs-nfs-permissions.html,

> By default, root squashing is disabled on EFS file systems. Amazon EFS behaves like a Linux NFS server with no_root_squash. If a user or group ID is 0, Amazon EFS treats that user as the root user, and bypasses permissions checks (allowing access and modification to all file system objects). Root squashing can be enabled on a client connection when the AWS Identity and Access Management (AWS IAM) identity or resource policy does not allow access to the ClientRootAccess action. When root squashing is enabled, the root user is converted to a user with limited permissions on the NFS server.

I can reproduce this both without a file system policy and with one that grants elasticfilesystem:ClientRootAccess; it doesn't seem to make a difference. Granting elasticfilesystem:ClientRootAccess to the driver's and the pod's roles doesn't help either.

Thanks for reporting this. We're investigating possible fixes, but in the meantime let me explain the reason this is happening.

Dynamic provisioning shares a single file system among multiple PVs by using EFS Access Points. Access Points allow server-side overrides of user/group information: whatever user/group the app/container runs as is replaced by the one configured on the Access Point. When we create an AP with dynamic provisioning, we allocate a unique UID/GID (for instance 50000:50000) that all operations are mapped to, and we create a unique directory (e.g. /ap50000) owned by that user/group. This ensures that no matter how the container is configured, it has read/write access to its root directory.
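To make the allocation step concrete, here is a minimal sketch of picking a unique ID for a new access point. It is only an illustration under assumptions, not the driver's actual code. The function name, the range bounds and the idea of passing the IDs already in use as a space-separated list are all hypothetical.

```shell
# Hypothetical sketch: print the lowest ID in [start, end] that is not
# already used by an existing access point. $3 is a space-separated list
# of IDs already in use (an assumed input format, not the driver's).
next_free_id() (
    start=$1 end=$2 used=" $3 "
    id=$start
    while [ "$id" -le "$end" ]; do
        case $used in
            *" $id "*) id=$((id + 1)) ;;   # taken: try the next one
            *) echo "$id"; exit 0 ;;       # free: use it as both UID and GID
        esac
    done
    echo "no free ID in range" >&2
    exit 1
)
```

For example, `next_free_id 50000 50010 "50000 50001"` prints 50002. That value would then serve as both the UID and the GID of the access point, and as the owner of its directory.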

In this case, the application is taking its own steps to make its root directory writable. For instance, if the container has an application user with UID/GID 100:100 and that user runs ls -la on its FS root directory, it sees that the directory is owned by 50000:50000, not 100:100. It therefore assumes it needs to chown/chmod the directory before it can work. However, even if we allowed this command to go through, the application would lose access to its own volume, because its operations would still be performed as 50000:50000, which would no longer own the directory.
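The check that trips these applications can be sketched as follows. This is a simplified, hypothetical version: real images differ, and the function name is made up. It assumes GNU stat, as used elsewhere in this thread. The function compares the owner UID of the data directory with the UID the container runs as, and asks for a chown on any mismatch.

```shell
# Hypothetical sketch of the ownership check many images run at startup.
# Prints "chown" when the directory's owner UID differs from the UID the
# process runs as, and "ok" otherwise. Exits 1 if stat fails.
app_ownership_check() (
    dir=$1
    owner_uid=$(stat -c '%u' "$dir") || exit 1
    if [ "$owner_uid" != "$(id -u)" ]; then
        echo chown
    else
        echo ok
    fi
)
```

In the 100:100 example above, the directory reports 50000 as its owner. This check therefore answers chown even though the volume is already writable.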

This is why the original issue above was resolved by disabling the chown/chgrp checks. This method can be used as a workaround for any application, since you can trust the PVs to be writable out of the box.
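If an application's startup check can be changed, testing writability directly is more robust than comparing UIDs, because it asks the question that actually matters on an access point. A minimal sketch follows. The function name and the probe file name are hypothetical.

```shell
# Hypothetical sketch: succeed if the current user can create and delete a
# file in $1, regardless of which UID/GID `ls -la` shows as the owner.
can_write_dir() (
    probe="$1/.write-probe.$$"
    ( : > "$probe" ) 2>/dev/null || exit 1   # try to create an empty file
    rm -f "$probe"                           # clean up after ourselves
)
```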

> This is why the original issue above was resolved by disabling the chown/chgrp checks. This method can be used as a workaround for any application

Some applications don't support disabling ownership checks. E.g. I'm not aware of any way to disable it in Postgres. In such cases, the only workaround I've found is to create a user for the UID assigned by the driver (something like useradd --uid "$(stat -c '%u' "$mountpath")") and then run the application as that user.
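Here is a sketch of that workaround as an entrypoint helper. It rests on several assumptions:

- the container starts as root;
- the image ships shadow-utils' groupadd/useradd and util-linux's setpriv;
- the names voluser/volgroup and both function names are hypothetical.

The helper reads the numeric owner of the mount path and execs the application with that UID/GID.

```shell
# Print the numeric owner of a mount path as "uid:gid".
volume_owner() (
    stat -c '%u:%g' "$1"
)

# Hypothetical entrypoint helper (must run as root): make sure the volume's
# UID/GID have passwd/group entries, then exec the command as that user.
run_as_volume_owner() {
    mountpath=$1; shift
    uid=$(stat -c '%u' "$mountpath") || return 1
    gid=$(stat -c '%g' "$mountpath") || return 1
    getent group "$gid" >/dev/null || groupadd --gid "$gid" volgroup
    getent passwd "$uid" >/dev/null ||
        useradd --uid "$uid" --gid "$gid" --no-create-home voluser
    exec setpriv --reuid="$uid" --regid="$gid" --clear-groups -- "$@"
}
```

A usage example would be `run_as_volume_owner /var/lib/postgresql/data docker-entrypoint.sh postgres`. Creating the passwd entry matters for programs that look up the name of their own UID.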

Part of the reason efs-provisioner worked seamlessly is that it relied on a beta annotation "pv.beta.kubernetes.io/gid" https://github.com/kubernetes-retired/external-storage/blob/201f40d78a9d3fd57d8a441cfc326988d88f35ec/nfs/pkg/volume/provision.go#L62 that silently does basically what your workaround does: it ensures that the Pod using the PV has the annotated group in its supplemental groups (i.e. if the annotation says '100' and you execute groups as the pod user, '100' will be among them).

This feature is very old and predates the rigorous KEP/feature tracking system that exists today, and I think it's been forgotten by sig-storage. Certainly I am culpable for relying on it while doing nothing to make it more than a beta annotation. I'll try to bring it up in sig-storage and see if we can rely on it as an alternative solution.
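The guarantee that the pv.beta.kubernetes.io/gid annotation provided can be checked from inside a pod. Here is a small sketch (the function name is hypothetical) that succeeds when a given GID is among the current process's groups, i.e. in the list that groups or id -G prints.

```shell
# Hypothetical sketch: succeed if GID $1 is among the current process's
# group IDs (effective GID plus supplementary groups, as listed by id -G).
has_gid() (
    for g in $(id -G); do
        [ "$g" = "$1" ] && exit 0
    done
    exit 1
)
```

With the annotation set to 100, `has_gid 100` would succeed for the pod's user.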

> Part of the reason efs-provisioner worked seamlessly is it relied on a beta annotation "pv.beta.kubernetes.io/gid" https://github.com/kubernetes-retired/external-storage/blob/201f40d78a9d3fd57d8a441cfc326988d88f35ec/nfs/pkg/volume/provision.go#L62 that silently does basically what your workaround does: it ensures that the Pod using the PV has the annotated group in its supplemental groups

Does EFS still reject the chown call when using efs-provisioner, or is it that applications are expected not to call chown since their group already owns the directory?

If I'm reading the Postgres code correctly, it seems to check the UID of the directory. So I'm not sure supplemental groups would work for all use cases.
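Here is a rough approximation of what Postgres checks at startup, written only to show why supplemental groups cannot help. It is not Postgres's code. The function name and the exact permission mask (no group-write and no "other" bits) are our reading of its behaviour, and GNU stat is assumed.

```shell
# Rough approximation (not Postgres's code) of its data-directory checks:
# 1. the directory must be owned by the UID the server runs as
#    (group ownership and supplemental groups play no part here);
# 2. the mode must not grant group-write or any "other" bits (mask 027).
pgdata_check() (
    dir=$1
    [ "$(stat -c '%u' "$dir")" = "$(id -u)" ] || { echo "wrong ownership"; exit 1; }
    mode=$(stat -c '%a' "$dir")                 # octal mode, e.g. 700
    [ $(( 0$mode & 027 )) -eq 0 ] || { echo "invalid permissions"; exit 1; }
    echo ok
)
```

On a dynamically provisioned volume, the first test fails with wrong ownership no matter which groups the process has. This matches the FATAL error reported further down in this thread.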

> Does EFS still reject the chown call when using efs-provisioner, or is it that applications are expected not to call chown since their group already owns the directory?

It's the latter, so you are right: the supplemental group approach won't work in the Postgres case.

Does a subdirectory of the access point have the same restriction on chown'ing? If not, I suppose one could create the subdirectory before starting the application, maybe with an initContainer, and then let the application chown it. Still not a very elegant workaround, though.
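The initContainer step for this idea could look roughly like the sketch below. Whether EFS actually permits the chown on a subdirectory of an access point is exactly the open question here, so this only shows the mechanics. The function name and its arguments are hypothetical.

```shell
# Hypothetical initContainer step: create $1/$2 and try to chown it to $3
# (given as "uid:gid"). Prints "chowned" on success; on failure prints
# "chown failed" and exits 1.
prepare_subdir() (
    base=$1 sub=$2 owner=$3
    mkdir -p "$base/$sub" || exit 1
    if chown "$owner" "$base/$sub" 2>/dev/null; then
        echo chowned
    else
        echo "chown failed"
        exit 1
    fi
)
```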

Issues go stale after 90d of inactivity.

Mark the issue as fresh with /remove-lifecycle stale.

Stale issues rot after an additional 30d of inactivity and eventually close.

If this issue is safe to close now please do so with /close.

Send feedback to sig-contributor-experience at kubernetes/community.

/lifecycle stale

/remove-lifecycle stale

Can someone please suggest a workaround for postgres chown issue when using EFS with dynamic provisioning via access points? (I honestly hope I don't have to fallback to static provisioning to make postgres work on EKS!)

> This is why the original issue above was resolved by disabling the chown/chgrp checks. This method can be used as a workaround for any application
>
> Some applications don't support disabling ownership checks. E.g. I'm not aware of any way to disable it in Postgres. In such cases, the only workaround I've found is to create a user for the UID assigned by the driver (something like useradd --uid "$(stat -c '%u' "$mountpath")") and then run the application as that user.

@gabegorelick - if I set runAsUser and runAsGroup in the pod security context, the postgres pod fails with a FATAL error:

    The files belonging to this database system will be owned by user "postgres".
    This user must also own the server process.
    The database cluster will be initialized with locale "en_US.utf8".
    The default database encoding has accordingly been set to "UTF8".
    The default text search configuration will be set to "english".
    Data page checksums are disabled.
    fixing permissions on existing directory /var/lib/postgresql/data/pgdata ... ok
    creating subdirectories ... ok
    selecting dynamic shared memory implementation ... posix
    selecting default max_connections ... 20
    selecting default shared_buffers ... 400kB
    selecting default time zone ... Etc/UTC
    creating configuration files ... ok
    2021-09-07 11:06:38.315 UTC [68] FATAL: data directory "/var/lib/postgresql/data/pgdata" has wrong ownership
    2021-09-07 11:06:38.315 UTC [68] HINT: The server must be started by the user that owns the data directory.
    child process exited with exit code 1
    initdb: removing contents of data directory "/var/lib/postgresql/data/pgdata"
    running bootstrap script ...

Here is the Kubernetes manifest I am using:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: postgres
    spec:
      serviceName: postgres-service
      selector:
        matchLabels:
          app: postgres
      replicas: 2
      template:
        metadata:
          labels:
            app: postgres
        spec:
          securityContext:
            fsGroup: 999
            runAsUser: 999
            runAsGroup: 999
          containers:
            - name: postgres
              image: postgres:latest
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - name: postgres-persistent-storage
                  mountPath: /var/lib/postgresql/data
              env:
                - name: POSTGRES_DB
                  value: crm
                - name: PGDATA
                  value: /var/lib/postgresql/data/pgdata
                - name: POSTGRES_USER
                  valueFrom:
                    secretKeyRef:
                      name: db-credentials
                      key: user
                - name: POSTGRES_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: db-credentials
                      key: pwd
          # Volume Claim
          volumes:
            - name: postgres-persistent-storage
              persistentVolumeClaim:
                claimName: efs-claim

This issue probably needs to be addressed, but I'm not sure about using EFS for postgres. I have seen less demanding storage use cases where EFS struggles.

> Can someone please suggest a workaround for postgres chown issue when using EFS with dynamic provisioning via access points?

I used https://github.com/kubernetes-sigs/aws-efs-csi-driver/pull/434. That's still not merged though, so you'll have to run a fork if you want it. But that is the only way that I'm aware of to specify a UID for the provisioned volumes.

> if I setup runAsUser and runAsGroup in pod security context then postgres pod fails with a FATAL error

One workaround is to not do this. Instead, use runAsUser: 0 and fsGroup: 0. Then in your container's entrypoint, invoke postgres (or whatever process you want to run) as the UID of the dynamic volume.

> One workaround is to not do this. Instead, use runAsUser: 0 and fsGroup: 0. Then in your container's entrypoint, invoke postgres (or whatever process you want to run) as the UID of the dynamic volume.

@gabegorelick can you please tell me what I need to change the container's entrypoint command from cmd [postgres] to?

I see the PR fixing this is still not merged. I'm not running postgres; I'm trying to run freshclam with EFS storage for the signatures database. Its entrypoint chowns the database directory with no prior checks or options to override that step.

I would love to see this PR merged. I'm currently hitting this issue while trying to run docker:dind with a PV.

I am also having the same issue with some of my applications.

Ran into this issue. As gabegorelick mentioned, I only got it to work by chowning the mount path to the UID and GID of the dynamic volume and then setting the postgres user to the same IDs. Not ideal, but it works...

Helm code:

    command: ["bash", "-c"]
    args: ["usermod -u $(stat -c '%u' '{{ .Values.postgres_volume_mount_path }}') postgres && \
      groupmod -g $(stat -c '%g' '{{ .Values.postgres_volume_mount_path }}') postgres && \
      chown -R postgres:postgres {{ .Values.postgres_volume_mount_path }} && \
      /usr/local/bin/docker-entrypoint.sh postgres"]

When is this going to be fixed? I see no issues creating and mounting manually created APs with the proper UID/GIDs, but this driver doesn't seem to respect what I pass as StorageClass parameters.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      annotations:
        meta.helm.sh/release-name: aws-efs-csi-driver
        meta.helm.sh/release-namespace: aws-efs-csi
        storageclass.kubernetes.io/is-default-class: "false"
      labels:
        app.kubernetes.io/managed-by: Helm
      name: efs-csi-app-sc
    parameters:
      basePath: /csi
      directoryPerms: "2771"
      uid: "0"
      gid: "40055"
      fileSystemId: fs-blablabl
      provisioningMode: efs-ap
    provisioner: efs.csi.aws.com
    reclaimPolicy: Delete
    volumeBindingMode: Immediate

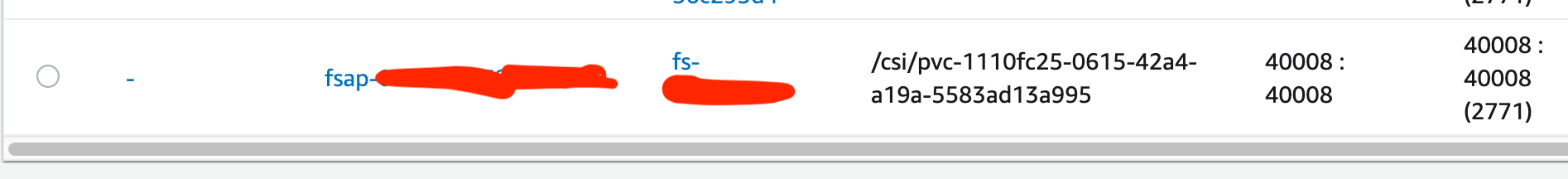

Gives me this in AWS:

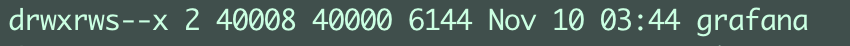

And an even weirder result on the pod (notice the GID):

This driver is messed up on so many levels.

We've hit this while attempting to run a mailer in a container. If everything were set up correctly, the code would never need to call chown(), so a quick workaround (to avoid patching and recompiling) was to stub out chown()/fchown() so that they always return 0.

Method 1 - LD_PRELOAD (using gcc):

    /* exim_chown.c: turn chown()/fchown() into no-ops that report success */
    #include <sys/types.h>
    #include <unistd.h>

    int chown(const char *path, uid_t owner, gid_t group) { return 0; }
    int fchown(int fd, uid_t owner, gid_t group) { return 0; }

    gcc -c -fPIC exim_chown.c
    gcc -shared -o libeximchown.so exim_chown.o
    LD_PRELOAD=/tmp/libeximchown.so /usr/sbin/exim ...    # exim must not be setuid
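The "must not be setuid" caveat is worth checking before relying on method 1. For setuid/setgid binaries, the dynamic loader runs in secure-execution mode and restricts LD_PRELOAD. Here is a small check (the function name is hypothetical).

```shell
# Hypothetical check: succeed only if $1 is a regular file with neither the
# setuid nor the setgid bit set, i.e. a binary for which an LD_PRELOAD shim
# is not subject to the secure-execution restrictions.
preload_usable() (
    [ -f "$1" ] && [ ! -u "$1" ] && [ ! -g "$1" ]
)
```

A usage example would be `preload_usable /usr/sbin/exim || echo "exim is setuid/setgid; the shim may not be loaded"`.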

Method 2 - patch (x86) object directly (using objdump):

https://gist.github.com/shaded-enmity/b8fd0dc883e3c26681c4fe9f32c9493d

    $ ./ret0.sh libpostfix-util.so chown fchown
    Patching chown@plt at offset 48800 in libpostfix-util.so
    Patching fchown@plt at offset 52096 in libpostfix-util.so

I would hope that the platform can handle this problem with high priority, without expecting every application that might want to use the system to review, and if need be change, its code. If, as this issue suggests, multiple applications are failing because the platform doesn't adhere to the traditional expectations of how Unix users and groups work, how many more problems still lie undiscovered?

So if I read this correctly, #434 (merged) will allow the uid and gid values to be squashed to preferred values. If these are set to match the user/group that is running, new files will appear with the user/group that created them, which should avoid the typical logic that decides it needs to use chown. (Should this go further and support something like idmapd?)

The second question is: what is the right behaviour for chown in such an environment? Should it fail if the requested state doesn't match the current state (because the user and group are fixed and can't be changed)? Should it always succeed (because the process will have access to the file regardless of the displayed user/group)? Or should that decision be another parameter, so that the person setting this up can choose which of those two options is right for them?

> Gives me this in AWS:
>
> And an even weirder result on the pod (notice the GID):
>
> This driver is messed up on so many levels.

This is because the current Helm chart installs version 1.3.5, which doesn't include the GID and UID configuration yet. To use it, override the image tag value with the "master" tag, which enables these parameters.

You can override this value in the values.yaml file as follows:

    nameOverride: ""
    fullnameOverride: ""
    replicaCount: 2
    image:
      repository: amazon/aws-efs-csi-driver
      tag: "master" ## here!!
      pullPolicy: IfNotPresent
    ...

or with the helm command:

    helm upgrade --install aws-efs-csi-driver --namespace kube-system aws-efs-csi-driver/aws-efs-csi-driver --set image.tag="master"

To check which version your pods are running:

    kubectl describe pods -n kube-system efs-csi-controller-{....} | grep Image

NOTE: use this only as a workaround until a release with the gid and uid parameters is published, since always pulling the master tag is not reliable.

Even with master or the latest release (1.3.6), with the AP set to 0:0, a simple chown -R root:root /path run as uid 0 still gives:

    chown: /mountpath: Operation not permitted
    $ id
    uid=0(root) gid=0(root) groups=0(root)

As @svaranasi-traderev said, this driver seems to be very messed up and AWS doesn't seem to care at all.

> Even with master or the latest release (1.3.6), setting the AP to 0:0, a simple chown -R root:root /path as uid 0, still gives:
>
>     chown: /mountpath: Operation not permitted
>     id
>     uid=0(root) gid=0(root) groups=0(root)
>
> Like @svaranasi-traderev said; this driver seems to be very messed up and AWS doesn't seem to care at all.

Noteworthy however:

When setting uid and gid to 0 (root) and then creating a new file within the mountpath as any user other than root (the file ends up owned by 0:0, as described in https://github.com/kubernetes-sigs/aws-efs-csi-driver/issues/618), this arbitrary user can then successfully run a chown on this file. That would not work if uid were set to anything other than 0.

This leads me to believe that any FS operation on this mountpath is executed as the AP user, regardless of the currently active user. Again, I don't understand the magic behind this yet, so this conclusion might be obvious to others :sweat_smile:. Is this the default behavior when setting a user for an NFS mount?

> When setting uid and gid to 0 (root) and then creating a new file within the mountpath as any other user than root (this will chown the file to 0:0, as described in #618), this arbitrary user can then successfully run a chown on this file, which would not work if uid is set to anything other than 0.

The chown command only exits with a non-zero exit code on Alpine Linux, even though all files are already owned by uid 0.

> When setting uid and gid to 0 (root) and then creating a new file within the mountpath as any other user than root (this will chown the file to 0:0, as described in #618), this arbitrary user can then successfully run a chown on this file, which would not work if uid is set to anything other than 0.
>
> The chown command only exits with a non-zero exitcode on Alpine Linux. Eventhough all files are already owned by uid 0.

What I mean is this:

- As user foo, I create the file foo within the mount path:

    foo@nginx:/mnt$ touch foo

- Since uid and gid are set to 0, this file will be owned by root (https://github.com/kubernetes-sigs/aws-efs-csi-driver/issues/618):

    foo@nginx:/mnt$ ls -la
    total 8
    drwx------ 2 root root 6144 Jan 20 15:18 .
    drwxr-xr-x 1 root root   51 Jan 20 14:57 ..
    -rw-r--r-- 1 root root    0 Jan 20 15:18 foo

- As user foo, I run chown foo:foo foo. This will succeed. It will not succeed if uid (and gid) is set to anything other than 0.

Thus the chown command (and any other command accessing the FS for that matter) seems to actually run as the AP user, not as the current active user.
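This observation can be turned into a quick diagnostic. The sketch below creates a file as the current user and reports which UID the file system recorded for it. The function name and the probe file name are hypothetical, and GNU stat is assumed. Only the UID is compared, because a setgid directory can legitimately change the group of new files.

```shell
# Hypothetical diagnostic: create a probe file in $1 as the current user and
# report whether the file system recorded a different owner UID for it.
probe_owner() (
    probe="$1/.owner-probe.$$"
    : > "$probe" || exit 1
    owner=$(stat -c '%u' "$probe")
    rm -f "$probe"
    if [ "$owner" = "$(id -u)" ]; then
        echo "not mapped"
    else
        echo "mapped to UID $owner"
    fi
)
```

In the uid=0 setup described above, running it as foo would be expected to print "mapped to UID 0".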

@Jasper-Ben

> Again, don't understand the magic behind this yet, so this conclusion might be obvious to others :sweat_smile:. Is this default behavior when setting a user for an NFS mount?

Yup, it's kinda expected for a root_squash NFS mount.

On a "real" NFS server it can be cleanly amended with setfacl:

    setfacl -m u:user:rwx dir
    setfacl -m g:group:rwx dir
    setfacl -m d:u:user:rwx dir
    setfacl -m d:g:group:rwx dir

but (obviously) EFS does not support ACLs...

Why can't the AP just be created with root_no_squash?

> Why can't the AP just be created with root_no_squash?

I don't think it works for EFS.

According to the docs, EFS does? https://docs.aws.amazon.com/efs/latest/ug/accessing-fs-nfs-permissions.html#accessing-fs-nfs-permissions-root-user

Edit: maybe not for the AP though?

@Jasper-Ben yup, not for AP