pytorch-cifar

Resnet18 experiment results are inconsistent

I ran a simple experiment with ResNet18 and reached 93% accuracy. However, I found that the results differ from those of PyTorch's implementation in torchvision.models.

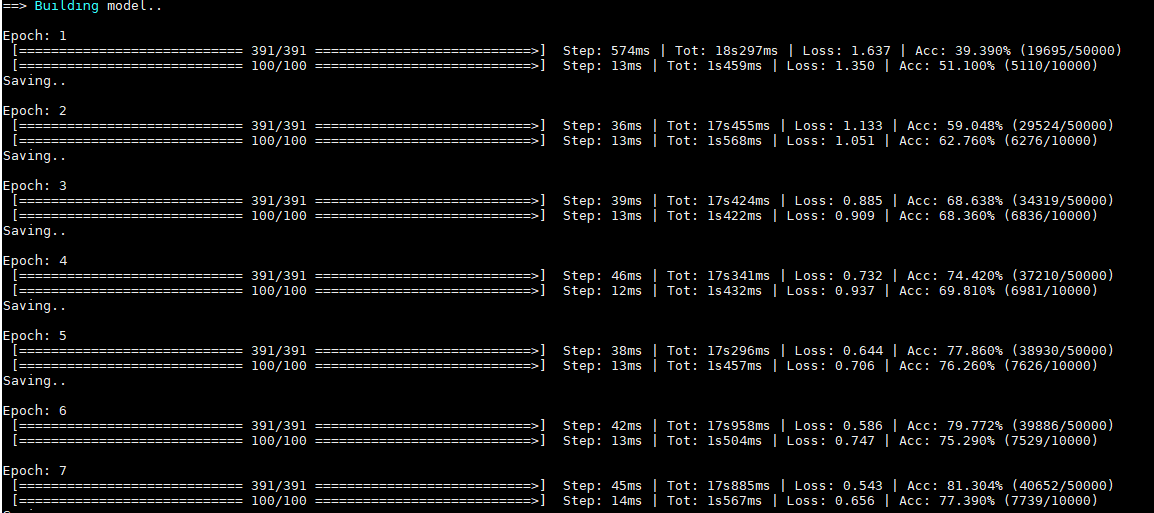

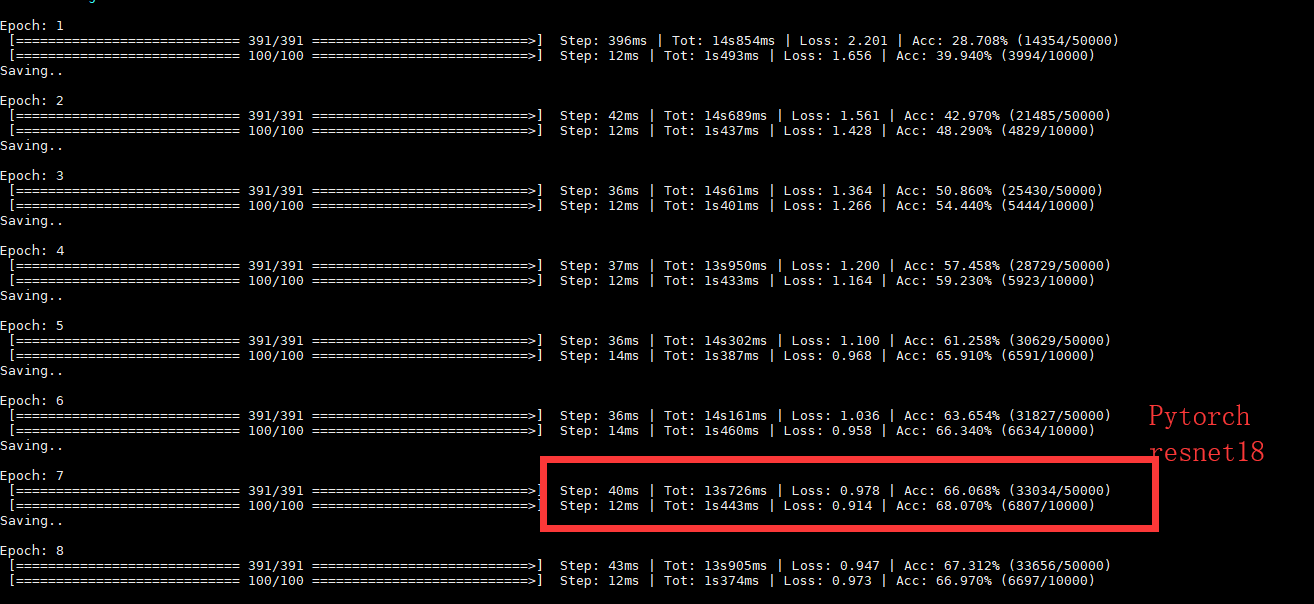

When I train with your code, convergence is faster. When I train with torchvision.models.resnet18() instead, the first few epochs converge more slowly.

The same parameters, but different results.

I want to know what makes them different.

The training process is as follows

The ResNet18 in this repo modifies the initial convolution and the fc layer to accommodate the CIFAR-10 dataset, so it differs slightly from the ImageNet architecture described in the ResNet paper. Maybe this is it?

This may be the reason. Thank you very much. I don't know much about ResNet; I am just implementing some algorithms for comparison experiments.

Another thing to be aware of, which is not mentioned in the README: the models here are trained with data augmentation. Training the exact same model on the same dataset without augmentation should therefore give worse results.

Even the ResNet paper defines a separate ResNet layout for CIFAR10, different from the one for ImageNet. The configuration in the paper's table is for ImageNet. Since CIFAR10 images are smaller, all the downsampling in the regular ResNet leaves almost nothing for the fc layer at the end. The CIFAR10-specific layout is defined in Section 4.2 of the ResNet paper. The models in torchvision are built for ImageNet. This repo uses a ResNet layout adapted to CIFAR10.
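The downsampling argument can be checked with a little arithmetic. The sketch below traces the feature-map side length of a 32x32 input through two layer lists. Each entry is (kernel, stride, padding), and only the strided or stem layers are listed. The two lists are my reading of an ImageNet-style ResNet18 and of a CIFAR-style stem, so treat them as assumptions.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output side length of a conv/pool layer (floor division, dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace(size: int, layers: list[tuple[int, int, int]]) -> list[int]:
    """Return the side length after each layer, starting with the input size."""
    sizes = [size]
    for kernel, stride, padding in layers:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


# (kernel, stride, padding) for: stem conv, max-pool, then the first conv of
# each of the four residual stages. Assumed ImageNet-style ResNet18 layout.
IMAGENET_STYLE = [(7, 2, 3), (3, 2, 1), (3, 1, 1), (3, 2, 1), (3, 2, 1), (3, 2, 1)]

# Assumed CIFAR-style variant: 3x3 stride-1 stem, no max-pool, same four stages.
CIFAR_STYLE = [(3, 1, 1), (3, 1, 1), (3, 2, 1), (3, 2, 1), (3, 2, 1)]

imagenet_sizes = trace(32, IMAGENET_STYLE)
cifar_sizes = trace(32, CIFAR_STYLE)
print(imagenet_sizes)  # [32, 16, 8, 8, 4, 2, 1]
print(cifar_sizes)     # [32, 32, 32, 16, 8, 4]
```

Under these assumptions the ImageNet-style network has already shrunk a CIFAR image to a single 1x1 position before global pooling, while the CIFAR-style stem still has a 4x4 map to pool over.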

I tried adding some tweaks to ResNet18 and got 94.22%, but when I ran it without any changes it reached 94.85% (after a total of 100 epochs)... was it just luck?

Is that difference significant in the first place? I personally view differences in my experiments, even in the range of 1%-3%, as not that significant. After all, chasing percentage numbers should not be our goal.
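One rough way to put a number on "significant" is the binomial standard error of an accuracy measured on the 10,000-image CIFAR-10 test set. The sketch below applies it to the two accuracies quoted above. It treats the two runs as independent samples and ignores seed-to-seed training variance, so it is only a back-of-the-envelope check, not a proper test.

```python
import math

CIFAR10_TEST_SIZE = 10_000  # number of images in the CIFAR-10 test set


def accuracy_std_error(acc: float, n: int = CIFAR10_TEST_SIZE) -> float:
    """Binomial standard error of an accuracy estimated from n test images."""
    return math.sqrt(acc * (1.0 - acc) / n)


def z_score(acc_a: float, acc_b: float, n: int = CIFAR10_TEST_SIZE) -> float:
    """Difference between two accuracies in units of its standard error.

    Assumes the two estimates are independent, which is a simplification
    when both models are evaluated on the same test set.
    """
    se_diff = math.sqrt(accuracy_std_error(acc_a, n) ** 2
                        + accuracy_std_error(acc_b, n) ** 2)
    return (acc_a - acc_b) / se_diff


# Accuracies quoted in this thread: unmodified vs. tweaked ResNet18.
z = z_score(0.9485, 0.9422)
print(f"standard error of 94.85%: {accuracy_std_error(0.9485):.4f}")
print(f"difference in standard errors: {z:.2f}")
```

The 0.63-point gap comes out at about two standard errors from test-set sampling alone. Repeating each configuration with several random seeds would be the more reliable way to tell a real effect from luck.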

I just saw others also achieved ~95% on ResNet18 (and was about to delete my comment).

I agree. My goal wasn't to chase numbers but to test out my ideas, which no one would care about if I didn't have experimental support.

> My goal was to test out my ideas, which no one would care if I don't have experimental support

Agreed!

I have reproduced the CIFAR implementation from the original ResNet paper in my repository; please see (https://github.com/Lornatang/ResNet-PyTorch/tree/master/examples/cifar). Thank you, and any suggestions are welcome.

@Lornatang https://github.com/Lornatang/ResNet/tree/master/examples/cifar gives a 404.

@psteinb New link: https://github.com/Lornatang/ResNet-PyTorch/tree/master/examples/cifar