frontends-team-compass

Lab extension analytics

I've recently written some scripts to get metadata from npm for all extensions (published with keyword 'jupyterlab-extension', similar to extension manager), and do some analysis of this data. I want to share the code, and hopefully set up a job/page so that updated reports can be viewed on demand. On the weekly call yesterday, the suggestion was to put the code in this repo, but I see the description of the repo is written as

> A repository for team interaction, syncing, and handling meeting notes across the JupyterLab ecosystem.

I also figured any PRs to the code might end up making a lot of noise on this repo. So, for now I've put the code up on https://github.com/vidartf/lab_ext_profile, and hope to move it over to the jupyterlab org later :)
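For readers who want a feel for the data collection without opening the repo, here is a minimal sketch of paging through npm search results for a keyword. It is not the code from `lab_ext_profile`. It assumes the public npm registry search endpoint (`/-/v1/search` with `text`, `size` and `from` query parameters, returning `objects` and `total`), and the helper names `search_url` and `fetch_all` are made up for illustration.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the public npm registry search endpoint.
SEARCH_URL = "https://registry.npmjs.org/-/v1/search"


def search_url(keyword, size=250, offset=0):
    """Build the search URL for one page of packages tagged with `keyword`."""
    query = urlencode({"text": f"keywords:{keyword}", "size": size, "from": offset})
    return f"{SEARCH_URL}?{query}"


def fetch_all(keyword, size=250, fetch=None):
    """Collect every search result for `keyword`, one page at a time.

    `fetch` takes a URL and returns the decoded JSON page; it can be
    replaced with a stub so the paging logic runs without network access.
    """
    if fetch is None:
        fetch = lambda url: json.load(urlopen(url))
    results = []
    while True:
        page = fetch(search_url(keyword, size, len(results)))
        objects = page.get("objects", [])
        results.extend(objects)
        # Stop on an empty page or once the reported total is reached.
        if not objects or len(results) >= page.get("total", 0):
            return results
```

Calling `fetch_all("jupyterlab-extension")` would then return one entry per published package carrying that keyword, each with its `package` metadata and `score`.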

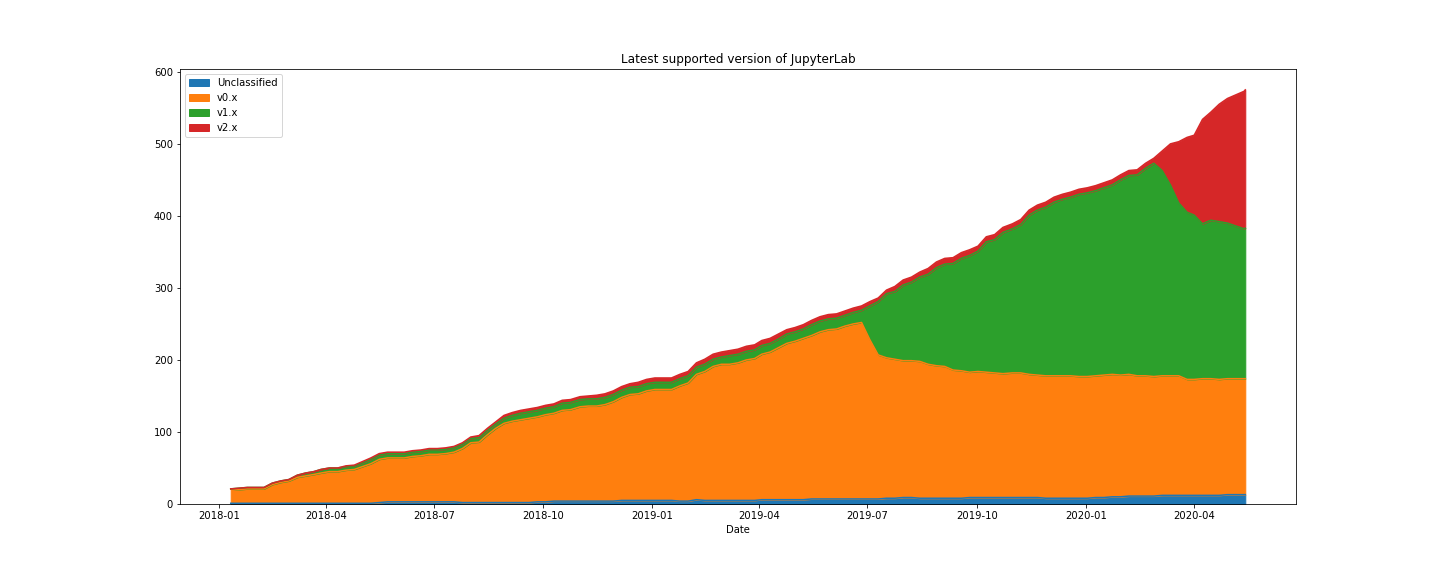

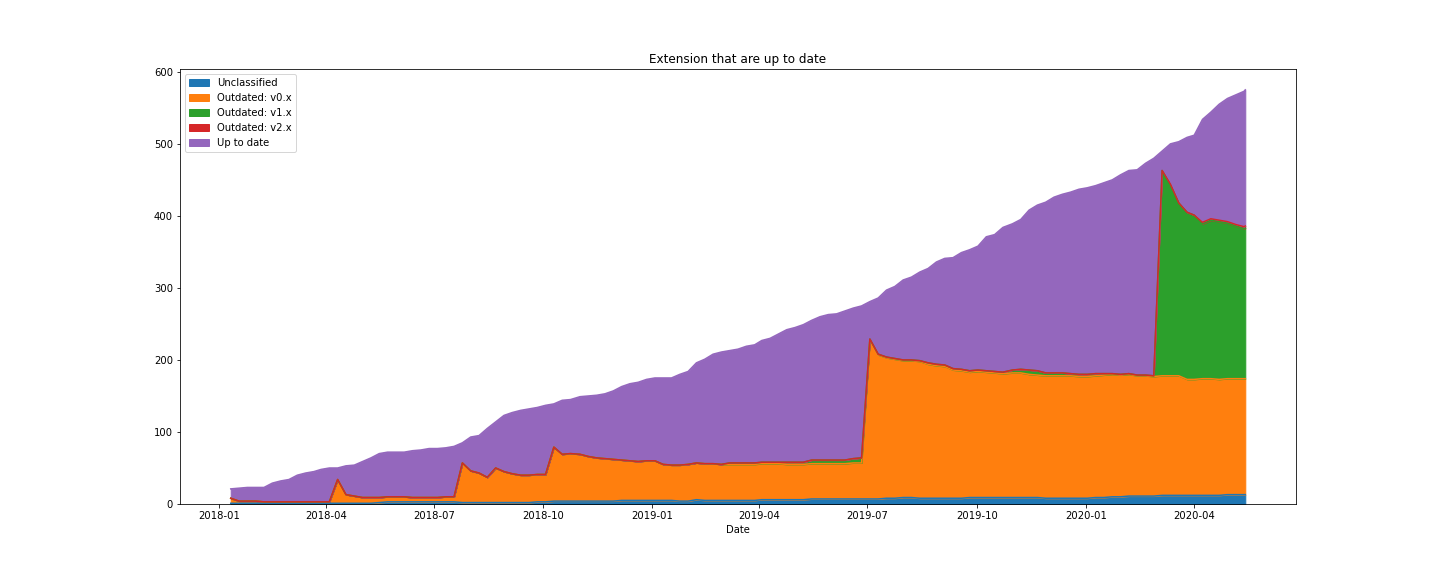

Some data to look at:

Thanks, Vidar, that's really interesting.

I am trying to interpret the second graph. Is it saying that on ~2019-07 a bunch of outdated 0.x extensions were created, or is that the date that 1.0 came out, rendering existing extensions outdated?

Another thing that might be interesting would be to weight each extension by number of downloads...

> I am trying to interpret the second graph. Is it saying that on ~2019-07 a bunch of outdated 0.x extensions were created, or is that the date that 1.0 came out, rendering existing extensions outdated?

The second option, 1.0 came out, so a lot of extensions were suddenly not up to date with the latest release of lab.

> Another thing that might be interesting would be to weight each extension by number of downloads...

Yeah, I'm working on it, but the data we have will be the somewhat opaque score fields:

    "score": {
      "final": 0.9237841281241451,
      "detail": {
        "quality": 0.9270640902288084,
        "popularity": 0.8484861649808381,
        "maintenance": 0.9962706951777409
      }
    },
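As a rough illustration of how these fields could be used, the sketch below reads the `popularity` value out of each entry and keeps the highest-scoring ones. It assumes each entry is a dict with a `package` object and a `score` object shaped like the fragment above; the function names are hypothetical, and this is not necessarily how the plots in this thread were produced.

```python
def popularity(entry):
    """Return the npm "popularity" score of an entry, or 0.0 if it is absent."""
    return entry.get("score", {}).get("detail", {}).get("popularity", 0.0)


def top_by_popularity(entries, n=100):
    """Return the `n` entries with the highest "popularity" score, best first."""
    return sorted(entries, key=popularity, reverse=True)[:n]
```

Since the score is opaque, a ranking like this only says which packages npm currently considers popular, not how many users each one has.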

> Another thing that might be interesting would be to weight each extension by number of downloads...

Looking at it now, I'm also concerned that the "popularity" of a widget on npm is directly related to whether it supports the latest lab version, and not to whether users actually like it.

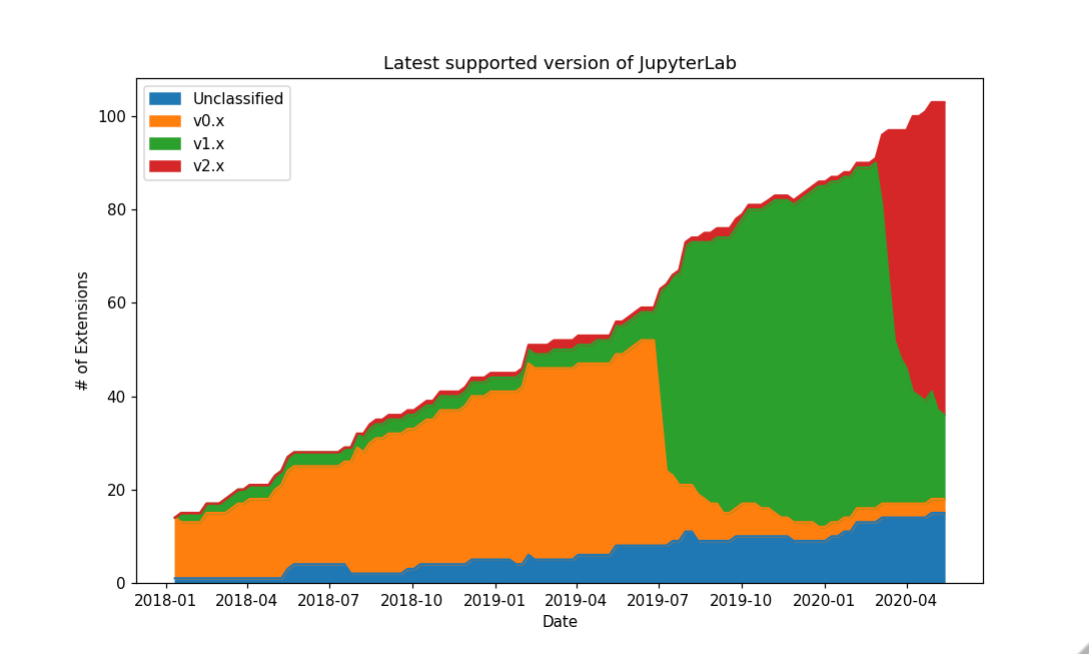

Here is the plot filtered to the ~100 extensions that are currently most "popular":

@vidartf this is an awesome analysis, I think it's really great.

Perhaps this can be a good rubric for reaching out to other extension authors and providing guidance (and nudges) on upgrading their extensions. Maybe an issue with some boilerplate language, helpful links, etc. could be opened each month in, say, the repositories of the top 10 extensions that haven't upgraded.

Great graphs! It might be interesting to see how the @jupyterlab and @jupyter extensions compare to third-party extensions.

@choldgraf I think that users already start nudging authors quickly once a new (incompatible) version of lab is released. What if this idea of opening issues was more pre-emptive (before the release) and framed as a "beta-test invitation"? Something along the lines of "Your extension is quite popular among users, and we would like to invite you to our beta tests so you can try to upgrade it before we release and share your expertise". This might also help in forming the porting guidelines (great work was done on those for 2.0 recently).

Can you filter out the many example jupyterlab_xkcd and jupyterlab_apod extensions? Or perhaps filter out extensions that have less than a certain number of downloads per week.

@krassowski - agreed, though I think there's both a push and a pull. The push may come from users but the pull can come from the JupyterLab community - e.g., providing links to upgrade documentation, common gotchas, etc. Or even for really crucial Lab plugins, offers to help with spot-checking PRs.

> Can you filter out the many example jupyterlab_xkcd and jupyterlab_apod extensions?

I don't know, is there a common way to identify them from the metadata?

> Or perhaps filter out extensions that have less than a certain number of downloads per week.

As mentioned above, any extension that only supports 0.35 will at this point probably not have a lot of downloads per week, but it might have been a very popular extension when 0.35 was the latest version. The "popularity" score of npm is also rather opaque, so it might be hard to select a natural cut-off.

> Can you filter out the many example jupyterlab_xkcd and jupyterlab_apod extensions?

Also: note that this is using data about extensions published to npm, not repos on GitHub. I don't know if it is common for users following the tutorials to publish their example clones?

I'd say it is a fairly reasonable outcome for folks to publish an example just to try out the mechanism, and the number of published examples bears that out ;).

Sure, but it still leaves us with the question: Is there some way for us to detect it, or some other criteria we can enforce to separate 1) clear example clones from 2) an extension that started as an example clone but has now been changed into a "proper" extension?

Not right now, we'd have to add another tag or some other filter criteria. In the meantime, I'd say it is fair to at least strip out xkcd and apod as known false positives.
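Stripping those two out could be as simple as a name check. The sketch below assumes each entry has a `package.name` field, as in npm search results, and treats any package whose name contains `xkcd` or `apod` as an example clone; the helper names are hypothetical.

```python
# Substrings identifying the tutorial example extensions named in this thread.
KNOWN_EXAMPLE_MARKERS = ("xkcd", "apod")


def is_known_example(name):
    """Return True if a package name looks like an xkcd or apod example clone."""
    lowered = name.lower()
    return any(marker in lowered for marker in KNOWN_EXAMPLE_MARKERS)


def strip_known_examples(entries):
    """Drop entries whose package name matches a known example marker."""
    return [e for e in entries if not is_known_example(e["package"]["name"])]
```

A name check like this cannot tell a plain clone from an extension that started as a clone and kept its name, so it will drop both.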