jetty.project

jetty.project copied to clipboard

jetty.project copied to clipboard

Intermittently occuring unconsumed input

Jetty version(s) We recently updated our apache.karaf from 4.2.9 to 4.2.15 Karaf (4.2.9) was using 9.4.28.v20200408 and with this Version everything was fine. Karaf (4.2.15) is now using 9.4.43.v20210629 and with this Version we have problems.

Java version/vendor (use: java -version)

1.8.0_181-b13

OS type/version linux

Description After we updated several of our integration tests failed with the following Stacktrace:

org.springframework.web.client.ResourceAccessException: I/O error on PUT request for "http://localhost:XXXX/bla/blubbs": Unexpected end of file from server; nested exception is java.net.SocketException: Unexpected end of file from server

at org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:785)

at org.springframework.web.client.RestTemplate.execute(RestTemplate.java:711)

at org.springframework.web.client.RestTemplate.exchange(RestTemplate.java:602)

at

Caused by: java.net.SocketException: Unexpected end of file from server

at sun.net.www.http.HttpClient.parseHTTPHeader(HttpClient.java:851)

at sun.net.www.http.HttpClient.parseHTTP(HttpClient.java:678)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(HttpURLConnection.java:1587)

at sun.net.www.protocol.http.HttpURLConnection.getInputStream(HttpURLConnection.java:1492)

at java.net.HttpURLConnection.getResponseCode(HttpURLConnection.java:480)

at org.springframework.http.client.SimpleClientHttpResponse.getRawStatusCode(SimpleClientHttpResponse.java:55)

at org.springframework.web.client.DefaultResponseErrorHandler.hasError(DefaultResponseErrorHandler.java:61)

at org.springframework.web.client.RestTemplate.handleResponse(RestTemplate.java:807)

at org.springframework.web.client.RestTemplate.doExecute(RestTemplate.java:777)

... 35 more

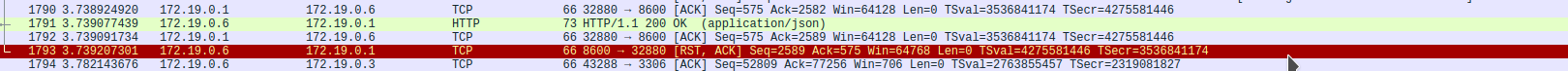

After some debuging we ended up with this wireshark findings:

We saw that Jetty was [RST, ACK] our connection.

We saw that Jetty was [RST, ACK] our connection.

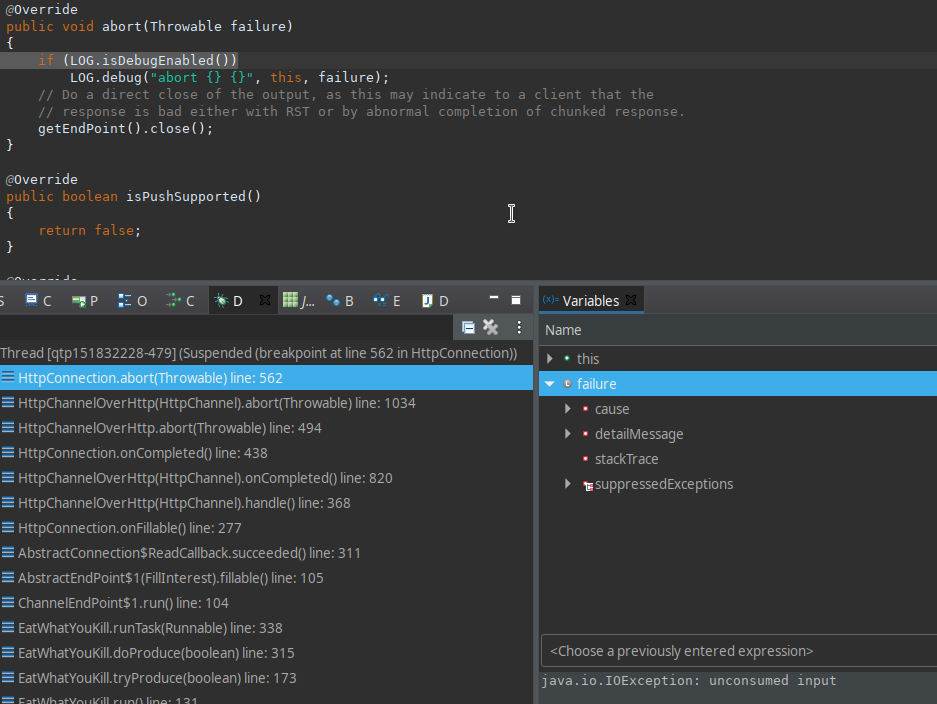

After a short debug session we found the lines that are closing our connection.

So we tried to find the lines that were throwing this exception and found this EARLY_EOF:

If i run the integration tests with log level INFO the error almost always occures, but if i want to see more and set the log level to DEBUG i can't reproduce the exception even if i loop around the whole test and run it for minutes.

How to reproduce? I am not aware of doing anything special.

- post request (very small body) creating an object

- get request trying to find created object

- put request (very small body) updating object

- put request (very small body) updating other stuff on object

- put request (very small body) updating other stuff on object

Sometimes i can't send request 4 and sometimes a cant send request 5, because of the IOError. If i switch my client to httpKeepAlive = false and open a new connection on every request everything works fine.

I will try to provide more infos if needed.

1.8.0_181-b13

That version of Java 1.8 expired on November 16, 2018 and should not be used in production. Mind the expiration dates in the release notes on Java releases, they are important, especially so with anything networking related, doubly so if the networking has SSL/TLS involved.

See https://www.oracle.com/java/technologies/javase/8u181-relnotes.html

What does your server side component do for each of those 5 steps?

I knocked out a quick testcase to attempt to replicate.

https://github.com/eclipse/jetty.project/blob/jetty-9.4.x-7448-httpurlconnection-unconsume-input/jetty-servlet/src/test/java/org/eclipse/jetty/servlet/HttpUrlConnectionUnconsumedInputTest.java

This does not fail, even with 10 PUT requests. Tested on OpenJDK 1.8.0_322, 11.0.12+7, and 17.0.1+12

I suspect you either have a bug in your client, or your server side component that is either not reading (or writing) the entire body content that your headers (request and response both) are reporting (a common bug that causes a break in the http/1.1 connection persistence model)

Thanks for the quick reply @joakime. I found this discussion (https://github.com/eclipse/jetty.project/issues/2867#issuecomment-448004375) where gregw mentions this filter to test if all the stream are read until the end.

I plugged it into our application and started our tests. The filter shows no errors in the log file, but the 'unconsumend input' connection resets still appear. Is there something else i could try?

public void doFilter(ServletRequest request, ServletResponse response, FilterChain chain) throws IOException, ServletException {

chain.doFilter(request, response);

String url = ((HttpServletRequest) request).getRequestURL().toString();

LOGGER.info("StreamReadingFilter reading: " + url);

try {

InputStream in;

try {

in = request.getInputStream();

} catch (Throwable x) {

Reader reader = request.getReader();

int character = reader.read();

LOGGER.info("Reader: " + character);

while (character >= 0) {

LOGGER.error("######### (" + character + ")");

character = reader.read();

}

return;

}

byte[] buffer = new byte[1000];

int read = in.read(buffer);

LOGGER.info("Read: " + read);

while (read >= 0) {

LOGGER.error("######### (" + read + ")");

read = in.read(buffer);

}

} catch (Exception e) {

LOGGER.error(e.getMessage());

throw e;

}

}

Regards, Matthias

It's quite an anti-pattern for a Filter to read body content.

You essentially break the Servlet endpoint, and even prevent behaviors with HttpServletRequest.getParameter() and HttpServletRequest.getPath() from functioning properly.

You also break Error handling if the error dispatch goes through this Filter too.

Why are you doing that?

As written above this was just a test to ensure that the application always reads the whole content.

Regards, Matthias

The error and stacktrace in your report is about the client reporting an early EOF on the response. What is your response doing?

Use the wireshark capture you have, and verify the response body content length behaviors.

IE: if the response has a Content-Length header, did wireshark show that the same number of BYTES were sent? (I suspect you'll see that less bytes were sent in the body then was reported in the response Content-Length header.)

If the response has Transfer-Encoding: chunked, is the final chunk sent? (If this is the case, we need details on everything, the request, response, etc)

As written above this was just a test to ensure that the application always reads the whole content.

You seem to be using REST. Most server side REST libraries have a configuration to ensure that the body content is fully read. These configurations should be used instead of the Filter hack, as they also inform the REST library of bad behaviors of the client. Such as a client sending 2 or more document bodies, or including extra non-whitespace content before or after the document. (this can be accidental, or even malicious, it's good for your REST library to handle this) (A REST document is commonly a JSON in REST nowadays, but you'll still find people using REST with xml, so I call it a REST document)

Thanks @joakime youre help is much appriciated. We cant find any suspicious looking request or response in our wireshark capture. We tried to enforce the reading with DeserializationFeature.FAIL_ON_TRAILING_TOKENS in our Jackson mapper, but that didn't raise an exception.

I will try to reproduce the behaviour in a smaller project that i can share on github.

Regards Matthias

@matthkoch you can take that test case in the branch here and modify it to fit the request/response body sizes you are using, perhaps that might trip over something useful. If you are using SSL, that's important to know. If you are using GZIP, that's also important to know.

This issue has been automatically marked as stale because it has been a full year without activity. It will be closed if no further activity occurs. Thank you for your contributions.

This issue has been closed due to it having no activity.