vscode-dvc

vscode-dvc copied to clipboard

vscode-dvc copied to clipboard

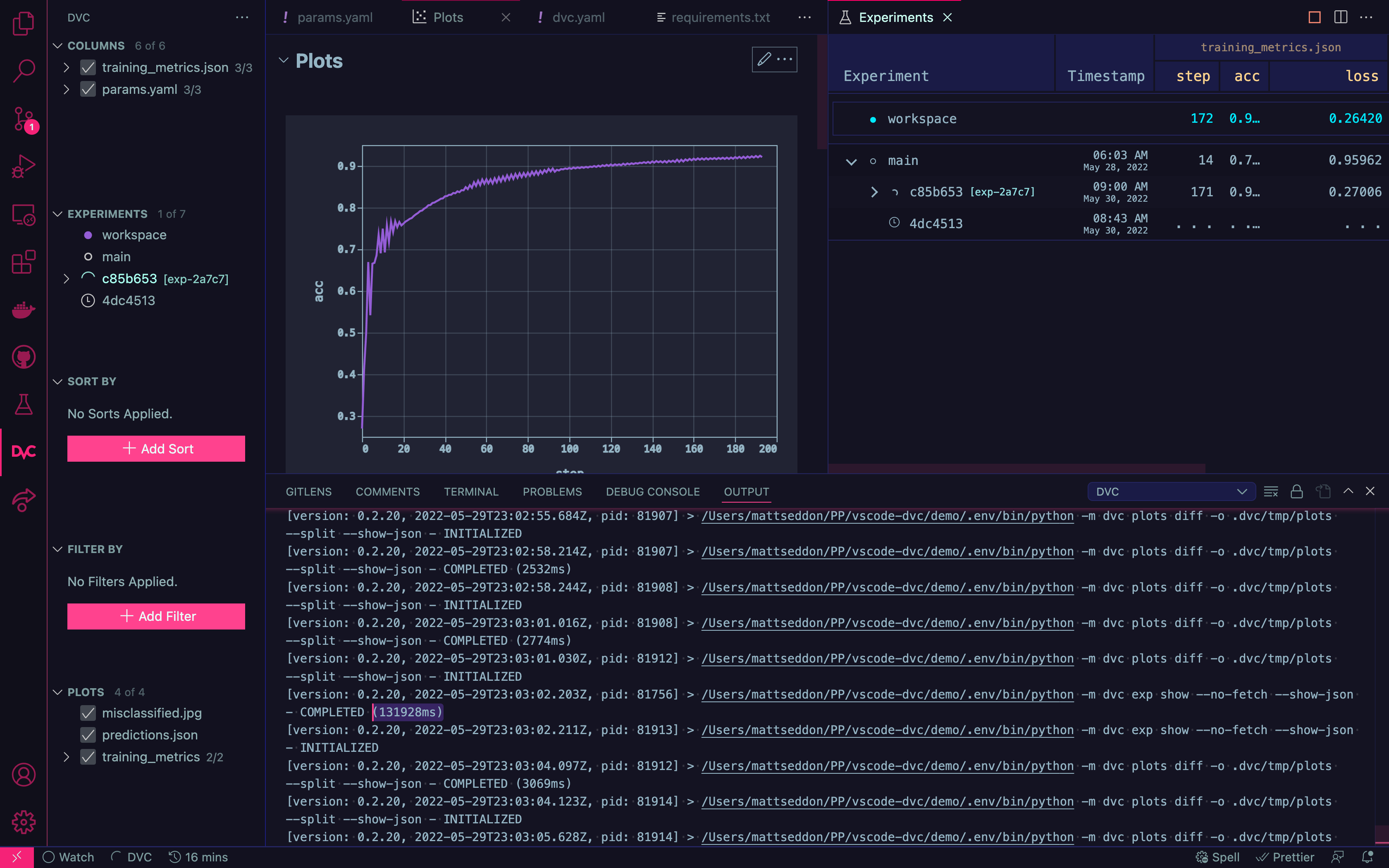

`exp show`: performance issues

When running a checkpoint based experiment with 200 epochs (in the workspace) exp show --no-fetch --show-json can take ~2m to return.

The command seemed to max out at 138696ms run time. Run time drops after the experiment run has been completed to ~1m.

Repository: demo project in this repo.

Note: This is a toy project with only a handful of params/metrics/deps/outs. I suspect the performance would be worse for a real project.

Related to #828 and https://github.com/iterative/dvc/issues/7693

@mattseddon I'm able to confirm on the latest dev branch (and looks like it started in https://github.com/iterative/dvc/pull/7724). 2.10.2 still works fast for me. Can you confirm the same?

I can recreate this performance with 2.10.2. I only have to run a checkpoint experiment with ~50 epochs and the time for exp show to return blows out to ~30s. This time steadily increases as the number of completed checkpoints grows.

At around 100 epochs the time to return is ~50 seconds. At 150 epochs the time to return is ~90 seconds.

This was the return time for exp show started at 171:

❯ dvc doctor

DVC version: 2.10.2 (pip)

---------------------------------

Platform: Python 3.9.9 on macOS-12.4-x86_64-i386-64bit

Supports:

webhdfs (fsspec = 2022.3.0),

http (aiohttp = 3.8.1, aiohttp-retry = 2.4.6),

https (aiohttp = 3.8.1, aiohttp-retry = 2.4.6),

s3 (s3fs = 2022.3.0, boto3 = 1.21.21)

Cache types: reflink, hardlink, symlink

Cache directory: apfs on /dev/disk1s5s1

Caches: local

Remotes: s3

Workspace directory: apfs on /dev/disk1s5s1

Repo: dvc (subdir), git

LMK if you need anything else from me.

Thanks,

That's interesting. Here's what I get using the vscode demo with 150 checkpoints:

$ time dvc exp show --no-fetch --show-json

...

dvc exp show --no-fetch --show-json 2.43s user 0.26s system 84% cpu 3.171 total

$ time dvc exp show --no-pager

───────────────────────────────────────────────────────────────────────────────────────

Experiment Created step loss lr … data/MNIST …

───────────────────────────────────────────────────────────────────────────────────────

workspace - 149 0.27006 0.003 0 0aed307 …

main May 29, 2022 14 0.95962 0.003 0 0aed307 …

│ ╓ 5637b85 [exp-4469f] 03:14 PM 149 0.27006 0.003 0 0aed307 …

│ ╟ 9007c9a 03:14 PM 148 0.2713 0.003 0 0aed307 …

│ ╟ 3808fd6 03:14 PM 147 0.27167 0.003 0 0aed307 …

│ ╟ 1545709 03:14 PM 146 0.27324 0.003 0 0aed307 …

│ ╟ a6ca8ac 03:14 PM 145 0.27345 0.003 0 0aed307 …

│ ╟ 0c2c6c8 03:13 PM 144 0.27498 0.003 0 0aed307 …

│ ╟ 0139f4d 03:13 PM 143 0.27595 0.003 0 0aed307 …

│ ╟ 41fa37f 03:13 PM 142 0.27681 0.003 0 0aed307 …

│ ╟ 0a4e1a8 03:13 PM 141 0.27782 0.003 0 0aed307 …

│ ╟ c4d8076 03:13 PM 140 0.27877 0.003 0 0aed307 …

│ ╟ 8149da5 03:13 PM 139 0.27984 0.003 0 0aed307 …

│ ╟ a91ef6a 03:13 PM 138 0.28048 0.003 0 0aed307 …

│ ╟ 752dd2d 03:13 PM 137 0.28151 0.003 0 0aed307 …

│ ╟ b707159 03:13 PM 136 0.28216 0.003 0 0aed307 …

│ ╟ 2809d6c 03:13 PM 135 0.28337 0.003 0 0aed307 …

│ ╟ 2636c4d 03:13 PM 134 0.28416 0.003 0 0aed307 …

│ ╟ 8746e6d 03:13 PM 133 0.28495 0.003 0 0aed307 …

│ ╟ 974de1c 03:13 PM 132 0.28616 0.003 0 0aed307 …

│ ╟ 9ed4760 03:13 PM 131 0.28719 0.003 0 0aed307 …

│ ╟ 686e55c 03:13 PM 130 0.28817 0.003 0 0aed307 …

│ ╟ 14837f4 03:13 PM 129 0.28901 0.003 0 0aed307 …

│ ╟ 110a12b 03:13 PM 128 0.29026 0.003 0 0aed307 …

│ ╟ 4afca5a 03:13 PM 127 0.29124 0.003 0 0aed307 …

│ ╟ b76a8f7 03:13 PM 126 0.29225 0.003 0 0aed307 …

│ ╟ 781234c 03:13 PM 125 0.29303 0.003 0 0aed307 …

│ ╟ b1f9647 03:13 PM 124 0.29442 0.003 0 0aed307 …

│ ╟ 363f953 03:13 PM 123 0.29546 0.003 0 0aed307 …

│ ╟ 7726215 03:13 PM 122 0.29647 0.003 0 0aed307 …

│ ╟ c343594 03:13 PM 121 0.2976 0.003 0 0aed307 …

│ ╟ 65c8265 03:13 PM 120 0.29883 0.003 0 0aed307 …

│ ╟ 936249b 03:13 PM 119 0.29947 0.003 0 0aed307 …

│ ╟ f79ca52 03:13 PM 118 0.30101 0.003 0 0aed307 …

│ ╟ 423cc18 03:13 PM 117 0.30181 0.003 0 0aed307 …

│ ╟ a59a50a 03:13 PM 116 0.30336 0.003 0 0aed307 …

│ ╟ 366ffd9 03:13 PM 115 0.30434 0.003 0 0aed307 …

│ ╟ e3e7b59 03:13 PM 114 0.30585 0.003 0 0aed307 …

│ ╟ 9af0aae 03:13 PM 113 0.30691 0.003 0 0aed307 …

│ ╟ 18952a0 03:13 PM 112 0.30833 0.003 0 0aed307 …

│ ╟ ae4d4ed 03:13 PM 111 0.30938 0.003 0 0aed307 …

│ ╟ 39aa03a 03:13 PM 110 0.31058 0.003 0 0aed307 …

│ ╟ ace9cf0 03:13 PM 109 0.31164 0.003 0 0aed307 …

│ ╟ e3c44ff 03:13 PM 108 0.31314 0.003 0 0aed307 …

│ ╟ a0d7935 03:13 PM 107 0.3144 0.003 0 0aed307 …

│ ╟ ea2062d 03:13 PM 106 0.31563 0.003 0 0aed307 …

│ ╟ 1f08888 03:13 PM 105 0.31676 0.003 0 0aed307 …

│ ╟ a4872f7 03:13 PM 104 0.31818 0.003 0 0aed307 …

│ ╟ 9572f1f 03:13 PM 103 0.31912 0.003 0 0aed307 …

│ ╟ 30d1580 03:13 PM 102 0.32096 0.003 0 0aed307 …

│ ╟ 76f8bc9 03:13 PM 101 0.3217 0.003 0 0aed307 …

│ ╟ 34d6627 03:12 PM 100 0.32365 0.003 0 0aed307 …

│ ╟ 9e0e6d8 03:12 PM 99 0.32437 0.003 0 0aed307 …

│ ╟ e1921a7 03:12 PM 98 0.32641 0.003 0 0aed307 …

│ ╟ 8f71caf 03:12 PM 97 0.32697 0.003 0 0aed307 …

│ ╟ c632752 03:12 PM 96 0.32921 0.003 0 0aed307 …

│ ╟ 32eeb59 03:12 PM 95 0.32961 0.003 0 0aed307 …

│ ╟ 49d036c 03:12 PM 94 0.33224 0.003 0 0aed307 …

│ ╟ 2a1ce72 03:12 PM 93 0.33278 0.003 0 0aed307 …

│ ╟ 5619d93 03:12 PM 92 0.33503 0.003 0 0aed307 …

│ ╟ 76411b1 03:12 PM 91 0.33512 0.003 0 0aed307 …

│ ╟ c2a6c5c 03:12 PM 90 0.33842 0.003 0 0aed307 …

│ ╟ bf8278b 03:12 PM 89 0.33781 0.003 0 0aed307 …

│ ╟ c2faf92 03:12 PM 88 0.34174 0.003 0 0aed307 …

│ ╟ 3b896a7 03:12 PM 87 0.34074 0.003 0 0aed307 …

│ ╟ c0ea163 03:12 PM 86 0.3451 0.003 0 0aed307 …

│ ╟ 7289b7c 03:12 PM 85 0.34303 0.003 0 0aed307 …

│ ╟ b35ebff 03:12 PM 84 0.34844 0.003 0 0aed307 …

│ ╟ 5b42cd1 03:12 PM 83 0.34631 0.003 0 0aed307 …

│ ╟ 06f7ed0 03:12 PM 82 0.352 0.003 0 0aed307 …

│ ╟ dd4a7ad 03:12 PM 81 0.34936 0.003 0 0aed307 …

│ ╟ f1178a3 03:12 PM 80 0.35562 0.003 0 0aed307 …

│ ╟ 178665f 03:12 PM 79 0.35235 0.003 0 0aed307 …

│ ╟ ca1d504 03:12 PM 78 0.35979 0.003 0 0aed307 …

│ ╟ 603f0d7 03:12 PM 77 0.35545 0.003 0 0aed307 …

│ ╟ 26c7c7b 03:12 PM 76 0.36377 0.003 0 0aed307 …

│ ╟ a3e052f 03:12 PM 75 0.35923 0.003 0 0aed307 …

│ ╟ eb23cde 03:12 PM 74 0.36785 0.003 0 0aed307 …

│ ╟ 436b4b3 03:12 PM 73 0.36314 0.003 0 0aed307 …

│ ╟ 5fcb7be 03:12 PM 72 0.37247 0.003 0 0aed307 …

│ ╟ 4bbf1c3 03:12 PM 71 0.36704 0.003 0 0aed307 …

│ ╟ ae1e5cc 03:12 PM 70 0.37731 0.003 0 0aed307 …

│ ╟ e70e0a2 03:12 PM 69 0.37113 0.003 0 0aed307 …

│ ╟ 06df04a 03:12 PM 68 0.38241 0.003 0 0aed307 …

│ ╟ 6423dd9 03:12 PM 67 0.3753 0.003 0 0aed307 …

│ ╟ ae8a80c 03:12 PM 66 0.38792 0.003 0 0aed307 …

│ ╟ 8f37b7d 03:12 PM 65 0.37913 0.003 0 0aed307 …

│ ╟ 4d2cfe7 03:12 PM 64 0.39383 0.003 0 0aed307 …

│ ╟ 58396e9 03:12 PM 63 0.38411 0.003 0 0aed307 …

│ ╟ b53dd63 03:12 PM 62 0.40055 0.003 0 0aed307 …

│ ╟ 39437b5 03:12 PM 61 0.38832 0.003 0 0aed307 …

│ ╟ a27f2f3 03:12 PM 60 0.39631 0.003 0 0aed307 …

│ ╟ cb9f380 03:12 PM 59 0.39274 0.003 0 0aed307 …

│ ╟ 3d2f389 03:12 PM 58 0.39905 0.003 0 0aed307 …

│ ╟ cd53a2c 03:12 PM 57 0.39987 0.003 0 0aed307 …

│ ╟ a36a8c6 03:12 PM 56 0.40121 0.003 0 0aed307 …

│ ╟ d2712e9 03:11 PM 55 0.4058 0.003 0 0aed307 …

│ ╟ eab01ab 03:11 PM 54 0.40597 0.003 0 0aed307 …

│ ╟ 776d5cf 03:11 PM 53 0.41351 0.003 0 0aed307 …

│ ╟ 6a63ae0 03:11 PM 52 0.40997 0.003 0 0aed307 …

│ ╟ 98a6989 03:11 PM 51 0.42015 0.003 0 0aed307 …

│ ╟ dce0585 (481ce5b) 03:11 PM 50 0.41636 0.003 0 0aed307 …

│ ╓ 481ce5b [exp-0d67a] 03:10 PM 49 0.42814 0.003 0 0aed307 …

│ ╟ b781e71 03:10 PM 48 0.42314 0.003 0 0aed307 …

│ ╟ f69e705 03:10 PM 47 0.43617 0.003 0 0aed307 …

│ ╟ 1ebedbe 03:10 PM 46 0.43151 0.003 0 0aed307 …

│ ╟ 77beba1 03:10 PM 45 0.44394 0.003 0 0aed307 …

│ ╟ 4cac879 03:09 PM 44 0.44079 0.003 0 0aed307 …

│ ╟ f693d35 03:09 PM 43 0.45266 0.003 0 0aed307 …

│ ╟ 6346a79 03:09 PM 42 0.45039 0.003 0 0aed307 …

│ ╟ 4ab7f91 03:09 PM 41 0.46178 0.003 0 0aed307 …

│ ╟ 1470ca1 03:09 PM 40 0.45995 0.003 0 0aed307 …

│ ╟ 8c6c3ff 03:09 PM 39 0.47107 0.003 0 0aed307 …

│ ╟ efc0701 03:09 PM 38 0.46972 0.003 0 0aed307 …

│ ╟ 57c76ef 03:09 PM 37 0.48044 0.003 0 0aed307 …

│ ╟ 4a6663a 03:09 PM 36 0.47956 0.003 0 0aed307 …

│ ╟ 4e895fe 03:09 PM 35 0.49051 0.003 0 0aed307 …

│ ╟ 46dcd18 03:09 PM 34 0.48994 0.003 0 0aed307 …

│ ╟ 27049be 03:09 PM 33 0.50066 0.003 0 0aed307 …

│ ╟ f293967 03:09 PM 32 0.50073 0.003 0 0aed307 …

│ ╟ b9fed46 03:09 PM 31 0.51183 0.003 0 0aed307 …

│ ╟ 509faf1 (4ed61ea) 03:09 PM 30 0.51262 0.003 0 0aed307 …

│ ╓ 4ed61ea [exp-28b70] 03:09 PM 29 0.52383 0.003 0 0aed307 …

│ ╟ 85980f6 03:09 PM 28 0.52544 0.003 0 0aed307 …

│ ╟ 5722747 03:09 PM 27 0.53738 0.003 0 0aed307 …

│ ╟ d3c240c 03:09 PM 26 0.54014 0.003 0 0aed307 …

│ ╟ 411131b 03:09 PM 25 0.55106 0.003 0 0aed307 …

│ ╟ 1088f46 03:09 PM 24 0.55666 0.003 0 0aed307 …

│ ╟ 0053b29 03:09 PM 23 0.56516 0.003 0 0aed307 …

│ ╟ b4afeba 03:09 PM 22 0.5756 0.003 0 0aed307 …

│ ╟ 3e331cc 03:09 PM 21 0.58187 0.003 0 0aed307 …

│ ╟ bb22a4a 03:09 PM 20 0.59621 0.003 0 0aed307 …

│ ╟ e3fbd7a 03:09 PM 19 0.60074 0.003 0 0aed307 …

│ ╟ 785aa2b 03:09 PM 18 0.61718 0.003 0 0aed307 …

│ ╟ 82e1be0 03:09 PM 17 0.62095 0.003 0 0aed307 …

│ ╟ 48ffb81 03:09 PM 16 0.64096 0.003 0 0aed307 …

│ ╟ a2a12f3 03:09 PM 15 0.6395 0.003 0 0aed307 …

│ ╟ 7c14adc 03:08 PM 14 0.66219 0.003 0 0aed307 …

│ ╟ f4bd0ba 03:08 PM 13 0.66191 0.003 0 0aed307 …

│ ╟ 06b05f5 03:08 PM 12 0.69235 0.003 0 0aed307 …

│ ╟ 9b8edb6 03:08 PM 11 0.69175 0.003 0 0aed307 …

│ ╟ 11c6725 03:08 PM 10 0.72476 0.003 0 0aed307 …

│ ╟ 1094b32 03:08 PM 9 0.7269 0.003 0 0aed307 …

│ ╟ b4c3ec2 03:08 PM 8 0.76173 0.003 0 0aed307 …

│ ╟ 57545a0 03:08 PM 7 0.76877 0.003 0 0aed307 …

│ ╟ 3103ea0 03:08 PM 6 0.79497 0.003 0 0aed307 …

│ ╟ 5edfe64 03:08 PM 5 0.80261 0.003 0 0aed307 …

│ ╟ 0dfec3f 03:08 PM 4 0.83646 0.003 0 0aed307 …

│ ╟ 4df8065 03:08 PM 3 0.84026 0.003 0 0aed307 …

│ ╟ c977b3a 03:08 PM 2 0.88872 0.003 0 0aed307 …

│ ╟ eb9f42c 03:08 PM 1 0.89464 0.003 0 0aed307 …

├─╨ 2ea95b2 03:08 PM 0 0.95559 0.003 0 0aed307 …

───────────────────────────────────────────────────────────────────────────────────────

dvc exp show --no-pager 2.28s user 0.24s system 88% cpu 2.832 total

$ dvc doctor

DVC version: 2.10.2

---------------------------------

Platform: Python 3.10.2 on macOS-12.3.1-arm64-arm-64bit

Supports:

azure (adlfs = 2022.4.0, knack = 0.9.0, azure-identity = 1.7.1),

gdrive (pydrive2 = 1.10.1),

gs (gcsfs = 2022.5.0),

hdfs (fsspec = 2022.5.0, pyarrow = 7.0.0),

webhdfs (fsspec = 2022.5.0),

http (aiohttp = 3.8.1, aiohttp-retry = 2.4.5),

https (aiohttp = 3.8.1, aiohttp-retry = 2.4.5),

s3 (s3fs = 2022.5.0, boto3 = 1.21.21),

ssh (sshfs = 2022.3.1),

oss (ossfs = 2021.8.0),

webdav (webdav4 = 0.9.4),

webdavs (webdav4 = 0.9.4)

Cache types: reflink, hardlink, symlink

Cache directory: apfs on /dev/disk3s1s1

Caches: local

Remotes: https

Workspace directory: apfs on /dev/disk3s1s1

Repo: dvc (subdir), git

What do the contents of your project look like? Here's what I have:

$ tree -a -I .dvc

.

├── .dvcignore

├── .gitignore

├── .vscode

│ └── extensions.json

├── data

│ └── MNIST

│ ├── .gitignore

│ ├── raw

│ │ ├── t10k-images-idx3-ubyte

│ │ ├── t10k-images-idx3-ubyte.gz

│ │ ├── t10k-labels-idx1-ubyte

│ │ ├── t10k-labels-idx1-ubyte.gz

│ │ ├── train-images-idx3-ubyte

│ │ ├── train-images-idx3-ubyte.gz

│ │ ├── train-labels-idx1-ubyte

│ │ └── train-labels-idx1-ubyte.gz

│ └── raw.dvc

├── dvc.lock

├── dvc.yaml

├── misclassified.jpg

├── model.pt

├── params.yaml

├── predictions.json

├── requirements.txt

├── train.py

├── training_metrics

│ └── scalars

│ ├── acc.tsv

│ └── loss.tsv

└── training_metrics.json

6 directories, 24 files

@dberenbaum I can recreate with a long-running experiment outside of the VS Code extension context.

Our directories are nearly the same if I exclude the .env directory:

❯ tree -a -I .dvc -I .env

.

├── .dvcignore

├── .gitignore

├── .vscode

│ ├── extensions.json

│ └── settings.json

├── data

│ └── MNIST

│ ├── .gitignore

│ ├── raw

│ │ ├── t10k-images-idx3-ubyte

│ │ ├── t10k-images-idx3-ubyte.gz

│ │ ├── t10k-labels-idx1-ubyte

│ │ ├── t10k-labels-idx1-ubyte.gz

│ │ ├── train-images-idx3-ubyte

│ │ ├── train-images-idx3-ubyte.gz

│ │ ├── train-labels-idx1-ubyte

│ │ └── train-labels-idx1-ubyte.gz

│ └── raw.dvc

├── dvc.lock

├── dvc.yaml

├── misclassified.jpg

├── model.pt

├── params.yaml

├── predictions.json

├── requirements.txt

├── train.py

├── training_metrics

│ └── scalars

│ ├── acc.tsv

│ └── loss.tsv

└── training_metrics.json

6 directories, 25 files

.vscode/settings.json being the only difference.

Could be a hardware issue as my laptop is a few years old now.

After the experiment completes:

❯ time dvc exp show --show-json --no-fetch

...

dvc exp show --show-json --no-fetch 26.77s user 5.32s system 93% cpu 34.441 total

How many checkpoints do you have that's taking 34s? I have tried all kinds of configurations and repos and haven't seen any scenario that takes over 5 seconds.

Our directories are nearly the same if I exclude the

.envdirectory:

I added a .venv with the project requirements (dvc, torch, torchvision) but am not seeing much slowdown. I have also tried adding a lot of untracked files to the repo.

Could be a hardware issue as my laptop is a few years old now.

I doubt it. I tried it on my old laptop and still saw fast performance.

If you have a chance, could you try https://github.com/iterative/dvc/wiki/Debugging,-Profiling-and-Benchmarking-DVC#profiling-dvc and upload results so we could look into what's happening?

@mattseddon We now have the most recent issue (introduced after 2.10.2) fixed (https://github.com/iterative/dvc/issues/7824), so it would probably be more helpful to focus on upstream over 2.10.2. Before doing any other investigation (ignore my comment above), could you please confirm whether you still see >10s for dvc exp show for the dev version of dvc?

@dberenbaum Sorry for the delay in testing this again.

When first trying to run experiments this morning I arrived back at:

❯ dvc exp run

'data/MNIST/raw.dvc' didn't change, skipping

Running stage 'train':

> python train.py

EPOCH: 1 / 200

Updating lock file 'dvc.lock'

ERROR: Error running 'checkpoint' task, 'stage: 'train'' will be aborted - [Errno 24] Too many open files: '/vscode-dvc/.git/objects/pack/pack-49ca96a6a0e893c7642baa988cedf98fdc5b3b58.pack'

Running the following command:

❯ git gc

Enumerating objects: 41936, done.

Counting objects: 100% (41936/41936), done.

Delta compression using up to 16 threads

Compressing objects: 100% (21645/21645), done.

Writing objects: 100% (41936/41936), done.

Total 41936 (delta 30120), reused 26829 (delta 19363), pack-reused 0

Removing duplicate objects: 100% (256/256), done.

Checking connectivity: 54078, done.

And then running an experiment dropped the command run times (during the experiment) to:

@ ~50 checkpoints:

dvc exp show --show-json --no-fetch 2.71s user 0.63s system 110% cpu 3.019 total

@ ~100 checkpoints:

dvc exp show --show-json --no-fetch 4.30s user 0.86s system 93% cpu 5.537 total

@ ~150 checkpoints:

dvc exp show --show-json --no-fetch 5.72s user 1.15s system 89% cpu 7.692 total

@ ~200 checkpoints:

dvc exp show --show-json --no-fetch 7.02s user 1.28s system 86% cpu 9.540 total

So this is my mistake, sorry. IMO we still need to improve on 7.02s but this is way better than what we had.

Now, am I running dvc exp gc wrong (usually with -T and we don't have any tags with experiments against them) or should there be something in the docs to let users know that they need to run git gc periodically or update their config?

@pmrowla Any thoughts on how to handle this?

git gc is normally run automatically when you use regular git CLI commands. I'm assuming that the test repo in this case is only ever being used to run dvc exp commands (and no one is doing any other regular git operations like commit/push/fetch/merge), but I'm not sure that this will really affect typical users

The test repo is actually a sub directory inside this repo.

In that case, we could maybe consider documenting it as a potential troubleshooting method for users experiencing performance issues. But I don't think we should ever be forcing a git gc ourselves (relying on git's automatic gc & the user's git configuration should be preferred here)

Seems like a reasonable starting point. It's a bit unclear from the Git docs when and how often gic gc is run automatically, so hard to tell how much of an issue this is without a lot of testing.

Agreed with @pmrowla we should not be running git gc automatically. People might rely on Git default and being able to find things using reflog, etc.

@pmrowla @mattseddon do you a sense what kind of objects is causing this? is it just amount of exps, or something else? Is there a way to to do something like git optimize? Just curious about the root cause of this. W/o better understanding it's hard to act here.

Closing this. Let's first get to people complaining about this.