peft

TypeError: dispatch_model() got an unexpected keyword argument 'offload_index'

================================

Running the following code in a Kaggle notebook gives me this error:

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
import torch
import torch.nn as nn
import bitsandbytes as bnb
from transformers import AutoTokenizer, AutoConfig, AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained(
    "bigscience/bloom-7b1",
    load_in_8bit=True,
    device_map='auto',
)

tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-7b1")
```

======================================

In the Kaggle notebook it gives me this error:

======================================

```
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
/tmp/ipykernel_40247/570955428.py in

/opt/conda/lib/python3.7/site-packages/transformers/models/auto/auto_factory.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)
    470             model_class = _get_model_class(config, cls._model_mapping)
    471             return model_class.from_pretrained(
--> 472                 pretrained_model_name_or_path, *model_args, config=config, **hub_kwargs, **kwargs
    473             )
    474         raise ValueError(

/opt/conda/lib/python3.7/site-packages/transformers/modeling_utils.py in from_pretrained(cls, pretrained_model_name_or_path, *model_args, **kwargs)
   2695         # Dispatch model with hooks on all devices if necessary
   2696         if device_map is not None:
-> 2697             dispatch_model(model, device_map=device_map, offload_dir=offload_folder, offload_index=offload_index)
   2698
   2699         if output_loading_info:

TypeError: dispatch_model() got an unexpected keyword argument 'offload_index'
```

+1, got the same error when running alpaca lora

Why am I getting this error? The same code works on Colab but not in a Kaggle notebook.

+1 It used to work on Kaggle, I literally changed nothing about the env, and suddenly I discovered it broke today.

So what is the solution? Just leave it?

There's no solution yet. A workaround is to not use device_map="auto" until they fix it, I guess 🤷

Why is device_map="auto" not working with my code?

I asked another question; please give me a response.

I mean I created another issue. I want to use PyTorch 2 for faster training, but I am getting an error.

Hi, can you try upgrading accelerate?

pip install --upgrade accelerate

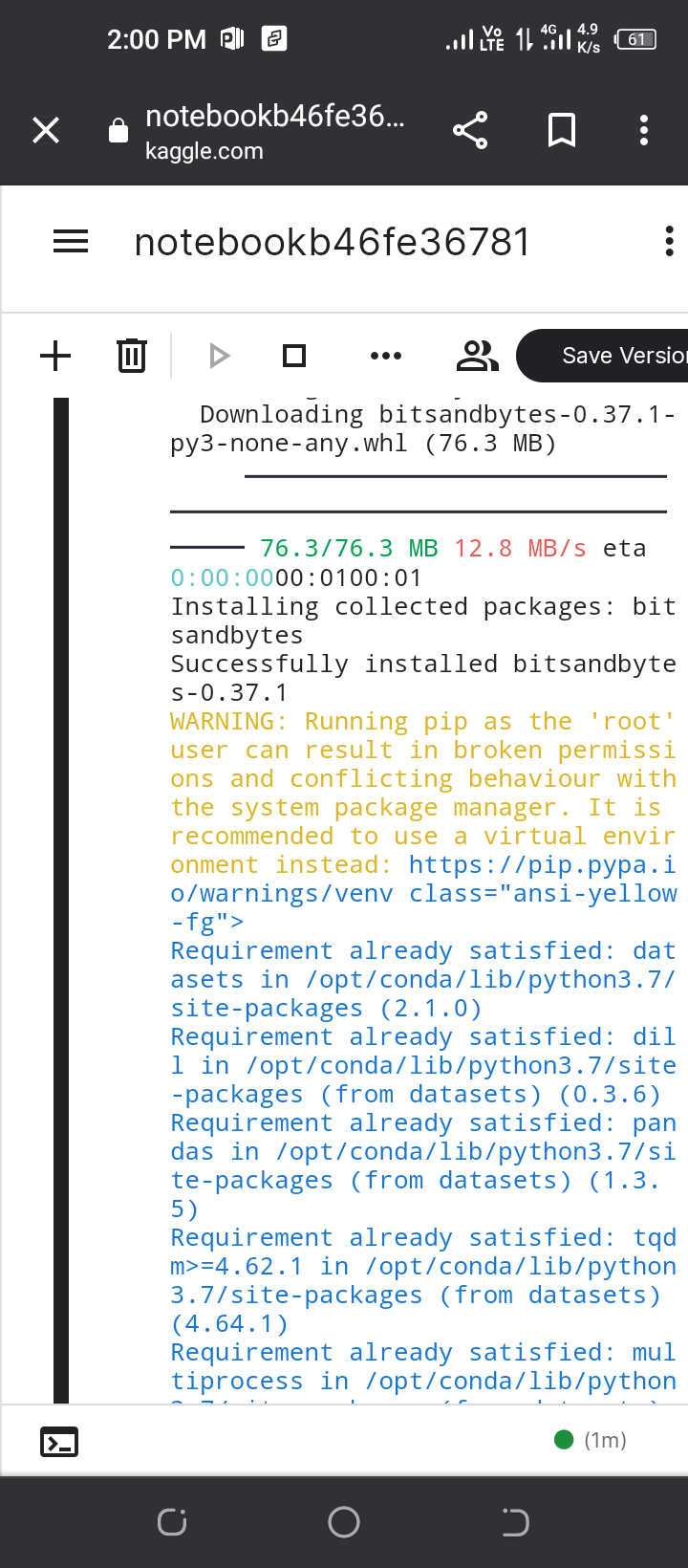

When I install these packages, I get a warning. I will share the warning in a while.

It gives me a warning ⚠️

Can you share a working set of installation commands for Kaggle? For example:

!pip install transformers Datasets

!pip install accelerate lora

etc.

@imrankh46 -- the issue is that your accelerate version is out of date. As @younesbelkada suggested, try something like:

!pip install -U transformers

!pip install -U accelerate
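To confirm that the upgrade took effect, one option is to check whether the installed `dispatch_model` actually accepts the `offload_index` keyword named in the traceback. The sketch below is only a diagnostic; the helper name `accepts_kwarg` is made up for illustration and is not part of accelerate or transformers.

```python
import inspect


def accepts_kwarg(func, name):
    """Return True if `func` can be called with the keyword argument `name`."""
    params = inspect.signature(func).parameters
    keyword_kinds = (
        inspect.Parameter.POSITIONAL_OR_KEYWORD,
        inspect.Parameter.KEYWORD_ONLY,
    )
    if name in params and params[name].kind in keyword_kinds:
        return True
    # A **kwargs catch-all also accepts any keyword.
    return any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values())


try:
    from accelerate import dispatch_model
except ImportError:
    print("accelerate is not installed")
else:
    if accepts_kwarg(dispatch_model, "offload_index"):
        print("dispatch_model accepts offload_index")
    else:
        print("dispatch_model does not accept offload_index; upgrade accelerate")
```

If the second message is printed after upgrading, the kernel is probably still using the old copy of the package.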

that works for me, thank you!

Thanks! Feel free to close the issue

This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Even after upgrading, it shows the same error.

@TheFaheem I agree with you; I ran into the same issue.

Hi @vrunm Can you share a reproducible script?

First disconnect the runtime, then install the following libraries:

!pip install -q -U transformers datasets

!pip install -q -U accelerate

It's not a package problem. Kaggle notebooks suck!

Just restart the kernel and run the package update lines first. It should work fine.
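A likely reason the restart matters: a package that was already imported stays in memory, so upgrading it with pip does not change what the running kernel uses. A rough way to spot this is to compare the version loaded in the kernel with the one installed on disk. This is a sketch assuming Python 3.8+ (on 3.7 the `importlib_metadata` backport offers the same API); `loaded_vs_installed` is a made-up helper name.

```python
import sys
from importlib import metadata


def loaded_vs_installed(package):
    """Return (version loaded in this kernel, version installed on disk)."""
    module = sys.modules.get(package)  # None if not imported yet
    loaded = getattr(module, "__version__", None) if module else None
    try:
        installed = metadata.version(package)
    except metadata.PackageNotFoundError:
        installed = None
    return loaded, installed


loaded, installed = loaded_vs_installed("accelerate")
if loaded is not None and loaded != installed:
    print(f"Kernel holds accelerate {loaded}, disk has {installed}: restart the kernel")
else:
    print(f"accelerate loaded={loaded}, installed={installed}")
```

Running the install lines in the first cell of a fresh kernel avoids the mismatch altogether.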

Awesome thanks for confirming!

@TheFaheem Thanks I got it to work in a kaggle notebook.

@TheFaheem I install these packages first:

!pip install -U -q transformers bitsandbytes accelerate loralib

!pip install -q git+https://github.com/huggingface/peft.git

!pip install accelerate==0.18.0

and then run the model. Only after that do I install any other packages, because if I install other packages first, I still get the same error.

For example, if I first install:

! pip install langchain

! pip install annoy

! pip install sentence_transformers

and then the model packages, I get the error.
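One way to see whether install order changes anything is to print the installed versions after each group of installs and compare them. This is only a diagnostic sketch, assuming Python 3.8+; the thread does not establish that the extra packages alter accelerate, and the package list is simply the names mentioned above.

```python
from importlib import metadata

# Packages named in this thread; adjust the list for your own notebook.
PACKAGES = ["transformers", "accelerate", "bitsandbytes", "peft", "loralib"]


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions(PACKAGES).items():
    print(f"{name:15} {version or 'not installed'}")
```

If the accelerate line differs between the two install orders, that points to a dependency pin; if it is the same, the kernel state is the more likely cause.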