papernotes

papernotes copied to clipboard

papernotes copied to clipboard

Hyperspherical Prototype Networks

trafficstars

Metadata

- Authors: Pascal Mettes, Elise van der Pol, Cees G. M. Snoek

- Organization: University of Amsterdam

- Conference: NeurIPS 2019

- Paper: https://arxiv.org/pdf/1901.10514.pdf

- Code: https://github.com/psmmettes/hpn

Summary

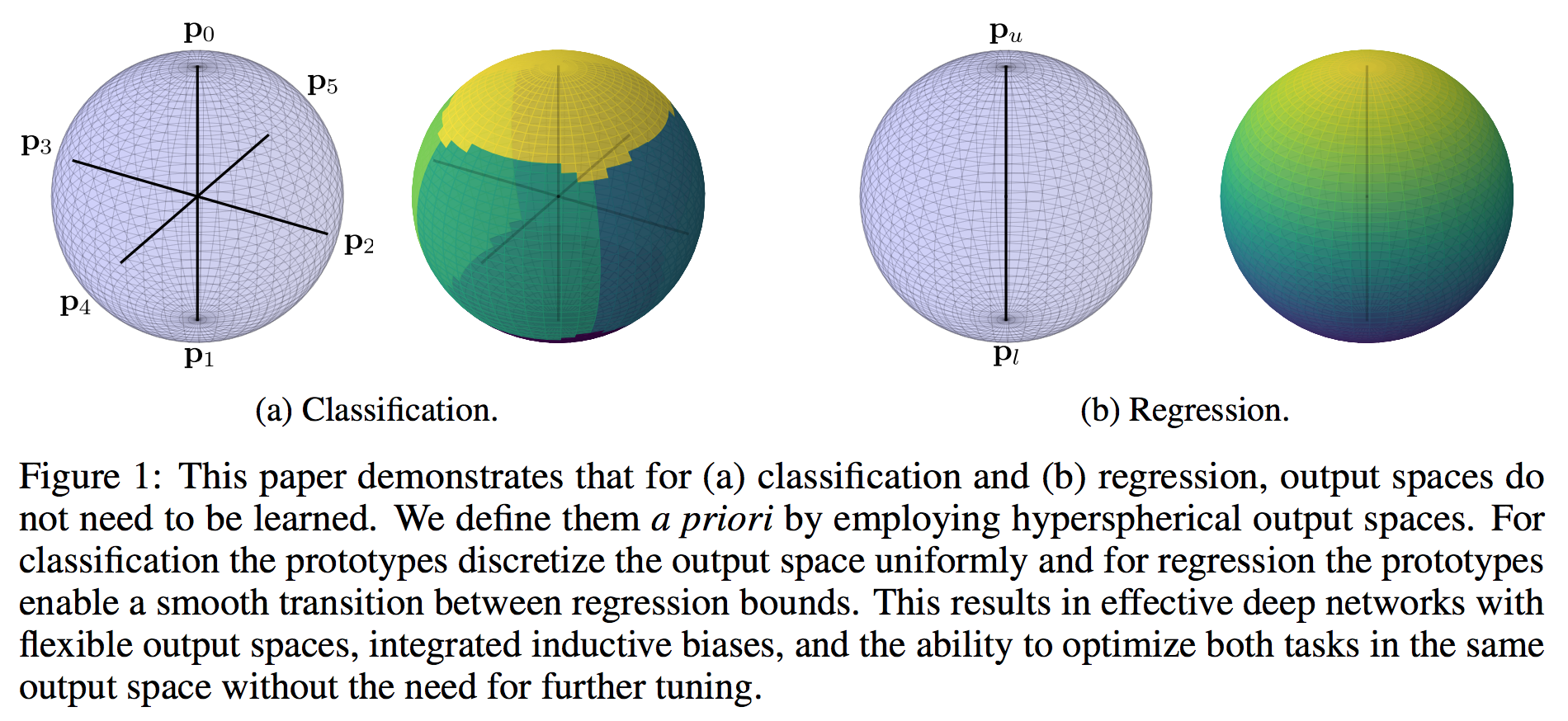

- Motivation: Unify classification and regression via hyperspherical output space with class prototypes defined a priori. The output number is no longer constrained to fixed output size (i.e., #class for classification or single dimension for regression).

Class prototype is a feature vector representing its original class. You can think of word2vec, where word is the original class and its feature vector is its "class prototype".

- Intuition: Class prototypes provide inductive biases for easier optimization and better generalization (i.e.,, large margin separation), instead of just learning to output fixed-size values in a fully parametric manner, ignoring known inductive biases.

- Class prototype definition: Unlike previous work on learning prototypes requires constant re-estimation, this work places prototypes uniformly on hypersphere (following large margin separation principle). By doing this, the prototypes do not need to be inferred from data or changed constantly.

- Class prototype positioning: Uniformly distributing an arbitrary number of prototypes and output dimension is an open mathematical problem (i.e., "Tammes problem"). As an approximation, they design a training loss to learn the position of prototypes on hypersphere.

- Uniformity training loss: Minimize the nearest cosine similarity for each prototype.

- The related work Understanding Contrastive Representation Learning through Alignment and Uniformity on the Hypersphere) defines the uniformity loss as the logarithm of the average pairwise Gaussian potential.

- Prototypes with task-specific semantic information: Use ranking-based loss function that incorporates similarity order instead of direct similarities, to make prototypes align to pretrained word2vec (avoid to learn direct similarities since word vectors did not have uniformity property)

We may also as well incorporate the idea of learning ordinal regression (Soft Labels for Ordinal Regression) and contrastive learning.

- Classification:

- Classification training loss: (1 - cos(training_example_feature, fixed_class_prototype))^2

- Inference: argmax(cosine(training_example, fixed_class_prototype))

- Regression:

- Pick "lower & upper bound" prototypes by cosine similarity = -1; the lower bound and upper bound regression values are typically maximum and minimum regression values in training examples.

- Training using hyperspherical regression loss function.

- Differs from standard regression, which backpropagates losses on one dimensional outputs, this work learns on the line from lower bound prototype to upper bound prototype.

Links to other work

- Follow-up work for learning prototypes: Metric-Guided Prototype Learning

- Model uncertainty for prototypical networks: Uncertainty Estimation Using a Single Deep Deterministic Neural Network

- Using prototypes for regression to me is sort of like distributional quantile regression: Distributional Reinforcement Learning with Quantile Regression and its extension for data uncertainty: Modelling heterogeneous distributions with an Uncountable Mixture of Asymmetric Laplacians.

- Spherical learning: