vagrant_cloud

vagrant_cloud copied to clipboard

vagrant_cloud copied to clipboard

Direct Upload Failures

@chrisroberts Hi Chris, I've used the direct upload interface for a few robox releases, and it has been provided a big performance imporvement.

Unfortunately, I also seem to be running into a bug that is causing some of those uploads to fail. Unfortunately, unlike the failures via the old API, these errors have proven difficult to detect automatically... which means I don't actually realize the upload failed until I try to use the box in question, and the checksum fails. That's because with this issue, the Vagrant cloud thinks it has the box file, and provides Vagrant with a URL, that either errors or returns an empty file.

I managed to add logic to the direct.sh upload script which detects the upload problem and retries it. But that hasn't fixed anything, because the upload simply fails 10 times in a row, and gives up.

I have a test script that can check to ensure all of the boxes have been uploaded (without actually downloading them), but these errors slip past because all the usual error indicators pass.

The last time I ran into an issue like this, i ended up creating Travis-CI jobs to check for broken downloads. But that process was really inefficient, and as the project grew, the bandwidth costs got out of hand. issue. That was 2 years ago. With the size of each release these days, that option is even less viable, so I'm hoping you can help.

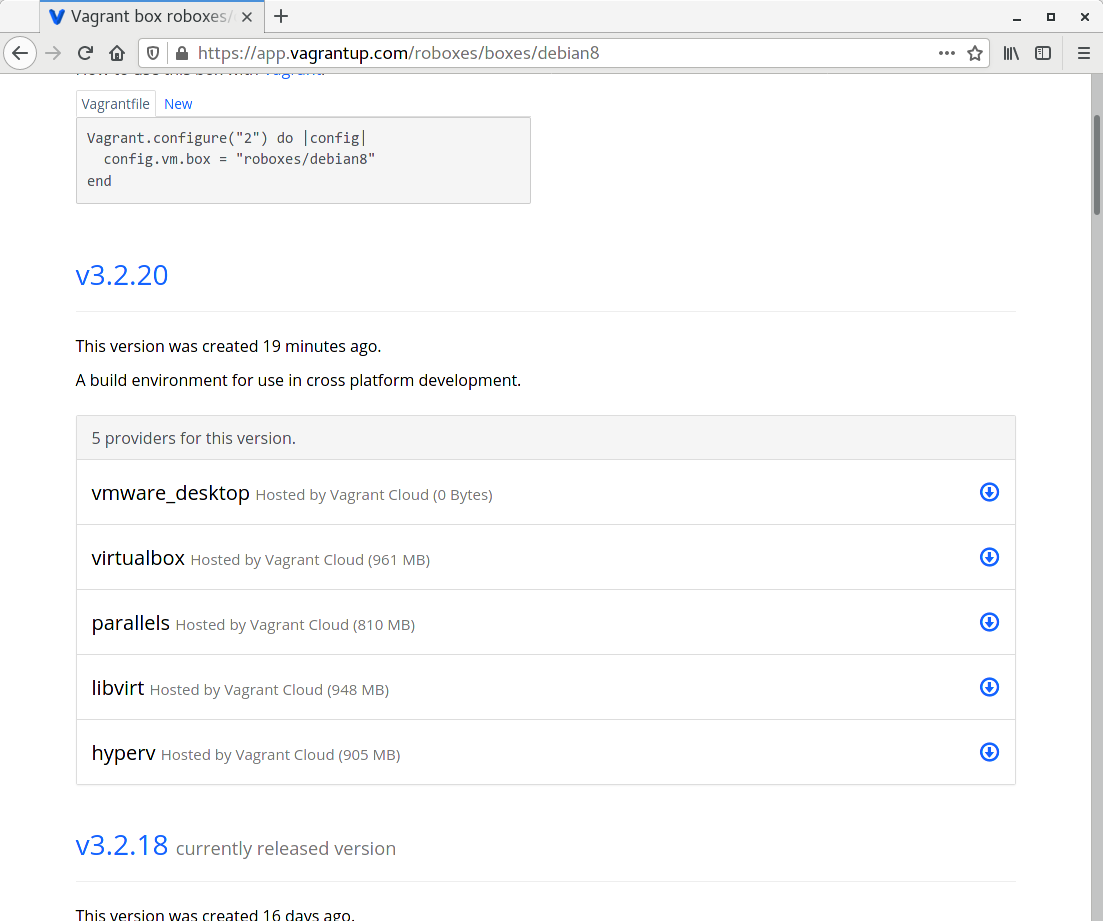

The only indication I've found so far that there was an issue, is by visiting the Vagrant Cloud website. If a problem occurred, I'll see the provider listed, but with a size of 0 bytes. See the screenshot below.

Using the old API, whenever an upload failed, the size would be absent on the website, and Vagrant wouldn't see the provider as being available.

As for the log output from my upload attempts, I'll include it here, although I truncated the upload URL, both for readability, and because I don't whether those S3 URL contain any sensitive info:

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 12386304

RATE: 5024869.000

TOTAL TIME: 2.465769

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 15990784

RATE: 29287150.000

TOTAL TIME: 0.546655

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 14090240

RATE: 26585358.000

TOTAL TIME: 0.530695

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 16318464

RATE: 30275443.000

TOTAL TIME: 0.539022

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 19791872

RATE: 52498334.000

TOTAL TIME: 0.377990

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 15597568

RATE: 39993764.000

TOTAL TIME: 0.390117

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 13762560

RATE: 24144842.000

TOTAL TIME: 0.570653

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 15138816

RATE: 27828705.000

TOTAL TIME: 0.544366

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 10878976

RATE: 32571784.000

TOTAL TIME: 0.334831

FILE: roboxes-debian8-vmware-3.2.20.box

CODE: 400

IP: 13.249.22.85

BYTES: 14745600

RATE: 26237722.000

TOTAL TIME: 0.562949

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 2 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 3 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 4 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 5 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 6 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 7 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 8 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 9 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

/opt/vagrant/embedded/bin/curl --tlsv1.2 --fail --silent --output /dev/null --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: roboxes-debian8-vmware-3.2.20.box CODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/roboxes-debian8-vmware-3.2.20.box https://vagrantcloud-files-production.s3-accelerate.amazonaws.com/archivist/boxes/<snip> failed... retrying 10 of 10.

curl: (22) The requested URL returned error: 400 Bad Request

The command failed 10 times.

I found this possible issue, which might be related. There you say the issue is file size, but the box file above is actually 905151894 bytes, or only 864 MiB, well below the 5 GiB limit.

It might be worth noting from the log output above, that if you look at the BYTES line, a different number is included with each attempt. This indicates curl was able to upload part of the file before the attempt fails.

Just the error code won't be super helpful since 400 is used for a bunch of different errors. When an error is encountered from the S3 side (like this 400 error) the contents of the response body will include details of the error which will provide the underlying issue. So capturing the response and outputting it on error would probably be a good first step to seeing what's up. You could also have the retry be the full process of requesting the direct upload and performing the upload so if there is an issue with the generated URL you'd be getting a new one for the retry.

@chrisroberts I don't suppose you know how I can capture the response body you mentioned, when I'm using the curl command line tool to perform a PUT request? The only way I can think of, would involve capturing the raw network data using tcpdump or Wireshark. Only with this issue, the failures are unpredictable, and occur in randomly inside a 500+ GiB upload. That makes it though. To say nothing of intercepting HTTPS traffic.

During the previous release, I spent time troubleshooting and realized the 400s weren't being caught previously. That's because curl returns an exit code of 0 by default for partial uploads. I tweaked code so it retried those errors this time around, for anything other than a 200. IT wasn't ideal, but I was hoping it fixed the problem. I also added logic to repeat the final POST multiple times. Which seems to have cut down on the failures. But I still got that single failure.

I 've thought about retrying the entire upload process automatically, but it will take a bit of dev effort to do right. If I cut corners, it will only be a matter of time until the script ends up in a recursive/non-terminating loop.

I had a situation recently where the box file hash got appended to an existing sha256 file by accident. And the upload used the first line hash, instead of the correct hash on the second line. Fortunately that triggered a failure. But if something like that happened again, and I was retrying the whole process, it wouldn't be pretty.

I was hoping you had log file on the backend that might point to a culprit, and lead to either a fix or workaround.

I believe you should be able to just update your --output /dev/null to --output upload-response.txt and any response content you receive will end up in there. Since these uploads are going directly to S3, there's no request logging for the actual upload that's available.

@chrisroberts I tried that a long time ago, and got nothing, but that was using the old API. I'll give it another shot during my next release. Unfortunately that won't be for another 1, or more likely 2 weeks.

@chrisroberts yuploading a new box version, and got this output/error... does it help?

<!DOCTYPE html>

<html>

<head>

<meta name="viewport" content="width=device-width, initial-scale=1">

<meta charset="utf-8">

<title>Application Error</title>

<style media="screen">

html,body,iframe {

margin: 0;

padding: 0;

}

html,body {

height: 100%;

overflow: hidden;

}

iframe {

width: 100%;

height: 100%;

border: 0;

}

</style>

</head>

<body>

<iframe src="//www.herokucdn.com/error-pages/application-error.html"></iframe>

</body>

</html>

FILE: generic-debian10-vmware-3.2.22.box

CODE: 503

IP: 18.205.36.100

BYTES: 271974400

RATE: 11233505.000

TOTAL TIME: 24.211351

@chrisroberts I got another upload error (the output is below). I'm going to cancel this upload attempt, and restart it, but with only 4 parallel upload jobs, instead of 8. Based on past experience, this should reduce the number of upload failures I see (while also slowing down the overall upload process).

<!DOCTYPE html>

<html>

<head>

<meta name="viewport" content="width=device-width, initial-scale=1">

<meta charset="utf-8">

<title>Application Error</title>

<style media="screen">

html,body,iframe {

margin: 0;

padding: 0;

}

html,body {

height: 100%;

overflow: hidden;

}

iframe {

width: 100%;

height: 100%;

border: 0;

}

</style>

</head>

<body>

<iframe src="//www.herokucdn.com/error-pages/application-error.html"></iframe>

</body>

</html>

FILE: generic-fedora29-libvirt-3.2.22.box

CODE: 503

IP: 18.205.36.100

BYTES: 422117376

RATE: 13816358.000

TOTAL TIME: 30.552256

@chrisroberts with 4 jobs running, I saw a different kind of failure. See the output below. This what I used to see when I used the old upload API. Note there was no output from the server. And cURL records the HTTP status as 100, which is the "continue" code is sees before sending any data. Fortunately these errors get detected by my script logic and are automatically retried after a short backoff delay.i

FILE: generic-devuan3-parallels-3.2.22.box

CODE: 100

IP: 18.205.36.100

BYTES: 431751168

RATE: 41530508.000

TOTAL TIME: 10.396647

curl: (55) OpenSSL SSL_write: Connection reset by peer, errno 104

/opt/vagrant/embedded/bin/curl --tlsv1.2 --silent --show-error --request PUT --max-time 7200 --expect100-timeout 7200 --header Connection: keep-alive --write-out FILE: generic-devuan3-parallels-3.2.22.boxCODE: %{http_code}IP: %{remote_ip}BYTES: %{size_upload}RATE: %{speed_upload}TOTAL TIME: %{time_total} --upload-file /home/ladar/robox/output/generic-devuan3-parallels-3.2.22.box https://archivist.vagrantup.com/v1/object/eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJrZXkiOiJib3hlcy82NGQyM2Y5ZS0zODEwLTQwZjMtOWY0Yy05NDc3Y2E3ODAyZDEiLCJtb2RlIjoidyIsImV4cGlyZSI6MTYyMjc3ODQwNywiY2FsbGJhY2siOiJodHRwczovL2FwcC52YWdyYW50dXAuY29tL2FwaS9pbnRlcm5hbC9hcmNoaXZpc3QvY2FsbGJhY2sifQ.9aGbuZr3BzT4aAVZqDxAXZQFtZgEcKCAELXY6cf0ls4 failed... retrying 2 of 10.

FILE: generic-devuan3-parallels-3.2.22.box

CODE: 200

IP: 18.205.36.100

BYTES: 554051004

RATE: 31553676.000

TOTAL TIME: 17.559541

Not sure if you're making realtime tweaks, but I just saw a different type of error scroll by. This time it was a 500 error code, and it looks like all the server returned was the single line error message instead of the HTML page we got with 503 errors above...

Internal Server Error

FILE: generic-fedora29-libvirt-3.2.22.box

CODE: 500

IP: 18.205.36.100

BYTES: 1025128414

RATE: 30235316.000

TOTAL TIME: 33.905867

@chrisroberts I still had 19 upload failures... even after I limited the upload to 4 parallel jobs. Below is a list of the boxes that failed and the HTTP status codes.... which I got my grepping the attached log. You can skip straight to the errors inside the log that way... but it didn't seem like the generic HTML page being returned was all that useful. Should I make any more changes to the upload script, so it provides more detailed debug/error info? I removed the output to dev null as you suggested, and the log contains all that provided.

FILE: generic-devuan3-parallels-3.2.22.box

CODE: 100

--

FILE: generic-fedora29-libvirt-3.2.22.box

CODE: 500

--

FILE: generic-fedora32-libvirt-3.2.22.box

CODE: 503

FILE: generic-fedora32-parallels-3.2.22.box

CODE: 503

--

FILE: generic-netbsd9-libvirt-3.2.22.box

CODE: 503

--

FILE: generic-oracle7-parallels-3.2.22.box

CODE: 503

--

FILE: generic-ubuntu1804-hyperv-3.2.22.box

CODE: 503

--

FILE: generic-ubuntu1810-hyperv-3.2.22.box

CODE: 503

--

FILE: generic-ubuntu1910-vmware-3.2.22.box

CODE: 503

--

FILE: generic-ubuntu2004-parallels-3.2.22.box

CODE: 503

--

FILE: lineage-libvirt-3.2.22.box

CODE: 503

--

FILE: magma-alpine36-vmware-3.2.22.box

CODE: 503

--

FILE: magma-gentoo-vmware-3.2.22.box

CODE: 503

--

FILE: roboxes-centos8-parallels-3.2.22.box

CODE: 100

--

FILE: roboxes-gentoo-hyperv-3.2.22.box

CODE: 503

--

FILE: roboxes-ubuntu1604-libvirt-3.2.22.box

CODE: 500

--

FILE: roboxes-ubuntu1604-virtualbox-3.2.22.box

CODE: 100

--

FILE: roboxes-ubuntu1810-hyperv-3.2.22.box

CODE: 503

--

FILE: roboxes-ubuntu2010-hyperv-3.2.22.box

CODE: 503

The full log file...