tempo


Status: 500 Internal Server Error Body: too many failed block queries 5

Describe the bug

I recently replicated the data from the current S3 bucket to a new S3 bucket. When querying data in this new S3 bucket, I see the error "failed to get trace with id: d0504c815a12942af2fb23fbdd6fdee3 Status: 500 Internal Server Error Body: too many failed block queries 5 (max 0)".

How may I troubleshoot/fix this? Thanks.

To Reproduce

Steps to reproduce the behavior:

- Replicate data from old to new S3 bucket

- Configure Tempo to use the new S3 bucket

- Run a query

Please check your querier logs for errors on pulling data from the S3 bucket. Perhaps this is a permissions or polling issue?

Thanks for the response.

I don't see any errors in the querier logs, though. There doesn't seem to be a permission issue, since I can see log lines like this one from the querier: `level=info ts=2022-04-14T01:02:00.01027576Z caller=tempodb.go:348 org_id=single-tenant msg="searching for trace in block" findTraceID=00000000000000006002a1f980d7e12c block=0092d0a0-cb7a-4156-8958-ca9bc5a75685 found=false`

Some queries go through, but most of them fail with "too many failed block queries".

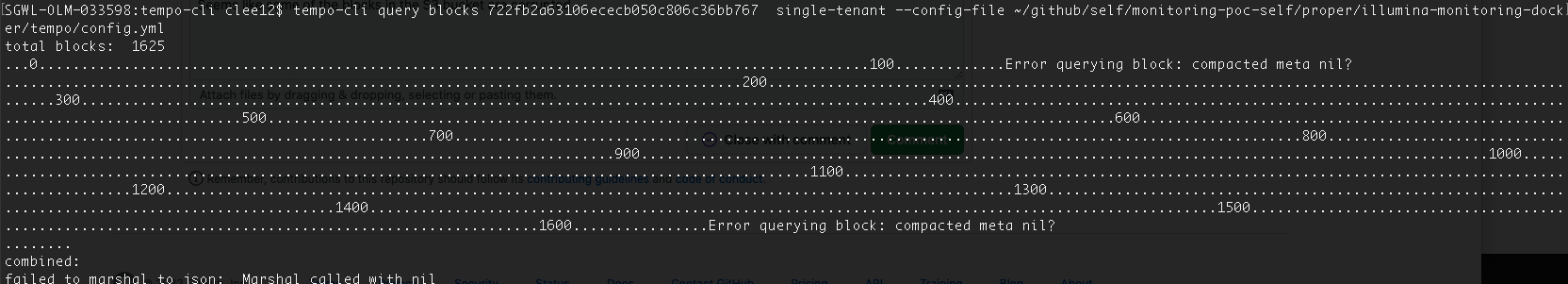

I tried running tempo-cli to query the blocks in the S3 backend directly. It seems some of the blocks in the S3 bucket are corrupted. How can I single those blocks out? Thanks.

Well, we should be able to see at least some failures in the querier logs that would help us understand why the blocks are failing.

Perhaps the replication copied over blocks in the process of being created and these partial blocks are a problem? I'd really like to find the log messages from the querier so we can feel very confident we understand why the blocks are failing.
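To test the partial-block theory, one option is to list every object key in the new bucket (with your cloud provider's tooling) and look for block directories that are missing expected files. The Go sketch below assumes keys follow the `single-tenant/<blockID>/bloom-0` layout that appears in the querier errors in this thread. The set of required files is an assumption: it depends on the Tempo block version, so adjust it before trusting the output. `findIncompleteBlocks` is a hypothetical helper, not part of tempo-cli.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// requiredObjects is an ASSUMPTION: the real file set depends on the Tempo
// block version. "meta.json" and "bloom-0" are illustrative names only.
var requiredObjects = []string{"meta.json", "bloom-0"}

// findIncompleteBlocks groups keys of the form "<tenant>/<blockID>/<object>"
// by block and returns, for each block, the required objects it lacks.
func findIncompleteBlocks(keys []string, required []string) map[string][]string {
	present := make(map[string]map[string]bool)
	for _, key := range keys {
		parts := strings.SplitN(key, "/", 3)
		if len(parts) != 3 {
			continue // not inside a block directory (e.g. a tenant index); skip
		}
		block := parts[0] + "/" + parts[1]
		if present[block] == nil {
			present[block] = make(map[string]bool)
		}
		present[block][parts[2]] = true
	}

	missing := make(map[string][]string)
	for block, objs := range present {
		for _, name := range required {
			if !objs[name] {
				missing[block] = append(missing[block], name)
			}
		}
	}
	return missing
}

func main() {
	// Hypothetical listing: block "bbbb" was only partially copied.
	keys := []string{
		"single-tenant/aaaa/meta.json",
		"single-tenant/aaaa/bloom-0",
		"single-tenant/bbbb/bloom-0",
	}
	missing := findIncompleteBlocks(keys, requiredObjects)

	// Sort for stable output, since map iteration order is random.
	blocks := make([]string, 0, len(missing))
	for b := range missing {
		blocks = append(blocks, b)
	}
	sort.Strings(blocks)
	for _, b := range blocks {
		fmt.Printf("%s is missing %v\n", b, missing[b])
	}
}
```

Blocks reported here are candidates to compare against the original bucket, not proof of corruption.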

@chenfeilee Did you ever resolve this? We randomly started seeing this in our cluster today, for both old and new data.

"Failed block queries" could be due to a huge number of reasons. That log message is where the query frontend is counting up the failed blocks to see if it passes the `tolerate_failed_blocks` threshold. Your queriers should have real log messages that indicate why the blocks are not being queried correctly.
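The threshold check described above can be sketched in Go. This is not Tempo's code: `checkFailedBlocks` is a hypothetical name, and the sketch only assumes, as the error text "too many failed block queries 5 (max 0)" suggests, that the request fails once the failed-block count exceeds `tolerate_failed_blocks`.

```go
package main

import "fmt"

// checkFailedBlocks is a HYPOTHETICAL helper: the frontend-side count of
// failed blocks is compared against the configured tolerance, and the whole
// request fails only when the count exceeds it.
func checkFailedBlocks(failedBlocks, tolerateFailedBlocks int) error {
	if failedBlocks > tolerateFailedBlocks {
		return fmt.Errorf("too many failed block queries %d (max %d)", failedBlocks, tolerateFailedBlocks)
	}
	return nil
}

func main() {
	// The values from the error in this issue: 5 failed blocks, tolerance 0.
	fmt.Println(checkFailedBlocks(5, 0))
	// In this sketch, a higher tolerance lets the same count pass.
	fmt.Println(checkFailedBlocks(5, 10))
}
```

Raising the tolerance would only hide the failures; the reason each block failed still has to come from the querier logs.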


Thanks for the response, and sorry for hijacking this issue. It ended up being Azure (I should have assumed).

Not sure if this will help, but I see the following in the logs:

`error finding trace by id, blockID: e9fa6814-9b13-4879-851d-1475a7887b8d: error retrieving bloom (single-tenant, e9fa6814-9b13-4879-851d-1475a7887b8d): reading storage container: Get "https://whatever.blob.core.windows.net/tempoprod/single-tenant/e9fa6814-9b13-4879-851d-1475a7887b8d/bloom-0?timeout=61": dial tcp: lookup whatever.blob.core.windows.net on 10.0.0.10:53: dial udp 10.0.0.10:53: operation was canceled`

This makes me believe that, in our case, the problem is CoreDNS in Azure Kubernetes rather than Tempo itself. Even worse, it seems Tempo was not even able to reach the DNS server (if I understand the error message correctly).

Notes:

- the error complaining about "too many failed block queries" is logged by the query frontend

- the error describing what actually happened is logged by the queriers

For Azure DNS issues please see this thread: https://github.com/grafana/tempo/issues/1462. It's long running but toward the bottom you will see some of the steps we took to resolve our issues.

This issue has been automatically marked as stale because it has not had any activity in the past 60 days. The next time this stale check runs, the stale label will be removed if there is new activity. The issue will be closed after 15 days if there is no new activity. Please apply keepalive label to exempt this Issue.

Hi, I'm having the same errors. The querier logs say "error retrieving bloom bloom-0 (single-tenant, e6f45a73-99c3-4803-a5eb-52ee3dda2dfa): does not exist", so I guess I have to check on the S3 side. It seems strange, though, since other instrumentation pushes to the same S3 bucket without this issue.

Hello @flenoir, did you manage to solve this error? I'm seeing the same behavior:

- Backend: Azure

- Errors in logs: `error finding trace by id, blockID: XXX: error retrieving bloom bloom-0 (single-tenant, XXX): does not exist;`

We have since removed this feature:

https://github.com/grafana/tempo/pull/2416

This will make it far easier to pinpoint issues with the trace by id path. This change will be available in the next release of Tempo.


Having the same issue.

Hi, deleting the S3 content seemed to solve the issue, but now I have the same issue again.

I will look for a solution and post here whatever I find.

So, I tried some tempo-cli commands and found errors related to vParquet (`tempo-cli: error: unsupported block version: vParquet`) when running "list index" on an existing trace ID in my S3 bucket. In the end, it seems to be solved by a setting in the Grafana data source: under TraceId Query, I enabled "use time range in query", and it works now.

I hope this helps those who have the same kind of issue.