dm_control

How to create an environment that gives depth images?

The vision environments provide only RGB images rather than RGBD (RGB + depth) images, but I see a partial implementation of depth images. Here's my attempt to set `depth=True`. It fails because the dtype is set to `uint8`, which cannot represent `inf`.

```python
import collections

import numpy as np

from dm_control import manipulation
from dm_control.manipulation.bricks import _reassemble
from dm_control.manipulation.shared import registry, tags, observations
from dm_control.manipulation.shared.observations import (
    ObservationSettings, _ENABLED_FEATURE, _ENABLED_FTT,
    _DISABLED_FEATURE, CameraObservableSpec, ObservableSpec)


class MyCameraObservableSpec(collections.namedtuple(
    'CameraObservableSpec', ('depth', 'height', 'width') + ObservableSpec._fields)):
  """Configuration options for camera observables."""
  __slots__ = ()


ENABLED_CAMERA_DEPTH = MyCameraObservableSpec(
    height=84,
    width=84,
    enabled=True,
    depth=True,
    update_interval=1,
    buffer_size=1,
    delay=0,
    aggregator=None,
    corruptor=None)

VISION_DEPTH = ObservationSettings(
    proprio=_ENABLED_FEATURE,
    ftt=_ENABLED_FTT,
    prop_pose=_DISABLED_FEATURE,
    camera=ENABLED_CAMERA_DEPTH)


@registry.add(tags.VISION)
def my_env():
  return _reassemble(obs_settings=VISION_DEPTH,
                     num_bricks=5,
                     randomize_initial_order=True,
                     randomize_desired_order=True)


env = manipulation.load('my_env', seed=0)
spec = env.action_spec()

for i in range(10):
  a = np.random.uniform(spec.minimum, spec.maximum, spec.shape)
  time_step = env.step(a)
```
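A quick NumPy-only illustration of the dtype problem described above. No dm_control is involved; the depth-like values are made up, and `inf` is included only because the question reports that the `uint8` dtype cannot hold it:

```python
import numpy as np

# Made-up depth-like values for illustration. inf is included because the
# question reports that a uint8 dtype cannot represent it.
depth = np.array([0.25, 1.7, np.inf], dtype=np.float32)

# uint8 only holds the integers 0..255: no fractions and no infinity.
info = np.iinfo(np.uint8)
print(info.min, info.max)  # 0 255

# NumPy does not consider float32 -> uint8 a safe cast.
print(np.can_cast(np.float32, np.uint8))  # False

# Forcing the cast on the finite values silently truncates them.
finite = depth[np.isfinite(depth)]
print(finite.astype(np.uint8).tolist())  # [0, 1]

# A float32 array keeps every value, including inf, unchanged.
print(np.isinf(depth).tolist())  # [False, False, True]
```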

This is actually a bug in dm_control. It turns out that calling `obs.configure(depth=True)` only sets the `depth` attribute. It never updates the `dtype` attribute (or the number of channels), both of which are set only in the constructor.
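The pattern behind this bug can be reproduced without dm_control. The sketch below uses two hypothetical toy classes, `StaleCamera` and `DerivedCamera`, not dm_control's actual `MJCFCamera`. The first derives dtype and channel count once in its constructor, as described above, so a later `configure(depth=True)` leaves them stale. The second computes them from `depth` on every access, which is one possible way such a class could avoid the issue:

```python
import numpy as np


class _Configurable:
    """Toy stand-in for a configure(**kwargs) method that just sets attributes."""

    def configure(self, **kwargs):
        for name, value in kwargs.items():
            setattr(self, name, value)


class StaleCamera(_Configurable):
    """Hypothetical toy: dtype and channels are derived once, in __init__."""

    def __init__(self, depth=False):
        self.depth = depth
        self.dtype = np.float32 if depth else np.uint8
        self.n_channels = 1 if depth else 3


class DerivedCamera(_Configurable):
    """Hypothetical toy: dtype and channels are derived from depth on access."""

    def __init__(self, depth=False):
        self.depth = depth

    @property
    def dtype(self):
        return np.float32 if self.depth else np.uint8

    @property
    def n_channels(self):
        return 1 if self.depth else 3


stale = StaleCamera()
stale.configure(depth=True)
# depth is now True, but dtype/n_channels still describe an RGB image.
print(stale.depth, np.dtype(stale.dtype), stale.n_channels)  # True uint8 3

fixed = DerivedCamera()
fixed.configure(depth=True)
print(fixed.depth, np.dtype(fixed.dtype), fixed.n_channels)  # True float32 1
```

Deriving the values on access keeps them consistent by construction, at the cost of re-evaluating a trivial expression on every read.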

A hacky fix is to edit dm_control/dm_control/manipulation/shared/cameras.py:

```python
import collections

import numpy as np  # Add this if cameras.py does not already import NumPy.
from dm_control.composer.observation import observable


def add_camera_observables(entity, obs_settings, *camera_specs):
  """Adds cameras to an entity's worldbody and configures observables for them.

  Args:
    entity: A `composer.Entity`.
    obs_settings: An `observations.ObservationSettings` instance.
    *camera_specs: Instances of `CameraSpec`.

  Returns:
    A `collections.OrderedDict` keyed on camera names, containing pre-configured
    `observable.MJCFCamera` instances.
  """
  obs_dict = collections.OrderedDict()
  for spec in camera_specs:
    camera = entity.mjcf_model.worldbody.add('camera', **spec._asdict())
    obs = observable.MJCFCamera(camera)
    obs.configure(**obs_settings.camera._asdict())
    # ====== Hack: force depth on every camera, regardless of obs_settings. ====== #
    obs.configure(depth=True)
    obs._dtype = np.float32  # Explicitly change the dtype (configure() does not).
    obs._n_channels = 1  # Depth images have a single channel.
    # ====== #
    obs_dict[spec.name] = obs
  return obs_dict
```
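The patched observable returns raw float32 depth rather than a displayable image, so it typically needs rescaling before plotting. Below is a minimal NumPy sketch, assuming an (H, W, 1) float array like the camera observation this patch produces. `depth_to_display` is a hypothetical helper, not part of dm_control, and mapping non-finite values to the farthest finite depth is an arbitrary choice:

```python
import numpy as np


def depth_to_display(depth):
    """Hypothetical helper: map an (H, W, 1) float depth array to [0, 1].

    Non-finite entries are set to the largest finite depth; the nearest
    pixels become 0 (dark) and the farthest become 1 (bright).
    """
    d = np.asarray(depth, dtype=np.float32)
    if d.ndim == 3 and d.shape[-1] == 1:
        d = d[..., 0]  # Drop the single channel: (H, W, 1) -> (H, W).
    finite = np.isfinite(d)
    if not finite.any():
        return np.zeros_like(d)
    lo, hi = d[finite].min(), d[finite].max()
    d = np.where(finite, d, hi)  # Treat inf/NaN as "as far as anything seen".
    if hi == lo:
        return np.zeros_like(d)  # Flat image: avoid dividing by zero.
    return (d - lo) / (hi - lo)


# Synthetic stand-in for an (84, 84, 1) float32 depth observation.
fake = np.linspace(0.5, 2.0, 84 * 84, dtype=np.float32).reshape(84, 84, 1)
fake[0, 0, 0] = np.inf
img = depth_to_display(fake)
print(img.shape, img.dtype, float(img.min()), float(img.max()))
```

With matplotlib, `plt.imshow(depth_to_display(obs), cmap="gray")` would then render it, where `obs` is the depth observation array.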

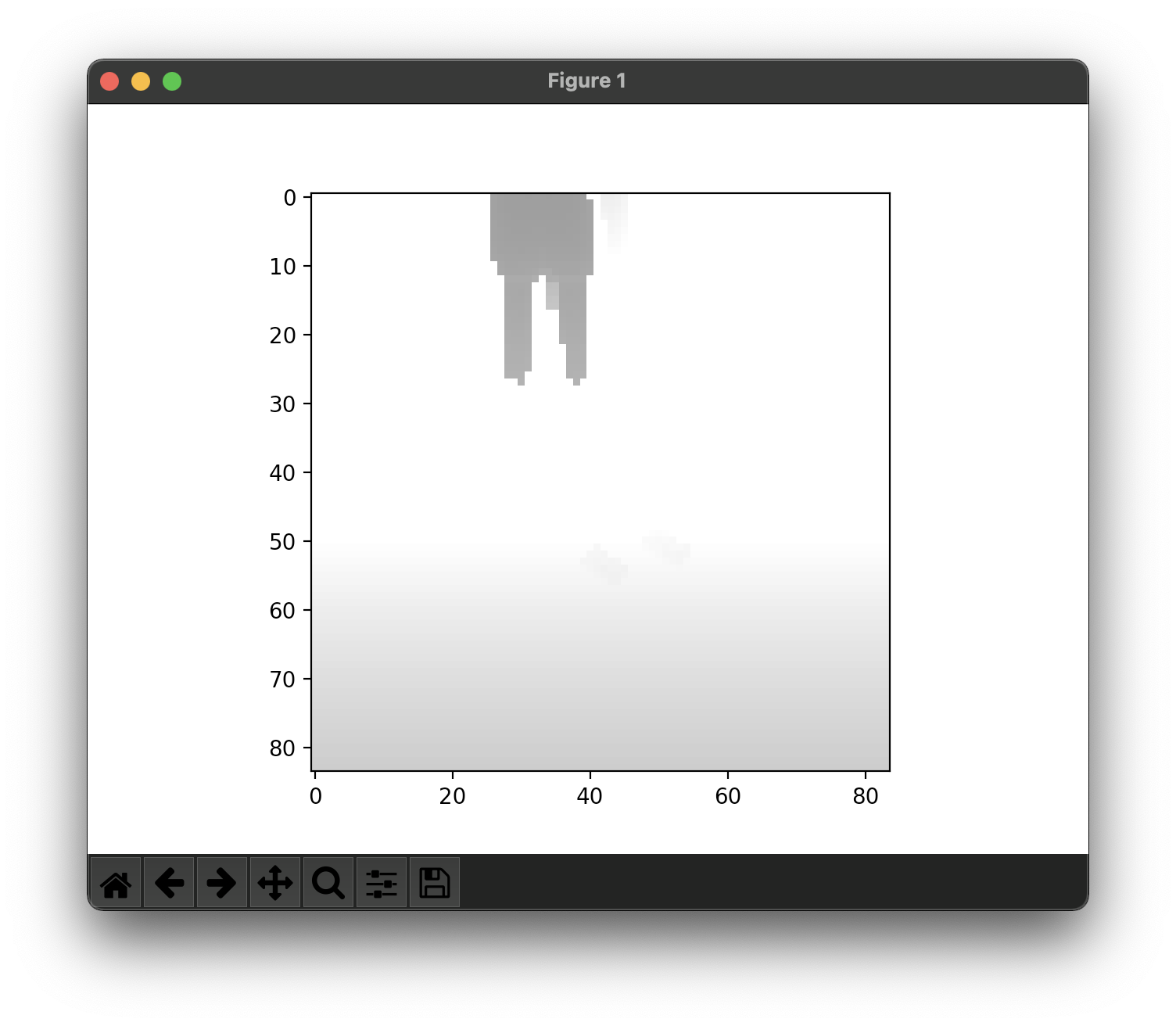

Now, when I run `explore` with, say, `stack_2_bricks_vision`, I can plot `timestep.observation["front_close"]` and obtain the following (84, 84, 1) `np.float32` array: