go

go copied to clipboard

go copied to clipboard

cmd/asm: refactor the framework of the arm64 assembler

I propose to refactor the framework of the arm64 assembler.

Why ?

1, The current framework is a bit complicated, not easy to understand, maintain and extend. Especially the handling of constants and the design of optab.

2, Adding a new arm64 instruction is taking more and more effort. For some complex instructions with many formats, a lot of modifications are needed. For example, https://go-review.googlesource.com/c/go/+/273668, https://go-review.googlesource.com/c/go/+/233277, etc.

3, At the moment, we are still missing ~1000 assembly instructions, including NEON and SVE. The potential cost for adding those instructions are high.

People is paying more and more attention to arm64 platform, and there are more and more requests to add new instructions, see https://github.com/golang/go/issues/40725, https://github.com/golang/go/issues/42326, https://github.com/golang/go/issues/41092 etc. Arm64 also has many new features, such as SVE. We hope to construct a better framework to solve the above problems and make future work easier.

Goals for the changes 1, More readable and easy to maintain. 2, Easy to add new instructions. 3, Friendly to testing, Can be cross checked with GNU tools. 4, Share instruction definition with disassembly to avoid mismatch between assembler and disassembler.

How to refactor ?

First let's take a look of the current framework.

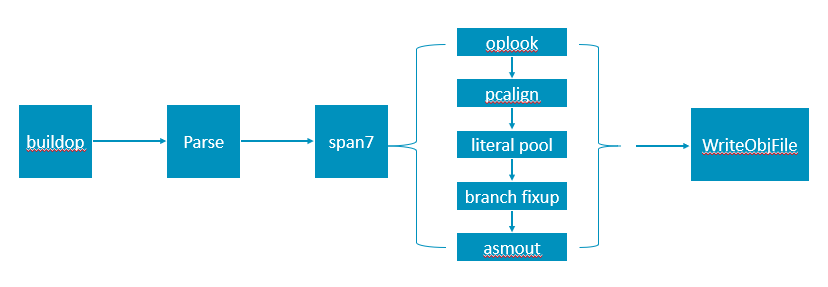

We mainly focus on the span7 function which encodes a function's Prog list. The main idea of this function is that for a specific Prog, first find its corresponding item in optab (by oplook function), and then encode it according to optab._type (in asmout function).

In oplook, we need to handle the matching relationship between a Prog and an optab item, which is quite complex especially those constant types and constant offset types. In optab, each format of an instruction has an entry. In theory, we need to write an encoding function for each entry, fortunately we can reuse some similar cases. However sometimes we don't know whether there is a similar implementation, and as instructions increase, the code becomes more and more complex and difficult to maintain.

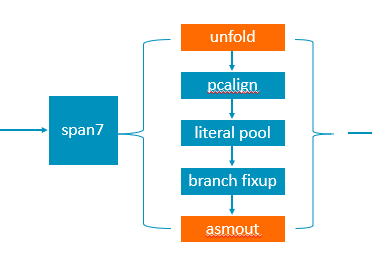

We propose to change this logic to: separate the preprocessing and encoding of a Prog. The specific method is to first unfold the Prog into a Prog corresponding to only one machine instruction (by hardcode), and then encode it according to the known bits and argument types. Namely: encoding_of_P = Known_bits | arg1 | arg2 | ... | argn

The control flow of span7 becomes:

We basically have a complete argument type description list and arm64 instruction table, see argument types and instruction table When we know the known bits and parameter types of an instruction, it is easy to encode it.

With this change, we don't need to handle the matching relationship between the Prog and the item of optab any more, and we won't encode a specific instruction but the instruction argument type. The number of the instruction argument type is much less than the instruction number, so theoretically the reusability will increase and complexity will decrease. In the future to add new instructions we only need to do this:

1, Set an index to the goal instruction in the arm64 instruction table.

2, Unfold a Prog to one or multiple arm64 instructions.

3, Encode the parameters of the arm64 instruction if they have not been implemented.

I have a prototype patch for this proposal, including the complete framework and support for a few instructions such as ADD, SUB, MOV, LDR and STR. See https://go-review.googlesource.com/c/go/+/297776. The patch is incomplete, more work is required to make it work. If the proposal is accepted, we are committed to taking charge of the work.

TODO list: 1, Enable more instructions. 2, Add more tests. 3, Fix the assembly printing issue. 4, Cross check with GNU tools.

CC @cherrymui @randall77 @ianlancetaylor

Keep in mind that the "Why?" section applies to pretty much all of the architectures. To the extent that we can design a system that works across architectures (sharing code, or even just sharing high-level design & testing), that would be great.

See also CL 275454 for the testing piece.

See also my comment in CL 283533.

Keep in mind that the "Why?" section applies to pretty much all of the architectures. To the extent that we can design a system that works across architectures (sharing code, or even just sharing high-level design & testing), that would be great.

I agree, but designing a general framework requires knowledge of multiple architectures. If Google can do this design, it would be best. We are happy to follow and complete the arm64 part.

See also CL 275454 for the testing piece.

Yes, I know this patch, Junchen is my colleague. This patch takes the mutual test of assembler and disassembler one step forward, and we also hope to use the gnu toolchain to test the Go assembler. It would be great if we could merge the golang/arch repo into the golang/go repo, so that the disassembler and assembler could share a lot of code.

See also my comment in CL 283533. "But maybe there's a machine transformation that could automate reorganizing the tables?"

This table is best to be as simple as possible, preferably decoupled from the Prog struct, and only contains the architecture-related instruction set.

Refer to Openjdk and GCC, if we divide the assembly process into two stages, macro assembly and assembly. The assembly is only responsible for encoding an architecture-related instruction, and the macro assembly is responsible for the preprocessing of the Prog before encoding. Then we only need to use this table for encoding and decoding.

I hope to create a new branch to complete the refactoring of the arm64 assembler if this is acceptable. Thanks for your comment.

Hi, are there any further comments or suggestions?

If you think the above scheme is feasible, I am willing to extend it to all architectures. I can complete this architecture-independent framework and the arm64 part, and other architectures need to be completed by others. Of course, we will retain the old code before completing the porting of an architecture and set a switch for the new and old code paths.

We have tried to add initial SVE support to the assembler under the existing framework, see CL 153358, but in order to get rid of the many existing cases, we had to add an asmoutsve function, it looks just like a copy of asmout. But it is foreseeable that with the increase of SVE instructions in the future, the same problem will appear again. Go may not currently support SVE instructions, but Go will always support new architecture instructions, maybe AVX512 instructions, or other new architecture instructions. But as long as this problem still exists, it will only become more and more difficult to deal with as time goes on. To be honest, we hope that upstream can give us a clear reply, so that we will feel easier to make the plan for next quarter. Can I apply for Upstream to discuss this issue at the next compiler & runtime meeting? Thank you.

Thanks for the proposal.

The current framework is a bit complicated, not easy to understand, maintain and extend.

I think this is subjective. That said, I agree that the current optab approach is probably not the best fit for ARM64. I'm not the original author of the ARM64 assembler, but I'm pretty sure the design comes from the assembler of other RISC architectures, which comes from the Plan 9 assemblers. In my perspective the original design is actually quite simple, if the encoding of the machine instruction is simple. Think of MIPS or RISC-V, there are just a small number of encodings (that's where oprrr, opirr, etc. come from); offsets are pretty much fixed width and encoded at the same bits. However, this is not the case of ARM64. Being RISC it started simple but more and more things get added. Offsets/immediates have so many ranges/alignment requirements that differs from instruction to instruction, not to mention the BITCON encoding. Vector instruction is much worse -- I would say there is no regularity (sorry about being subjective). That said, the encoding is quite bit-efficient for a fixed-width instruction set.

Given that the ARM64 instruction encoding is not like what the optab approach is best suited, I think it is reasonable to come up a different approach. I'm willing to give this a try.

unfold

This sounds reasonable to me. It might simplify a bunch of things. It may also make it possible to machine-generate the encoding table that converts individual machine instruction to bytes.

But keep in mind that there are things handled in the Prog level, e.g. unsafe point and restartable sequence marking. Doing it at Prog level is simple because some Prog is restartable while its underlying individual instructions are not. I wonder how this is handled.

======

cc @laboger @pmur I think the PPC64 assembler is also undergoing a refactor, and there may be similar issues. It would be nice if ARM64 and PPC64 contributors could work together on a common/similar design, so it is easier to maintain cross-architecture-wise.

Currently, in my perspective there are essentially 4 kinds of assembler backends in Go: x86, ARM32/ARM64/MIPS/PPC64/S390X which are somewhat similar, RISC-V which is a bit different, and WebAssembly. As someone maintaining or co-maintaining those backends, I'd really appreciate if we can keep this number small. Thanks.

I have not had much time to digest this yet. Speaking for my PPC64 work, I have no existing plans to rewrite our assembler. Adding ISA 3.1 support (particularly for 64b instructions) requires some extra work, but not so much to make it look much different than it does today. A couple thoughts below on the current PPC64 asm deficiencies:

A framework to decompose go opcodes which don't map 1-1 with a machine instruction would likely simplify laying out code. Similarly, we need to fixup large jumps from conditional operations and respect alignment restrictions with 64b ISA 3.1 instructions.

Likewise, something to more reliably synchronize the supported assembler opcodes against pp64.csv (residing with the disassembler) would be desirable to avoid the churn of adding new instruction support as needed.

But keep in mind that there are things handled in the Prog level, e.g. unsafe point and restartable sequence marking. Doing it at Prog level is simple because some Prog is restartable while its underlying individual instructions are not. I wonder how this is handled.

This is easy to fix, we just need to mark the first instruction of the sequence as restartable. For example:

op $movcon, [R], R -> mov $movcon, REGTMP + op REGTMP, [R], R

We'll mark the first instruction mov $movcon, REGTMP as restartable (although it uses REGTMP) so this instruction can be preempted, and leave the second instruction op REGTMP, [R], R as non-restartable because it uses REGTMP so that it won't be preempted. Anyway we can fix this problem by making a mark when unfolding.

Another problem is the printing of assembly instructions. Currently we print Go syntax assembly listing, namely unfolded "Prog"s, after unfolding, we can no longer print the previous Prog form. And we can't print before unfolding, because at that time we haven't got the Pc value of each Prog. Of course, if we feel that the new print format is also reasonable, then this is not a problem.

The situation of different architectures seems to be different, but arm64 has many new features and instructions that are moving from design to real device, so I can see how much work is needed to add and maintain new instructions. so can we just do arm64 first? In the future, other architectures can decide whether to adopt the arm64 solution according to their own situation. I believe that even if Google designs a general assembly framework later, our work will not be wasted.

I think the PPC64 assembler is also undergoing a refactor, and there may be similar issues. It would be nice if ARM64 and PPC64 contributors could work together on a common/similar design, so it is easier to maintain cross-architecture-wise.

We were not the original authors of the PPC64 assembler either and there is a lot of opportunity for clean up. The refactoring work @pmur is doing now is a lot of simplification of existing code, in addition to making changes to prepare for instructions in the new ISA. IMO his current work won't necessarily be a wasted effort if we want to use a new design at some point because he is eliminating a lot of clutter.

I'm not an ARM64 expert but my understanding is that we are similar enough that their design should be compatible for us. I'm not sure about all the other architectures you list above -- depends on whether the goal is to have a common one for all or just start with ARM64 and PPC64.

It sounds like ARM64 wants to move ahead soon, probably this release, and I'm not sure that is feasible for all other architectures. But if others later adopt the same or similar design that should at least simplify the support effort Cherry mentions above.

I am totally in favor of sharing code as much as possible.

cc @billotosyr @ruixin-bao

Yeah, I think we can start with ARM64.

If there is anything that PPC64 or other architecture needs, it is probably a good time to bring it up now. Then we can incorporate that and come up a design that is common/similar across architectures.

I think ARM64 and PPC64 are the more relatively complex ones. If a new design works for them, it probably will work well for MIPS and perhaps ARM32 as well.

Okay, then I will start to complete the above prototype patch.

Regarding cross-architecture and sharing code, I totally agree with this idea and will try my best to do it. But because the implementation of assembler is closely related to the architecture, to be honest, I don’t think we can share a lot of code, but the implementation idea is sharable. Just like the current framework, many architectures use the optab design.

Let me repeat our refactoring ideas:

... -> Unfold -> Fixup -> Encoding ->...

What we do in the Unfold function:

- If one

Progcorresponds to multiple machine instructions, expand it into multipleProgs, and finally eachProgcorresponds to one machine instruction. - Set the relocation type if necessary.

- Make some marks if necessary, such as literal pool, unsafe pointer, restartable.

- Hardcode each

Progto the right machine instruction.

What we do in the Fixup function:

- Branch fixup.

- Literal pool.

- Instruction alignment.

- Set the

Pcvalue of each Prog. - Any other processing before encoding.

Originally, steps 1, 2, and 3 are separate stages, but I am not sure if all architectures require these processes, so I put them all in a large function

Fixup, which actually deals with anything before encoding.

What we do in the Encoding function:

The Encoding function only calculates the binary encoding of each Prog. The idea for arm64 is: known_bits | args_encoding. An architecture instruction information table will be used here, which contains the known bits and parameter types of each instruction, so encoding an instruction will be converted to encoding the instruction parameters. Maybe the instructions of each architecture are different, but I think it shouldn't be difficult to encode a machine instruction for each architecture.

I'm going do it according to this idea, and I also welcome any suggestions on this design and code at any time.

I'm looking at the design and code and I have a question related to the unfoldTab and using it to include the function to be called for processing. I believe that means as each prog is processed, the function being called to process it will be called through a function pointer found in the table, and I don't think a function called this way can be inlined. Won't this cause a lot more overhead at compile/assembly time, since currently the handling of each opcode is done through a huge switch statement?

Won't this cause a lot more overhead at compile/assembly time, since currently the handling of each opcode is done through a huge switch statement?

Yes. originally I guess table lookup maybe better than switch-case, I didn't consider inlining. I'll check which one is better, thanks.

Hi, update my progress and the problems encountered, and hope to get your help.

I only changed the arm64/linux assembler at present, and the plan is to do the arm64 first and then consider the cross-architecture part. The code is basically completed, all tests of all.bash have pass except for the ARM64EndToEnd test.

Repeat the implementation ideas before talking about the problems encountered:

- Split all

Progsinto smallProgsin a function calledunfold, so that eachProgcorresponds to only one machine instruction, and calculate the corresponding entry of eachProgin theoptabinstruction table in this function. - Deal with literal pool.

- Processing branch fixup.

- Encode each

Prog.

The two problems encountered are:

-

Since we split the large

Proginto smallProg, the assembly instructions printed by the-Soption of the assembler and compiler are different from the previous ones. For example,MOVD $0x123456, R1The previous print result is:MOVD $0x123456, R1The print result now is:MOVZ $13398, R1+MOVK $18, R1Another situation is that Prog has not been split, but its parameters for encoding were changed, such asSYSL $0, R2Previous print result:SYSL ZR, R2The print result now:SYSL ZR, $0, $0, $0, R2In addition, since the codegen test depends on the printing result, the change of the printing format will cause the corresponding change of the codegen test. -

As mentioned earlier, the ARM64EndToEnd test failed, which is also caused by the splitting of large

Proginto smallProg. For example,SUB $0xaaaaaa, R2, R3The expected encoding result is43a82ad163a86ad1, which is a combination of two instruction encodings. But now the largeProgcorresponding to this instruction was split into two smallProgs, and our test is to check the coding results of the largeProg. What we actually get is the encoding 43a82ad1 of the first smallProg, so the test fails.

These problems are all caused by the original Prog being split or modified. The test failures can be fixed by some methods, what I want to ask is whether the change in the assembly printing format is acceptable?

If it is unacceptable, I will find a way to keep the original Prog when unfolding, and save the small Progs in a certain field of the original Prog for later encoding. This may use a little more memory, but I guess the impact should be small.

Hope to get your comments. /CC @cherrymui @randall77 Thanks.

I think it is okay to change the printing or adjusting tests, if the instructions have the same semantics.

Rewriting a Prog to other Prog (or Progs) is more of a concern for me. Things like rewriting SUB $const to ADD $-const are okay, which is pretty local. Rewriting one Prog to multiple Progs, especially with REGTMP live across Progs, is more concerning. This may need to be reviewed case by case. Or use a different data structure.

I think it is okay to change the printing or adjusting tests, if the instructions have the same semantics.

I will prepare two versions, one with Prog split, and the other without Prog split.

Rewriting a Prog to other Prog (or Progs) is more of a concern for me. Things like rewriting

SUB $consttoADD $-constare okay, which is pretty local. Rewriting one Prog to multiple Progs, especially with REGTMP live across Progs, is more concerning. This may need to be reviewed case by case. Or use a different data structure.

Yes, this is also the most troublesome part. According to what I have observed so far, splitting a Prog into multiple Progs only affects whether an instructions is isRestartable. Because if a Prog does not use REGTMP, the split has no effect on it. If a Prog uses REGTMP, the small Prog after splitting becomes an unsafe point due to the use of REGTMP and becomes non-preemptible. It was restartable before, but it is not anymore. But this does not affect the correctness, and the performance impact should be relatively small.

Change https://golang.org/cl/347490 mentions this issue: cmd/internal/obj/arm64: refactor the assembler for arm64 v1

Change https://golang.org/cl/297776 mentions this issue: cmd/internal/obj/arm64: refactor the assembler for arm64 v2

Change https://golang.org/cl/347531 mentions this issue: cmd/internal/obj/arm64: refactor the assembler for arm64 v3

Hi, I uploaded three implementation versions, of which the second and third versions are based on the first, because I'm not sure which one is better. Their implementation ideas are basically the same, the difference is whether to modify the original Prog when unfolding, and whether to use a new data structure.

- The first version will basically not change the original Prog when unfolding. If a Prog corresponds to multiple machine instructions, it will create new Progs and save the unfolded result in the Rel field of the original Prog. Example, unfold p1, q1~q3 are also Progs.

... -> p0->p1->p2->p3...

|

| Rel

\|/

q1-> q2->q3

- The second version will expand the Prog in place, so it will modify the original Prog. It will change the printing form of some assembly instructions. Such as MOVD may be printed as MOVZ + MOVK

... -> p0->p1->p2->p3... will be unfolded as

... -> p0->q1->q2->q3->p2->p3...

- The third version will not modify the original Prog, it newly defines a new data structure Inst to represent machine instructions. The advantage of this is that it is convenient to convert Go assembly instructions into GNU instructions, and mutual test with GNU.

... -> p0->p1->p2->p3...

|

| Inst

\|/

inst1-> inst2->inst3

Generally speaking, the instructions generated by the three versions are the same, so there is no difference in speed. However, there will be a little difference in memory usage. The second version causes the smallest memory allocation regression, the first version is the second, and the third version is the worst.

https://go-review.googlesource.com/c/go/+/347532 is an example of adding instructions, based on the first version. This example is relatively simple, I will add a more representative example later.

Finally we'll only select one of them, so could you help review these patches help me figure out which one is what we want, or better idea? I know these patches are quite large and hard to review, but I don't find a good way to split them into smaller ones, because then bootstrapping will fail. Thanks.

Based on some early feedback from @cherrymui , we now only keep one version, which is basically version 3 mentioned above, but with a little difference. Due to the large amount of change, it is not easy to review, so the code has been reviewed for several release cycles. I wonder if it's possible to merge this into the master branch in the early stage when the 1.20 tree is open? That way, if there are issues, we'll have plenty of time to deal with them before the 1.20 release. In fact, since this implementation only affects arm64, and the logic is relatively simple, I am very confident in maintaining the code.

I've rebased the code many times since the first push, and the implementation compiles successfully and passes all tests, which also proves that my implementation has no correctness issues. So what are our concerns? The design or code quality or just no time to review?

Change https://go.dev/cl/424137 mentions this issue: cmd/internal/obj/arm64: new path for adding new instructions

@erifan Thank you for all your contributions. Excited to see your work land in an upcoming release!

Please forgive me if I'm joining this discussion too late or raising topics that have already been covered.

I'm the author of avo, a high-level assembly generator for Go that aims to make assembly code easier to write and review. Thus far, it's been amd64 only, but as I'm sure you understand, I'm getting a lot of interest in arm64 support https://github.com/mmcloughlin/avo/issues/189.

One of the major inputs to this work is the instruction database. I have spent a lot of time looking at the ARM Machine Readable Specification, but I've found it to be frustrating in the sense that it's both extraordinarily detailed but also fails to provide certain useful data, at least without substantial effort to derive it. I've got the impression from Alastair Reid's posts (such as Bidirectional ARM Assembly Syntax Specifications), as well as Wei Xiao's arm64 disassembler CL, that ARM may have internal data sources that are more convenient to use. Beyond this, there are also various ways in which the Go assembler syntax differs from the standard specification, which have to be captured somehow.

Therefore, I would like to ask if it might be possible that alongside this work to extend and improve arm64 support in the Go assembler, that you might also be able to provide a structured representation of the instruction set? The goal would be a JSON file or any structured file format that other tooling developers could use. This would be immensely valuable as an input to code generation in avo.

If this sounds like something you might be open to, I'd be happy to get into the nitty-gritty details.

Thanks again for all your work!

Hi @mmcloughlin , thanks for your support of arm64. There are indeed many arm64 instructions missing in the Go backend, especially the vector instruction. This is why this issue exists. As for a formatted instruction set, we don't have one either. Here are two files that we obtained by parsing the pdf document when we were doing the arm64 disassembler. Hope it will be helpful to you. https://github.com/golang/arch/blob/master/arm64/arm64asm/inst.json , https://github.com/golang/arch/blob/1bb480fc256aacee6555e668dedebd1f8225c946/arm64/arm64asm/tables.go#L949

@erifan Thanks for your quick reply.

I should say I'm not referring to missing instructions. All of the instructions are in the XML files distributed by ARM. My concern is about getting the details required about the instructions and their operands, and some of the details are not easy to derive.

For comparison, avo x86 instruction database is derived from a combination of Maratyszcza/Opcodes and the go/arch x86.v0.2.csv. These are combined together with various fixes and custom logic into avo's own database: see types and data. I will need essentially the same kind of data for arm64 support. For each instruction form we'd need to know:

- Opcode. Specifically what the Go assembler calls it.

- Operands.

- Implicit operands: that is, any registers/flags read/written that are not explicitly in the assembly representation.

- Action on the operands: read/write/... for every operand. This is needed so that

avocan do liveness analysis and register allocation. - ...

I have already seen the files you referenced, but I don't think they provide the above information? Specifically, although the operands might be there, they're not in a form that can be easily used. I don't think implicit operands are represented there, or the operand actions?

You also said you derived these from a PDF, but from the code review comments I thought there was an internal tool that could not be open-sourced? Here @cherrymui asks "Is it possible to check in the generator somewhere?" and Wei Xiao replies:

The generator is a tool written with C++ for reading ARM processor description files to generate back-end utility functions for various languages and JIT projects. But it's only for internal use and not open-sourced so far.

See: https://go-review.googlesource.com/c/arch/+/43651/3..11/arm64/arm64asm/condition.go#b83

In another comment, Wei Xiao acknowledges that the JSON representation does not contain enough information about operands and that he had to use the internal tool instead:

But we soon find that there are a lot of manual work since JSON file doesn't classify operands of each instruction, which is actually the major work for generating the table. Classifying operands (i.e. decoder arguments) manually is also error-prone and unsystematic since there are +1k instructions. So we switch to our internal tool which has already classified all instruction operands and just reuse the JSON for test case generation.

See: https://go-review.googlesource.com/c/arch/+/43651/comment/ac7615dd_07db74f9/

So the question is whether you can provide a database in go/arch which would be like the inst.json you shared but with more complete information about the instruction operands and actions, which would be enough to provide the same kind of data that avo uses for x86?

Thanks again!

So the question is whether you can provide a database in go/arch which would be like the inst.json you shared but with more complete information about the instruction operands and actions, which would be enough to provide the same kind of data that avo uses for x86?

Sorry @mmcloughlin I don't have such a document. There are some xml documents inside ARM, but the format is not exactly in line with our needs. It needs some processing before it can be used. I can't share it with you.

Hi all, thank you for working on this. Just wanted to mention that Avo support is pretty critical for us on the cryptography side. We've found Avo generators much easier to produce and review than raw assembly, and I'd be much more likely to review new Avo than new assembly for arm64 (and amd64).

Thanks all, especially @mmcloughlin, for the interesting discussion about avo and machine readable instruction data!

As @erifan mentioned that currently there isn't such data, I wonder if a better option would be creating such data (even if it is hand-written), which could be beneficial for both the Go assembler (for this issue) and tools (including e.g. avo, and Go's disassembler)?

The current CL writes operand processing and instruction encoding functions by hand. What about write the same information as a table instead? I'd think they are roughly the same amount of effort? For the assembler, we could then use the table, either generating code from the table, or using some table-driven technique to process the instructions.

Thanks.

I wonder if a better option would be creating such data (even if it is hand-written),

I think our current practice is exactly like this, but inst.go only contains the fields required for encoding, and avo needs more information.

By the way, this table is not readily available, we need some manual work to get it. But once we get it, it doesn't cost much to maintain because it doesn't need to be updated very often.

The current CL writes operand processing and instruction encoding functions by hand.

The current CL does not need to process operands, it only needs to match the operands of Go assembly instructions to the operands of machine instructions. This mapping is essential.

The current CL changes the encoding of the instruction into the encoding of the operand, and this part of the work is manual. I also said that we can make it automatic, but I think the effort of writing generating code by hand may be higher than or equal to the effort of manual encoding, because the encoding of these operands is actually very simple.

I don't know if we can get enough information to get rid of the encoding of the operand, that is, refine the operand into 'elements', and then only need a few auxiliary functions to encode the elements. Maybe this is what you mean? @cherrymui