self-hosted

self-hosted copied to clipboard

self-hosted copied to clipboard

Sentry Activity Tab is broken if a member is in a team that has >500 projects

Self-Hosted Version

22.7.0

CPU Architecture

x86_64

Docker Version

Docker Compose Version

Steps to Reproduce

- Clean install of Sentry

- Create a team

- Create 1000 projects in this team

- Access the "Activity" tab

Expected Result

A functional Activity Tab

Actual Result

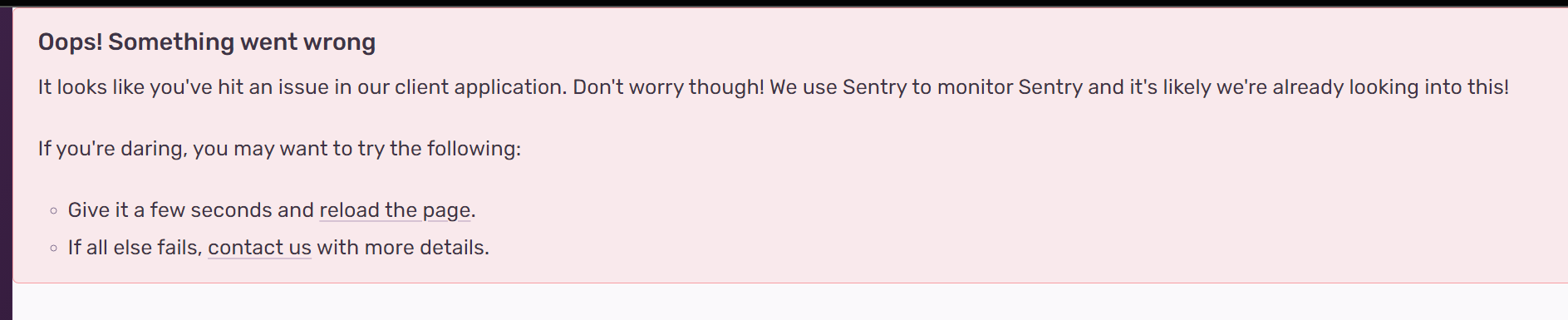

We'll see an "Oops! Something went wrong" message:

I've done a fair bit of digging into the issue, but I'm not familiar enough with Django to immediately know what a solution might be.

Looking at the logs of the web pod we seem to hit a recursion error:

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 434, in get_combinator_sql

part_sql, part_args = compiler.as_sql()

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 483, in as_sql

result, params = self.get_combinator_sql(combinator, self.query.combinator_all)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 434, in get_combinator_sql

part_sql, part_args = compiler.as_sql()

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 483, in as_sql

result, params = self.get_combinator_sql(combinator, self.query.combinator_all)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 434, in get_combinator_sql

part_sql, part_args = compiler.as_sql()

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 474, in as_sql

extra_select, order_by, group_by = self.pre_sql_setup()

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py", line 56, in pre_sql_setup

self.where, self.having = self.query.where.split_having()

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/where.py", line 38, in split_having

if not self.contains_aggregate:

File "/usr/local/lib/python3.8/site-packages/django/utils/functional.py", line 80, in __get__

res = instance.__dict__[self.name] = self.func(instance)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/where.py", line 170, in contains_aggregate

return self._contains_aggregate(self)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/where.py", line 165, in _contains_aggregate

return any(cls._contains_aggregate(c) for c in obj.children)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/where.py", line 165, in <genexpr>

return any(cls._contains_aggregate(c) for c in obj.children)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/where.py", line 165, in _contains_aggregate

return any(cls._contains_aggregate(c) for c in obj.children)

File "/usr/local/lib/python3.8/site-packages/django/db/models/sql/where.py", line 165, in <genexpr>

return any(cls._contains_aggregate(c) for c in obj.children)

RecursionError: maximum recursion depth exceeded

The above code is triggered by organization_activity.py in https://github.com/getsentry/sentry/blame/master/src/sentry/api/endpoints/organization_activity.py#L69

I've managed to programmatically reproduce the RecursionError using this script, which basically borrows lines from all the relevant parts of the Sentry codebase:

from functools import reduce

from sentry.api.base import EnvironmentMixin

from sentry.api.bases import OrganizationMemberEndpoint

from sentry.api.paginator import DateTimePaginator

from sentry.api.serializers import OrganizationActivitySerializer, serialize

from sentry.models import Activity, OrganizationMemberTeam, Project

from sentry.types.activity import ActivityType

from sentry.utils.cursors import Cursor

cursor = Cursor.from_string('0:0:0')

base_qs = Activity.objects.exclude(type=ActivityType.UNMERGE_SOURCE.value).values_list("id", flat=True)

paginator = DateTimePaginator(base_qs, order_by="-datetime")

if cursor is not None and cursor.value:

cursor_value = paginator.value_from_cursor(cursor)

else:

cursor_value = 0

base_qs = paginator.build_queryset(cursor_value, False)

union_qs = Activity.objects.none()

organization=1

member=1

project_ids = list(Project.objects.filter(organization=organization,teams__in=OrganizationMemberTeam.objects.filter(organizationmember=member).values("team"),).values_list("id", flat=True))

# project_ids must have a length of greater than 500 for this to successfully replicate the recursionerror

if project_ids:

union_qs = reduce(lambda qs1, qs2: qs1.union(qs2, all=True),[base_qs.filter(project_id=project)[: paginator.max_limit]for project in project_ids],)

queryset = Activity.objects.filter(id__in=union_qs[: paginator.max_limit]).select_related("project", "group", "user")

cursor_result = DateTimePaginator(queryset=queryset, order_by="-datetime").get_result(limit=100, cursor=cursor)

- To run this, exec into a sentry web pod and run

sentry shellbefore copy-pasting the above chunk into the shell

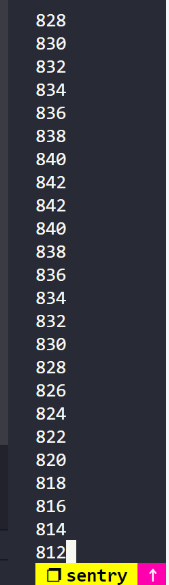

To confirm that it's a stack issue, I added an import inspect; print(len(inspect.stack(0)) line to the offending django code at /usr/local/lib/python3.8/site-packages/django/db/models/sql/compiler.py:434. Printing the stack depth, we see that:

-

If we constrain the length of project_ids to something like 400, this is the range of stack depths we get:

(goes up to 842 before going back down; this script runs successfully and gives us the activities we want)

(goes up to 842 before going back down; this script runs successfully and gives us the activities we want) -

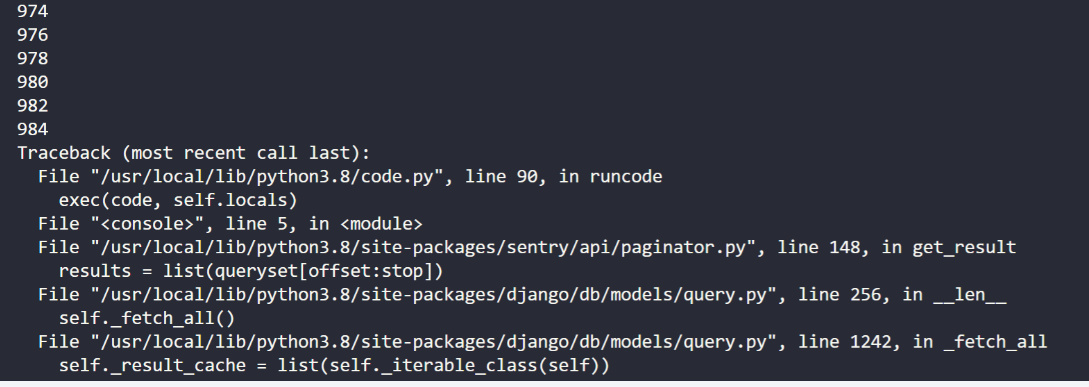

If we don't constrain the length of project_ids, it goes up to 984 before presumably throwing because it has exceeded Python's default 1000 stack limit:

-

This is independent of the projects themselves. If I supply dummy project IDs, the same recursion limit will hit (in this case, project IDs 1,000,000 onwards are nonexistent in the sentry backend I'm interacting with):

Could someone try to replicate this on their end? I'm not sure how this should be resolved in a Django-compatible manner; it seems like there's something fundamentally wrong about a large queryset union requiring deeper and deeper stack frames, when these should be parallelizable instead of a recursive query.