database

zophar

Updates:

I manually checked every file; sadly, there are two giant homebrew packs containing a total of 1185 files.

After tweaking dupe_scraper.py a little, I found that "only" 325 of them are duplicates.

You can check what I've deleted here: https://gist.github.com/dag7dev/f9dcf20e326e9397e61e7ac240de3b43

Numbers

- before cleaning: 383 .gb files and 802 .gbc files

- after cleaning: 290 .gb files and 570 .gbc files

What I've done:

- cloned everything from the Zophar website

- detected duplicates in the database folder

- added a small fragment for the Zophar entries (ROMs in the beta folder)

- if duplicates are detected while parsing beta, those duplicates are deleted

What's the matter:

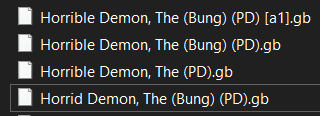

- things like this:

will happen. I don't have any idea why they are different, all

will happen. I don't have any idea why they are different, all The Horrible Demonlooks like the same while emulated (manually checked) and perhaps they are the same, but their MD5 is different so they are treated like different files.- there will be several cases

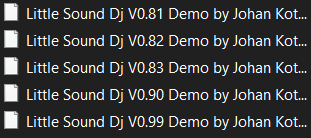

- versioning:

there are like 19 versions of this demo. Do we need to archive all of them?

there are like 19 versions of this demo. Do we need to archive all of them?

- obviously, there will be several cases here too of other roms

- missing metadata: files have been taken from those zip files on the zophar website. Unless we manually check every rom and try to understand who has made that rom and why, we are unable to find out more
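To get a feel for why two ROMs that look identical when emulated still have different MD5 hashes, one option is to list the offsets at which their bytes differ. This is a minimal sketch, not something that exists in the repository; the function name `diff_offsets` is made up for illustration.

```python
from itertools import zip_longest


def diff_offsets(a: bytes, b: bytes, limit: int = 20) -> list:
    """Return up to `limit` offsets at which the two byte strings differ.

    If one input is longer than the other, every offset past the end of
    the shorter one counts as a difference.
    """
    offsets = []
    for i, (x, y) in enumerate(zip_longest(a, b)):
        if x != y:
            offsets.append(i)
            if len(offsets) >= limit:
                break
    return offsets


def diff_files(path_a: str, path_b: str, limit: int = 20) -> list:
    """Same as diff_offsets, but reads the two files from disk first."""
    with open(path_a, "rb") as fa, open(path_b, "rb") as fb:
        return diff_offsets(fa.read(), fb.read(), limit)
```

If the differing offsets fall only in the cartridge header (for example 0x014D to 0x014F, where the header and global checksums are stored), the two files may differ only in metadata. Differences spread across the whole file would suggest a real rebuild.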

Question Time:

- is there a way to identify the author of a homebrew by simply checking a certain memory address in the ROM (e.g. the first 4 bytes are reserved...)? If so, how can we automate it?

- same as question 1, but for the build date. To simplify, we can assume that build time and release time match.

- how can we automate versioning? Do we need to develop a script that checks the similarity among ROM names and, if they "differ only by xxx chars", places those similar ROMs in a separate folder? E.g. lsdjv1.gb, lsdjv2.gb and lsdjv3.gb would be placed in a single folder named "lsdj".

- if we can't automate those two fundamental things, manually reviewing everything will take forever. Should we create a "review" system?
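On the first two questions: as far as I know, the Game Boy cartridge header (0x0100 to 0x014F) has a title field, licensee codes and a version byte, but no author or build-date field. So at best a script can pull out the title and the version byte, and homebrew often leaves even those blank or arbitrary. A minimal sketch of reading those fields follows; the function name `read_gb_header` and the returned keys are made up for illustration.

```python
def read_gb_header(rom: bytes) -> dict:
    """Extract a few fields from the Game Boy cartridge header."""
    if len(rom) < 0x150:
        raise ValueError("file too small to contain a cartridge header")

    # Title: up to 16 bytes at 0x0134-0x0143, padded with zero bytes.
    # On later cartridges the tail of this area is reused for other flags,
    # so anything after the first zero byte or non-printable is dropped.
    raw_title = rom[0x0134:0x0144].split(b"\x00")[0]
    title = "".join(chr(b) for b in raw_title if 0x20 <= b < 0x7F)

    # Header checksum: computed over 0x0134-0x014C, stored at 0x014D.
    checksum = 0
    for byte in rom[0x0134:0x014D]:
        checksum = (checksum - byte - 1) & 0xFF

    return {
        "title": title,
        "new_licensee": rom[0x0144:0x0146].decode("ascii", errors="replace"),
        "old_licensee": rom[0x014B],
        "version": rom[0x014C],
        "header_checksum_ok": checksum == rom[0x014D],
    }
```

The title could at least pre-fill a manifest field for a reviewer to confirm; author and date would still need manual research.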

PoC: the website gives a user who wants to contribute (or just play a random undiscovered game) a random ROM from the uncategorized pool. The user tries that ROM and fills in every piece of information they can discover (developer, year if available, title, brief description). This data is sent to a shared database.

Once per month, some special users (called review users) approve or modify the contributions.

If the contribution is valid, we just need to create a final JSON in an appropriate folder, update it with the data filled in by the user, extract a screenshot from the ROM and we're good. If the contribution is not valid, we just throw out the beta JSON and put the ROM back in the beta pool.
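Going back to the versioning question, grouping by name could be sketched roughly as follows: strip a trailing version marker from each filename and use what is left as the folder name. The regular expression is only a guess at how the files are named, and the names `VERSION_SUFFIX`, `group_key` and `group_by_name` are hypothetical.

```python
import re
from collections import defaultdict
from pathlib import PurePath

# Trailing version marker such as "v1", "_v2", "-1.3" or " 2".
# This pattern is an assumption about the naming, not a rule.
VERSION_SUFFIX = re.compile(r"[\s._-]*v?\d+(?:\.\d+)*$", re.IGNORECASE)


def group_key(filename: str) -> str:
    """Return the lowercased filename stem without its version suffix."""
    stem = PurePath(filename).stem.lower()
    key = VERSION_SUFFIX.sub("", stem)
    # A purely numeric name (e.g. "2048") would become empty; keep it as is.
    return key or stem


def group_by_name(filenames) -> dict:
    """Map each group key to the list of filenames that share it."""
    groups = defaultdict(list)
    for name in filenames:
        groups[group_key(name)].append(name)
    return dict(groups)
```

With this, lsdjv1.gb, lsdjv2.gb and lsdjv3.gb all end up under "lsdj". The obvious weakness is that a sequel named like "game2" would be merged with "game", so the groups would still need a quick manual check.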

Download

You will need 7z to decompress this file, since it has been compressed with LZMA. There are 850 files.

Compressed size: ~24 MB

Uncompressed size: ~170 MB

Link: https://send.vis.ee/download/7173a8a6034a34e1/#7I4AqGpg9YKVneYfKgKc-w (expires after 7 days or 20 downloads; if you need help, just PM me)

@dag7dev everything that you mentioned is reproducible somewhere? or was it done manually?

Everything has been done manually.

If needed, and as long as I have some free time, I can put together a Python script that does what I described in the "What I've done" section. But you can check it yourself: download the two giant packs, unzip everything into a folder called "beta" in the database root folder, and add this snippet to dupe_finder.py:

```python
# For each file in the beta folder: keep the first file seen for each MD5
# hash and delete any later file with the same hash.
# `os`, `d` (hash -> list of filenames) and get_file_hash() are expected to
# be available already in dupe_finder.py.
for f in os.listdir('../beta'):
    path = os.path.join('../beta', f)
    file_hash = get_file_hash(path, 'md5')

    if file_hash not in d:
        d[file_hash] = [f]
    else:
        try:
            print(f + " is duped. I am gonna remove it...")
            os.remove(path)
        except OSError:
            print("Error while deleting.\n")
```

Then run dupe_finder and see with your own eyes.

I cleaned up everything to be quicker, and the archive is there for our convenience. I can also upload a normal zip file if the problem is the extension.
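The snippet relies on `get_file_hash` from dupe_finder.py, which is not shown in this thread. For anyone trying it outside the repository, a minimal stand-in could look like this, assuming the function takes a path and an algorithm name and returns a hex digest (that signature is inferred from the call in the snippet, not confirmed):

```python
import hashlib


def get_file_hash(path, algorithm="md5", chunk_size=65536):
    """Return the hex digest of the file at `path`.

    Stand-in for the helper in dupe_finder.py; the real one may differ.
    """
    h = hashlib.new(algorithm)
    with open(path, "rb") as fh:
        # Read in chunks so large files do not have to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()
```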

I don't think it's a good idea to flood the database with those two huge compilations if we don't have enough information for the manifests and to correctly spot duplicates. Why don't you start with the rest of the entries on that page? It looks like each of them has an author and a description, and refers to a single entry.

I don't think so either, even though I think a community-review-based system could work. But those packs have tons of revisions and stuff that is hard to find elsewhere...

Ok for the other entries (even if some of them are duplicates), but what about the other entries in the giant zip folders?

I suggest starting with the (non-duplicate) single entries.

For the ROM compilations, we should do it the other way round: look for ROM/game names we don't have yet in our database (e.g. names/slugs/filenames that have no similarities with the existing ones and that have files with checksums not already present).
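A rough sketch of that filter, assuming we already have the names and MD5 checksums of the existing entries: a candidate is kept only if its checksum is unknown and no existing name is close to it. It uses `difflib.get_close_matches` from the standard library; the function name `new_candidates` and the 0.8 cutoff are arbitrary choices for illustration.

```python
import difflib


def new_candidates(candidates, known_names, known_hashes, cutoff=0.8):
    """Return the names of candidates that look new to the database.

    candidates   -- iterable of (name, md5_hex) pairs from a compilation
    known_names  -- names/slugs already in the database
    known_hashes -- set of MD5 hex digests already in the database
    cutoff       -- similarity threshold in [0, 1]; 0.8 is an arbitrary guess
    """
    known = [n.lower() for n in known_names]
    result = []
    for name, md5_hex in candidates:
        if md5_hex in known_hashes:
            continue  # the exact same file is already present
        if difflib.get_close_matches(name.lower(), known, n=1, cutoff=cutoff):
            continue  # a similarly named entry exists; leave it for review
        result.append(name)
    return result
```

Candidates rejected because of a similar name are not necessarily duplicates (they may be other versions), so they would go to manual review rather than be discarded.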

Keep in mind that the zip I provided already contains the potential new entries for the database; they're simply uncategorized.

I already cleaned up those zip packages so they are duplicate-free (by checksum only; at this time I am unable to spot duplicates by name).

OK, then I suggest you start preparing a PR with the non-compilation entries.