feathr


[BUG] Demo failing at get_offline_features using Azure Synapse

Willingness to contribute

No. I cannot contribute a bug fix at this time.

Feathr version

v0.7.1

System information

- OS Platform and Distribution (e.g., Linux Ubuntu 20.0): macOS Monterey 12.5.1

- Python version: Python 3.8.13+ from pyenv

Describe the problem

I'm running the demo notebooks locally and I can't get client.get_offline_features() to work. I'm pretty new to Azure, so it might be a problem with my setup.

I've tried it with both the product_recommendation and nyc_driver demos. The only things I've changed were:

- using `! az login --tenant ***.onmicrosoft.com` + `AzureCliCredential` instead of `! az login --use-device-code` + `DefaultAzureCredential`, since I have multiple tenants and I had issues with selecting the default one
- modifying redis env variables
- adding a few more env variables

I've used Azure Resource Provisioning to deploy the resources and those notebook demos:

Tracking information

Error log from the notebook:

2022-08-30 12:09:10.239 | INFO | feathr.spark_provider._synapse_submission:submit_feathr_job:166 - See submitted job here: https://web.azuresynapse.net/en-us/monitoring/sparkapplication

2022-08-30 12:09:10.522 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:09:40.871 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:10:11.094 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:10:41.324 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:11:11.554 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:11:41.921 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:12:12.212 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:12:42.455 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:13:12.673 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:13:42.971 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:14:13.197 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:14:43.436 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:15:13.677 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:15:44.018 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:16:14.237 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:16:44.596 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:17:14.818 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:17:45.274 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:18:15.509 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:18:45.747 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:19:15.971 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:19:46.320 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:20:16.561 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: not_started

2022-08-30 12:20:46.801 | INFO | feathr.spark_provider._synapse_submission:wait_for_completion:176 - Current Spark job status: error

2022-08-30 12:20:46.804 | ERROR | feathr.spark_provider._synapse_submission:wait_for_completion:180 - Feathr job has failed.

---------------------------------------------------------------------------

HTTPError Traceback (most recent call last)

Input In [13], in <cell line: 22>()

15 settings = ObservationSettings(

16 observation_path="wasbs://[email protected]/sample_data/product_recommendation_sample/user_observation_mock_data.csv",

17 event_timestamp_column="event_timestamp",

18 timestamp_format="yyyy-MM-dd")

19 feathr_client.get_offline_features(observation_settings=settings,

20 feature_query=feature_query,

21 output_path=output_path)

---> 22 feathr_client.wait_job_to_finish(timeout_sec=1000)

File ~/.pyenv/versions/3.8-dev/lib/python3.8/site-packages/feathr/client.py:702, in FeathrClient.wait_job_to_finish(self, timeout_sec)

699 def wait_job_to_finish(self, timeout_sec: int = 300):

700 """Waits for the job to finish in a blocking way unless it times out

701 """

--> 702 if self.feathr_spark_launcher.wait_for_completion(timeout_sec):

703 return

704 else:

File ~/.pyenv/versions/3.8-dev/lib/python3.8/site-packages/feathr/spark_provider/_synapse_submission.py:181, in _FeathrSynapseJobLauncher.wait_for_completion(self, timeout_seconds)

179 elif status in {LivyStates.ERROR.value, LivyStates.DEAD.value, LivyStates.KILLED.value}:

180 logger.error("Feathr job has failed.")

--> 181 logger.error(self._api.get_driver_log(self.current_job_info.id).decode('utf-8'))

182 return False

183 else:

File ~/.pyenv/versions/3.8-dev/lib/python3.8/site-packages/feathr/spark_provider/_synapse_submission.py:331, in _SynapseJobRunner.get_driver_log(self, job_id)

329 token = self._credential.get_token("https://dev.azuresynapse.net/.default").token

330 req = urllib.request.Request(url=url, headers={"authorization": "Bearer %s" % token})

--> 331 resp = urllib.request.urlopen(req)

332 return resp.read()

File ~/.pyenv/versions/3.8-dev/lib/python3.8/urllib/request.py:222, in urlopen(url, data, timeout, cafile, capath, cadefault, context)

220 else:

221 opener = _opener

--> 222 return opener.open(url, data, timeout)

File ~/.pyenv/versions/3.8-dev/lib/python3.8/urllib/request.py:531, in OpenerDirector.open(self, fullurl, data, timeout)

529 for processor in self.process_response.get(protocol, []):

530 meth = getattr(processor, meth_name)

--> 531 response = meth(req, response)

533 return response

File ~/.pyenv/versions/3.8-dev/lib/python3.8/urllib/request.py:640, in HTTPErrorProcessor.http_response(self, request, response)

637 # According to RFC 2616, "2xx" code indicates that the client's

638 # request was successfully received, understood, and accepted.

639 if not (200 <= code < 300):

--> 640 response = self.parent.error(

641 'http', request, response, code, msg, hdrs)

643 return response

File ~/.pyenv/versions/3.8-dev/lib/python3.8/urllib/request.py:569, in OpenerDirector.error(self, proto, *args)

567 if http_err:

568 args = (dict, 'default', 'http_error_default') + orig_args

--> 569 return self._call_chain(*args)

File ~/.pyenv/versions/3.8-dev/lib/python3.8/urllib/request.py:502, in OpenerDirector._call_chain(self, chain, kind, meth_name, *args)

500 for handler in handlers:

501 func = getattr(handler, meth_name)

--> 502 result = func(*args)

503 if result is not None:

504 return result

File ~/.pyenv/versions/3.8-dev/lib/python3.8/urllib/request.py:649, in HTTPDefaultErrorHandler.http_error_default(self, req, fp, code, msg, hdrs)

648 def http_error_default(self, req, fp, code, msg, hdrs):

--> 649 raise HTTPError(req.full_url, code, msg, hdrs, fp)

HTTPError: HTTP Error 404: Not Found
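Reading the traceback, the 404 is raised by `get_driver_log` while `wait_for_completion` is trying to log the driver output of a job that already reported `error`, so the exception hides the real failure reason. Below is a minimal sketch of how a log fetch could tolerate a missing log. It is not Feathr's code: `read_driver_log`, `fetch_log`, and `fake_fetch_404` are hypothetical names used only for illustration, and the URL is a placeholder.

```python
import urllib.error
from typing import Callable


def read_driver_log(fetch_log: Callable[[], bytes]) -> str:
    """Return the driver log as text, or a placeholder if the HTTP fetch fails.

    `fetch_log` is a hypothetical zero-argument callable standing in for
    whatever downloads the raw log bytes.
    """
    try:
        return fetch_log().decode("utf-8")
    except urllib.error.HTTPError as err:
        # A job that never left `not_started` may have no driver log at all,
        # so report the HTTP status instead of raising.
        return f"<driver log unavailable: HTTP {err.code} {err.reason}>"


def fake_fetch_404() -> bytes:
    # Simulates the failure from the traceback without any network access.
    raise urllib.error.HTTPError(
        "https://example.invalid/driver-log", 404, "Not Found", None, None
    )


print(read_driver_log(fake_fetch_404))
```

With something like this, the notebook would still show "Feathr job has failed." and the cell would fail on the job status rather than on the log download.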

The error in synapse:

Code to reproduce bug

No response

What component(s) does this bug affect?

- [ ] Python Feathr Client: This is the client users use to interact with most of our API. Mostly written in Python.
- [X] Computation Engine: The computation engine that execute the actual feature join and generation work. Mostly in Scala and Spark.
- [ ] Feature Registry API Layer: The storage layer supports SQL, Purview(Atlas). The API layer is in Python(FAST API)
- [ ] Feature Registry Web UI layer: The Web UI for feature registry. Written in React with a few UI frameworks.

Assigning to @jainr, our on-call dev.

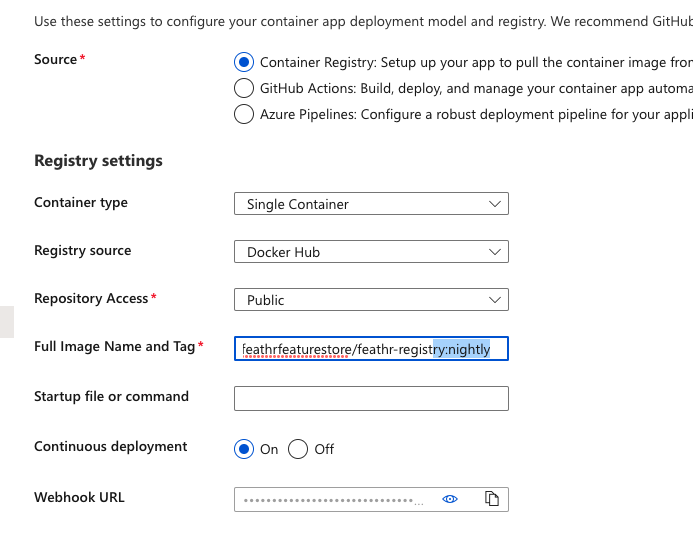

Hello @zkocur - Following up on this bug: can you please retry with the latest docker image and let me know what error you run into? There was an issue with which error codes were returned on failure. We have fixed it, and the corrected codes will help us get to the root cause. You can change the deployment image for your web app by going to the registry/UI web app and changing the docker image.

This will give you the latest release of the registry/UI docker image.

I don't suspect this to be an authentication issue, since you would have run into errors well before this cell. Also, can you confirm that you executed these permissions in cloud shell?

@zkocur closing this issue, please open a new one if you are facing issues.