segment-anything


How to save segmented image annotations in YOLO format or another supported segmentation format?

I tested it on an image and I'm getting the mask. Now I want to save this mask/annotation in YOLO, Mask R-CNN, or any other segmentation format. What do I need to do? Thanks.

If you're doing instance segmentation using COCO format, you just need to provide the bounding box output from the SAM model for the given mask. For the segmentation itself, you'd probably need to use something like OpenCV's findContours to get a list of the vertices and supply it to the segmentation field in the file. Alternatively, given the mask, just calculate the bounding box coordinates:

# Find contours in the mask image
contours, _ = cv2.findContours(mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

# Convert the contours to the format required for segmentation in COCO format
segmentation = []
for contour in contours:
    # Flatten the contour coordinates into a 1D array
    contour = contour.flatten().tolist()
    # Convert the 1D array to a list of (x, y) pairs
    contour_pairs = [(contour[i], contour[i+1]) for i in range(0, len(contour), 2)]
    # Convert the (x, y) pairs to a list of integers
    segmentation.append([int(coord) for pair in contour_pairs for coord in pair])

# Define annotation in COCO format
annotation = {
    'id': 1,
    'image_id': 1,
    'category_id': 1,
    'segmentation': segmentation,
    'area': int(cv2.contourArea(contours[0])),
    'bbox': [int(x) for x in cv2.boundingRect(contours[0])],
    'iscrowd': 0
}

COCO format:

{
    "info": {},
    "licenses": [],
    "images": [
        {
            "id": 1,
            "file_name": "example.jpg",
            "height": 480,
            "width": 640
        }
    ],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,
            "category_id": 1,
            "segmentation": [[10,10,100,10,100,100,10,100]],
            "area": 8100,
            "bbox": [10,10,90,90],
            "iscrowd": 0
        }
    ],
    "categories": [
        {
            "id": 1,
            "name": "example",
            "supercategory": "object"
        }
    ]
}
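
For the "just calculate the bounding box coordinates" route mentioned above, here is a minimal sketch that derives a COCO-style bbox ([x, y, width, height]) and the pixel area directly from a binary mask with NumPy. The function name `mask_to_bbox_and_area` is made up for illustration, and it assumes a single 2D mask.

```python
import numpy as np


def mask_to_bbox_and_area(mask):
    """Return ([x, y, width, height], area) for a 2D binary mask (hypothetical helper)."""
    mask = np.asarray(mask).astype(bool)
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        # Empty mask: no object pixels at all
        return [0, 0, 0, 0], 0
    x_min, x_max = int(xs.min()), int(xs.max())
    y_min, y_max = int(ys.min()), int(ys.max())
    # COCO boxes are top-left corner plus width and height, in pixels
    bbox = [x_min, y_min, x_max - x_min + 1, y_max - y_min + 1]
    area = int(mask.sum())
    return bbox, area
```

Note that this counts mask pixels for the area, which can differ slightly from cv2.contourArea on the contour.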

For YOLO format, you just modify the bbox coordinates and write a text file:

# Write bounding boxes to file in YOLO format
# (mask is the same 2D mask array used for findContours above)
img_h, img_w = mask.shape[:2]
with open('bounding_boxes.txt', 'w') as f:
    for contour in contours:
        # Get the bounding box coordinates of the contour
        x, y, w, h = cv2.boundingRect(contour)
        # Convert to normalized (x_center, y_center, width, height) and write to file
        f.write('0 {:.6f} {:.6f} {:.6f} {:.6f}\n'.format(
            (x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h))

YOLO format:

0 0.25 0.25 0.5 0.5

We added support in Roboflow for annotating using SAM. We convert the masks to polygons & you can export to YOLO format for training a model.

@Jordan-Pierce my mask is a numpy.ndarray (type(masks) -> numpy.ndarray). This is the output I get if I print it:

masks

array([[[False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False],
        ...,
        [False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False],
        [False, False, False, ..., False, False, False]]])

Passing this to the function gives me an error:

# Find contours in the mask image
# contours, _ = cv2.findContours(masks, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
_, contours, _ = cv2.findContours(masks, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

# Convert the contours to the format required for segmentation in COCO format
segmentation = []
for contour in contours:
    # Flatten the contour coordinates into a 1D array
    contour = contour.flatten().tolist()
    # Convert the 1D array to a list of (x, y) pairs
    contour_pairs = [(contour[i], contour[i+1]) for i in range(0, len(contour), 2)]
    # Convert the (x, y) pairs to a list of integers
    segmentation.append([int(coord) for pair in contour_pairs for coord in pair])

# Define annotation in COCO format
annotation = {
    'id': 1,
    'image_id': 1,
    'category_id': 1,
    'segmentation': segmentation,
    'area': int(cv2.contourArea(contours[0])),
    'bbox': [int(x) for x in cv2.boundingRect(contours[0])],
    'iscrowd': 0
}

ERROR:

      1 # Find contours in the mask image
      2 #contours, _ = cv2.findContours(masks, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
----> 3 _, contours, _ = cv2.findContours(masks, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
      4
      5

error: OpenCV(4.7.0) :-1: error: (-5:Bad argument) in function 'findContours'
> Overload resolution failed:
>  - image data type = 0 is not supported
>  - Expected Ptr<cv::UMat> for argument 'image'

@Jordan-Pierce here is my code. I'm getting the mask; now I want to convert that mask into an annotation format.

import cv2
import matplotlib.pyplot as plt
import numpy as np
from ultralytics import YOLO
from segment_anything import sam_model_registry, SamPredictor
from PIL import Image

def yolov8_detection(image):
    model = YOLO('/content/best.pt')
    results = model(image, stream=True)  # generator of Results objects
    for result in results:
        boxes = result.boxes  # Boxes object for bbox outputs
        bbox = boxes.xyxy.tolist()[0]
    return bbox

def show_mask(mask, ax, random_color=False):
    if random_color:
        color = np.concatenate([np.random.random(3), np.array([0.6])], axis=0)
    else:
        color = np.array([30/255, 144/255, 255/255, 0.6])
    h, w = mask.shape[-2:]
    mask_image = mask.reshape(h, w, 1) * color.reshape(1, 1, -1)
    ax.imshow(mask_image)

def show_points(coords, labels, ax, marker_size=375):
    pos_points = coords[labels==1]
    neg_points = coords[labels==0]
    ax.scatter(pos_points[:, 0], pos_points[:, 1], color='green', marker='*', s=marker_size, edgecolor='white', linewidth=1.25)
    ax.scatter(neg_points[:, 0], neg_points[:, 1], color='red', marker='*', s=marker_size, edgecolor='white', linewidth=1.25)

def show_box(box, ax):
    x0, y0 = box[0], box[1]
    w, h = box[2] - box[0], box[3] - box[1]
    ax.add_patch(plt.Rectangle((x0, y0), w, h, edgecolor='green', facecolor=(0,0,0,0), lw=2))

# Load image
# image_path = '/content/myimg.jpg'
# image = cv2.imread(image_path)
# image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Load image
image_path = 'Cigaretee_i_Weapon_Pragati137.jpg'
image = Image.open(image_path).convert('RGB')

# Detection with YOLOv8
yolov8_boxex = yolov8_detection(image)
input_box = np.array(yolov8_boxex)

# Segment with SAM
sam_checkpoint = "sam_vit_h_4b8939.pth"
model_type = "vit_h"
device = "cpu"
sam = sam_model_registry[model_type](checkpoint=sam_checkpoint)
sam.to(device=device)
predictor = SamPredictor(sam)
# SamPredictor.set_image expects a NumPy array (H, W, 3), not a PIL image
predictor.set_image(np.array(image))
masks, _, _ = predictor.predict(
    point_coords=None,
    point_labels=None,
    box=input_box[None, :],
    multimask_output=False,
)

@akashAD98 sorry, I should have said it was an untested response, but that approach should help you get started. Here's what I'm using, hope it helps.

import cv2
import numpy as np
import torch

# Wrapped in a function so the final return is valid; the function name is illustrative.
def get_bbox_and_segmentation(predictor, image_path, bbox):
    # Read the image
    image = cv2.imread(image_path)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # Convert the bounding box to a tensor
    input_box = torch.tensor(bbox, device=predictor.device)
    transformed_box = predictor.transform.apply_boxes_torch(input_box, image.shape[:2])

    # Set the image
    predictor.set_image(image)

    # Predict the mask
    mask, _, _ = predictor.predict_torch(
        point_coords=None,
        point_labels=None,
        boxes=transformed_box,
        multimask_output=False,
    )

    # Convert the mask to a binary image
    binary_mask = mask.cpu().numpy().squeeze().astype(np.uint8)

    # Find the contours of the mask
    contours, hierarchy = cv2.findContours(binary_mask,
                                           cv2.RETR_EXTERNAL,
                                           cv2.CHAIN_APPROX_SIMPLE)

    # Get the largest contour based on area
    largest_contour = max(contours, key=cv2.contourArea)

    # Get the new bounding box
    bbox = [int(x) for x in cv2.boundingRect(largest_contour)]

    # Get the segmentation polygon for the object as a flat [x1, y1, x2, y2, ...] list
    segmentation = largest_contour.flatten().tolist()

    return bbox, segmentation

@Jordan-Pierce thank you so much. Here is the implementation of YOLOv8 with SAM (https://github.com/akashAD98/YOLOV8_SAM) if someone wants to try it. Now I want to directly produce a format supported by Mask R-CNN and YOLO segmentation, so the annotations can be saved straight to .json. If you have any reference for this, please let me know. Thanks.

@Jordan-Pierce

I'm getting extra annotations. May I know what the problem is? I used the script below to convert the segmentation into a YOLO-supported format:

# define the segmentation mask
from PIL import Image
import numpy as np

mask = segmentation

# load the image
img = Image.open("/content/Cigaretee_i_Weapon_Pragati137.jpg")
width, height = img.size

# convert mask to numpy array of shape (N,2)
mask = np.array(mask).reshape(-1,2)

# normalize the pixel coordinates
mask_norm = mask / np.array([width, height])

# compute the bounding box
xmin, ymin = mask_norm.min(axis=0)
xmax, ymax = mask_norm.max(axis=0)
bbox_norm = np.array([xmin, ymin, xmax, ymax])

# concatenate bbox and mask to obtain YOLO format
yolo = np.concatenate([bbox_norm, mask_norm.reshape(-1)])

# write the yolo values to a text file
with open('yolo_cigarnew137newew.txt', 'w') as f:
    for val in yolo:
        f.write("{:.6f} ".format(val))

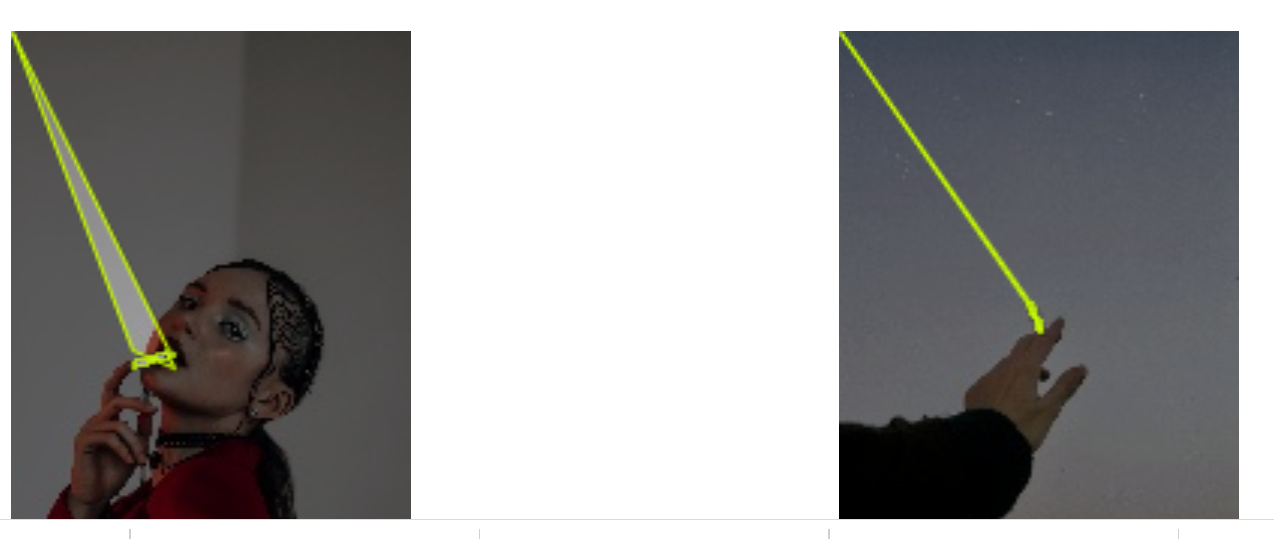

Hi, I'm opening my files in Roboflow and it's showing these extra lines. May I know what's wrong?

Looks like you’re not converting to polygons very well. You have points that lie outside of your core shape, your shape doesn’t start and end near the same spot, and you’re adding a polygon for every point along the perimeter of the mask. Looks like your coordinate space might be messed up too.

What happens when you try the built-in SAM integration?

@yeldarby thanks for the reply. I tried with SAM and it's not giving the annotations in YOLO/COCO format. Can you give some reference for converting segmentation values to YOLO format? I think my conversion is wrong.

I don't know how to do it in Python; as noted above, we built SAM into Roboflow & you can export your dataset in YOLO format.
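
For reference, a YOLO segmentation label line is a class index followed by the polygon's x y pairs, normalized by image width and height; it does not include a bounding box. The script posted earlier writes four bbox values first and no class index, which may be one source of the stray lines. A minimal sketch of the conversion in plain Python, with a made-up helper name `polygon_to_yolo_seg_line`, assuming a flat [x1, y1, x2, y2, ...] polygon in pixels:

```python
def polygon_to_yolo_seg_line(flat_xy, img_w, img_h, class_id=0):
    """Format one polygon as a YOLO segmentation label line (hypothetical helper)."""
    if len(flat_xy) % 2 != 0 or len(flat_xy) < 6:
        raise ValueError("need at least 3 (x, y) points as a flat list")
    parts = [str(class_id)]
    for x, y in zip(flat_xy[0::2], flat_xy[1::2]):
        # x is divided by the image width, y by the image height
        parts.append(f"{x / img_w:.6f}")
        parts.append(f"{y / img_h:.6f}")
    return " ".join(parts)
```

Each object goes on its own line of the image's .txt label file.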

How can I convert the masks file in .json format (COCO RLE) to a polygon format that can be read by an annotation software such as CVAT?

I'm done with the script. If anyone wants to try it, feel free to use it: https://github.com/akashAD98/YOLOV8_SAM

Is there any solution for converting SAM output to YOLO segmentation format?

Please check here: https://github.com/akashAD98/YOLOV8_SAM @SzaremehrjardiMT

I solved the problem of converting SAM output to YOLOv8 segmentation annotations.

First convert the bounding boxes from the YOLOv8 output format to the SAM input format (label, x_center, y_center, w, h -> label, x1, y1, x2, y2), then run:

import os

import cv2
import torch

img_path= <>
home = os.getcwd()
img_no = 0
for filename in os.listdir(img_path):
    # Read original images
    image = cv2.imread(os.path.join(img_path, filename))
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    # image.shape is (height, width, channels)
    height, width, _ = image.shape

    # Set the image on the SAM predictor
    predictor.set_image(image)
    in_tensor = []    # bounding boxes as input
    input_label = []  # labels as input
    for idx, bbs in enumerate(Prompts[img_no]):
        # converted_bb = yolobbox2bbox(bbs[0],bbs[1],bbs[2],bbs[3],bbs[4])
        # Prompts[img_no][idx] = converted_bb
        converted_bb_tensor = bbs[:]
        # converted_bb_tensor = torch.FloatTensor(converted_bb_tensor)
        in_tensor.append(converted_bb_tensor[1:])
        input_label.append(converted_bb_tensor[0])

    # Prepare the input prompts
    input_boxes = torch.tensor(in_tensor, device=predictor.device)
    input_label = torch.tensor(input_label)
    transformed_boxes = predictor.transform.apply_boxes_torch(input_boxes, image.shape[:2])
    masks, _, _ = predictor.predict_torch(
        point_coords=None,
        point_labels=input_label,
        boxes=transformed_boxes,
        multimask_output=False,
    )
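
The box conversion mentioned before the loop (and the commented-out yolobbox2bbox call) is not shown in the thread. A minimal sketch of what it could look like, assuming the YOLO values are normalized to [0, 1] and SAM should receive pixel corner coordinates; the function name `yolo_box_to_xyxy` is made up and is not the poster's helper:

```python
def yolo_box_to_xyxy(x_center, y_center, w, h, img_w, img_h):
    """Convert a normalized YOLO box to pixel (x1, y1, x2, y2) corners."""
    x1 = (x_center - w / 2) * img_w
    y1 = (y_center - h / 2) * img_h
    x2 = (x_center + w / 2) * img_w
    y2 = (y_center + h / 2) * img_h
    return x1, y1, x2, y2
```

With that helper, each prompt row would become [label, x1, y1, x2, y2] before being split into labels and boxes.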

After you get all of your masks for an image you can do this:

# (uses masks, input_label, width, height and filename from the current image)
yolo_masks = []
for idxm, mask in enumerate(masks):
    # Convert the mask to a binary image
    binary_mask = masks[idxm].squeeze().cpu().numpy().astype(np.uint8)

    # Find the contours of the mask
    contours, hierarchy = cv2.findContours(binary_mask,
                                           cv2.RETR_EXTERNAL,
                                           cv2.CHAIN_APPROX_SIMPLE)

    # Get the largest contour based on area
    largest_contour = max(contours, key=cv2.contourArea)

    # Get the segmentation polygon for the object as a flat list
    segmentation = largest_contour.flatten().tolist()

    # Convert the polygon to a numpy array of shape (N, 2)
    polygon = np.array(segmentation).reshape(-1, 2)

    # Normalize the pixel coordinates: x by width, y by height
    mask_norm = polygon / np.array([width, height])

    # Compute the bounding box (not written to the YOLO segmentation line)
    xmin, ymin = mask_norm.min(axis=0)
    xmax, ymax = mask_norm.max(axis=0)

    # Concatenate label and polygon to obtain YOLO seg format
    lbl = str(int(input_label[idxm].numpy())) + ' '
    m_norm = ' '.join(map(str, mask_norm.reshape(-1)))
    yolo_line = lbl + m_norm + '\n'
    yolo_masks.append(yolo_line)

# Write one label file per image into the Segmented_labels directory
label_path = os.path.join('Segmented_labels', os.path.splitext(filename)[0] + '.txt')
with open(label_path, 'a') as f:
    for val in yolo_masks:
        f.write(val)

To convert the mask image to polygons, you can use this script mask2polygons.py.

To convert the COCO RLE format to polygons, you can use this script rle2polygons.py.

RectLabel is an offline image annotation tool for object detection and segmentation. Although this is not an open source program, you can label polygons using Segment Anything models and read/write/export the YOLO segmentation format.

Improved "Create polygon using SAM" feature so that you can label pixels using the pixels option. You can label Segment Anything 1 Billion (SA-1B) like dataset by yourself.

The easiest way to automate the labeling is to follow this Colab notebook: colab

Using DINO, you will get the labels in XML format. Then convert the XML labels to YOLO format using any open-source framework. You can use makesense.ai to visualize the labels and convert them to other formats.