etcd

etcd v3.4.13 makes frequent, unexpected leader re-elections

What happened?

I am running etcd v3.4.13 as a cluster used by a Kubernetes v1.21.4 platform.

etcd performs a leader re-election roughly once per hour on average, which causes some Kubernetes calls to fail. Each etcd instance uses SSD storage.

I monitored the network of the VMs hosting the etcd cluster during those periods, but no packets were dropped.

What did you expect to happen?

etcd should stop making unexpected leader re-elections, so that requests no longer fail at the time of re-election.

How can we reproduce it (as minimally and precisely as possible)?

It happens unexpectedly; I do not have a minimal reproduction.

Anything else we need to know?

No response

Etcd version (please run commands below)

$ etcd --version

etcd Version: 3.4.13

Git SHA: ae9734ed2

Go Version: go1.12.17

Go OS/Arch: linux/amd64

$ etcdctl version

etcdctl version: 3.4.13

API version: 3.4

Etcd configuration (command line flags or environment variables)

[Unit]
Description=etcd key-value store
Documentation=https://github.com/coreos/etcd

[Service]
Type=notify
Environment=ETCD_DATA_DIR=/var/lib/etcd
ExecStart=/opt/kubernetes/bin/etcd -name fms-k8s-master-dev-1 \
  -client-cert-auth -trusted-ca-file=/opt/kubernetes/certs/ca.pem \
  -cert-file=/opt/kubernetes/certs/client.pem \
  -key-file=/opt/kubernetes/certs/client-key.pem \
  -peer-client-cert-auth -peer-trusted-ca-file=/opt/kubernetes/certs/ca.pem \
  -peer-cert-file=/opt/kubernetes/certs/client.pem \
  -peer-key-file=/opt/kubernetes/certs/client-key.pem \
  -advertise-client-urls=https://172.16.1.19:2379 \
  -listen-client-urls=https://0.0.0.0:2379 \
  -listen-peer-urls=https://0.0.0.0:2380 \
  -initial-advertise-peer-urls=https://172.16.1.19:2380 \
  -initial-cluster=fms-k8s-master-dev-1=https://172.16.1.19:2380,fms-k8s-master-dev-2=https://172.16.1.18:2380,fms-k8s-master-dev-3=https://172.16.1.17:2380 \
  -initial-cluster-state=new \
  -initial-cluster-token=***************** \
  -enable-v2=true
Restart=always
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
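The unit passes neither -heartbeat-interval nor -election-timeout, so etcd falls back to its defaults of 100 ms and 1000 ms. As a minimal sketch (the helpers parse_flags, effective_timing and self_in_initial_cluster are hypothetical, not part of etcd), the ExecStart line can be parsed to report those effective timings and to confirm that -initial-cluster maps this member's -name to its -initial-advertise-peer-urls. The parser only understands the three flag shapes used in this unit (-key=value, -key value, and a bare boolean -key) and assumes a single advertised peer URL.

```python
import shlex

# etcd v3.4 defaults (milliseconds) that apply when the flags are absent.
DEFAULT_TIMING_MS = {"heartbeat-interval": 100, "election-timeout": 1000}


def parse_flags(exec_start: str) -> dict:
    """Parse etcd flags written as -key=value, -key value, or a bare boolean -key."""
    tokens = shlex.split(exec_start)[1:]  # drop the binary path
    flags = {}
    i = 0
    while i < len(tokens):
        key, sep, value = tokens[i].lstrip("-").partition("=")
        if sep:
            flags[key] = value
        elif i + 1 < len(tokens) and not tokens[i + 1].startswith("-"):
            flags[key] = tokens[i + 1]  # space-separated value, e.g. -name NAME
            i += 1
        else:
            flags[key] = "true"  # boolean flag such as -client-cert-auth
        i += 1
    return flags


def effective_timing(flags: dict) -> dict:
    """Heartbeat and election timing in ms, falling back to the defaults."""
    return {k: int(flags.get(k, d)) for k, d in DEFAULT_TIMING_MS.items()}


def self_in_initial_cluster(flags: dict) -> bool:
    """True if -initial-cluster maps -name to -initial-advertise-peer-urls."""
    members = dict(e.split("=", 1) for e in flags["initial-cluster"].split(","))
    return members.get(flags["name"]) == flags["initial-advertise-peer-urls"]


# Usage with a subset of the ExecStart flags from the unit above.
unit_exec_start = (
    "/opt/kubernetes/bin/etcd -name fms-k8s-master-dev-1 -client-cert-auth "
    "-initial-advertise-peer-urls=https://172.16.1.19:2380 "
    "-initial-cluster=fms-k8s-master-dev-1=https://172.16.1.19:2380,"
    "fms-k8s-master-dev-2=https://172.16.1.18:2380,"
    "fms-k8s-master-dev-3=https://172.16.1.17:2380"
)
flags = parse_flags(unit_exec_start)
print(effective_timing(flags))         # {'heartbeat-interval': 100, 'election-timeout': 1000}
print(self_in_initial_cluster(flags))  # True
```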

Etcd debug information (please run the commands below, feel free to obfuscate the IP address or FQDN in the output)

$ etcdctl member list -w table

+------------------+---------+----------------------+--------------------------+--------------------------+------------+
|        ID        | STATUS  |         NAME         |        PEER ADDRS        |       CLIENT ADDRS       | IS LEARNER |
+------------------+---------+----------------------+--------------------------+--------------------------+------------+
| c53ac51d7b26029  | started | fms-k8s-master-dev-3 | https://172.16.1.17:2380 | https://172.16.1.17:2379 |      false |
| fe314885babde9f  | started | fms-k8s-master-dev-1 | https://172.16.1.19:2380 | https://172.16.1.19:2379 |      false |
| c7105e2f327de317 | started | fms-k8s-master-dev-2 | https://172.16.1.18:2380 | https://172.16.1.18:2379 |      false |
+------------------+---------+----------------------+--------------------------+--------------------------+------------+
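The raft lines in the log below identify members by the hexadecimal IDs in this table rather than by name. A minimal Python sketch (parse_member_table and name_ids are hypothetical helpers) that builds the ID-to-name map from the table text and rewrites log lines with it, so that, for example, c53ac51d7b26029 reads as fms-k8s-master-dev-3. It assumes the six-column layout shown above.

```python
def parse_member_table(text: str) -> dict:
    """Map member ID (hex, as printed by etcdctl) to member name."""
    members = {}
    for line in text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Data rows have six cells; borders, blank lines and the header are skipped.
        if len(cells) != 6 or cells[0] == "ID":
            continue
        member_id, _status, name, _peer_urls, _client_urls, _is_learner = cells
        members[member_id] = name
    return members


def name_ids(log_line: str, members: dict) -> str:
    """Replace hex member IDs in a log line with member names."""
    # Longest IDs first, so an ID that happens to be a substring of a longer one
    # cannot clobber it.
    for member_id in sorted(members, key=len, reverse=True):
        log_line = log_line.replace(member_id, members[member_id])
    return log_line


table = """\
+------------------+---------+----------------------+
|        ID        | STATUS  |         NAME         |        PEER ADDRS        |       CLIENT ADDRS       | IS LEARNER |
| c53ac51d7b26029  | started | fms-k8s-master-dev-3 | https://172.16.1.17:2380 | https://172.16.1.17:2379 |      false |
| fe314885babde9f  | started | fms-k8s-master-dev-1 | https://172.16.1.19:2380 | https://172.16.1.19:2379 |      false |
| c7105e2f327de317 | started | fms-k8s-master-dev-2 | https://172.16.1.18:2380 | https://172.16.1.18:2379 |      false |
"""
members = parse_member_table(table)
print(name_ids("c53ac51d7b26029 received MsgVoteResp rejection from c7105e2f327de317 at term 530483", members))
# fms-k8s-master-dev-3 received MsgVoteResp rejection from fms-k8s-master-dev-2 at term 530483
```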

$ etcdctl --endpoints=<member list> endpoint status -w table

+--------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
|         ENDPOINT         |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
+--------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
| https://172.16.1.19:2379 | fe314885babde9f  |  3.4.13 |  224 MB |     false |      false |    530484 | 1986034962 |         1986034962 |        |
| https://172.16.1.18:2379 | c7105e2f327de317 |  3.4.13 |  224 MB |      true |      false |    530484 | 1986034962 |         1986034962 |        |
| https://172.16.1.17:2379 | c53ac51d7b26029  |  3.4.13 |  224 MB |     false |      false |    530484 | 1986034962 |         1986034962 |        |
+--------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
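In this snapshot c7105e2f327de317 (172.16.1.18) is leader at raft term 530484, the same term at which the log below records it being elected, and all three members report the same raft and applied index. A minimal Python sketch (parse_status_table and check_status are hypothetical helpers) that reads the table text and checks those properties; it relies only on the column names printed above.

```python
def parse_status_table(text: str) -> list:
    """Turn `etcdctl endpoint status -w table` output into a list of row dicts."""
    header, rows = None, []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # border or blank line
        cells = [c.strip() for c in line.strip("|").split("|")]
        if header is None:
            header = cells  # the first "|" line is the column header
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def check_status(rows: list) -> dict:
    """Report the leader(s) and whether all members agree on term and indexes."""
    return {
        "leaders": [r["ENDPOINT"] for r in rows if r["IS LEADER"] == "true"],
        "same_term": len({r["RAFT TERM"] for r in rows}) == 1,
        "same_indexes": len({(r["RAFT INDEX"], r["RAFT APPLIED INDEX"]) for r in rows}) == 1,
    }


status = """\
|         ENDPOINT         |        ID        | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |
| https://172.16.1.19:2379 | fe314885babde9f  |  3.4.13 |  224 MB |     false |      false |    530484 | 1986034962 |         1986034962 |        |
| https://172.16.1.18:2379 | c7105e2f327de317 |  3.4.13 |  224 MB |      true |      false |    530484 | 1986034962 |         1986034962 |        |
| https://172.16.1.17:2379 | c53ac51d7b26029  |  3.4.13 |  224 MB |     false |      false |    530484 | 1986034962 |         1986034962 |        |
"""
print(check_status(parse_status_table(status)))
# {'leaders': ['https://172.16.1.18:2379'], 'same_term': True, 'same_indexes': True}
```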

Relevant log output

# Below are the logs of the member that was leader before the re-election (c53ac51d7b26029 on fms-k8s-master-dev-3)

Mar 13 20:18:34 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:34 WARN: c53ac51d7b26029 stepped down to follower since quorum is not active

Mar 13 20:18:34 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:34 INFO: c53ac51d7b26029 became follower at term 530478

Mar 13 20:18:34 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:34 INFO: raft.node: c53ac51d7b26029 lost leader c53ac51d7b26029 at term 530478

Mar 13 20:18:35 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:35 INFO: c53ac51d7b26029 is starting a new election at term 530478

Mar 13 20:18:35 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:35 INFO: c53ac51d7b26029 became candidate at term 530479

Mar 13 20:18:35 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:35 INFO: c53ac51d7b26029 received MsgVoteResp from c53ac51d7b26029 at term 530479

Mar 13 20:18:35 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:35 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to fe314885babde9f at term 530479

Mar 13 20:18:35 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:35 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to c7105e2f327de317 at term 530479

Mar 13 20:18:37 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:37 INFO: c53ac51d7b26029 is starting a new election at term 530479

Mar 13 20:18:37 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:37 INFO: c53ac51d7b26029 became candidate at term 530480

Mar 13 20:18:37 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:37 INFO: c53ac51d7b26029 received MsgVoteResp from c53ac51d7b26029 at term 530480

Mar 13 20:18:37 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:37 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to fe314885babde9f at term 530480

Mar 13 20:18:37 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:37 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to c7105e2f327de317 at term 530480

Mar 13 20:18:38 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:38 INFO: c53ac51d7b26029 is starting a new election at term 530480

Mar 13 20:18:38 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:38 INFO: c53ac51d7b26029 became candidate at term 530481

Mar 13 20:18:38 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:38 INFO: c53ac51d7b26029 received MsgVoteResp from c53ac51d7b26029 at term 530481

Mar 13 20:18:38 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:38 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to fe314885babde9f at term 530481

Mar 13 20:18:38 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:38 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to c7105e2f327de317 at term 530481

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: timed out waiting for read index response (local node might have slow network)

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-17\" " with result "error:etcdserver: request timed out" took too long (7.000281898s) to execute

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/leases/kube-system/kube-controller-manager\" " with result "error:context canceled" took too long (4.996323322s) to execute

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: WARNING: 2022/03/13 20:18:40 grpc: Server.processUnaryRPC failed to write status: connection error: desc = "transport is closing"

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:40 INFO: c53ac51d7b26029 is starting a new election at term 530481

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:40 INFO: c53ac51d7b26029 became candidate at term 530482

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:40 INFO: c53ac51d7b26029 received MsgVoteResp from c53ac51d7b26029 at term 530482

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:40 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to fe314885babde9f at term 530482

Mar 13 20:18:40 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:40 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to c7105e2f327de317 at term 530482

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgAppResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgAppResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from fe314885babde9f [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgAppResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgAppResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:41 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgHeartbeatResp message with lower term from c7105e2f327de317 [term: 530478]

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: failed to revoke 63177f63798e0578 ("etcdserver: request timed out")

Mar 13 20:18:41 fms-k8s-master-dev-3 etcd[14783]: failed to revoke 60297f637a741457 ("etcdserver: request timed out")

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 [term: 530482] ignored a MsgVote message with lower term from c7105e2f327de317 [term: 530479]

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/leases/kube-system/kube-scheduler\" " with result "error:context deadline exceeded" took too long (4.992771216s) to execute

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 is starting a new election at term 530482

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 became candidate at term 530483

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 received MsgVoteResp from c53ac51d7b26029 at term 530483

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to fe314885babde9f at term 530483

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322] sent MsgVote request to c7105e2f327de317 at term 530483

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 received MsgVoteResp rejection from c7105e2f327de317 at term 530483

Mar 13 20:18:42 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:42 INFO: c53ac51d7b26029 has received 1 MsgVoteResp votes and 1 vote rejections

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:43 INFO: c53ac51d7b26029 [term: 530483] ignored a MsgVote message with lower term from fe314885babde9f [term: 530480]

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/configmaps/default/fms-dev-flink-reports-engine-0a586b0-restserver-leader\" " with result "error:context canceled" took too long (9.999325023s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: WARNING: 2022/03/13 20:18:43 grpc: Server.processUnaryRPC failed to write status: connection error: desc = "transport is closing"

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:43 INFO: c53ac51d7b26029 [term: 530483] received a MsgVote message with higher term from c7105e2f327de317 [term: 530484]

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:43 INFO: c53ac51d7b26029 became follower at term 530484

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:43 INFO: c53ac51d7b26029 [logterm: 530478, index: 1986017322, vote: 0] cast MsgVote for c7105e2f327de317 [logterm: 530479, index: 1986017324] at term 530484

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: raft2022/03/13 20:18:43 INFO: raft.node: c53ac51d7b26029 elected leader c7105e2f327de317 at term 530484

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/fms-dev/fms-dev-redis-hajj-report\" " with result "error:etcdserver: leader changed" took too long (6.881998243s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-3\" " with result "error:etcdserver: leader changed" took too long (3.561952138s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-18\" " with result "error:etcdserver: leader changed" took too long (8.033357387s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-7\" " with result "error:etcdserver: leader changed" took too long (3.824914237s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-5\" " with result "error:etcdserver: leader changed" took too long (9.029458476s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/fms-dev/fms-dev-postgres-chat\" " with result "error:etcdserver: leader changed" took too long (8.361246563s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-2\" " with result "error:etcdserver: leader changed" took too long (7.187156008s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/configmaps/default/fms-dev-flink-reports-engine-0a586b0-resourcemanager-leader\" " with result "error:etcdserver: leader changed" took too long (8.533308871s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/fms-dev/fms-dev-redis-seat-belt-engine\" " with result "error:etcdserver: leader changed" took too long (9.087712167s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/monitoring.coreos.com/prometheusrules/\" range_end:\"/registry/monitoring.coreos.com/prometheusrules0\" count_only:true " with result "error:etcdserver: leader changed" took too long (3.926413915s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/leases/\" range_end:\"/registry/leases0\" count_only:true " with result "error:etcdserver: leader changed" took too long (7.475427498s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-18\" " with result "error:etcdserver: leader changed" took too long (5.419474847s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-worker-dev-1\" " with result "error:etcdserver: leader changed" took too long (7.035695275s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-20\" " with result "error:etcdserver: leader changed" took too long (4.859589593s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-16\" " with result "error:etcdserver: leader changed" took too long (5.446529053s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-7\" " with result "error:etcdserver: leader changed" took too long (6.455564863s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-8\" " with result "error:etcdserver: leader changed" took too long (6.038533088s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/ingress/\" range_end:\"/registry/ingress0\" count_only:true " with result "error:etcdserver: leader changed" took too long (6.074183404s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-5\" " with result "error:etcdserver: leader changed" took too long (6.086652965s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-12\" " with result "error:etcdserver: leader changed" took too long (9.43482722s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/namespaces/default\" " with result "error:etcdserver: leader changed" took too long (6.434897686s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/apiregistration.k8s.io/apiservices/\" range_end:\"/registry/apiregistration.k8s.io/apiservices0\" count_only:true " with result "error:etcdserver: leader changed" took too long (7.810551584s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-6\" " with result "error:etcdserver: leader changed" took too long (8.927403076s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-2\" " with result "error:etcdserver: leader changed" took too long (5.013019661s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/keycloak.org/keycloakrealms/\" range_end:\"/registry/keycloak.org/keycloakrealms0\" count_only:true " with result "error:etcdserver: leader changed" took too long (3.965050665s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/csistoragecapacities/\" range_end:\"/registry/csistoragecapacities0\" count_only:true " with result "error:etcdserver: leader changed" took too long (8.701122716s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-master-dev-3\" " with result "error:etcdserver: leader changed" took too long (3.734929332s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-13\" " with result "error:etcdserver: leader changed" took too long (7.877374591s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-9\" " with result "error:etcdserver: leader changed" took too long (7.918831884s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/snapshot.storage.k8s.io/volumesnapshotcontents/\" range_end:\"/registry/snapshot.storage.k8s.io/volumesnapshotcontents0\" count_only:true " with result "error:etcdserver: leader changed" took too long (9.484779286s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-6\" " with result "error:etcdserver: leader changed" took too long (7.202628212s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-11\" " with result "error:etcdserver: leader changed" took too long (8.693041644s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-master-dev-2\" " with result "error:etcdserver: leader changed" took too long (7.667840536s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/namespaces/default\" " with result "error:etcdserver: leader changed" took too long (4.638812888s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/ingress/\" range_end:\"/registry/ingress0\" count_only:true " with result "error:etcdserver: leader changed" took too long (5.154395086s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-2\" " with result "error:etcdserver: leader changed" took too long (4.748083806s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-5\" " with result "error:etcdserver: leader changed" took too long (5.196715699s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/rolebindings/\" range_end:\"/registry/rolebindings0\" count_only:true " with result "error:etcdserver: leader changed" took too long (8.09328229s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/fms-qa/fms-qa-redis-playback\" " with result "range_response_count:1 size:1323" took too long (1.410915382s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/services/endpoints/sop-dev/ignite\" " with result "range_response_count:1 size:967" took too long (153.890373ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/pods/kube-system/custom-fluentd-fluentd-elasticsearch-d58j6\" " with result "range_response_count:1 size:7943" took too long (157.492878ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-7\" " with result "range_response_count:1 size:11806" took too long (1.237742767s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/runtimeclasses/\" range_end:\"/registry/runtimeclasses0\" count_only:true " with result "range_response_count:0 size:8" took too long (372.169732ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-19\" " with result "range_response_count:1 size:12755" took too long (1.584596794s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-14\" " with result "range_response_count:1 size:9906" took too long (458.930231ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/sop-dev/sop-dev-postgres-shift-roaster\" " with result "range_response_count:1 size:1391" took too long (284.559018ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/serviceaccounts/\" range_end:\"/registry/serviceaccounts0\" count_only:true " with result "range_response_count:0 size:10" took too long (877.962587ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-17\" " with result "range_response_count:1 size:10097" took too long (327.824423ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-10\" " with result "range_response_count:1 size:9527" took too long (2.734478938s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-master-dev-1\" " with result "range_response_count:1 size:3148" took too long (3.106657618s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/services/endpoints/fms-dev/cassandra\" " with result "range_response_count:1 size:1463" took too long (1.646994311s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/clusterrolebindings/\" range_end:\"/registry/clusterrolebindings0\" count_only:true " with result "range_response_count:0 size:10" took too long (3.240382686s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/deployments/\" range_end:\"/registry/deployments0\" count_only:true " with result "range_response_count:0 size:11" took too long (2.048708884s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/leases/kube-system/kube-controller-manager\" " with result "range_response_count:1 size:322" took too long (355.311571ms) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-4\" " with result "range_response_count:1 size:11873" took too long (2.623035552s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/fms-dev/fms-dev-postgres-connector\" " with result "range_response_count:1 size:2146" took too long (2.643336246s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-master-dev-3\" " with result "range_response_count:1 size:3442" took too long (2.494846358s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/cronjobs/\" range_end:\"/registry/cronjobs0\" count_only:true " with result "range_response_count:0 size:10" took too long (2.462090493s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/rolebindings/\" range_end:\"/registry/rolebindings0\" count_only:true " with result "range_response_count:0 size:10" took too long (2.103543966s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/services/endpoints/sop-qa/sms-gateway\" " with result "range_response_count:1 size:897" took too long (2.963699369s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/persistentvolumeclaims/fms-qa/fms-qa-postgres-driver\" " with result "range_response_count:1 size:1327" took too long (1.3046304s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-4\" " with result "range_response_count:1 size:11873" took too long (1.395729819s) to execute

Mar 13 20:18:43 fms-k8s-master-dev-3 etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-master-dev-1\" " with result "range_response_count:1 size:3148" took too long (816.274271ms) to execute
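All of the warnings above follow the same `took too long (<duration>) to execute` pattern, with durations given in either `ms` or `s`. To see at a glance how bad a burst like this is, a small helper can normalize the durations to milliseconds and report the slowest key. This is a minimal sketch, assuming the journal lines are formatted exactly as pasted here; `parse_slow_request` and `summarize` are hypothetical helper names, not part of etcd or etcdctl.

```python
import re
from typing import List, Optional, Tuple

# Duration inside etcd's slow-request warning, e.g. "(458.930231ms)" or "(2.734478938s)".
DURATION_RE = re.compile(r"took too long \((\d+(?:\.\d+)?)(ms|s)\) to execute")
# The escaped key of a range request as it appears in the journal,
# e.g. key:\"/registry/minions/fms-k8s-node-dev-14\"
KEY_RE = re.compile(r'key:\\"([^\\]*)\\"')


def parse_slow_request(line: str) -> Optional[Tuple[str, float]]:
    """Return (key, duration in ms) for a 'took too long' line, else None."""
    m = DURATION_RE.search(line)
    if m is None:
        return None
    value, unit = float(m.group(1)), m.group(2)
    duration_ms = value * 1000.0 if unit == "s" else value
    key = KEY_RE.search(line)
    return (key.group(1) if key else "?", duration_ms)


def summarize(lines: List[str]) -> Tuple[int, float, str]:
    """Return (number of slow requests, worst duration in ms, slowest key)."""
    parsed = [p for p in map(parse_slow_request, lines) if p is not None]
    if not parsed:
        return (0, 0.0, "")
    slowest_key, worst_ms = max(parsed, key=lambda p: p[1])
    return (len(parsed), worst_ms, slowest_key)


if __name__ == "__main__":
    # Two lines copied (prefix trimmed) from the excerpt above.
    sample = [
        r'etcd[14783]: read-only range request "key:\"/registry/minions/fms-k8s-node-dev-14\" " '
        r'with result "range_response_count:1 size:9906" took too long (458.930231ms) to execute',
        r'etcd[14783]: read-only range request "key:\"/registry/clusterrolebindings/\" '
        r'range_end:\"/registry/clusterrolebindings0\" count_only:true " '
        r'with result "range_response_count:0 size:10" took too long (3.240382686s) to execute',
    ]
    count, worst_ms, key = summarize(sample)
    print(f"{count} slow requests; slowest {worst_ms:.1f} ms on {key}")
```

Applied to the full excerpt, the slowest request is the `clusterrolebindings` count at about 3.24 s, with several others in the 2 to 3 s range, all logged within the same second.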

@tangcong

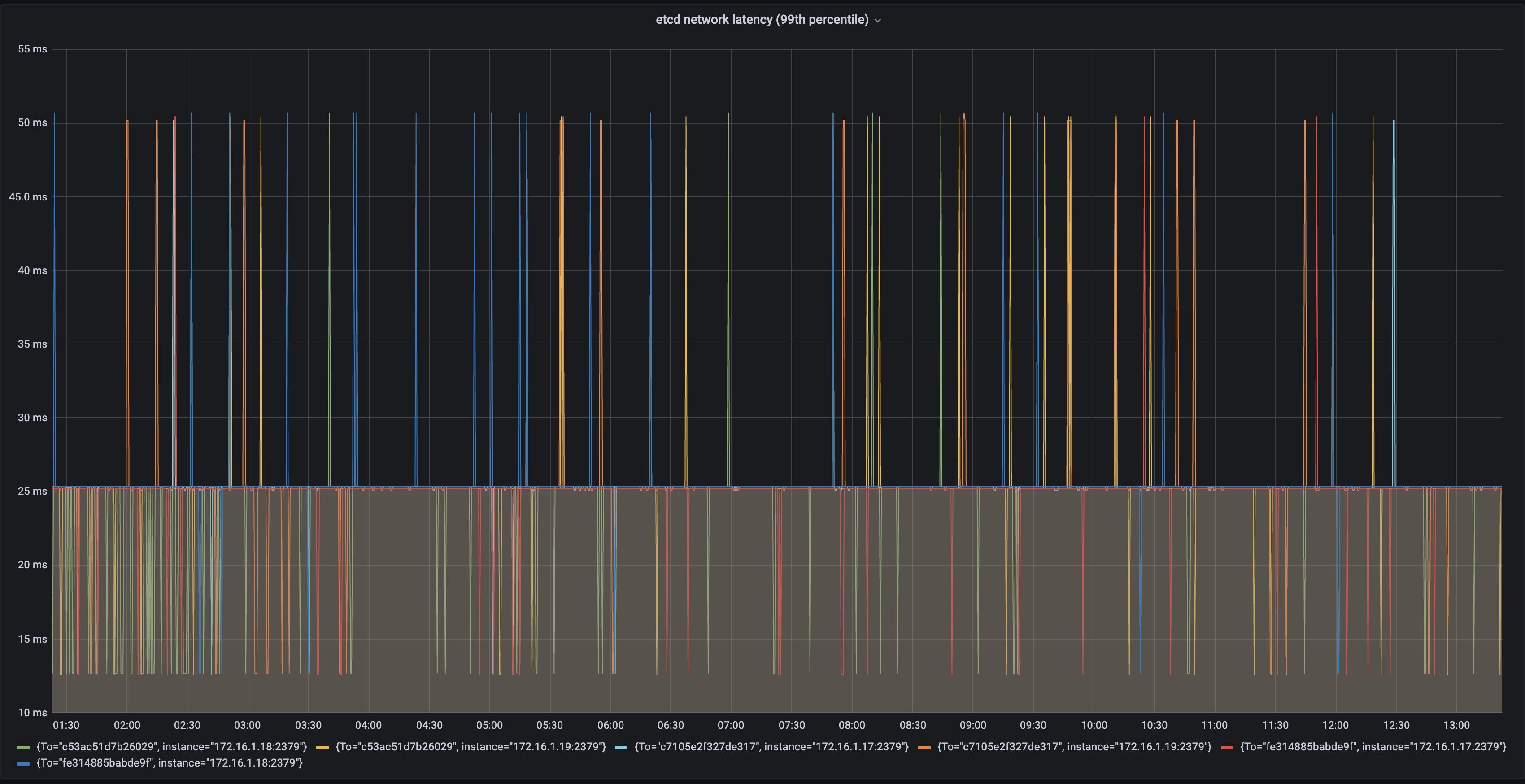

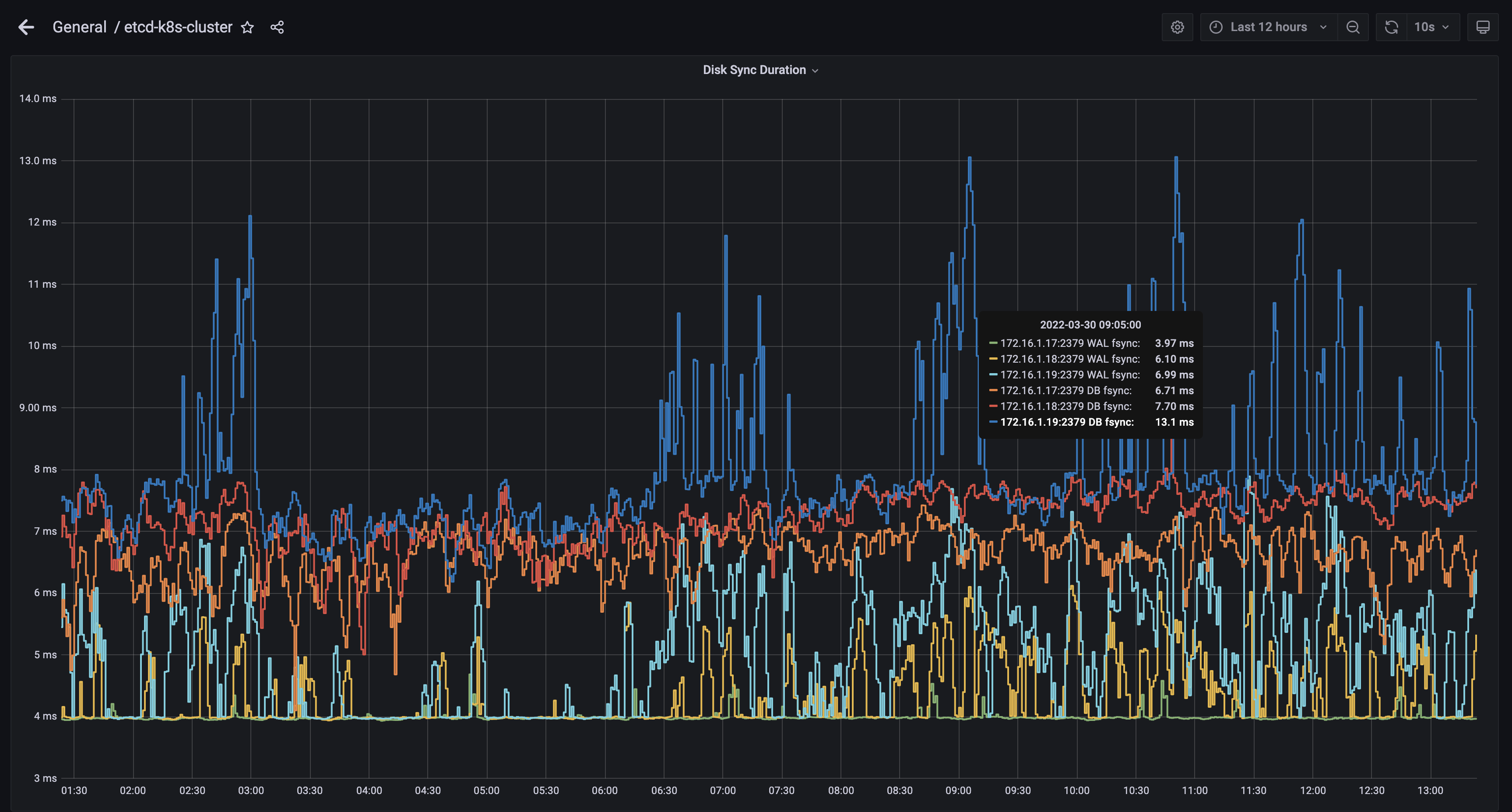

I have been monitoring my etcd cluster for a couple of days. The screenshots show the RTT dashboard as well as the disk I/O latency for WAL commits and backend commits.

Hi, this may not solve your issue, but it could help alleviate the problem.

Have you tried enabling the --pre-vote flag? The issue may be related to it. Thanks.

Thanks for your response, @vivekpatani, but could you explain in more detail what this option does?

@mootezbessifi I don't know whether that is the issue you're facing; it's a guess.

Problem:

PreVote addresses the following situation: one or more members get partitioned from the rest of the cluster by some kind of connectivity issue. Because a member in the minority partition no longer hears from a leader, it repeatedly times out and starts an election, incrementing its term each time, even though it can never win without a majority. When it rejoins the cluster, its higher term forces the leader in the majority partition to step down, which triggers a new election. This causes frequent, unwarranted leader elections, which may be the leader churn you're seeing. The PreVote flag is meant to address this.

Solution:

The Pre-Vote algorithm solves the issue of a partitioned server disrupting the cluster when it rejoins. While a server is partitioned, it won’t be able to increment its term, since it can’t receive permission from a majority of the cluster. Then, when it rejoins the cluster, it still won’t be able to increment its term, since the other servers will have been receiving regular heartbeats from the leader. Once the server receives a heartbeat from the leader itself, it will return to the follower state (in the same term).

Source: https://web.stanford.edu/~ouster/cgi-bin/papers/OngaroPhD.pdf (Section 9.6). Hope this helps.
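To make the difference concrete, here is a toy Python model of the scenario described above. It is a sketch only, not etcd's raft implementation: `Node`, `run_election_round`, and `simulate` are hypothetical names, the cluster is assumed to have 3 members, and the leader is assumed to follow the plain Raft rule of stepping down whenever it sees a higher term. It tracks how far an isolated member's term drifts over several election timeouts, with and without pre-vote, and whether the leader would step down when that member rejoins.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Node:
    """Toy Raft member: only the fields needed for this illustration."""
    name: str
    term: int
    pre_vote: bool


def run_election_round(node: Node, granted_by_peers: int, cluster_size: int) -> bool:
    """One election timeout on `node`; returns True if it would become leader.

    granted_by_peers: how many other members answer "yes" (0 when isolated).
    """
    quorum = cluster_size // 2 + 1
    votes = 1 + granted_by_peers  # a member always votes for itself
    if node.pre_vote and votes < quorum:
        # Pre-vote phase: the member asks "would you vote for me at term+1?"
        # without touching its own term. No quorum -> give up, term unchanged.
        return False
    # Real election: the member becomes a candidate and increments its term
    # *before* knowing the outcome, so a failed election still bumps the term.
    node.term += 1
    return votes >= quorum


def simulate(pre_vote: bool, leader_term: int = 5, timeouts: int = 3,
             cluster_size: int = 3) -> Tuple[int, bool]:
    """Isolate one follower for `timeouts` election timeouts, then rejoin it.

    Returns (follower term after the partition, whether the leader steps down).
    """
    follower = Node("member-3", term=leader_term, pre_vote=pre_vote)
    for _ in range(timeouts):
        # While partitioned, no peer can reply, so no votes are granted.
        run_election_round(follower, granted_by_peers=0, cluster_size=cluster_size)
    # Plain Raft rule: a leader that sees a higher term in any message steps down.
    leader_steps_down = follower.term > leader_term
    return follower.term, leader_steps_down


if __name__ == "__main__":
    print("without pre-vote:", simulate(pre_vote=False))
    print("with pre-vote:   ", simulate(pre_vote=True))
```

Running it prints `(8, True)` without pre-vote and `(5, False)` with it: the isolated member's term only moves once a quorum has indicated it could win. Note that in etcd v3.4 `--pre-vote` defaults to false, so it has to be set explicitly on every member.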

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 21 days if no further activity occurs. Thank you for your contributions.