text-detection-ctpn

text-detection-ctpn copied to clipboard

text-detection-ctpn copied to clipboard

Questions about training data

Thanks for your code and dataset!

I have two questions about training data:

- Some images' short side length is not equal to 600(e.g. img_1651), but the label's width is

16, so when get_minibatch , each box's width ingt_boxeswill not equal to 16. Will this behavior affect the text detect performance? - Should we remove labels like following (red part) to get better performance?

img_1652.jpg

img_1676.jpg

There are also some images have wrong labels, like img_3591.jpg

img_5737.jpg and img_5741.jpg

update: These images are wrong labeled in original MLT17 training dataset

I am trying to clean the data and recreate the anchor labels from MLT17 according to the minAreaRect of a text line. Not sure whether the training result will be better or not, but I think it worth a try. I will release the cleaned data at tf_ctpn once finished.

Split text line by bounding box:

Split text line by minAreaRect:

After recreate the ground truth labels and make several changes (see https://github.com/Sanster/tf_ctpn/commit/dc533e030e5431212c1d4dbca0bcd7e594a8a368 and https://github.com/Sanster/tf_ctpn/commit/7ae3d50d72bbdccb16f00987a5edb97659d6fbf2), I got better result on ICDAR13:

| Net | Dataset | Recall | Precision | Hmean |

|---|---|---|---|---|

| Origin CTPN | ICDAR13 + ? | 73.72% | 92.77% | 82.15% |

| vgg16 without commit https://github.com/Sanster/tf_ctpn/commit/dc533e030e5431212c1d4dbca0bcd7e594a8a368 and https://github.com/Sanster/tf_ctpn/commit/7ae3d50d72bbdccb16f00987a5edb97659d6fbf2 | data provided by @eragonruan | 63.69% | 71.46 % | 67.35% |

| vgg16 with commit https://github.com/Sanster/tf_ctpn/commit/dc533e030e5431212c1d4dbca0bcd7e594a8a368 without commit https://github.com/Sanster/tf_ctpn/commit/7ae3d50d72bbdccb16f00987a5edb97659d6fbf2 | data provided by @eragonruan | 69.70% | 70.10% | 69.90% |

| vgg16 | MLT17 latin/chn new ground truth + icdar13 training data | 74.26% | 82.46% | 78.15% |

@Sanster good job!

@Sanster why the score of last line (VGG 16) is worse than the first line (Origin CTPN), i.e.

74.26% | 82.46% | 78.15% v.s. 73.72% | 92.77% | 82.15% (recall, precision, Hmean) ?

@interxuxing Maybe

- No side-refinement part

- Different way from Conv5 to BLSTM see https://github.com/eragonruan/text-detection-ctpn/issues/193

- The training data is different

- Use

adam. Origin CTPN use SGD - ...

@Sanster Thank you for your prompt reply. I figured out that there are several differences between this implementation and the original paper.

As you have explored, the MTL datasets is not very clean/accurate for training. Do you think using the synthesized data such as SynthText is useful for better performance, though the text are synthesized and embedded in some template image.

@interxuxing I think it worth a try

- Cleaned data MLT17(latin+chinese) + ICDAR13: google drive

- Code for split text line by

minAreaRect: mlt17_to_voc.py

"origin CTPN" means the model release by paper, or caffe repo

@saicoco The result of "origin CTPN" is from ICDAR13 result page

ok. get it

@Sanster I have a problem for it, if you remove labels like following (red part) , In the process of PRN, Those small text may be selected as Negative samples, so that is there a problem for training CTPN?

@Wangweilai1 I think vertical words(not suitable for CTPN), very small words(can't recognize by human) should be negative examples, or we can create a ignore mask like in EAST. Not sure which way is better.

Yea,may be create a ignore mask is a good idea, In order to avoid text be selected as negative. Thanks for you answer!

Hi @Sanster @eragonruan

Could you please share how you guys draw the ground truth boxes on training image? I am analyzing the difference between this model and CTPN original model. Zhi Tian(CTPN author) suggested me to check your dataset, maybe the ground truth is too wider than text content. Thanks.

@Sanster can you tell me how to calculate the precision and recall? thanks !

@Sanster @eragonruan 你好,我使用的自己的数据集,手写体,且文字方向不固定,检测结果效果不好,特别是竖直文字,想问一下,我如何修改算法能够适合竖直文字的检测?或者有没有更好的算法,多谢

这个怎么改?好奇怪

这个怎么改?好奇怪

@Sanster @eragonruan 你好,我使用的自己的数据集,手写体,且文字方向不固定,检测结果效果不好,特别是竖直文字,想问一下,我如何修改算法能够适合竖直文字的检测?或者有没有更好的算法,多谢

ctpn只是用来检测水平文字的

这个怎么改?好奇怪

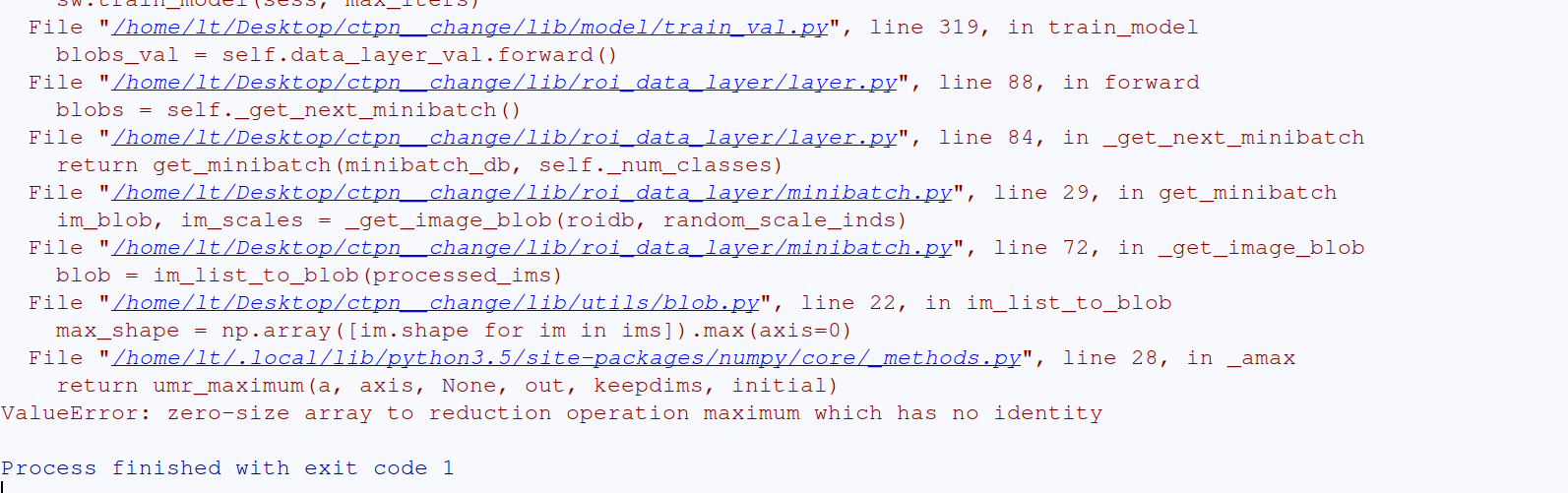

数据集有问题,把对应错误的变量打印出来,检查一下

Hi , How did you annotate your own data while preparing the training dataset ?

I am trying to clean the data and recreate the anchor labels from MLT17 according to the

minAreaRectof a text line. Not sure whether the training result will be better or not, but I think it worth a try. I will release the cleaned data at tf_ctpn once finished.Split text line by bounding box:

Split text line by minAreaRect:

下载不了谷歌网盘的文件,所以找不到清理之后的数据,请问,可以提供吗?

@Sanster can you tell me how to calculate the precision and recall? thanks !

Have you solve the problem? I have the same questions, could you share the methods?