kubernetes-reflector

[BUG] reflector does not update secrets anymore after some time - restart fixes the problem

Hi guys,

we are happily using reflector in our clusters.

After some time, however, the pod is still running but secrets are no longer created or updated.

When I restart the deployment, the new pod instantly creates or updates the target secrets.

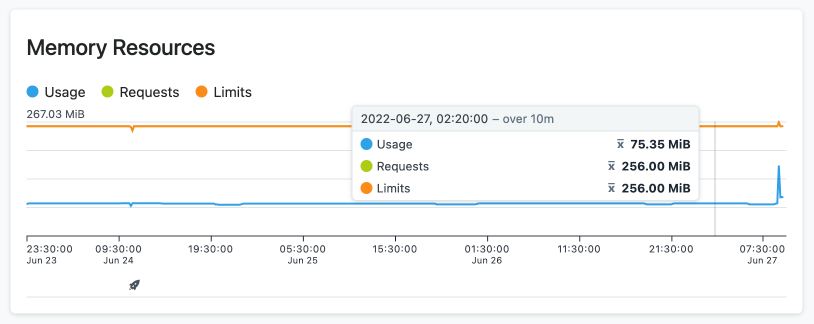

So it does not seem to be a memory leak, although there is some kind of pattern in the memory stats.

I can't check the logs too far in the past, but there were no logs before the restart for at least 30 days.

We have encountered this problem several times on multiple clusters. Is there any way we can debug this issue further?

Best regards Alex

Hi,

I am encountering the same issue as @aeimer on my cluster which is running v6.1.23.

When the issue occurs, all logging stops and the process appears to do nothing anymore. Restarting the deployment or deleting the "stuck pod" fixes the issue once the reflector service restarts. For me, on the last freeze, the final lines of the log looked like this:

2022-07-02 06:41:09.338 +00:00 [INF] () Starting host

2022-07-02 06:41:11.618 +00:00 [INF] (ES.Kubernetes.Reflector.Core.NamespaceWatcher) Requesting V1Namespace resources

2022-07-02 06:41:11.764 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretWatcher) Requesting V1Secret resources

2022-07-02 06:41:11.830 +00:00 [INF] (ES.Kubernetes.Reflector.Core.ConfigMapWatcher) Requesting V1ConfigMap resources

2022-07-02 06:41:13.622 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Auto-reflected cert-manager/xx-xxxx-net-lego-cert where permitted. Created 0 - Updated 5 - Deleted 0 - Validated 5.

And then nothing else in the logs... just stuck there.

After I restarted the deployment, the log output was exactly the same but with no validation done: "Created 0 - Updated 5 - Deleted 0 - Validated 0."

Here are logs after a restart and a successful patch of the auto-reflected resources:

2022-07-21 06:41:09.338 +00:00 [INF] () Starting host

2022-07-21 06:41:11.618 +00:00 [INF] (ES.Kubernetes.Reflector.Core.NamespaceWatcher) Requesting V1Namespace resources

2022-07-21 06:41:11.764 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretWatcher) Requesting V1Secret resources

2022-07-21 06:41:11.830 +00:00 [INF] (ES.Kubernetes.Reflector.Core.ConfigMapWatcher) Requesting V1ConfigMap resources

2022-07-21 06:41:13.622 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Auto-reflected cert-manager/hs-x-net-lego-cert where permitted. Created 0 - Updated 5 - Deleted 0 - Validated 0.

2022-07-21 06:41:14.514 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Patched default/hs-x-net-lego-cert as a reflection of cert-manager/hs-x-net-lego-cert

2022-07-21 06:41:14.575 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Patched cluster-service/hs-x-net-lego-cert as a reflection of cert-manager/hs-x-net-lego-cert

2022-07-21 06:41:14.624 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Patched home-automation/hs-x-net-lego-cert as a reflection of cert-manager/hs-x-net-lego-cert

2022-07-21 06:41:14.667 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Patched home-media/hs-x-net-lego-cert as a reflection of cert-manager/hs-x-net-lego-cert

2022-07-21 06:41:14.738 +00:00 [INF] (ES.Kubernetes.Reflector.Core.SecretMirror) Patched ingress/hs-x-net-lego-cert as a reflection of cert-manager/hs-x-net-lego-cert

So something in Core.SecretMirror() seems to be blocking perhaps?

Facing the same issue.

I believe it is somehow due to broken watchers (maybe due to an idle connection being dropped by a firewall, for example?).

For me, the secrets got synced again after the watcher session had been internally closed and restarted.

So I believe setting configuration.watcher.timeout to a low value like 60 or 120 is a workaround for this issue.

The issue is indeed the watchers. I'm working on a new solution to monitor the watchers and restart them if they're dead. Fallback would be a timeout, but the problem with watcher timeout is that some clusters have thousands of secrets reflected and the watchers may timeout before everything is processed resulting in a performance degradation.
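To make the "monitor the watchers and restart them if they're dead" idea concrete, here is a minimal Python sketch of a watchdog. It is not reflector's actual implementation (reflector is written in C#); the class name `WatcherWatchdog`, its methods, and the 30-second threshold in the usage example are all hypothetical. It assumes each watcher reports a heartbeat whenever it shows any sign of life. On a quiet cluster that signal would have to come from something other than resource events (for example a periodic bookmark or a session restart), otherwise healthy but idle watchers would be flagged as dead.

```python
import time
from typing import Callable, Dict, List


class WatcherWatchdog:
    """Track when each watcher last showed activity and flag stale ones.

    Hypothetical helper for illustration only.
    """

    def __init__(self, max_silence_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_silence = max_silence_seconds
        self._clock = clock  # injectable so the logic can be tested
        self._last_seen: Dict[str, float] = {}

    def heartbeat(self, watcher_name: str) -> None:
        """Record that the named watcher is alive right now."""
        self._last_seen[watcher_name] = self._clock()

    def stale_watchers(self) -> List[str]:
        """Return watchers that have been silent longer than allowed."""
        now = self._clock()
        return sorted(name for name, seen in self._last_seen.items()
                      if now - seen > self._max_silence)

    def restart_stale(self, restart: Callable[[str], None]) -> List[str]:
        """Call restart(name) for every stale watcher and reset its timer."""
        stale = self.stale_watchers()
        for name in stale:
            restart(name)
            self.heartbeat(name)
        return stale
```

A supervising loop could call `restart_stale(...)` on a timer. Unlike a fixed watcher timeout, this only tears down sessions that look dead, which is the trade-off described above for clusters with thousands of reflected secrets.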

Let me know if you need help testing a beta version. I don't have thousands of secrets on my cluster though, just a handful that get reflected to 3 other namespaces.

Automatically marked as stale due to no recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

Calling attention to this bug again. Please do not close it. I am happy to help if needed.

Removed stale label.

Just following up as this problem is causing us quite the headache as well. We're running into the issue on every cluster we're using Reflector on, regardless of watcher timeout length (have tried 120, 60, and 30). We're copying less than 100 secrets per cluster.

Any updates on progress or expected timeline?

Sorry to be annoying - thx for the good work!

Same issue here, sadly. We are reflecting literally 1 secret across 7 namespaces, so at least we know it is not load dependent.

Can we add something like a liveness probe that automatically restarts the reflector pod if it gets stuck?

That would probably be a good quick fix if the problem can't be fixed soon.

Hi.

It would be nice if we could override the livenessProbe through the Helm chart.

So I submitted a PR for this purpose: https://github.com/emberstack/kubernetes-reflector/pull/310

A bit more information not found in previous messages:

- happens on 6.1.47 (very last release at time of writing)

- happened last time when I updated master nodes (so when API server got restarted, likely breaking the watcher).

From experience, in golang services, there is a RetryWatcher. Is there something similar in the C# SDK?
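For readers unfamiliar with the pattern being asked about: a retrying watcher wraps a plain watch and, whenever the session ends or the connection breaks, opens a new one from the last resource version it saw. The Python sketch below only illustrates that idea. It is not the Go RetryWatcher, not the C# SDK, and not reflector's code; `retrying_watch`, `open_watch`, and the `(resource_version, payload)` event shape are hypothetical names chosen for the example.

```python
import time
from typing import Callable, Iterable, Iterator, Optional, Tuple

# An event is (resource_version, payload). This shape is an assumption
# made for the sketch, not a real client API.
Event = Tuple[str, str]


def retrying_watch(
    open_watch: Callable[[Optional[str]], Iterable[Event]],
    max_sessions: int,
    backoff_seconds: float = 0.0,
) -> Iterator[Event]:
    """Yield events across several watch sessions.

    Each time a session ends (timeout, dropped connection), a new one is
    opened from the last resource version that was seen, so the stream
    resumes where it stopped instead of silently going quiet.
    """
    last_version: Optional[str] = None
    for _ in range(max_sessions):
        try:
            for version, payload in open_watch(last_version):
                last_version = version
                yield version, payload
        except ConnectionError:
            # Treat a broken connection like a normal end of session.
            pass
        time.sleep(backoff_seconds)
```

A real implementation would loop until cancelled rather than for a fixed `max_sessions`, and would also need to handle the case where the saved resource version is too old for the API server to resume from.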

For anyone looking for a solution to this, it might be worth using https://github.com/ktsstudio/mirrors until a fix is pushed.

I published a workaround based on a cronjob here: https://github.com/emberstack/kubernetes-reflector/pull/310

But the mirrors project suggested above (https://github.com/ktsstudio/mirrors) seems to be a better alternative if you have time to replace reflector with something else.

@idrissneumann Thx for the workaround! That's essentially what we've been using as a stop gap - a cronjob that runs and restarts the pod every 10 minutes. It's obviously very inelegant, but it's been working without issue for the past few weeks.
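For anyone who wants to script the same stop gap, the restart itself can be triggered by patching a pod-template annotation on the Deployment, which is the same mechanism `kubectl rollout restart` relies on. The Python sketch below is not the workaround from the linked PR. The deployment name `reflector` and the namespace `kube-system` are assumptions to adjust for your install, and the service account running it would need permission to patch Deployments.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def restart_patch(now: Optional[datetime] = None) -> Dict[str, Any]:
    """Build a patch that changes a pod-template annotation.

    Any change to the pod template makes the Deployment roll its pods.
    """
    stamp = (now or datetime.now(timezone.utc)).isoformat()
    return {"spec": {"template": {"metadata": {"annotations": {
        "kubectl.kubernetes.io/restartedAt": stamp,
    }}}}}


def restart_reflector(name: str = "reflector",
                      namespace: str = "kube-system") -> None:
    """Roll the reflector Deployment (name and namespace are assumptions)."""
    # Imported lazily so restart_patch works without the client installed.
    from kubernetes import client, config

    config.load_incluster_config()  # assumes this runs inside the cluster
    client.AppsV1Api().patch_namespaced_deployment(
        name, namespace, restart_patch())
```

Run from a CronJob on whatever schedule you can tolerate (every 10 minutes in our case), this does the same thing as restarting the pod by hand.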

I'm curious about your thoughts, and @Rid's, on why this mirrors solution might be a stronger alternative. From a quick glance at the repo it doesn't seem to have any active work or maintenance on it (the last commit was a README change 3 months ago), which is the major issue with this kubernetes-reflector repo.

Have you guys used mirrors successfully, or do you know the owner, or have other reasons for recommending it? I'm certainly open to it but wary of adopting something new unless it has a strong maintainer behind it.

It might be worth checking whether External Secrets Operator can handle the same task. You can define a ClusterSecretStore with a k8s source. It might be possible to define the source and target k8s as the same cluster. Not tested yet, but I will...

https://external-secrets.io/v0.6.1/provider/kubernetes/

Just wanted to echo the above - we're having the same issue with Reflector.

Please try the new version. This issue should be fixed. Please reopen if this is still a problem (some scenarios are extremely hard to reproduce and help is required to validate the fix).

Hi @winromulus

Thanks for the update here. I've updated our version of Reflector to the latest. I waited approximately 2 hours before testing and the issue still appears to occur. I followed the steps below to reproduce the issue.

- Updated Reflector

- Approx 2hrs later - updated a secret.

- Secret did not replicate - nothing shown in the logs.

- Restarted Reflector, Secret then replicates successfully immediately.

The logs don't really offer anything of value, so haven't included, but let us know if we can provide any more diagnostic info.
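Since the logs are silent when this happens, one way to gather diagnostic info is to compare the source secret with its reflections directly. The Python sketch below is only an assumption about how such a check could look: `stale_mirrors` and `read_secret_data` are hypothetical helpers, and the second one relies on the official Kubernetes Python client being installed and a working kubeconfig.

```python
from typing import Dict, List, Mapping, Optional

SecretData = Mapping[str, str]


def stale_mirrors(source: SecretData,
                  mirrors: Mapping[str, Optional[SecretData]]) -> List[str]:
    """Return the namespaces whose copy is missing or differs from source.

    `mirrors` maps namespace -> secret data, or None if the secret does
    not exist in that namespace.
    """
    return sorted(ns for ns, data in mirrors.items()
                  if data is None or dict(data) != dict(source))


def read_secret_data(name: str, namespace: str) -> Optional[Dict[str, str]]:
    """Fetch a secret's data, or None if it is not found."""
    # Imported lazily so stale_mirrors works without the client installed.
    from kubernetes import client, config
    from kubernetes.client.rest import ApiException

    config.load_kube_config()  # assumes a local kubeconfig
    try:
        secret = client.CoreV1Api().read_namespaced_secret(name, namespace)
    except ApiException as exc:
        if exc.status == 404:
            return None
        raise
    return secret.data or {}
```

Running this a few minutes after updating the source secret, and again after restarting Reflector, would show exactly which namespaces fell behind and when.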

Thanks, Mark