Dnn.Platform

Improve DNN installer experience

Description of problem

I have been installing extensions for a client on Azure with limited resources. Although the obvious solution is to add more resources, the lack of feedback from the install process is a problem IMO. DNN does not give any feedback if the install process fails, apparently due to a timeout. I have also seen this on servers with enough resources that were busy at the time, generally with packages of 4 MB or more. I guess there's a timeout in the background, but as a user you get no feedback.

When you reload the page after some minutes (as nothing happens), it's uncertain whether the extension installed or not. In some cases it even seemed to be installed, but the DLL in the bin folder was not the correct version.

Description of solution

IMO DNN should at least show a message if there is a timeout, or maybe limit the install process to x minutes and show a fail message. Ideally (although I realize this is not easy), the user would get direct feedback on each step of the install process. That way it would also be easier to see what's going on. FYI, PolyDeploy has the exact same issue: it just stops without any notice.

Description of alternatives considered

none

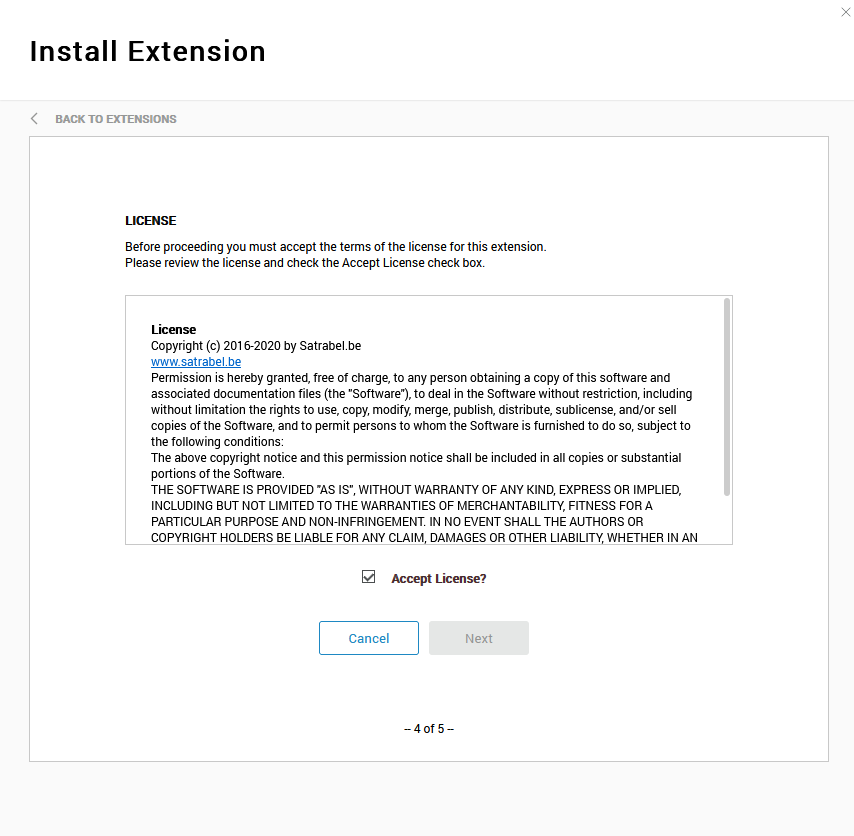

Screenshots

Thank you for reporting this. It's beginning to be something I'm also seeing more and more - and not just in the mutual environment we might be talking about here. It's also had a bunch of forum posts over the years.

This is a very interesting situation, and one that is a bit more specific and harder to catch from an Azure perspective.

Azure App Services have a hard-set limit of 230 seconds for a request, without any way to change that behavior. (https://learn.microsoft.com/en-us/answers/questions/839464/how-to-increase-api-timeout-on-azure-which-in-depl.html)

However, the way the transaction drops off is a bit hard to test, validate, or report on from a DNN perspective.

I think an update that would go a long way for any DNN user would be a more detailed explanation of where/what happened, as Timo suggests.

Right now, there's a bullet list and even a veteran DNN administrator has a lot of troubleshooting ahead of them to figure out why in some cases.

The timeout issue specifically could be an iterative step after whatever happens with this issue now, because the limit could have a different value in various environments, and it is difficult to approach, as Mitch suggested.

I've tried to catch this myself a few times. The issue is that the connection is just "dropped"; the request doesn't actually end, and often the server is still processing, meaning that the install COULD complete, or may not.

It's a very interesting failure pattern for sure, and a reason to run modules that are notorious for heavy loads ONLY on scaled environments.

FYI, I also see this behavior on a VPS that is not on Azure, where this 230-second limit should not exist. Not sure why.

I'm just wondering whether making the user feedback independent of the request could improve this: just trigger the install and use some kind of "ajax"-like process to get feedback on the install status. I think it might also be good if the extension installer process wrote a log file, so you can at least check where it ended. That could also be the basis for the feedback.

(FYI, I didn't check the code yet)
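To make the "trigger the install, then poll for status" idea a bit more concrete, here is a minimal sketch in Python (not DNN code, and not how the DNN installer works today). The names `start_install` and `get_status`, the in-memory job registry, and the step names are all hypothetical; a real implementation would persist the step list, for example to the log file mentioned above, so the status survives an application restart.

```python
import threading
import uuid

# Hypothetical in-memory job registry (an assumption for this sketch).
_jobs = {}
_lock = threading.Lock()


def _record(job_id, message, state="running"):
    """Append a progress message and update the job state."""
    with _lock:
        job = _jobs[job_id]
        job["state"] = state
        job["steps"].append(message)


def _run_install(job_id, steps):
    """Run each (name, callable) step in order, recording progress."""
    try:
        for name, action in steps:
            _record(job_id, f"started: {name}")
            action()
            _record(job_id, f"finished: {name}")
        _record(job_id, "install complete", state="succeeded")
    except Exception as exc:
        _record(job_id, f"failed: {exc}", state="failed")


def start_install(steps):
    """Start the install on a background thread and return a job id at once."""
    job_id = uuid.uuid4().hex
    with _lock:
        _jobs[job_id] = {"state": "running", "steps": []}
    threading.Thread(target=_run_install, args=(job_id, steps), daemon=True).start()
    return job_id


def get_status(job_id):
    """What a polling ("ajax"-like) endpoint could return to the browser."""
    with _lock:
        job = _jobs[job_id]
        return {"state": job["state"], "steps": list(job["steps"])}
```

The initial request only has to return the job id, so it finishes quickly; the page can then call something like `get_status` every few seconds. Each poll is a short request, so no single request has to stay open for the whole install.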

Yes. More information is all we need for right now. Once we're able to at least log more details, then we can create a new issue later based on what we find, and further improve the module installer experience.

BTW, I just tried this (hacky) workaround. The OpenContent install package is 11 MB and contains a lot of small files. These are all in Resources.zip inside the package. My guess is that unpacking these files takes longer than the 230-second limit on Azure.

So I created 7 separate installers, each with only a part of the Resources.zip content, and those do get installed without a timeout (although not in one go using PolyDeploy).

I first tested with 4 packages, which failed, then tried 5, etc.

I noticed that it's not the size of the package; it's the number of files in the package, I think.
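For anyone who wants to try the same workaround, splitting the archive by file count can be scripted. This is only a rough sketch in Python, under the assumption that you have Resources.zip available as a file; the function name `split_zip` is made up for illustration. It only splits the archive. Each resulting part would still need to be wrapped in its own installer package, which this sketch does not do.

```python
import io
import zipfile


def split_zip(source, parts):
    """Spread the files of one zip archive over up to `parts` smaller archives.

    `source` is a path or a file-like object. Returns a list of bytes objects,
    one per non-empty part. Directory entries are skipped; the file entries
    keep their full paths, so the folder structure is preserved.
    """
    outputs = []
    with zipfile.ZipFile(source) as src:
        files = [info for info in src.infolist() if not info.is_dir()]
        for index in range(parts):
            group = files[index::parts]  # round-robin by file count, not size
            if not group:
                continue
            buffer = io.BytesIO()
            with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as dst:
                for info in group:
                    dst.writestr(info, src.read(info))
            outputs.append(buffer.getvalue())
    return outputs
```

Splitting by file count rather than by size matches the observation above that the number of files seems to matter more than the package size.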

Oh, that's not ideal. If the files aren't installed during install/upgrade, they end up being orphaned files later when you uninstall a module. :(

Well, it's not an Azure App Service limitation; it's the Azure Load Balancer that drops the connection after no bytes have been sent back for more than 230 seconds.

I've faced this in other projects by having a main thread that runs the "operation" in a sub-thread. If the sub-thread runs for more than 200 seconds, the main thread starts to send back a byte that doesn't break the response (e.g. when returning HTML or JSON, perhaps a char(20); with XML it's trickier, because the XML declaration must be the very first part of the result).

With that approach, I've been able to run processes in Azure App Service for 15-20 minutes. Not ideal, but it is a workaround that could be implemented in the package installation.
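As an illustration of that keep-alive approach, here is a minimal Python sketch (not the code from those projects, and not DNN code). The function name `stream_with_keepalive` and its parameters are assumptions for this example. It uses a single space as the filler for a JSON response, which is safe because JSON allows leading whitespace. Unlike the description above, it sends one filler chunk every `idle_seconds` for as long as the worker is still running.

```python
import json
import threading


def stream_with_keepalive(operation, idle_seconds=200.0, filler=" "):
    """Yield filler chunks while `operation` runs, then its result as JSON.

    `operation` runs on a worker thread. Every `idle_seconds` without a
    result, one filler chunk is yielded so that the connection is not idle
    long enough to be dropped.
    """
    result = {}

    def worker():
        try:
            result["value"] = operation()
        except Exception as exc:
            result["error"] = str(exc)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    while True:
        thread.join(timeout=idle_seconds)  # wait, but only up to idle_seconds
        if not thread.is_alive():
            break
        yield filler  # leading whitespace does not break a JSON document
    yield json.dumps(result)
```

The caller would write each yielded chunk to the response and flush it immediately; if the chunks are buffered, the load balancer still sees an idle connection.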

Also, if you have more Azure pieces in the mix, like an Azure Application Gateway, API Management, or another Azure Load Balancer, remember to verify the connection timeout on all of them, since the default timeout is 4 minutes as well (those can easily be increased in the Azure portal).

PS: currently, to avoid this situation, I ALWAYS scale up the hosting plan tier to a P2 or P3, and then scale back to the regular tier afterwards; the cost will be just a few cents.

@davidjrh good point, thus the reason they stay open.

Scale up is the only real option right now for sure

Well, that's the only option for an actual "fix." We can still potentially give at least a bit more information than a listing of things that might have happened. Right? :)

Yes I agree. BTW as stated before we have also seen the installer "hanging" on non Azure environments.

I had exactly the same problem on a VPS (with enough resources) today. I cannot install a 4 MB skin package; the installer just seems to stall. So this is not an Azure-only issue.

BTW, the other client we have this issue with in Azure is on P3 and the install still "fails" a lot. Well, actually, you have no idea if it succeeded or not as the process stopped..

Indeed... P3V3, to be specific. :)

What DB spec at that time?

The one that Timo and I mutually know about has issues with three different versions of the database. We've seen it happen on one that has a dedicated database connection, and on the others in two different DB configurations of the same kind, with one having ~20 databases and the other having about ~4. They're elastic pools that scale up, except for the one dedicated one - and it happens to all of them, seemingly at random. The timing would mostly be normal day hours in EU, since Timo runs into this the most. :)

@WillStrohl any idea of the spec of the elastic pool?

I've been installing on Azure for years, and this is my SOP:

- App Service - Scale to top tier of whatever plan they are on (S3, P3V2, or P3v3)

- DB - Scale to a minimum of S4 (200 DTU) - If on a pool ensure that at least 200 DTU is available

I have 100% success rate with installs/upgrades using these configurations. Just trying to spot any differences as I'm working towards some guidance for Azure Installs

As Timo mentioned, we can see this in non-Azure environments as well. I've even seen this happen on local installs in some cases, but not recently.

The specific Azure environment we're talking about already has a P3V3 web app service and the DB is nearly at a max level as well. The SQL database is using a significantly large elastic pool that scales. The DB change was one we scaled up earlier this year, and it solved a bunch of problems, but not this one. In this case, our own testing seems to indicate that it's not an Azure-specific issue. We just happen to also use and like Azure. :)

@WillStrohl @mitchelsellers BTW, I also have this issue installing a 4 MB skin package, which does not contain any SQL at all.

On a local copy of the site @WillStrohl and @Timo-Breumelhof are talking about, we also experienced the same slowness when installing.

I created a custom build of DNN (9.11, current develop branch) and upgraded the local site to that version. Using log lines (a LOT) @Timo and I already found that clearing the log is taking way too long, and a lot longer than in a clean installation of the same custom build.

I've been digging deeper and there's really something going on with clearing the cache. In the clean install, clearing the cache from the HttpRuntime.Cache takes a total of 0.1 second. In the original installation, it takes over 2 minutes. Log from the clean install (note the timestamps):

2023-01-11 14:33:06.665+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_Tab_TabAliasSkins-1)

2023-01-11 14:33:06.666+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_Tab_Tabs-1)

2023-01-11 14:33:06.666+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_PersonaBarMenuController_Dnn.SiteGroups)

2023-01-11 14:33:06.667+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_TabSettings0)

versus the original installation (note the timestamps):

2023-01-11 15:05:10,260 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_PersonaBarResourcesen-US)

2023-01-11 15:05:11,012 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_TabModules55)

2023-01-11 15:05:11,695 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_Tab_Tabs-1)

2023-01-11 15:05:12,365 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_ModuleSettings89)

Since this is the call straight to System.Web.Caching.Cache.Remove, we don't have much more to see there. Nevertheless it's still strange that it's so very slow, and the clean install isn't, while it's on the same build of DNN, the same hardware, the same IIS, ...
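To put numbers on the two log excerpts above, the gaps between consecutive `Cache.Remove` lines can be computed from the timestamps. This is a small Python sketch; the function name `removal_gaps` is made up, and it assumes every line starts with a 23-character timestamp with either `.` or `,` before the milliseconds, as in the quoted logs.

```python
from datetime import datetime


def removal_gaps(lines):
    """Return the gaps in seconds between consecutive log timestamps."""
    stamps = [
        # First 23 characters: "YYYY-MM-DD HH:MM:SS" plus milliseconds.
        datetime.strptime(line[:23].replace(",", "."), "%Y-%m-%d %H:%M:%S.%f")
        for line in lines
    ]
    return [(b - a).total_seconds() for a, b in zip(stamps, stamps[1:])]


clean = [
    "2023-01-11 14:33:06.665+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_Tab_TabAliasSkins-1)",
    "2023-01-11 14:33:06.666+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_Tab_Tabs-1)",
    "2023-01-11 14:33:06.666+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_PersonaBarMenuController_Dnn.SiteGroups)",
    "2023-01-11 14:33:06.667+01:00 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_TabSettings0)",
]
slow = [
    "2023-01-11 15:05:10,260 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_PersonaBarResourcesen-US)",
    "2023-01-11 15:05:11,012 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_TabModules55)",
    "2023-01-11 15:05:11,695 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_Tab_Tabs-1)",
    "2023-01-11 15:05:12,365 DotNetNuke.Services.Cache.CachingProvider - 40F: Cache.Remove (DNN_ModuleSettings89)",
]

print("clean install gaps (s):", removal_gaps(clean))
print("original install gaps (s):", removal_gaps(slow))
```

For the lines quoted above, the clean install shows gaps of about a millisecond, while the original installation shows roughly 0.7 seconds per removal (about 2.1 seconds across these four lines).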

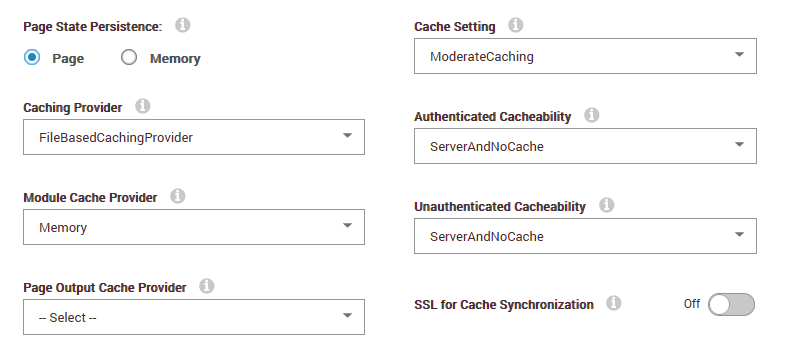

What caching modes are enabled?

All the default things, basically:

- file based caching provider

- moderate caching

Also, the cache contains a bit more keys than on a clean installation, but only 20-30 more, so that shouldn't be an issue either.

I'm curious whether you would see different results if you used memory-based caching.

Not sure if I know what you mean. Caching is set to the FileBasedCachingProvider and the only other option is SimpleWebFarmCachingProvider

FYI, there seems to be a difference between 9.10.2 and 9.11.0. The skin package I was unable to install on 9.10.2 installations does seem to install on 9.11.0 (at least on the 2 installations I tested it on). These are not the same installations, but they are very much alike.

But they're in the same environment and are mostly the same website, right, @Timo-Breumelhof ?

Yes.

Also: we had those issues on a clean 9.10.2 installation and we had them a lot less (as in: installation took a bit long but proceeded without errors) on a clean 9.11.0 installation.

I'm currently in the process of upgrading OpenContent 4.7 to 5.0.4 on 40 sites in Azure, and I'm checking the install process through FTP. This upgrade does not run any SQL, but contains a lot of files. I can see that the install process finishes after between 2 and 5 minutes, but the installer/PolyDeploy does not respond any more.