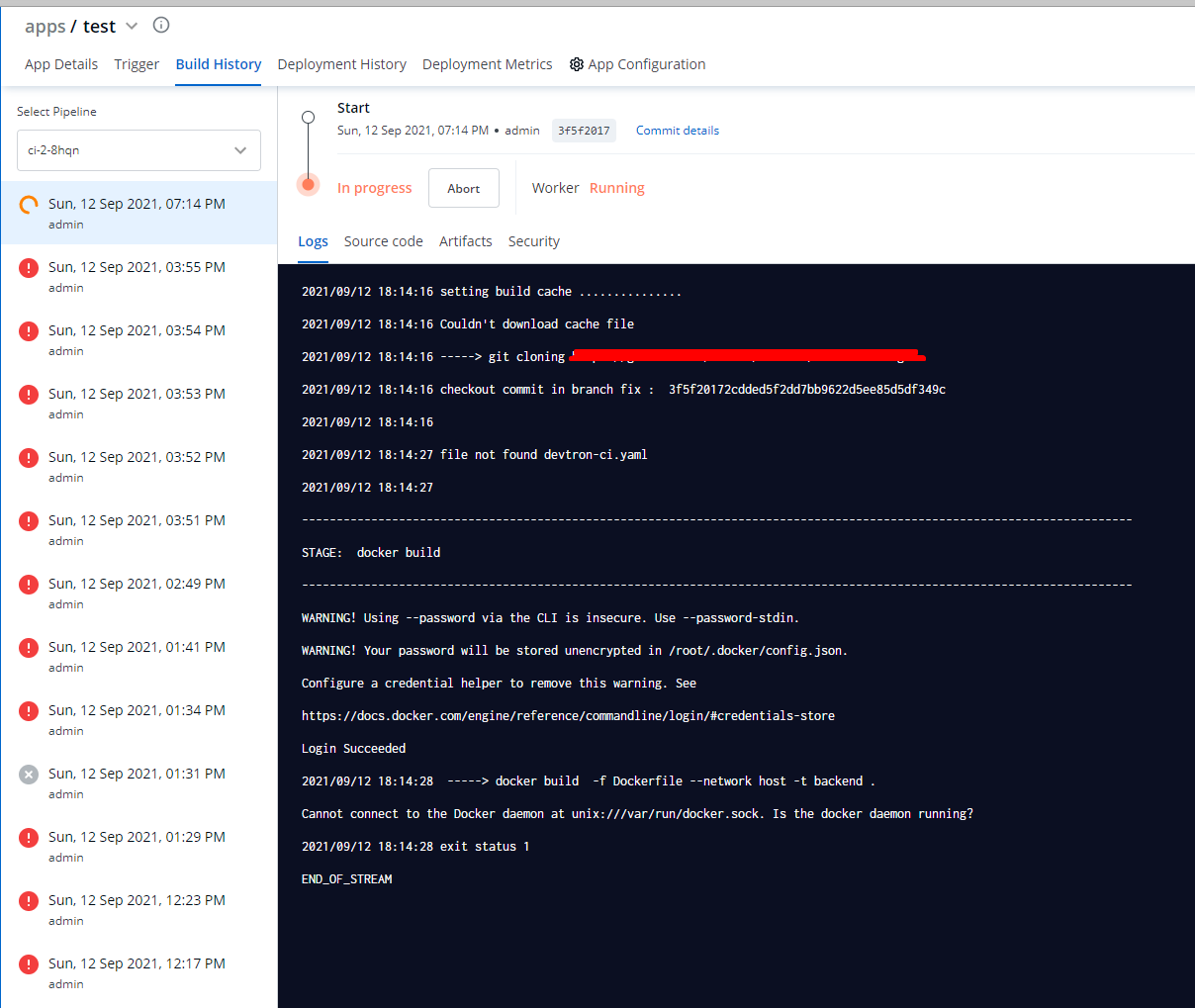

Docker build is failing with docker.sock. Is the docker daemon running?

main ------------------------------------------------------------------------------------------------------------------------

main STAGE: docker build

main ------------------------------------------------------------------------------------------------------------------------

main 2021/09/12 11:23:32 DEVTRON -----> docker login -u xxx -p xxx xxxxx

main WARNING! Using --password via the CLI is insecure. Use --password-stdin.

main WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

main Configure a credential helper to remove this warning. See

main https://docs.docker.com/engine/reference/commandline/login/#credentials-store

main Login Succeeded

main 2021/09/12 11:23:33 DEVTRON docker file location:

main 2021/09/12 11:23:33 -----> docker build -f Dockerfile --network host -t backend .

main Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

main 2021/09/12 11:23:33 exit status 1

main 2021/09/12 11:23:33 DEVTRON false exit status 1

main 2021/09/12 11:23:33 DEVTRON artifacts map[]

main 2021/09/12 11:23:33 DEVTRON no artifact to upload

main 2021/09/12 11:23:33 DEVTRON exit status 1 <nil>

main stream closed

Is there any documentation on how to ensure that build containers in the cluster are created in privileged mode so that they can access the Docker engine? Thank you.

@Born2Bake can you please help me understand the use case? In general, users don't need to interact with the Docker daemon in the CI step. Also, please share the Dockerfile.

k get node -o wide

| NAME | STATUS | ROLES | AGE | VERSION | INTERNAL-IP | EXTERNAL-IP | OS-IMAGE | KERNEL-VERSION | CONTAINER-RUNTIME |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| master-2 | Ready | control-plane,master | 2d4h | v1.20.1 | 172.16.1.131 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| master-3 | Ready | control-plane,master | 2d4h | v1.20.1 | 172.16.1.132 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| master-dev-1 | Ready | control-plane,master | 2d4h | v1.20.1 | 172.16.1.130 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| worker-1 | Ready | `<none>` | 2d4h | v1.20.1 | 172.16.1.133 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| worker-2 | Ready | `<none>` | 2d4h | v1.20.1 | 172.16.1.134 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| worker-3 | Ready | `<none>` | 2d4h | v1.20.1 | 172.16.1.135 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| worker-4 | Ready | `<none>` | 2d4h | v1.20.1 | 172.16.1.136 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| worker-5 | Ready | `<none>` | 2d4h | v1.20.1 | 172.16.1.137 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |
| worker-6 | Ready | `<none>` | 2d4h | v1.20.1 | 172.16.1.138 | `<none>` | Talos (v0.12.0) | 5.10.58-talos | containerd://1.5.5 |

Use case: a bare-metal k8s cluster is provisioned and Devtron is installed via Helm with default settings. A GitLab repo and a registry are added. A pipeline is created to build a Docker image using the Dockerfile from the repository and then deploy it. This works for me with gitlab-runners, which can build images using pods in Kubernetes. However, when I try to build the Docker image via Devtron, I get that error after the build command. I've tried many different executors for Argo, and unfortunately none of them work.

Are there any plans for an update to fix this issue?

The Docker daemon fails to start on a Hetzner VM with the following logs:

time="2021-11-20T13:33:57.656528738Z" level=warning msg="could not change group /var/run/docker.sock to docker: group docker not found"

time="2021-11-20T13:33:57.656645778Z" level=warning msg="[!] DON'T BIND ON ANY IP ADDRESS WITHOUT setting --tlsverify IF YOU DON'T KNOW WHAT YOU'RE DOING [!]"

time="2021-11-20T13:33:57.657462489Z" level=info msg="libcontainerd: started new containerd process" pid=78

time="2021-11-20T13:33:57.657497089Z" level=info msg="parsed scheme: \"unix\"" module=grpc

time="2021-11-20T13:33:57.657510199Z" level=info msg="scheme \"unix\" not registered, fallback to default scheme" module=grpc

time="2021-11-20T13:33:57.657563029Z" level=info msg="ccResolverWrapper: sending new addresses to cc: [{unix:///var/run/docker/containerd/containerd.sock 0 <nil>}]" module=grpc

time="2021-11-20T13:33:57.657579629Z" level=info msg="ClientConn switching balancer to \"pick_first\"" module=grpc

time="2021-11-20T13:33:57.657629629Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc420734950, CONNECTING" module=grpc

time="2021-11-20T13:33:57.672586864Z" level=info msg="starting containerd" revision=894b81a4b802e4eb2a91d1ce216b8817763c29fb version=v1.2.6

time="2021-11-20T13:33:57.672785814Z" level=info msg="loading plugin "io.containerd.content.v1.content"..." type=io.containerd.content.v1

time="2021-11-20T13:33:57.672848524Z" level=info msg="loading plugin "io.containerd.snapshotter.v1.btrfs"..." type=io.containerd.snapshotter.v1

time="2021-11-20T13:33:57.672936394Z" level=warning msg="failed to load plugin io.containerd.snapshotter.v1.btrfs" error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.btrfs must be a btrfs filesystem to be used with the btrfs snapshotter"

time="2021-11-20T13:33:57.672944954Z" level=info msg="loading plugin "io.containerd.snapshotter.v1.aufs"..." type=io.containerd.snapshotter.v1

time="2021-11-20T13:33:57.677685149Z" level=warning msg="failed to load plugin io.containerd.snapshotter.v1.aufs" error="modprobe aufs failed: "ip: can't find device 'aufs'\nmodprobe: can't change directory to '/lib/modules': No such file or directory\n": exit status 1"

time="2021-11-20T13:33:57.677723609Z" level=info msg="loading plugin "io.containerd.snapshotter.v1.native"..." type=io.containerd.snapshotter.v1

time="2021-11-20T13:33:57.677848819Z" level=info msg="loading plugin "io.containerd.snapshotter.v1.overlayfs"..." type=io.containerd.snapshotter.v1

time="2021-11-20T13:33:57.678017559Z" level=info msg="loading plugin "io.containerd.snapshotter.v1.zfs"..." type=io.containerd.snapshotter.v1

time="2021-11-20T13:33:57.678167189Z" level=warning msg="failed to load plugin io.containerd.snapshotter.v1.zfs" error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.zfs must be a zfs filesystem to be used with the zfs snapshotter"

time="2021-11-20T13:33:57.678180219Z" level=info msg="loading plugin "io.containerd.metadata.v1.bolt"..." type=io.containerd.metadata.v1

time="2021-11-20T13:33:57.678234699Z" level=warning msg="could not use snapshotter btrfs in metadata plugin" error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.btrfs must be a btrfs filesystem to be used with the btrfs snapshotter"

time="2021-11-20T13:33:57.678244639Z" level=warning msg="could not use snapshotter aufs in metadata plugin" error="modprobe aufs failed: "ip: can't find device 'aufs'\nmodprobe: can't change directory to '/lib/modules': No such file or directory\n": exit status 1"

time="2021-11-20T13:33:57.678254199Z" level=warning msg="could not use snapshotter zfs in metadata plugin" error="path /var/lib/docker/containerd/daemon/io.containerd.snapshotter.v1.zfs must be a zfs filesystem to be used with the zfs snapshotter"

time="2021-11-20T13:33:57.766308744Z" level=info msg="loading plugin "io.containerd.differ.v1.walking"..." type=io.containerd.differ.v1

time="2021-11-20T13:33:57.766352184Z" level=info msg="loading plugin "io.containerd.gc.v1.scheduler"..." type=io.containerd.gc.v1

time="2021-11-20T13:33:57.766418074Z" level=info msg="loading plugin "io.containerd.service.v1.containers-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766466735Z" level=info msg="loading plugin "io.containerd.service.v1.content-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766491205Z" level=info msg="loading plugin "io.containerd.service.v1.diff-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766514025Z" level=info msg="loading plugin "io.containerd.service.v1.images-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766534675Z" level=info msg="loading plugin "io.containerd.service.v1.leases-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766548975Z" level=info msg="loading plugin "io.containerd.service.v1.namespaces-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766562565Z" level=info msg="loading plugin "io.containerd.service.v1.snapshots-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.766576415Z" level=info msg="loading plugin "io.containerd.runtime.v1.linux"..." type=io.containerd.runtime.v1

time="2021-11-20T13:33:57.766799905Z" level=info msg="loading plugin "io.containerd.runtime.v2.task"..." type=io.containerd.runtime.v2

time="2021-11-20T13:33:57.766925855Z" level=info msg="loading plugin "io.containerd.monitor.v1.cgroups"..." type=io.containerd.monitor.v1

time="2021-11-20T13:33:57.767335685Z" level=info msg="loading plugin "io.containerd.service.v1.tasks-service"..." type=io.containerd.service.v1

time="2021-11-20T13:33:57.767361185Z" level=info msg="loading plugin "io.containerd.internal.v1.restart"..." type=io.containerd.internal.v1

time="2021-11-20T13:33:57.767400005Z" level=info msg="loading plugin "io.containerd.grpc.v1.containers"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767414045Z" level=info msg="loading plugin "io.containerd.grpc.v1.content"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767427085Z" level=info msg="loading plugin "io.containerd.grpc.v1.diff"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767439335Z" level=info msg="loading plugin "io.containerd.grpc.v1.events"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767452455Z" level=info msg="loading plugin "io.containerd.grpc.v1.healthcheck"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767465195Z" level=info msg="loading plugin "io.containerd.grpc.v1.images"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767481945Z" level=info msg="loading plugin "io.containerd.grpc.v1.leases"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767494475Z" level=info msg="loading plugin "io.containerd.grpc.v1.namespaces"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767506935Z" level=info msg="loading plugin "io.containerd.internal.v1.opt"..." type=io.containerd.internal.v1

time="2021-11-20T13:33:57.767804676Z" level=info msg="loading plugin "io.containerd.grpc.v1.snapshots"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767839297Z" level=info msg="loading plugin "io.containerd.grpc.v1.tasks"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767853957Z" level=info msg="loading plugin "io.containerd.grpc.v1.version"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.767866897Z" level=info msg="loading plugin "io.containerd.grpc.v1.introspection"..." type=io.containerd.grpc.v1

time="2021-11-20T13:33:57.768088077Z" level=info msg=serving... address="/var/run/docker/containerd/containerd-debug.sock"

time="2021-11-20T13:33:57.768166887Z" level=info msg=serving... address="/var/run/docker/containerd/containerd.sock"

time="2021-11-20T13:33:57.768178787Z" level=info msg="containerd successfully booted in 0.096004s"

time="2021-11-20T13:33:57.770262228Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc420734950, READY" module=grpc

time="2021-11-20T13:33:57.774699012Z" level=info msg="parsed scheme: \"unix\"" module=grpc

time="2021-11-20T13:33:57.774720382Z" level=info msg="scheme \"unix\" not registered, fallback to default scheme" module=grpc

time="2021-11-20T13:33:57.774829453Z" level=info msg="parsed scheme: \"unix\"" module=grpc

time="2021-11-20T13:33:57.774841053Z" level=info msg="scheme \"unix\" not registered, fallback to default scheme" module=grpc

time="2021-11-20T13:33:57.775177974Z" level=info msg="ccResolverWrapper: sending new addresses to cc: [{unix:///var/run/docker/containerd/containerd.sock 0 <nil>}]" module=grpc

time="2021-11-20T13:33:57.775192864Z" level=info msg="ClientConn switching balancer to \"pick_first\"" module=grpc

time="2021-11-20T13:33:57.775222304Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc420734ee0, CONNECTING" module=grpc

time="2021-11-20T13:33:57.775321054Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc420734ee0, READY" module=grpc

time="2021-11-20T13:33:57.775360434Z" level=info msg="ccResolverWrapper: sending new addresses to cc: [{unix:///var/run/docker/containerd/containerd.sock 0 <nil>}]" module=grpc

time="2021-11-20T13:33:57.775369244Z" level=info msg="ClientConn switching balancer to \"pick_first\"" module=grpc

time="2021-11-20T13:33:57.775387324Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc4207351c0, CONNECTING" module=grpc

time="2021-11-20T13:33:57.775466174Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc4207351c0, READY" module=grpc

time="2021-11-20T13:33:57.895638290Z" level=info msg="Graph migration to content-addressability took 0.00 seconds"

time="2021-11-20T13:33:57.895933010Z" level=warning msg="Your kernel does not support cgroup memory limit"

time="2021-11-20T13:33:57.895967520Z" level=warning msg="Unable to find cpu cgroup in mounts"

time="2021-11-20T13:33:57.895980790Z" level=warning msg="Unable to find blkio cgroup in mounts"

time="2021-11-20T13:33:57.895989500Z" level=warning msg="Unable to find cpuset cgroup in mounts"

time="2021-11-20T13:33:57.896045240Z" level=warning msg="mountpoint for pids not found"

time="2021-11-20T13:33:57.896993651Z" level=info msg="stopping healthcheck following graceful shutdown" module=libcontainerd

time="2021-11-20T13:33:57.897136081Z" level=info msg="stopping event stream following graceful shutdown" error="context canceled" module=libcontainerd namespace=plugins.moby

time="2021-11-20T13:33:57.897405352Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc4207351c0, TRANSIENT_FAILURE" module=grpc

time="2021-11-20T13:33:57.897457462Z" level=info msg="pickfirstBalancer: HandleSubConnStateChange: 0xc4207351c0, CONNECTING" module=grpc

Error starting daemon: Devices cgroup isn't mounted

related to https://github.com/containerd/containerd/issues/3994
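To help narrow down the `Devices cgroup isn't mounted` error and the `Unable to find ... cgroup in mounts` warnings above, here is a minimal diagnostic sketch that can be run inside the build container. It is not Docker's own check: `hasCgroupV1Controller` is a hypothetical helper that only scans `/proc/mounts` for cgroup v1 mounts, and reading `/proc/mounts` rather than another source is an assumption made for simplicity.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// hasCgroupV1Controller is a hypothetical helper (not part of Docker or
// Devtron). It reports whether mounts, given as text in /proc/mounts format,
// contains a cgroup v1 mount whose options include the named controller.
func hasCgroupV1Controller(mounts, controller string) bool {
	for _, line := range strings.Split(mounts, "\n") {
		fields := strings.Fields(line)
		// Fields: device, mount point, fs type, options, dump, pass.
		if len(fields) < 4 || fields[2] != "cgroup" {
			continue
		}
		for _, opt := range strings.Split(fields[3], ",") {
			if opt == controller {
				return true
			}
		}
	}
	return false
}

func main() {
	data, err := os.ReadFile("/proc/mounts")
	if err != nil {
		fmt.Println("cannot read /proc/mounts:", err)
		return
	}
	// The controllers named in the daemon log above.
	for _, c := range []string{"devices", "cpu", "blkio", "cpuset", "pids"} {
		fmt.Printf("%-8s mounted: %v\n", c, hasCgroupV1Controller(string(data), c))
	}
}
```

If every controller reports `false`, the container most likely has no cgroup v1 hierarchy visible to it, which would be consistent with the daemon refusing to start.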

We are still not sure whether this is related to https://github.com/devtron-labs/devtron/issues/1130, because docker build works for me if I install Devtron on a cluster set up with kubespray/Ubuntu. However, it still fails on Talos (see https://www.talos.dev/docs/v0.14/local-platforms/docker/#requirements and https://github.com/talos-systems/talos/releases/tag/v0.14.1). It might also be related to https://docs.gitlab.com/ee/ci/docker/using_docker_build.html#troubleshooting and https://gitlab.com/gitlab-org/gitlab-runner/-/issues/4566.

Any update on this one?

Can we try to reproduce this, @pawan-59?

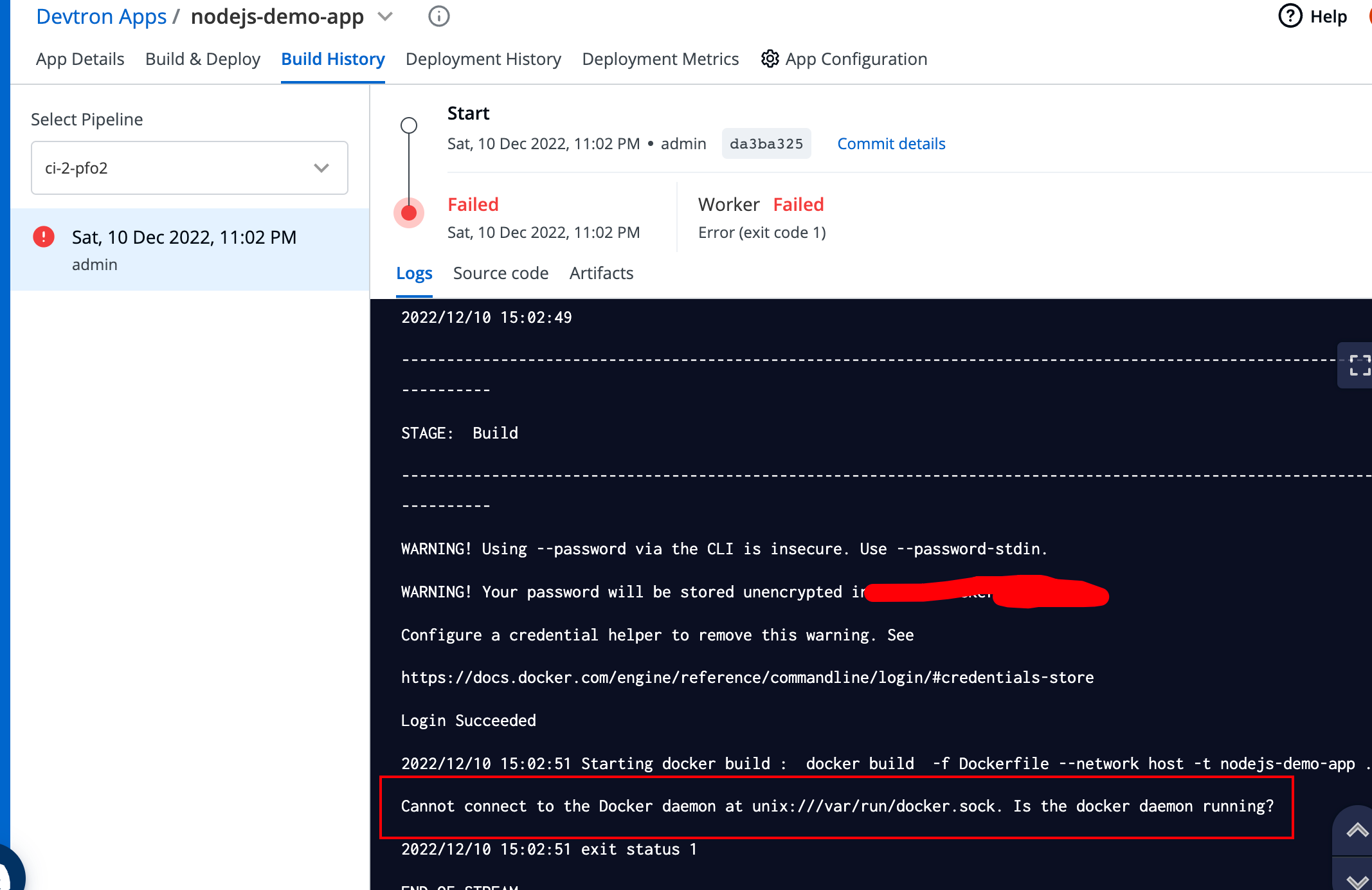

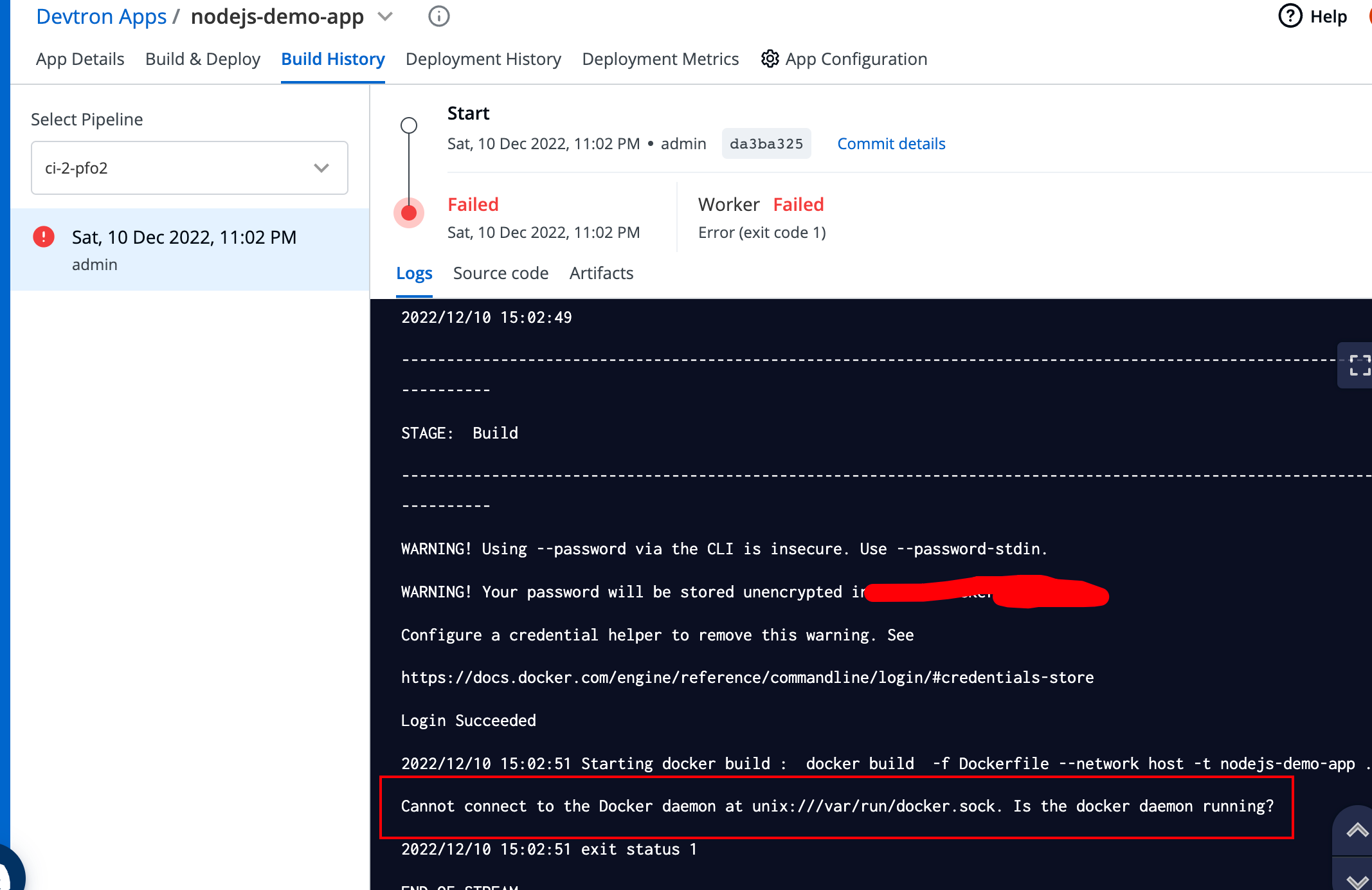

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

version: k3s, v1.23.6

I also encountered a similar problem here, is there a solution for this now?

https://appuals.com/cannot-connect-to-the-docker-daemon-at-unix-var-run-docker-sock/

mark, this method can fix the error~

I have the same problem and hope it gets solved.

https://github.com/devtron-labs/ci-runner/issues/105

I found my problem.

In my /usr/local/bin/nohup.out log:

Status: invalid argument "--host=unix:///var/run/docker.sock" for "--insecure-registry" flag: invalid index name (--host=unix:///var/run/docker.sock)

Code in ci-runner:

    func StartDockerDaemon(dockerConnection, dockerRegistryUrl, dockerCert, defaultAddressPoolBaseCidr string, defaultAddressPoolSize int, ciRunnerDockerMtuValue int) {
        // My OCI registry config:
        // dockerRegistryUrl = "registry.cn-hangzhou.aliyuncs.com"
        u, err := url.Parse(dockerRegistryUrl)
        if err != nil {
            log.Fatal(err)
        }
        // ...
        // u.Host is empty because dockerRegistryUrl has no scheme, so the next
        // flag (--host=...) is consumed as the value of --insecure-registry.
        // Fix: add `https://` to dockerRegistryUrl.
        dockerdstart = fmt.Sprintf("dockerd %s --insecure-registry %s --host=unix:///var/run/docker.sock %s --host=tcp://0.0.0.0:2375 > /usr/local/bin/nohup.out 2>&1 &", defaultAddressPoolFlag, u.Host, dockerMtuValueFlag)
    }
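The behaviour described above can be reproduced in isolation. The sketch below shows that `url.Parse` leaves `Host` empty for a registry address without a scheme, and one possible way to normalize the address first. `registryHost` is a hypothetical helper, not code from ci-runner, and defaulting to `https://` when no scheme is present is an assumption.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// registryHost is a hypothetical helper (not part of ci-runner). It returns
// the host[:port] part of a registry address, with or without a scheme.
func registryHost(raw string) (string, error) {
	if !strings.Contains(raw, "://") {
		// Without a scheme, url.Parse treats the whole string as a path
		// and leaves Host empty. Assumption: default to https.
		raw = "https://" + raw
	}
	u, err := url.Parse(raw)
	if err != nil {
		return "", err
	}
	if u.Host == "" {
		return "", fmt.Errorf("no host in registry URL %q", raw)
	}
	return u.Host, nil
}

func main() {
	bare := "registry.cn-hangzhou.aliyuncs.com"

	// Plain url.Parse on the scheme-less address: Host is empty and the
	// registry name ends up in Path.
	u, err := url.Parse(bare)
	if err != nil {
		panic(err)
	}
	fmt.Printf("Host=%q Path=%q\n", u.Host, u.Path)

	// After normalization the host is available for --insecure-registry.
	host, err := registryHost(bare)
	if err != nil {
		panic(err)
	}
	fmt.Printf("normalized host=%q\n", host)
}
```

Returning an error on an empty host, instead of formatting it into the `dockerd` command line, would surface the misconfiguration before the daemon is started.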

Any news?

Fixed with linked issue