dbt-databricks

dbt-databricks copied to clipboard

dbt-databricks copied to clipboard

Can't generate dbt docs

Describe the bug

We use the dbt-databricks adapter and can successfully read our models from dbt and also write them. However, when we call dbt docs generate we get the following error: Expected only one database in get_catalog, found.

To be on the safe side, I took another look at the source.yml. Unfortunately, only the schema is set there and I don't know why dbt docs generate shouldn't work.

Steps To Reproduce

Try to generate the dbt docs for a databricks destination / source

Expected behavior

Docs should be generated

Screenshots and log output

System information

The output of dbt --version:

╰─$ dbt --version

installed version: 1.0.1

latest version: 1.0.1

Up to date!

Plugins:

- databricks: 1.0.0

- spark: 1.0.0

The operating system you're using:

The output of python --version:

╰─$ python --version

Python 3.8.12

@mcfuhrt Could you also try with dbt-databricks 1.0.1? @jtcohen6 Could you help to take a look at this? Seems like the error came from dbt-spark or dbt-core.

@ueshin I installed dbt-databricks 1.01. but the result of dbt docs generateis the same.

╰─$ dbt --version

installed version: 1.0.1

latest version: 1.0.1

Up to date!

Plugins:

- databricks: 1.0.1

- spark: 1.0.0

06:32:18 Found 84 models, 0 tests, 0 snapshots, 0 analyses, 215 macros, 0 operations, 0 seed files, 55 sources, 0 exposures, 0 metrics

06:32:18

06:32:19 Concurrency: 1 threads (target='prep')

06:32:19

06:32:19 Done.

06:32:19 Building catalog

06:32:20 Encountered an error:

Compilation Error

Expected only one database in get_catalog, found [<InformationSchema INFORMATION_SCHEMA>, <InformationSchema INFORMATION_SCHEMA>]

@ueshin dbt-spark is raising this explicit error in the get_catalog method here, because it:

- Operates on one schema at a time (today by running

show table extended in <schema_name> like '*' - Stores all those schemas as belonging to the same "null" database (since dbt is organized to think in three hierarchy levels, with

databaseat the top) - Won't know what to do if passed more than one database, since even the first is really just an internal placeholder

The history here is all around supporting full dbt functionality on Spark, which doesn't have a distinction between database + schema as separate hierarchy layers: https://github.com/dbt-labs/dbt-spark/pull/92

The method that passes schemas into get_catalog, called _get_catalog_schemas, is not reimplemented in dbt-spark, so we're using the version defined in dbt-core. The idea is to walk through the enabled relational nodes in the manifest, and to return a SchemaSearchMap() that includes all databases relevant for catalog generation, mapped to the schemas they contain.

In Spark/Databricks, this SchemaSearchMap should look like:

{<InformationSchema INFORMATION_SCHEMA>: {'schema_one', 'schema_two'}}

Note that the database name is missing (specifically, it's set to None). On adapters that have separate notions of schema/database, even ones that support only one active database, we'd see:

{<InformationSchema "database_name".INFORMATION_SCHEMA>: {'schema_one', 'schema_two'}}

On a database that supports multiple databases, and a different hierarchy between them, we should see this object return:

{<InformationSchema "database_one".INFORMATION_SCHEMA>: {'schema_one', 'schema_two}, <InformationSchema "database_two".INFORMATION_SCHEMA>: {'schema_three'}}

Yet, somehow, @mcfuhrt is seeing two different databases returned in the SchemaSearchMap, both with missing (None) database names. I've tried a few different things locally to reproduce this exact error (source definitions, model configurations, profiles.yml configurations). The only way I've managed to trip this error, without first tripping a different error, is by setting database for a source, using the same value as its schema. E.g.:

sources:

- name: my_src

schema: my_src

database: my_src

tables:

- name: tbl_one

Then I see:

08:46:55 Encountered an error:

Compilation Error

Expected only one database in get_catalog, found [<InformationSchema INFORMATION_SCHEMA>, <InformationSchema my_src.INFORMATION_SCHEMA>]

Crucially, however, I see the database name (my_src) included in the second SchemaSearchMap entry, which gives me a hint as to where the error is originating.

@jtcohen6, is there anything I can do to support here or do you need more information (e.g. configuration, source.yml, etc.) from me?

I am not sure how to proceed to solve the problem.

@mcfuhrt I think we'll need some more configuration details to get to the bottom of this one. Are you able to reproduce the error in a simpler project, with the same basic config as your real project? If so, would you be up to share it here?

If not, and you're willing to share your real project (including profiles.yml) via DM in dbt Slack, that also works

Of course! I'll build a simpler project tomorrow and send you the configuration. Either here or directly at Slack

I have built a very small example that can be reproduced quickly. Unfortunately, I also get the error when generating the doc files.

Setup:

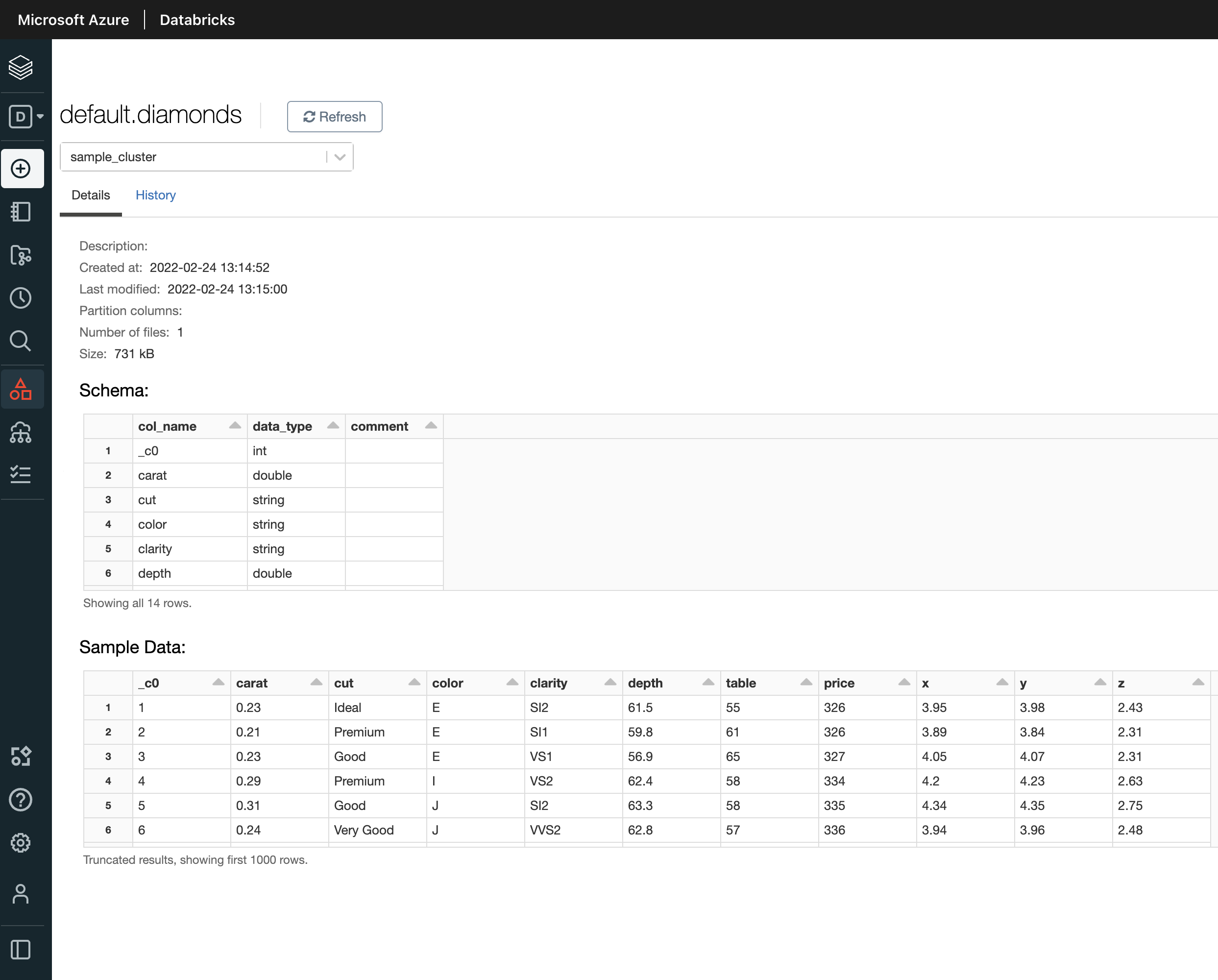

Single node cluster hosted on Azure (West-Europe).

Notebook for reading in the sample data and saving it as a delta table.

schema = 'default'

table = 'diamonds'

spark.sql(f"CREATE DATABASE IF NOT EXISTS {schema};")

diamonds = spark.read.format('csv').options(header='true', inferSchema='true').load('/databricks-datasets/Rdatasets/data-001/csv/ggplot2/diamonds.csv')

(

diamonds

.write

.format("delta")

.option("overwriteSchema", "true")

.mode("overwrite")

.saveAsTable(f"{schema}.{table}")

)

Configuration of the dbt project

name: 'analytics'

version: '1.0.0'

config-version: 2

vars:

'dbt_date:time_zone': 'Europe/Berlin'

profile: 'databricks'

model-paths: ["models"]

analysis-paths: ["analysis"]

test-paths: ["tests"]

seed-paths: ["data"]

macro-paths: ["macros"]

snapshot-paths: ["snapshots"]

target-path: "target"

clean-targets:

- "target"

- "dbt_modules"

models:

analytics:

Configuration sources.yml

version: 2

sources:

- name: default

schema: default

loader: Direct

loaded_at_field: none

quoting:

database: true

schema: false

identifier: false

freshness:

warn_after: {'count': 8, 'period': hour }

error_after: {'count': 24, 'period': hour}

tables:

- name: diamonds

Configuration of the Databricks profile

databricks:

outputs:

prep:

host: #HOST#

http_path: #HTTP_PATH#

schema: default

threads: 1

token: #TOKEN#

type: databricks

target: prep

dbt, plugins and python version

╰─$ dbt --version

installed version: 1.0.3

latest version: 1.0.3

Up to date!

Plugins:

- databricks: 1.0.1 - Up to date!

- spark: 1.0.0 - Up to date!

╰─$ python --version

Python 3.8.12

dbt run result

╰─$ dbt run 130 ↵

13:56:37 Running with dbt=1.0.3

13:56:37 Unable to do partial parsing because a project config has changed

13:56:37 Found 1 model, 0 tests, 0 snapshots, 0 analyses, 212 macros, 0 operations, 0 seed files, 1 source, 0 exposures, 0 metrics

13:56:37

13:56:40 Concurrency: 1 threads (target='prep')

13:56:40

13:56:40 1 of 1 START view model default.default_diamonds_source......................... [RUN]

13:56:43 1 of 1 OK created view model default.default_diamonds_source.................... [OK in 2.31s]

13:56:43

13:56:43 Finished running 1 view model in 5.70s.

13:56:43

13:56:43 Completed successfully

13:56:43

13:56:43 Done. PASS=1 WARN=0 ERROR=0 SKIP=0 TOTAL=1

dbt docs generate result

╰─$ dbt docs generate

13:57:27 Running with dbt=1.0.3

13:57:27 Found 1 model, 0 tests, 0 snapshots, 0 analyses, 212 macros, 0 operations, 0 seed files, 1 source, 0 exposures, 0 metrics

13:57:27

13:57:29 Concurrency: 1 threads (target='prep')

13:57:29

13:57:29 Done.

13:57:29 Building catalog

13:57:29 Encountered an error:

Compilation Error

Expected only one database in get_catalog, found [<InformationSchema INFORMATION_SCHEMA>, <InformationSchema INFORMATION_SCHEMA>]

Thanks for this @mcfuhrt! I was able to reproduce the error, using the example you've provided.

It looks like this line in your source definition is to blame:

quoting:

database: true

If I switch that to database: false, or remove that line entirely, docs generate works fine.

Alternatively, if I add those lines to dbt_project.yml so that the project-wide quoting config matches (docs), it also works.

There are two ways forward I can see:

- Fix the logic within

SchemaSearchMap(called by_get_catalog_schemas), which currently views a quoted"None"database and an unquotedNonedatabase as distinct entries in the set of databases. This makes sense in the general case—they have differentquote_policy—but it doesn't make sense when the value isNone. - Defining this config doesn't (shouldn't) have any actual effect. Given that we don't allow

databaseto be defined elsewhere indbt-spark(+dbt-databricks, at least until https://github.com/dbt-labs/dbt-spark/issues/281), it really feels like our answer here should be to raise an explicit error any time thequotingconfig is defined at thedatabaselevel. That could look like adding a__post_init__hook to theSparkQuotePolicy:

def __post_init__(self):

if self.database:

raise RuntimeException('Cannot set database-level quoting!')

Thank you @jtcohen6 for your detailed explanation of how the error came about and how you deal with it!

I also think that it should be enough at this point to throw the corresponding exception and then refer to the database quoting in the dpt_project.yml. I tested your fix yesterday and as expected, dbt docs generate now runs successfully.

@jtcohen6 is there a possibility to set the database name in the generated docs? I want to connect our dbt docs and our databricks tables within amundsen and it seems like this won't work while the database names are inconsistent or null.

@mcfuhrt For adapters with "two-tiered" namespacing, the top-level tier (database) will always be null. This should be totally consistent, minus the issue you uncovered around quoting. I don't imagine this would prevent you from being able to integrate with Amundsen, so long as you're matching on the schema + identifier for each object?

Looking ahead:

- Definitely aware of the need for cosmetic improvement in the docs site: https://github.com/dbt-labs/dbt-docs/issues/104

- We're also aware that Spark is looking to adopt a three-tiered namespace, with the top-level tier called "catalog," at which point the behavior in

dbt-spark+dbt-databrickswill be more consistent with other adapter plugins

This issue has been marked as Stale because it has been open for 180 days with no activity. If you would like the issue to remain open, please remove the stale label or comment on the issue, or it will be closed in 7 days.

@jtcohen6 I'm facing the same error with database: false when using dbt with mysql. Is there a way around this ?

dbt: 1.0.9 dbt-mysql: 1.0.0