autobahn-js

autobahn-js copied to clipboard

autobahn-js copied to clipboard

Add CI tests for big messages

Hi,

I've been using AutobahnJS for quite some time on a project of ours. Everything has been working fine internally up until we imported the user's real world data into the database. As soon as this happened, we'd randomly run into the above mentioned errors. The project is a Angular2 project, so we're using AutobahnJS with Typescript. The customer's data is from a Danish source, so characters such as "æøå" are used. But using a clean database and then creating data that contains these characters does not force the error to appear.

I've been trying to figure out where I even start debugging this. Commenting out random autobahn.session.call functionality sometimes makes the error dissapear, but sometimes doesn't. It's a very fleeting error and I'm having huge issues attempting to track down the root issue. I don't even know where to begin. The stacktrace of the exception points to websocket.js:162

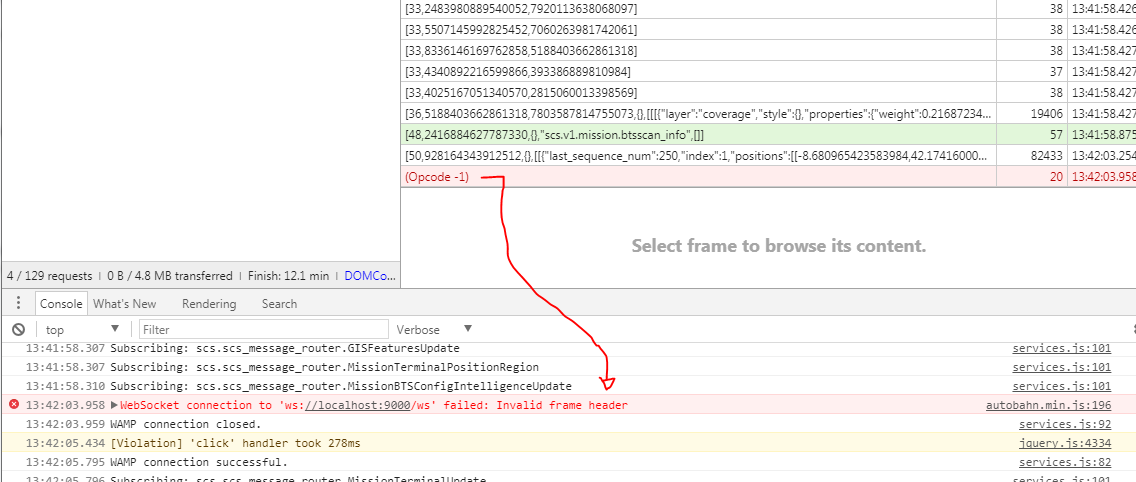

I've attempted to use the Chrome Websocket debugger for whenever it happens and in there, I can only see communcation going back and forth and then suddenly receiving a frame with "opcode: -1" after which Chrome closes the connection with either of the errors above, apparently random which one is given.

Everything runs asynchronously, so I'm wondering if it has something to do with many async calls getting sent to the router and then receiving many results at the same time.

Everything runs asynchronously, so I'm wondering if it has something to do with many async calls getting sent to the router and then receiving many results at the same time.

I've tried turning on / off websocket compression.

The crossbar log doesn't tell me much either:

2017-06-19T17:42:34+0200 [Router 5968 crossbar.router.protocol.WampWebSocketServerProtocol] dropping connection to peer tcp4:62.198.41.153:21805 with abort=False

2017-06-19T17:42:34+0200 [Router 5968 crossbar.router.protocol.WampWebSocketServerProtocol] Connection to/from tcp4:62.198.41.153:21805 was lost in a non-clean fashion: write error -- unknown (64)

2017-06-19T17:42:34+0200 [Router 5968 crossbar.router.protocol.WampWebSocketServerProtocol] _connectionLost: [Failure instance: Traceback (failure with no frames): <class 'twisted.internet.error.ConnectionLost'>: Connection to the other side was lost in a non-clean fashion: write error -- unknown (64).

]

2017-06-19T17:42:34+0200 [Router 5968 crossbar.router.protocol.WampWebSocketServerProtocol] WAMP-over-WebSocket transport lost: wasClean=True, code=1002, reason="WebSocket Protocol Error"

2017-06-19T17:42:34+0200 [Router 5968 crossbar.router.router.Router] <<<<<< session 2088602419227414 LEFT "test" <<<<<<

{u'authprovider': u'dynamic', u'authid': u'dev', u'authrole': u'client', u'authmethod': u'wampcra', u'session': 2088602419227414L, u'transport': {u'cbtid': None, u'protocol': 'wamp.2.json', u'http_headers_received': {u'origin': u'http://localhost:61489', u'upgrade': u'websocket', u'accept-language': u'en-US,en;q=0.8,da;q=0.6', u'accept-encoding': u'gzip, deflate', u'sec-websocket-version': u'13', u'sec-websocket-protocol': u'wamp.2.json, wamp.2.msgpack', u'host': u'<removed>', u'sec-websocket-key': u'VWLKCegoym7av8zF7pPAFg==', u'user-agent': u'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.86 Safari/537.36', u'dnt': u'1', u'connection': u'Upgrade', u'pragma': u'no-cache', u'cache-control': u'no-cache', u'sec-websocket-extensions': u'permessage-deflate; client_max_window_bits'}, u'peer': u'tcp4:62.198.41.153:21805', u'http_headers_sent': {}, u'websocket_extensions_in_use': [{'mem_level': 5, 'extension': 'permessage-deflate', 'is_server': True, 'server_no_context_takeover': True, 'client_max_window_bits': 12, 'client_no_context_takeover': True, 'server_max_window_bits': 12}], u'type': 'websocket'}}

I'd appreciate any help at this stage honestly. I'm mostly looking for pointers as to where I can continue my debugging efforts, because honestly at this point I feel like I've run out of options.

After some helpful advice and ideas from agronholm and oberstet, I've tried out some additional things: Msgpack serialisation instead makes no difference. It still fails, but with the following error instead: "One or more reserved bits are on: reserved1 = 0, reserved2 = 1, reserved3 = 0"

Testing the exact same procedure calls and subscriptions with in an otherwise empty javascript file within the browser doesn't make the same error happen. The same data is returned as in the "real" product however.

With Crossbar logging level set to tracing instead, I can sometimes get the following error: "EXCEPTION: Unexpected token i in JSON at position 261661" Chrome tells me that the text frame that it had received at this point was the following: https://pastebin.com/qERFAmRq (it's pretty long, rather deliberately. Running a lot of traffic at the same time seems to make this error happen consistently) Looking at position 261661, the json is obviously malformed. It seems like the json string was split up and combined at this point, albeit unsuccesfully.

I've attached the crossbarlog and a wireshark log of the error happening. crossbarlog.txt wiresharklog.zip

Might this have something to do with longer messages and the splitting/combining of individual messages or requests writing to the buffer while it is being read?

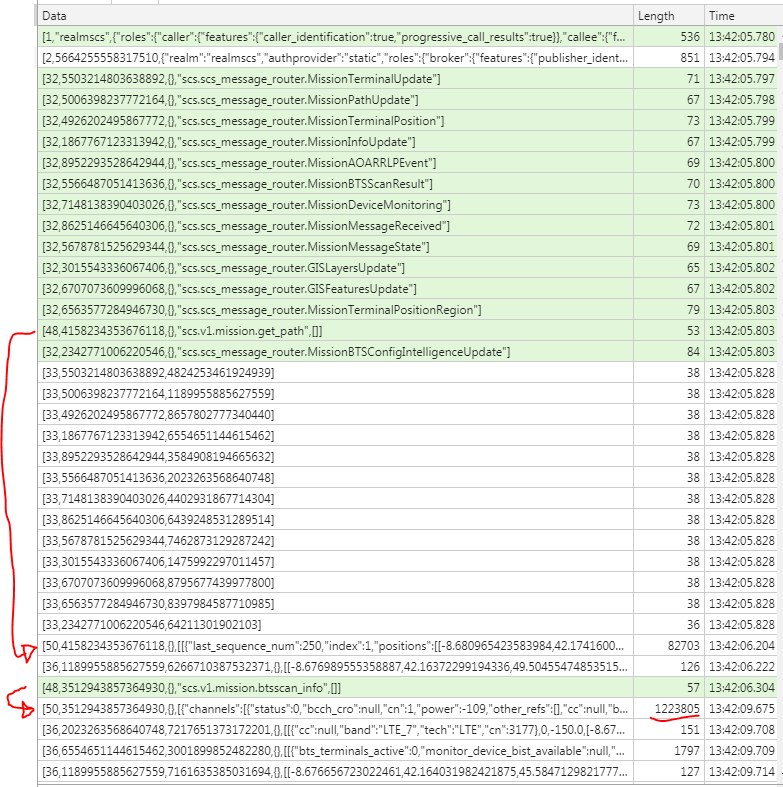

@Capsup The reply is more than 5MB in size!

Could you try the following?

- CBOR

- no WebSocket compression

- Set

auto_fragment_size=65536in Crossbar.io (http://crossbar.io/docs/WebSocket-Options/)

Hi @oberstet,

it's definitely a reply with a huge payload, but it's what was necessary for me to get the error to happen consistently instead of fleetingly. With the current setup that I have, this error happens 100% consistently. It is random whether it fails with "Invalid frame header" or "Could not decode a text frame as UTF-8" however.

The JSON serialisation is handled automatically by the Wamp implementation that I use (WampSharp) on the callee, but CBOR looks like it could possibly reduce the message sizes? If that's the real issue here, it'll only be a matter of time until we run into the same problems because this customer has a huge amount of data that they use. We're already implementing lazy loading everywhere and if the problem is caused by too big message sizes, we'll just continue with that work as part of the solution.

I tried without websocket compression and with auto_fragment_size set to 65536 as part of my crossbar config: https://pastebin.com/S5LfaPAJ but it didn't seem to make a difference.

I haven't figured out what's going on here, but I think it might have to do with buffering etc .. essentially this is blasting a single huge reply (~5MB) onto the wire, and I'm wondering if the OS (or Twisted) is sad about that and drops some of the data?

That said, it looks more like a framing problem as it's some data "in the middle" that's being dropped...

@Capsup somewhere the big (JSON/UTF8) string is broken at the wrong place, and the assumption is this only happens with big messages.

You can implement lazy loading in your frontend and use WAMP progressive results to get the result. Both will help and are the "right way".

Now, this doesn't explain why it breaks, and it certainly should work - regardless there being a better way (above).

If you want to really get to the bottom of this, we would need to verify if the WebSocket framing is broken, or if the breakage is somewhere else.

To do so, you need to verify all WebSocket messages and frames for the big payload, check if it is correctly fragmented. Does it continue with the right bytes in the follow up frame?

@oberstet Ok, so, I'm not really sure how I would go about verifying the frames and the right order of bytes. As far as I can tell from the wireshark output, something goes wrong after entry 428 in the log data. From here on out, the opcodes on the individual TCP packets appear to be wrong, and the websocket appears to start interpreting the data as binary amongst others, while it is still receiving data from the initial procedure call?

Also impacted by this. Let me know if I can help.

@jjmontesl I haven't been able to replicate this myself -- perhaps it is client-side? It looks like some data is getting truncated somewhere on really-huge messages. A reliable way to replicate the bug would go a long ways to getting a solution :)

@jjmontesl I'm almost glad to hear that I'm not the only one. Are you using Angular2 as well?

@meejah I haven't had the time to dive deeper into this since we last talked but I'll get around to it sometime soon hopefully. I have a feeling it's got something to do with Angular2 and with how WS buffer messages internally, while awaiting response for atleast multiple procedures at the same time, but it's nothing but a feeling and I don't have any kind of proof yet.

It wasn't possible for me to replicate this in a nodeJS program that called the exact same procedures as my Angular2 frontend, while the Angular project also has the same error happen across all 3 browsers, so it isn't browser specific.

However it seems to be data-agnostic, so all you need to do (atleast in my case) is attempt to call two procedures at the same time where atleast one returns a reply that is more than about 100 kB in size. It doesn't matter if your data contains non-ASCII characters or not.

@capsup would you be able to see if https://github.com/voryx/thruway.js behaves the same way? It's built with rxjs, so it should be easier to use with angular2.

I can replicate it in my environment very consistently, it happens 90% of the time our app issues a request that returns over 1MB of data.

Server side: Python 2.7, Crossbar 17.6.1.post3, Autobahn 17.7.1 and Twisted 17.5.0 on Windows7 64bit (also happens on Ubuntu 16.04). I updated to this version set trying to resolve this, but it also happened on Crossbar 0.11.1, Autobahn 0.10.9 and Twisted 15.4.0.

Client side (UI): Autobahn JS 17.5.2, Angular v1.4.4, Chrome 59.0.3071.115 64 bit, on Windows 7 64bit. This also happens on Ubuntu 16.04 64 bit with Chromium. I just updated Autobahn trying to fix this, but I was using 0.9.6 before.

Client side (service): It's also Python 2.7, Autobahn 17.7.1 on Twisted 15.4.0. Also failing on both Windows 7 and Ubuntu 16.04. Again, it was equally failing before upgrading Autobahn from Autobahn 0.10.9.

Here is what we see when the problem triggers:

Our client app (web based) immediately reconnects and issues the request again. This sometimes triggers a sequence of disconnects/reconnects, but it will sometimes work when this request is issued shortly after reconnecting and resubscribing to all topics:

In my case, the response message does not contain any non-ASCII character.

Like @Capsup, I also feel it might be related to concurrency, or a race condition. At least that's a possibility.

Any help or insight is welcome. Let me know how I can help. Can I debug these messages with Wireshark?

couple of notes:

- AutobahnPython is extensively tested at the WebSocket level using AutobahnTestsuite, including with huge WebSocket messages and frames. I doubt the issue is coming from there.

- What we should do here is add more tests to https://github.com/crossbario/autobahn-js/tree/master/test using big application payloads (in WAMP calls and events)