kube-router does not install routes received on the second BGP session

Hi all. We have a cluster with external BGP peering (all routing is performed by network hardware). Some of the nodes will work as load balancers. The LBs have two interfaces, internal and external, and two BGP sessions are configured (local AS 65002):

- The internal interface peers with the internal router, which has access to the whole cluster (AS 65001).

- The external interface peers with the external router, which provides Internet connectivity (AS 65003). Internal networks are announced from AS 65001, and the default route (0.0.0.0/0) from AS 65003.

The problem is that not all received routes are propagated to the Linux kernel. For example, the default route from AS 65003 is received but never installed.

--- BGP Neighbors ---

Peer AS Up/Down State |#Received Accepted

10.40.24.254 65001 00:06:19 Establ | 48 6

<peer IP> 65003 00:06:13 Establ | 1 1

--- BGP Route Info ---

Network Next Hop AS_PATH Age Attrs

*> 0.0.0.0/0 <peer IP> 65003 00:06:13 [{Origin: i}]

*> 10.11.41.0/24 10.40.24.254 65001 00:06:19 [{Origin: i}]

*> 10.40.20.0/24 10.40.24.254 65001 65500 00:06:19 [{Origin: i} {Extcomms: [1:1430]}]

*> 10.40.22.0/24 10.40.24.254 65001 65500 00:06:19 [{Origin: i} {Extcomms: [1:1430]}]

*> 10.40.24.0/24 10.40.24.254 65001 00:06:19 [{Origin: i}]

*> 10.40.30.0/24 10.40.24.254 65001 00:06:19 [{Origin: i}]

*> 10.40.31.0/24 10.40.24.254 65001 00:06:19 [{Origin: i}]

*> 10.244.3.0/24 10.40.24.11 00:01:30 [{Origin: i}]

*> <external IP>/32 10.40.24.11 00:01:30 [{Origin: i}]

*> 172.30.0.1/32 10.40.24.11 00:01:30 [{Origin: i}]

*> 172.30.0.3/32 10.40.24.11 00:01:30 [{Origin: i}]

*> 172.30.12.198/32 10.40.24.11 00:01:30 [{Origin: i}]

*> 172.30.49.14/32 10.40.24.11 00:01:30 [{Origin: i}]

*> 172.30.158.186/32 10.40.24.11 00:01:30 [{Origin: i}]

*> 172.30.231.234/32 10.40.24.11 00:01:30 [{Origin: i}]

ip route

default via 10.40.24.254 dev team0 proto static metric 300

10.11.41.0/24 via 10.40.24.254 dev team0 proto 17

10.40.20.0/24 via 10.40.24.254 dev team0 proto 17

10.40.22.0/24 via 10.40.24.254 dev team0 proto 17

10.40.24.0/24 via 10.40.24.254 dev team0 proto 17

10.40.24.0/24 dev team0 proto kernel scope link src 10.40.24.11 metric 300

10.40.30.0/24 via 10.40.24.254 dev team0 proto 17

10.40.31.0/24 via 10.40.24.254 dev team0 proto 17

10.244.3.0/24 dev kube-bridge proto kernel scope link src 10.244.3.1

<peer IP network>/29 dev team0.1492 proto kernel scope link src <local IP> metric 400

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

Checking the kube-router logs, I see no processing of incoming routes from the external peer IP, only from the internal one. Please help us solve the problem.
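To narrow down where a route gets lost, it can help to diff the gobgp RIB against the kernel table. Below is a minimal Go sketch, assuming the RIB has been reduced by hand to (prefix, next hop, best) tuples and that the BGP-injected routes are the ones shown as `proto 17` in the `ip route` output above. `ribPath`, `kernelBGPPrefixes`, `missingRoutes` and the sample variables are hypothetical helpers, not part of kube-router or gobgp.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// ribPath is a hand-reduced line of `gobgp global rib` (hypothetical type).
type ribPath struct {
	Prefix  string
	NextHop string
	Best    bool // true for lines marked "*>"
}

// sampleRIB holds the best paths from the RIB output in this issue.
// "<peer IP>" is kept as the placeholder the reporter used.
var sampleRIB = []ribPath{
	{"0.0.0.0/0", "<peer IP>", true},
	{"10.11.41.0/24", "10.40.24.254", true},
	{"10.40.20.0/24", "10.40.24.254", true},
	{"10.40.22.0/24", "10.40.24.254", true},
	{"10.40.24.0/24", "10.40.24.254", true},
	{"10.40.30.0/24", "10.40.24.254", true},
	{"10.40.31.0/24", "10.40.24.254", true},
	{"10.244.3.0/24", "10.40.24.11", true}, // originated by this node
}

// sampleKernel is part of the `ip route` output from this issue.
var sampleKernel = []string{
	"default via 10.40.24.254 dev team0 proto static metric 300",
	"10.11.41.0/24 via 10.40.24.254 dev team0 proto 17",
	"10.40.20.0/24 via 10.40.24.254 dev team0 proto 17",
	"10.40.22.0/24 via 10.40.24.254 dev team0 proto 17",
	"10.40.24.0/24 via 10.40.24.254 dev team0 proto 17",
	"10.40.24.0/24 dev team0 proto kernel scope link src 10.40.24.11 metric 300",
	"10.40.30.0/24 via 10.40.24.254 dev team0 proto 17",
	"10.40.31.0/24 via 10.40.24.254 dev team0 proto 17",
	"10.244.3.0/24 dev kube-bridge proto kernel scope link src 10.244.3.1",
}

// kernelBGPPrefixes returns the destinations of route lines tagged "proto 17",
// which is how the BGP-injected routes appear in the output above.
func kernelBGPPrefixes(lines []string) map[string]bool {
	out := make(map[string]bool)
	for _, line := range lines {
		fields := strings.Fields(line)
		for i := 0; i+1 < len(fields); i++ {
			if fields[i] == "proto" && fields[i+1] == "17" {
				dst := fields[0]
				if dst == "default" {
					dst = "0.0.0.0/0"
				}
				out[dst] = true
				break
			}
		}
	}
	return out
}

// missingRoutes lists best paths learned from a peer (next hop != localIP)
// that have no proto-17 counterpart in the kernel table.
func missingRoutes(rib []ribPath, kernel []string, localIP string) []string {
	installed := kernelBGPPrefixes(kernel)
	var missing []string
	for _, p := range rib {
		if !p.Best || p.NextHop == localIP {
			continue // skip non-best and locally originated paths
		}
		if !installed[p.Prefix] {
			missing = append(missing, p.Prefix)
		}
	}
	sort.Strings(missing)
	return missing
}

func main() {
	fmt.Println(missingRoutes(sampleRIB, sampleKernel, "10.40.24.11"))
}
```

Run against the sample data it prints `[0.0.0.0/0]`: the default route from the external peer is the only best path that never reached the kernel (the existing default is `proto static`, not BGP-injected), which matches the report.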

@rearden-steel Would this https://github.com/cloudnativelabs/kube-router/blob/master/docs/bgp.md#bgp-listen-address-list work?

That is, making kube-router listen on the IPs of both the internal and the external interface?

@murali-reddy Hi. No, it did not help. Routes are still not installed in the system routing table (I check this by grepping for the Inject keyword in the kube-router log file). As you can see above, both sessions were established successfully and routes were received even without the bgp-local-addresses annotation. The problem is somewhere in selecting which routes to inject. Thank you.

@murali-reddy any ideas on this?

@rearden-steel I think it might help if you outlined your use case a little more. Am I understanding correctly that you want non-Kubernetes routes coming from your external peer on your external interface to be synced to your kernel's local routing table?

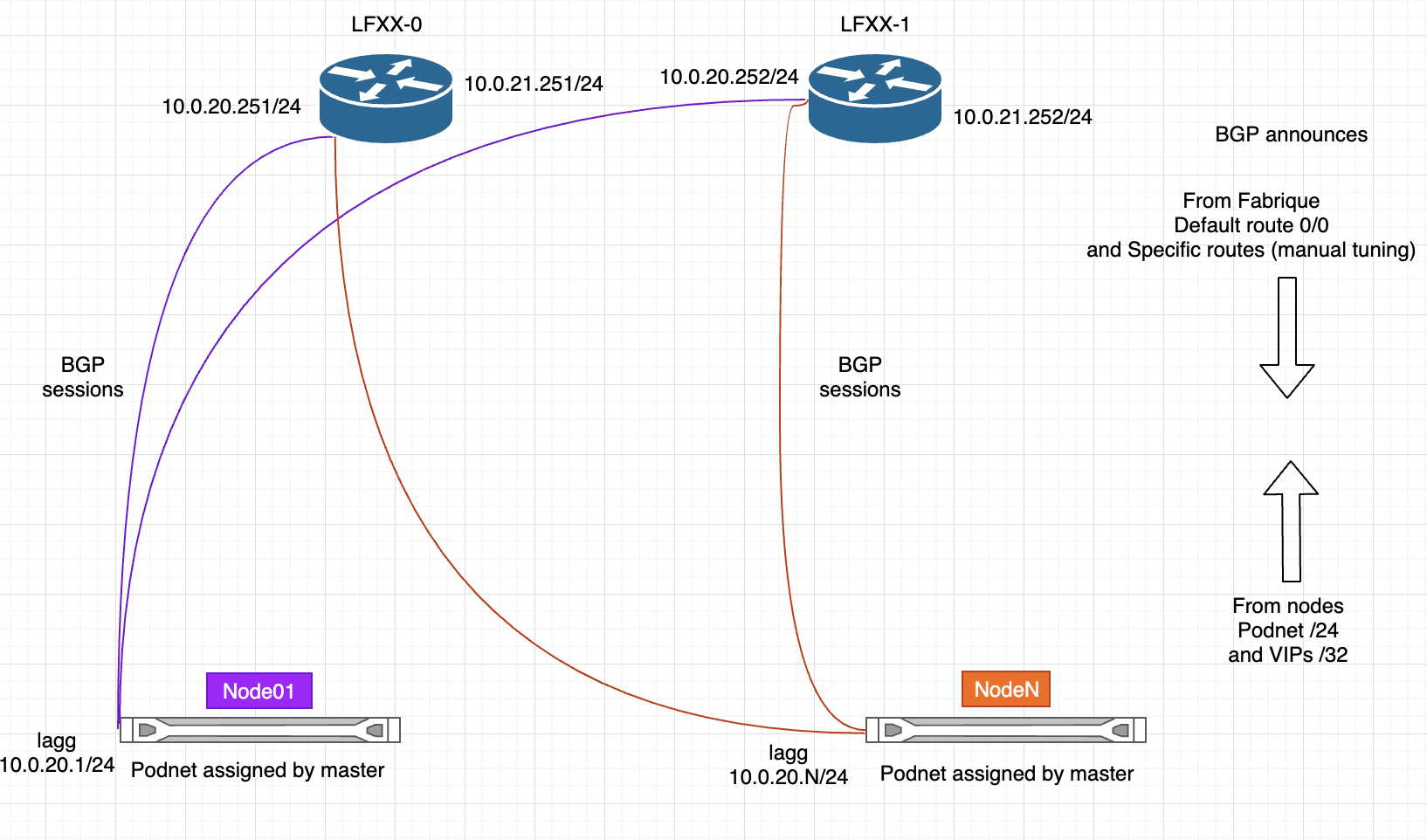

@aauren We have two sessions on each Kubernetes node to achieve router high availability. We have an IP fabric deployed on our network: each server is connected to two edge routers, and if routes from the second session are installed, everything will keep working if one router fails.

Sorry to re-request, but I still need more information to do something helpful with this. Can you describe the topology of your setup in detail (a diagram would probably help here as well), and then explain exactly what you expect from kube-router and what you see instead with the current functionality?

Here is a little network diagram:

All nodes are configured with two BGP sessions to the routers. Let's take a look at the first node:

root@node-n01:~ #gobgp neigh

Peer AS Up/Down State |#Received Accepted

10.0.20.250 65000 39d 05:06:00 Establ | 1 1

10.0.20.251 65000 39d 05:05:27 Establ | 1 1

Both sessions are established successfully.

Then, the RIB:

root@node-n01:~ #gobgp global rib

Network Next Hop AS_PATH Age Attrs

*> 0.0.0.0/0 10.0.0.250 65000 65500 65001 31d 02:26:10 [{Origin: i} {Extcomms: [1:1430]}]

* 0.0.0.0/0 10.0.0.251 65000 65500 65001 31d 02:26:10 [{Origin: i} {Extcomms: [1:1430]}]

...

As you can see, we have two default routes, one received from each peer. Let's check the system routing table now:

root@node-n01:~ #ip route

default via 10.0.20.250 dev team0 proto 17

default via 10.0.20.254 dev team0 proto static metric 300

Only one of the two BGP default routes from the RIB is installed, so there is no ECMP load balancing for outgoing traffic.

I tried failing the first BGP session, and the second route then gets installed into the system routing table, so the failover case is covered.
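As a rough model of the behavior described above: gobgp keeps one `*>` best path per prefix, only that path ends up in the kernel, and the `*` path takes over once the first session drops. The Go sketch below is a simplification, not gobgp's real best-path algorithm: it compares only AS_PATH length and breaks ties on the lower next-hop string. `path`, `bestPath`, `multipathNextHops` and `withoutPeer` are hypothetical names, and the two next hops are the neighbors from the `gobgp neigh` output.

```go
package main

import (
	"fmt"
	"sort"
)

// path is a hypothetical, simplified BGP path.
type path struct {
	Prefix  string
	NextHop string
	ASPath  []int
}

// bestPath keeps one winner per prefix: shortest AS_PATH, ties broken by the
// lower next-hop string (real BGP selection has many more steps).
func bestPath(paths []path) map[string]path {
	best := make(map[string]path)
	for _, p := range paths {
		cur, ok := best[p.Prefix]
		if !ok || len(p.ASPath) < len(cur.ASPath) ||
			(len(p.ASPath) == len(cur.ASPath) && p.NextHop < cur.NextHop) {
			best[p.Prefix] = p
		}
	}
	return best
}

// multipathNextHops collects, per prefix, every next hop whose AS_PATH is as
// short as the best one: the set an ECMP route would carry.
func multipathNextHops(paths []path) map[string][]string {
	best := bestPath(paths)
	hops := make(map[string][]string)
	for _, p := range paths {
		if len(p.ASPath) == len(best[p.Prefix].ASPath) {
			hops[p.Prefix] = append(hops[p.Prefix], p.NextHop)
		}
	}
	for prefix := range hops {
		sort.Strings(hops[prefix])
	}
	return hops
}

// withoutPeer drops every path learned via nextHop, simulating a session going down.
func withoutPeer(paths []path, nextHop string) []path {
	var kept []path
	for _, p := range paths {
		if p.NextHop != nextHop {
			kept = append(kept, p)
		}
	}
	return kept
}

var samplePaths = []path{
	{"0.0.0.0/0", "10.0.20.250", []int{65000, 65500, 65001}},
	{"0.0.0.0/0", "10.0.20.251", []int{65000, 65500, 65001}},
}

func main() {
	fmt.Println(bestPath(samplePaths)["0.0.0.0/0"].NextHop)
	fmt.Println(multipathNextHops(samplePaths)["0.0.0.0/0"])
	fmt.Println(bestPath(withoutPeer(samplePaths, "10.0.20.250"))["0.0.0.0/0"].NextHop)
}
```

The three printed lines are `10.0.20.250`, `[10.0.20.250 10.0.20.251]` and `10.0.20.251`: injecting only the best path gives failover but not ECMP, while installing the whole multipath set would give both.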

@rearden-steel Thanks for the detailed explanation. Though the failover case is covered, it would be good to have load distribution.

@aauren I am wondering whether the RouteReplace call here could be the problem, though I need to test to confirm. https://github.com/cloudnativelabs/kube-router/blob/v1.0.0/pkg/controllers/routing/network_routes_controller.go#L495

Closing as stale